In November of 2012, I embarked on a Quixotic attempt at building a BI prototype in 7 days. I dubbed the experiment ‘OLAP Sprint’.

The plan was to leverage everything great about the PASS Summit (access to experts, time away from the office to focus, a stimulating environment) and build a functional multi-dimensional cube from scratch. I had taken some courses and done some reading, but this was to be my first time putting everything together. By the end of my 7-day sprint in Seattle, I had my scotch-tape-and-paper-clips multidimensional cube built. It was a totally engrossing experience: frustrating, exhilarating, stimulating, and ultimately, totally rewarding.

Aside from the invaluable learning experience, the OLAP Sprint also produced a proof-of-concept that I could share with the business leaders at my office, to show them the light of BI, and set things in motion to upgrade our relational reporting solution to something more scalable and dynamic using SQL Server Analysis Services.

I am happy to report that, almost a year later, we have transformed that prototype into a production-level BI solution, and are primed to release our first set of reports.

What follows are some of my observations, on the stretch of development that has lead me to this point – from Prototype to Production.

Learning Methodology

I underestimated the challenge of going from thinking about the world using two-dimensional OLTP objects to thinking about the world in a multi-dimensional OLAP cube with facts and dimensions. The deep-seated rules of normalization, referential integrity, and constraints, made it awkward and uncomfortable when we first started building out the cube’s dimensions.

In order to get myself in the right frame of mind to deal with that transition, I tried to replicate some of the environmental aspects of the PASS Summit – most importantly the focus and concentration – by blocking most of my days for BI work only, and taking care of operational duties en-masse on other days. I also attended the inaugural PASS Business Analytics conference, which helped to solidify the new themes and concepts that I was learning.

I also alternated between conceptual learning and technical learning. On some days, I’d carve out time to read from “The Data Warehouse Lifecycle Toolkit” (Ralph Kimball), which built my understanding of BI themes and concepts. On other days, I’d focus on executing a certain technical task – for example creating a simple report in SSRS that reported on the cube, from “Professional MS SQL Server 2012 Reporting Services” (Turley, Bruckner, et al). The combination of the two approaches kept my learning balanced.

Expert Help

Before we got started, we had a ‘buy vs. build’ debate internally, to assess the time and risk of doing the build ourselves versus using a consultant to help us. In the end, we engaged a consulting company that specializes in the SQL Server product stack to help us. This was a huge time-saver for us, and also reduced the risk involved, because they were responsible for the major architecture. Our consultant was not only technically very astute, but also a good teacher. The experience was stressful at times, but enjoyable, and we were totally engaged in the development along the way, which means we’re comfortable owning it now.

Development Methodology

When it came time to actually build the SSIS packages, or attribute dimensions, or reports, or whatever, we diagrammed things out on a whiteboard beforehand. It allowed us to make mistakes, and when we sat down to do the work in SSDT, we had a goal to work toward. We also prioritized our deliverables, and in many cases, sought to simplify whatever it was we were working on. We gave ourselves realistic, achievable targets and made sure we hit our marks. If we got stuck, we simplified the deliverable. A good example was user hierarchies: when we first began, we envisioned them as robust and complex, but they were difficult to reproduce. In the end, in order to stay on track, we reduced their complexity quite a bit, in order to make sure that we could deliver something working. This is Agile development methodology 101, and it worked great for us.

I also tried to respect my limits. There were days when I’d want to put in more time, but my brain stopped being able to digest or assimilate the information. Knowing when to push a little harder to solve a stubborn bug and when to throw in the towel and come back fresh in the morning was vital. More often, a fresh mind solved problems faster than a stubborn one.

The ETL Takes a While

I had heard estimates that building the ETL/Data warehouse tiers of a BI project could consume as much as 80% of total time spent on the project. That number always sounded exaggerated to me, but when I counted up the days spent doing development on that tier, it was totally accurate.

In my prototype, I used views based on the OLTP source system to act as my OLAP fact and dimension tables, so I effectively skipped the ETL tier. That obviously wasn’t a feasible model for our Production solution. We spent a lot of time designing and testing packages in SSIS, to make sure that the data coming out of the source system made it to the data warehouse properly.

Like many others, we assumed that our data was clean. Boy were we wrong, especially with our geographic/address data. As we tried to build out our attribute relationships, we would be notified of duplicate keys in our dimension attributes, and it led to quite a period of data investigation and cleaning. The Data Profiling task in SSIS helped with that.

Don’t even get me started on MDX

Having progressed nicely from building the data warehouse and ETL tiers, I ran into MDX like a steel wall. I was at first confident that my knowledge of TSQL would help me make the transition, but was sorely disappointed. In fact, my background in TSQL actually interfered with my learning MDX, because some of the familiar constructs (SELECT, FROM, WHERE) have substantially different applications in MDX.

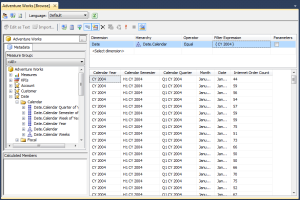

The biggest difference, aside from syntax nuances, is of course that TSQL deals with the 2-dimensional world of columns and rows, but MDX isn’t limited to two dimensions. Visualizing the multi-dimensional result set that I wanted to produce was difficult, so writing the code to get there was challenging. The life-saver is the query wizard that Microsoft sprinkles throughout SQL Server to help you get what you want (in SSAS, SSMT, SSDT, etc). You can build the query visually, and then hit the ’Design Mode’ button to see the results in MDX.

Oh My God is it Ever Fast

The performance results have been unbelievable. We were giggling like school girls when we ran our first set of reports, and hugely relieved to see just how well the BI solution performed. Queries that had taken minutes to run on OLTP was taking fractions of a second on OLAP. Having been the one to pioneer and advocate for a BI solution, this was immensely satisfying for me. There was a huge investment of time, money, and effort, and to see it all pay off when we ran those reports made everyone breathe a sigh of relief, and smile at the possibilities.

Conclusion

One of the more illuminating quotes I heard from a presenter at the PASS BA conference in Chicago was the following: ”BI projects aren’t projects. They’re Programs”. With this in mind, I’m excited about the future potential of this program, fully aware that just because they build is over, it doesn’t mean the work is done. Hopefully, the best is yet to come.