Thoughts on storing repetitive data

-

May 14, 2020 at 2:18 pm

Hi all,

I have data in a file that I get on a daily basis and use SSIS to import it in to my database. The file is MTD, so I will keep getting most of the same data throughout the month but some records will drop off and new ones will be added but again most of the data will remain the same. For example, customer data. I get new customers and lose customers through out the month but the bulk of them remain the same.

My struggle is how to store this data. Ideally I don't want the same customer records in the database but the file is used by others and I can't change it. Additionally, I have users that need to report on the number of customers within a date range, so I need to track them that way.

Any suggestions on the best approach? I thought about adding a date/time to the import for the customer records and the reporting folks can use that to track the customers in any given date range but that doesn't solve my issue of having so many redundant customer records. Or should I split use multiple tables to accommodate the reporting needs as well as reduce my number of redundant records? Or should I just import it as-is and let the users deal with it? The latter is easy but I do want to do my best to accommodate everyone if possible. It may be worth mentioning that my primary concern is reporting.

Thanks!

-

May 14, 2020 at 2:47 pm

My opinion (others may disagree), I try not to denormalize reporting data unless some of the data is going to be used by multiple reports. I try to reduce the number of joins required to get all of the data for my report so that the report can load as fast as possible. This results in repetitive data, but saves time with the joins.

That being said, if the data is used in multiple reports, I try to separate it into smaller chunks so other reports that DO join, can look at a lot less data. Indexes can help with this too depending on how much data it is.

It also depends on what the reports are going to show. If you add a date imported field, then for the report if all they care about is the count of customers in a time range, they can easily do a distinct count on customer name where the timestamp is between a certain range. it really depends on what the reports are going to show though. It may be useful to keep track of when a customer first became a customer and when they were no longer a customer or keep track of when a customer became a customer again after not being a customer.

My overall opinion - I wouldn't worry about repetitive data in a data warehouse/data mart; I would be more concerned about if I can get all of the data that is required for the reporting. Import date is something I would definitely capture. The method I would probably do is to have a "raw data" table where you pull in the data from the flat file without changing anything except adding on an "import date" column. Then the reporting people can see the raw data, but I'd build up views or new tables with the data required for the report. The biggest reason to do that is in the event that your flat file format changes and the data import fails, the reports still run, they just will be missing some recent data while you work to resolve the problem. This is true if they are using the raw data as well, but if you need to change something at the raw data side (such as the flat file gets an extra column added to it that you need to capture, or you need to rename a column), the reports do not need to be modified. Do all of the report processing that you can in SSIS, so the only thing the report needs to do is pull data from one table\view and filter out data they don't need (preferably rows, not columns).

At my workplace, we pull data from source tables or flat files into staging tables. The staging tables are then used to create the reporting tables. If someone comments that a report is wrong, we can show them the source data and prove that the report is showing what it is supposed to be showing.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

May 14, 2020 at 3:38 pm

I mostly agree with what Brian wrote. I am wary of denormalizing, and I do it when multiple places need the data and/or performance is an issue. Trading space for time is something I do often.

It's not quite clear about the imports. I usually always import to staging tables, so that I can then decide what to move over. It's often faster to import and then delete some data than make decisions on the import. If I need the data, but not dates, I can not copy over the same data to real tables from stagings areas. If I need dates, I might separate those out, but not if it causes a lot of hassle in reporting.

At the same time, duplicates in a table with different dates sometimes cause confusion. There's no right answer here, and likely your history and how people deal with things now makes a difference.

If you have specific requirements to point out, happy to give more thoughts, but Brian has done a great job of summarizing what I think

-

May 14, 2020 at 3:59 pm

I always try to be brief in postings but know that short changes the description of the issue. Sorry about that.

In theory, I could import the last file of the month and that would contain all the data I need with only 1 record for each customer. Customers that leave and accounts that close are still included in the report but show a status of "closed". The problem is that I get the files daily and need to report on it daily and they want all data in 1 reporting tool with the ability to filter by month/year.

My initial approach was to import the files in to tables ending in the month and year, like Customer_Jan_2020, Customer_Feb_2020, etc. Afterwards, I moved 1 copy of each customer record to a "final" table, or Customer_Final. This was fine for basic reporting but that's when I encountered the issue with filtering customer records by Month/Year. I have no date field in the "Final" table and that opened up similar issues as well. All my files are set up the same way as the Customer file and will need to report on the data and filter by Month/Year.

Mark

-

May 14, 2020 at 4:40 pm

I would capture as much data as I can that makes sense. A timestamp of the import is something I try to capture in the staging and historical tables because you never know when someone will change a requirement and instead of filtering by month and year, they want to add in filtering by date.

If you have captured the data, you can report off of it in the future. If you didn't capture it, the data is lost.

That being said, some pieces of data are known useless to capture. It is very unlikely that the filename of the import will be useful in the future for reporting in your case, but there may be cases where that is important. Or who ran the import (manually or scheduled), etc.

You can only report on data you have captured. In your example, you are saying the "Customer_Final" table has no timestamp on it. Therefore, the only way you could do the filter on month/year would be to look at the Customer_month_year tables which would be a pain in the butt and is very likely to have performance issues. Generally, the fewer tables the report writers need to pull from, the faster the report will load. Still need good indexes on things too.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

May 14, 2020 at 5:32 pm

Thanks. All good advice and I'm seeing a few things I need to do.

I already have staging table (the month_year ones) but I may collapse them to 1 table with the time stamp of the import of the files. That would allow for date searches and keeps all data in original format. Then I'll get better requirements for additional reporting needs an build out tables for those. I see now that the "one-size-fits-all" approach simply won't work here. I was looking at this from a relational DB perspective and not a reporting one. More so because I have a LOT of tables and that may confuse the people trying to report on the data.

Indexes aren't my strength so I may have to come back for assistance on that. Some of these tables will have millions of row and I can already see issues with queries but I'll get specifics about those and make a post.

As always, thanks for the assistance.

Mark

-

May 14, 2020 at 5:40 pm

Make your Final table a view instead, so that it selects the max(createdate) for each customer. That way, even when they weren't all updated at the same time, you see the last version of all of them.

-------------------------------------------------------------------------------------------------------------------------------------

Please follow Best Practices For Posting On Forums to receive quicker and higher quality responses -

May 14, 2020 at 6:02 pm

You don't actually need to store all rows that don't change to be able to do PIT (Point-in-Time) reporting. IMHO, it's actually foolish to do so because not only will backups take a beating but so will memory and performance, which is what you're trying to save on.

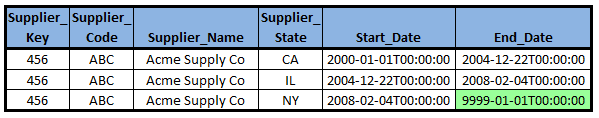

Instead, have a look at Type 2 Slowing Changing Dimensions (SCDs) at the following link. Specifically look for the "'effective date' columns" rendition. Just don't add that bloody "Current_Flag" column. It's a total waste of table, memory, and index space. Also, DO NOT USE NULL for an EndDate. Trust an ol' dude on this one. Use '9999-01-01" instead of NULLs for open end dates and don't use '9999-12-31' because it leaves no room for "smart" calculation using >= and < for "Closed/Open" (inclusive/exclusive) temporal periods. Your code, your indexes, and your users will love you and so will performance.

Here's the link for Type 2 SCDs...

https://en.wikipedia.org/wiki/Slowly_changing_dimension#Type_2:_add_new_row

Go for something like the following whether you use a history table or not.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

May 14, 2020 at 6:20 pm

p.s. You might want to also look into "Temporal Tables", which are a combination of Type 2 + Type 4 SCDs that do most of the work for you. All you need to do is determine if a row has been updated or is a new row to be inserted and the system pretty much takes care of the rest including auto-magically combining the history table with the main (current) table plus the optimization of searching only the current table when you're looking only for current data.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

May 15, 2020 at 2:01 am

I strongly disagree with Jeff. If you want a good discussion of representing temporal values in SQL, download the paper by Rick Snodgrass at the University of Arizona, as a PDF. The ANSI/ISO model of temporal intervals is based on half-open intervals (we know the start, but only approach the ending point as a limit) . This is why 24:00:00. Hrs today is actually 00:00:00. tomorrow. This temporal model was not very well covered in classes a few decades ago.

Thus, I prefer (and so do people who've worked with temporal data in SQL on the standards committee) to use

CREATE TABLE Foo

(foo_start_date DATE NOT NULL

foo_end_date DATE,

CHECK(foo_start_date <= foo_end_date), -- important constraint!

...):

I can then create a view, computed column, or whatever with COALESCE (foo_end_date, CURRENT_TIMESTAMP) to get the current picture of my data. The guest has not yet checked out of his hotel room. The employee is still in the database. Etc.

If you try to assign multiple meanings to an actual date, as Jeff suggested, you have to make this case, expression in your code to find out if it's really a date or null. Gee, we already have a null, so why don't we just use that?

Please post DDL and follow ANSI/ISO standards when asking for help.

-

May 15, 2020 at 1:41 pm

Disagreement noted.

However, there's nothing in what I stated that uses 24:00:00, nothing that would violate the constraint on the EndDate, and thee method I suggested actually does use the proper Closed/Open method for delineating periods of performance. Using an extreme future EndDate instead of NULL also avoids the performance death of having to use "OR" in the WHERE clause or Joins whereas your use of NULL does not.

And if you going to cite an important work, you should also provide the URL for it.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

May 16, 2020 at 3:16 am

http://www2.cs.arizona.edu/~rts/publications.html

He has other good papers posted there, but they are not of immediate interest.

Your suggestion of using 9999-01-01 as a dummy date/place marker for eternity is a kludge on top of an error. This is an actual date and it appears in some contracts, but so does the phrase "in perpetuity" is a legal term. They are different. I can model the latter with the null, and this is not the same as an actual date.

I honestly don't believe that a NULL end_date is that much overhead. The modern database is pretty good at this sort of stuff. However, having to write complicated CASE expressions to determine whether or not the dummy date is an actual date or just a place marker gets pretty complicated. You have to use a CASE expression and not a COALESCE () function call. I am going to assume that most SQL engines will have optimized for coalescing, but they can optimize the same way for case expressions.

Again, the ISO standard model for temporal data has always been half-open intervals.

It is also worth noting that DB2 and several other SQL products automatically change 24:00:00 to 00:00:00

Please post DDL and follow ANSI/ISO standards when asking for help.

-

May 17, 2020 at 3:56 pm

Using 9999-01-01 is no more of kludge than using NULL but the former has advantages over using NULL when it comes to performance, especially if you understand that the use of things like COALESCE on a temporal column will produce a Non-SARGable query. If you follow the true definition of NULL, NULL also does not mean that there IS an stop date. The definition of NULL is UNKNOWN, which also means there may or may not be one but we just don't know. NULL, therefore, is a kludge where someone assigns the irrational value of INFINITY to it rather than using its true meaning of UNKNOWN. Talk about kludges.

Since there is no actual functionality in SQL Server (or any other RDBMS that I know of) that has a built in "value" for INFINITY, even Mr. Snodgrass used '9999-12-31' in chapter 2 of his PDF (located at the following URL which was on the reference page you cited, thank you) as his "Forever" date. He doesn't even mention the use of nulls for stop dates until chapter 3 and then uses them only with the temporal criteria operator of "OVERLAPS", which is not available in SQL Server and so rendering it a moot point for the discussion on this thread.

file:///C:/Users/jbmod/Downloads/Snodgrass%20Temporal%20Book.pdf

Mr. Snodgrass also acknowledges the limitations of RDBMS software as not having a valid constant for "Infinity" and that's why even he uses a non-null surrogate of 9999-12-31 to represent a value in the extreme future.

Now, I DO absolutely agree that using a non-null, non-infinity surrogate for "in perpetuity" does have a limit where it should not and that it cannot be meant to mean "in perpetuity". I have not yet come across a use case that is active in SQL Server that would require such a value as "in perpetuity". If I did, then I would likely use NULL, even though that would certainly be a kludge. But for most things, an extreme future date occurring sometime in the last year (9999) that SQL Server is capable of recognizing and storing will suffice.

Using such a surrogate values as '9999-01-01' also has performance and resource usage advantages over using NULL for the same thing AND it DOES actually follow the ISO standard of "Half Open" assignment of periods. The examples in chapter 2 that Mr. Snodgrass created use the "Half Open" period dates, as do I. That's also why I use '9999-01-01' so that there's headroom to add a day, week, or month to a stop date at the query level without violating the maximum date of 9999-12-31 that SQL Server can recognize. It supports the idea/standard of always using "Half Open" dates.

It actually surprises me a whole lot for you to even mention "Half Open" dates to define periods because of the total fits you've thrown on other threads about how BETWEEN should be used for such a thing and my adamant stance on those same threads (which you incorrectly chastised me for) that you should never use BETWEEN for such a things. Please make up your mind as to which stance you're going to take because if you actually do support the concept of "Half Open" period notation and reject BETWEEN as a viable method for doing so, you and I don't have an argument.

I'll also state that the 24:00:00 issue is well known (there is no such time, actually) but that's also a moot point because I've not suggested it's use and so I don't know why you even though it worth mentioning. But, since you brought it up, you are incorrect because you can't actually use 24:00:00 as a time in SQL Server as the following code proves.

SELECT CONVERT(DATETIME,'2020-01-01 24:00:00'); --Creates an out-of-range error

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 13 posts - 1 through 13 (of 13 total)

You must be logged in to reply to this topic. Login to reply