Split String into columns

-

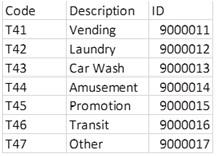

Can someone help me figure out how to turn this string:

' ;T41,Vending,9000011;T42,Laundry,9000012;T43,Car Wash,9000013;T44,Amusement,9000014;T45,Promotion,9000015;T46,Transit,9000016;T47,Other,9000017;T73,?,9000011'

into a set of results like this?

-

--===== Test string

DECLARE @String VARCHAR(8000) =

' ;T41,Vending,9000011;T42,Laundry,9000012;T43,Car Wash,9000013;T44,Amusement,9000014;T45,Promotion,9000015;T46,Transit,9000016;T47,Other,9000017;T73,?,9000011'

;

--===== Solution

WITH cteSplit1 AS

(--==== Split the groups out based on the ";" delimiter, not returning any null or blank groups.

-- Note that this could be read from an entire table rather than just one string.

SELECT ItemNumber = ROW_NUMBER()OVER(ORDER BY split1.ItemNumber)

,split1.Item

FROM dbo.DelimitedSplit8K(@String,';') split1

WHERE split1.Item > ''

)--==== Split the elements in each group based on the "," delimiter and repivot back into rows.

SELECT --split1.ItemNumber

Code = MAX(CASE WHEN split2.ItemNumber = 1 THEN split2.Item ELSE '' END)

,Description = MAX(CASE WHEN split2.ItemNumber = 2 THEN split2.Item ELSE '' END)

,ID = MAX(CASE WHEN split2.ItemNumber = 3 THEN split2.Item ELSE '' END)

FROM cteSplit1 split1

CROSS APPLY dbo.DelimitedSplit8K(split1.Item,',') split2

GROUP BY split1.ItemNumber

;Here's the DelimitedSplit8K function. To be sure, it blows the doors off of every other string splitter except for a well written CLR.

CREATE FUNCTION [dbo].[DelimitedSplit8K]

/**********************************************************************************************************************

Purpose:

Split a given string at a given delimiter and return a list of the split elements (items).

Notes:

1. Leading and trailing delimiters are treated as if an empty string element were present.

2. Consecutive delimiters are treated as if an empty string element were present between them.

3. Except when spaces are used as a delimiter, all spaces present in each element are preserved.

Returns:

iTVF containing the following:

ItemNumber = Element position of Item as a BIGINT (not converted to INT to eliminate a CAST)

Item = Element value as a VARCHAR(8000)

Note that this function uses a binary collation and is, therefore, case sensitive.

The original article for the concept of this splitter may be found at the following URL. You can also find

performance tests at this link although they are now a bit out of date. This function is much faster as of Rev 09,

which was built specifically for use in SQL Server 2012 and above andd is about twice as fast as the version

document in the article.

http://www.sqlservercentral.com/Forums/Topic1101315-203-4.aspx

-----------------------------------------------------------------------------------------------------------------------

CROSS APPLY Usage Examples and Tests:

--=====================================================================================================================

-- TEST 1:

-- This tests for various possible conditions in a string using a comma as the delimiter. The expected results are

-- laid out in the comments

--=====================================================================================================================

--===== Conditionally drop the test tables to make reruns easier for testing.

-- (this is NOT a part of the solution)

IF OBJECT_ID('tempdb..#JBMTest') IS NOT NULL DROP TABLE #JBMTest

;

--===== Create and populate a test table on the fly (this is NOT a part of the solution).

-- In the following comments, "b" is a blank and "E" is an element in the left to right order.

-- Double Quotes are used to encapsulate the output of "Item" so that you can see that all blanks

-- are preserved no matter where they may appear.

SELECT *

INTO #JBMTest

FROM ( --# of returns & type of Return Row(s)

SELECT 0, NULL UNION ALL --1 NULL

SELECT 1, SPACE(0) UNION ALL --1 b (Empty String)

SELECT 2, SPACE(1) UNION ALL --1 b (1 space)

SELECT 3, SPACE(5) UNION ALL --1 b (5 spaces)

SELECT 4, ',' UNION ALL --2 b b (both are empty strings)

SELECT 5, '55555' UNION ALL --1 E

SELECT 6, ',55555' UNION ALL --2 b E

SELECT 7, ',55555,' UNION ALL --3 b E b

SELECT 8, '55555,' UNION ALL --2 b B

SELECT 9, '55555,1' UNION ALL --2 E E

SELECT 10, '1,55555' UNION ALL --2 E E

SELECT 11, '55555,4444,333,22,1' UNION ALL --5 E E E E E

SELECT 12, '55555,4444,,333,22,1' UNION ALL --6 E E b E E E

SELECT 13, ',55555,4444,,333,22,1,' UNION ALL --8 b E E b E E E b

SELECT 14, ',55555,4444,,,333,22,1,' UNION ALL --9 b E E b b E E E b

SELECT 15, ' 4444,55555 ' UNION ALL --2 E (w/Leading Space) E (w/Trailing Space)

SELECT 16, 'This,is,a,test.' UNION ALL --4 E E E E

SELECT 17, ',,,,,,' --7 (All Empty Strings)

) d (SomeID, SomeValue)

;

--===== Split the CSV column for the whole table using CROSS APPLY (this is the solution)

SELECT test.SomeID, test.SomeValue, split.ItemNumber, Item = QUOTENAME(split.Item,'"')

FROM #JBMTest test

CROSS APPLY dbo.DelimitedSplit8K(test.SomeValue,',') split

;

--=====================================================================================================================

-- TEST 2:

-- This tests for various "alpha" splits and COLLATION using all ASCII characters from 0 to 255 as a delimiter against

-- a given string. Note that not all of the delimiters will be visible and some will show up as tiny squares because

-- they are "control" characters. More specifically, this test will show you what happens to various non-accented

-- letters for your given collation depending on the delimiter you chose.

--=====================================================================================================================

WITH

cteBuildAllCharacters (String,Delimiter) AS

(

SELECT TOP 256

'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789',

CHAR(ROW_NUMBER() OVER (ORDER BY (SELECT NULL))-1)

FROM master.sys.all_columns

)

SELECT ASCII_Value = ASCII(c.Delimiter), c.Delimiter, split.ItemNumber, Item = QUOTENAME(split.Item,'"')

FROM cteBuildAllCharacters c

CROSS APPLY dbo.DelimitedSplit8K(c.String,c.Delimiter) split

ORDER BY ASCII_Value, split.ItemNumber

;

-----------------------------------------------------------------------------------------------------------------------

Other Notes:

1. Optimized for VARCHAR(8000) or less. No testing or error reporting for truncation at 8000 characters is done.

2. Optimized for single character delimiter. Multi-character delimiters should be resolved externally from this

function.

3. Optimized for use with CROSS APPLY.

4. Does not "trim" elements just in case leading or trailing blanks are intended.

5. If you don't know how a Tally table can be used to replace loops, please see the following...

http://www.sqlservercentral.com/articles/T-SQL/62867/

6. Changing this function to use a MAX datatype will cause it to run twice as slow. It's just the nature of

MAX datatypes whether it fits in-row or not.

-----------------------------------------------------------------------------------------------------------------------

Credits:

This code is the product of many people's efforts including but not limited to the folks listed in the Revision

History below:

I also thank whoever wrote the first article I ever saw on "numbers tables" which is located at the following URL

and to Adam Machanic for leading me to it many years ago. The link below no long works but has been preserved herer

for posterity sake.

http://sqlserver2000.databases.aspfaq.com/why-should-i-consider-using-an-auxiliary-numbers-table.html

The original article can be seen at then following special site, as least as of 29 Sep 2019.

http://web.archive.org/web/20150411042510/http://sqlserver2000.databases.aspfaq.com/why-should-i-consider-using-an-auxiliary-numbers-table.html#

-----------------------------------------------------------------------------------------------------------------------

Revision History:

Rev 00 - 20 Jan 2010 - Concept for inline cteTally: Itzik-Ben Gan, Lynn Pettis and others.

Redaction/Implementation: Jeff Moden

- Base 10 redaction and reduction for CTE. (Total rewrite)

Rev 01 - 13 Mar 2010 - Jeff Moden

- Removed one additional concatenation and one subtraction from the SUBSTRING in the SELECT List for that tiny

bit of extra speed.

Rev 02 - 14 Apr 2010 - Jeff Moden

- No code changes. Added CROSS APPLY usage example to the header, some additional credits, and extra

documentation.

Rev 03 - 18 Apr 2010 - Jeff Moden

- No code changes. Added notes 7, 8, and 9 about certain "optimizations" that don't actually work for this

type of function.

Rev 04 - 29 Jun 2010 - Jeff Moden

- Added WITH SCHEMABINDING thanks to a note by Paul White. This prevents an unnecessary "Table Spool" when the

function is used in an UPDATE statement even though the function makes no external references.

Rev 05 - 02 Apr 2011 - Jeff Moden

- Rewritten for extreme performance improvement especially for larger strings approaching the 8K boundary and

for strings that have wider elements. The redaction of this code involved removing ALL concatenation of

delimiters, optimization of the maximum "N" value by using TOP instead of including it in the WHERE clause,

and the reduction of all previous calculations (thanks to the switch to a "zero based" cteTally) to just one

instance of one add and one instance of a subtract. The length calculation for the final element (not

followed by a delimiter) in the string to be split has been greatly simplified by using the ISNULL/NULLIF

combination to determine when the CHARINDEX returned a 0 which indicates there are no more delimiters to be

had or to start with. Depending on the width of the elements, this code is between 4 and 8 times faster on a

single CPU box than the original code especially near the 8K boundary.

- Modified comments to include more sanity checks on the usage example, etc.

- Removed "other" notes 8 and 9 as they were no longer applicable.

Rev 06 - 12 Apr 2011 - Jeff Moden

- Based on a suggestion by Ron "Bitbucket" McCullough, additional test rows were added to the sample code and

the code was changed to encapsulate the output in pipes so that spaces and empty strings could be perceived

in the output. The first "Notes" section was added. Finally, an extra test was added to the comments above.

Rev 07 - 06 May 2011 - Peter de Heer

- A further 15-20% performance enhancement has been discovered and incorporated into this code which also

eliminated the need for a "zero" position in the cteTally table.

Rev 08 - 24 Mar 2014 - Eirikur Eiriksson

- Further performance modification (twice as fast) For SQL Server 2012 and greater by using LEAD to find the

next delimiter for the current element, which eliminates the need for CHARINDEX, which eliminates the need

for a second scan of the string being split.

REF: https://www.sqlservercentral.com/articles/reaping-the-benefits-of-the-window-functions-in-t-sql-2

Rev 09 - 29 Sep 2019 - Jeff Moden

- Combine the improvements by Peter de Heer and Eirikur Eiriksson for use on SQL Server 2012 and above.

- Add Test 17 to the test code above.

- Modernize the generation of the embedded "Tally" generation available as of 2012. There's no significant

performance increase but it makes the code much shorter and easier to understand.

- Check/change all URLs in the notes abobe to ensure that they're still viable.

- Add a binary collation for a bit more of an edge on performance.

- Removed "Other Note" #7 above as UNPIVOT is no longern applicable (never was for performance).

**********************************************************************************************************************/

--=========== Define I/O parameters

(@pString VARCHAR(8000), @pDelimiter CHAR(1))

RETURNS TABLE WITH SCHEMABINDING AS

RETURN

--=========== "Inline" CTE Driven "Tally Table” produces values from 0 up to 10,000, enough to cover VARCHAR(8000).

WITH E1(N) AS (SELECT N FROM (VALUES (1),(1),(1),(1),(1),(1),(1),(1),(1),(1))E0(N))

,E4(N) AS (SELECT 1 FROM E1 a, E1 b, E1 c, E1 d)

,cteTally(N) AS (--==== This provides the "base" CTE and limits the number of rows right up front

-- for both a performance gain and prevention of accidental "overruns"

SELECT TOP (ISNULL(DATALENGTH(@pString),0)) ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) FROM E4

)

,cteStart(N1) AS (--==== This returns N+1 (starting position of each "element" just once for each delimiter)

SELECT 1 UNION ALL

SELECT t.N+1 FROM cteTally t WHERE SUBSTRING(@pString COLLATE Latin1_General_BIN,t.N,1)

= @pDelimiter COLLATE Latin1_General_BIN

)

--=========== Do the actual split.

-- The ISNULL/NULLIF combo handles the length for the final element when no delimiter is found.

SELECT ItemNumber = ROW_NUMBER() OVER (ORDER BY s.N1)

, Item = SUBSTRING(@pString,s.N1,ISNULL(NULLIF((LEAD(s.N1,1,1) OVER (ORDER BY s.N1)-1),0)-s.N1,8000))

FROM cteStart s

;--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

July 11, 2021 at 11:21 am

Similar to the accepted solution but without the common table expression. It appears Jeff began to code it one way and then switched

select max(case when sp2.ItemNumber=1 then sp2.Item else null end) [Code],

max(case when sp2.ItemNumber=2 then sp2.Item else null end) [Description],

max(case when sp2.ItemNumber=3 then sp2.Item else null end) [ID]

from dbo.DelimitedSplit8K(@string, ';') sp1

cross apply dbo.DelimitedSplit8K(sp1.Item, ',') sp2

where sp1.Item<>''

group by sp1.ItemNumber

order by [Code];- This reply was modified 4 years, 7 months ago by Steve Collins.

Aus dem Paradies, das Cantor uns geschaffen, soll uns niemand vertreiben können

-

July 11, 2021 at 5:14 pm

Here is a little different take on the solution:

Select [Code] = ltrim(substring(v.Item, 1, p01.pos - 2))

, [Description] = ltrim(substring(v.Item, p01.pos, p02.pos - p01.pos - 1))

, [ID] = ltrim(substring(v.Item, p02.pos, p03.pos - p02.pos - 1))

From dbo.DelimitedSplit8K(@String, ';') As s1

Cross Apply (Values (concat(s1.Item, replicate(',', 3)))) As v(Item)

Cross Apply (Values (charindex(',', v.Item, 1) + 1)) As p01(pos)

Cross Apply (Values (charindex(',', v.Item, p01.pos) + 1)) As p02(pos)

Cross Apply (Values (charindex(',', v.Item, p02.pos) + 1)) As p03(pos)

Where s1.Item > '';If you are on 2016 or higher - you don't need the custom splitter function:

Select [Code] = ltrim(substring(v.[value], 1, p01.pos - 2))

, [Description] = ltrim(substring(v.[value], p01.pos, p02.pos - p01.pos - 1))

, [ID] = ltrim(substring(v.[value], p02.pos, p03.pos - p02.pos - 1))

From string_split(@String, ';') As s1

Cross Apply (Values (concat(s1.[value], replicate(',', 3)))) As v(value)

Cross Apply (Values (charindex(',', v.[value], 1) + 1)) As p01(pos)

Cross Apply (Values (charindex(',', v.[value], p01.pos) + 1)) As p02(pos)

Cross Apply (Values (charindex(',', v.[value], p02.pos) + 1)) As p03(pos)

Where s1.[value] > '';- This reply was modified 4 years, 7 months ago by Jeffrey Williams.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

July 11, 2021 at 7:28 pm

Steve Collins wrote:Similar to the accepted solution but without the common table expression. It appears Jeff began to code it one way and then switched

select max(case when sp2.ItemNumber=1 then sp2.Item else null end) [Code],

max(case when sp2.ItemNumber=2 then sp2.Item else null end) [Description],

max(case when sp2.ItemNumber=3 then sp2.Item else null end) [ID]

from dbo.DelimitedSplit8K(@string, ';') sp1

cross apply dbo.DelimitedSplit8K(sp1.Item, ',') sp2

where sp1.Item<>''

group by sp1.ItemNumber

order by [Code];Actually, no... the way I wrote it was quite deliberate. We'll need to do a larger test to be sure but start off checking on the execution plans.

As a bit of a sidebar and observation, it only returned 26 item rows when I tested it with an average of 100,000 rows with an average of 10 items each for a total of 1,000,000 item rows that should have been returned. That's a part of the reason why I typically test against a million rows even if it only has to work on a single string... because someone in a pinch will pick up a piece of code and use it for things it wasn't specifically designed to do when it comes to the number of rows.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

July 11, 2021 at 9:04 pm

Jeffrey Williams wrote:Here is a little different take on the solution:

Select [Code] = ltrim(substring(v.Item, 1, p01.pos - 2))

, [Description] = ltrim(substring(v.Item, p01.pos, p02.pos - p01.pos - 1))

, [ID] = ltrim(substring(v.Item, p02.pos, p03.pos - p02.pos - 1))

From dbo.DelimitedSplit8K(@String, ';') As s1

Cross Apply (Values (concat(s1.Item, replicate(',', 3)))) As v(Item)

Cross Apply (Values (charindex(',', v.Item, 1) + 1)) As p01(pos)

Cross Apply (Values (charindex(',', v.Item, p01.pos) + 1)) As p02(pos)

Cross Apply (Values (charindex(',', v.Item, p02.pos) + 1)) As p03(pos)

Where s1.Item > '';For speed, that's absolutely the ticket. Nicely done and nicely formatted code!

Jeffrey Williams wrote:If you are on 2016 or higher - you don't need the custom splitter function:

Select [Code] = ltrim(substring(v.[value], 1, p01.pos - 2))

, [Description] = ltrim(substring(v.[value], p01.pos, p02.pos - p01.pos - 1))

, [ID] = ltrim(substring(v.[value], p02.pos, p03.pos - p02.pos - 1))

From string_split(@String, ';') As s1

Cross Apply (Values (concat(s1.[value], replicate(',', 3)))) As v(value)

Cross Apply (Values (charindex(',', v.[value], 1) + 1)) As p01(pos)

Cross Apply (Values (charindex(',', v.[value], p01.pos) + 1)) As p02(pos)

Cross Apply (Values (charindex(',', v.[value], p02.pos) + 1)) As p03(pos)

Where s1.[value] > '';It's interesting how you "safetied" the splits with the concatenation of 3 commas. I would have expected the concatenation to have slowed things down a whole lot more than they did in my testing. The CCA (Cascading Cross Apply) and using the 3rd parameter of CHARINDEX to short-circuit the comma-finds also worked really well in my testing. Both methods certainly be the double calls on the DelimitedSplit8K function and was absolutely worth the little bit of extra coding.

And again and and not my my job to judge but well worth mentioning... nicely formatted code. I love "vertically aligned" code. It makes things incredibly easy to read and do things like find aliases, etc.

Just so you know, your first method above came in at 2 second and your second method came in at 5 on my 2017 laptop. The double call to delimited split 8K took 18 seconds. Here's the code I used to create a more substantial set of strings to test against. I used STRING_AGG() to create the test table so you'll need 2017 or above to run it without modification.

DROP TABLE IF EXISTS #MyHead;

WITH cteBuildRows AS

(

SELECT Grp = ABS(CHECKSUM(NEWID())%100000) --About 10 item sets per group @ 1 Million rows.

,SomeData = CONCAT(

CONCAT('T',CONVERT(BIGINT,CONVERT(BINARY(2),SUBSTRING(CONVERT(CHAR(36),ca.RdmNid),20,4),2))),','

,RIGHT(ca.RdmNid,17),','

,CONVERT(BIGINT,CONVERT(BINARY(6),RIGHT(ca.RdmNid,12),2))

)

FROM dbo.fnTally(1,1000000)t

CROSS APPLY (SELECT NEWID())ca(RdmNid)

)

SELECT SomeString = STRING_AGG(SomeData,';')

INTO #MyHead

FROM cteBuildRows

GROUP BY Grp

;--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

July 12, 2021 at 4:53 pm

There is a concern with using CONCAT to safety the string. If that string is varchar(8000) - and the data length is 8000 - this can and will fail with an invalid length error. The problem is that CONCAT will succeed but not actually add the additional delimiters at the end of the string, which is required for the SUBSTRING.

However - it won't fail if you use string_split and the input string is a varchar(max).

I am a bit surprised that the custom function performed better than STRING_SPLIT. I would have thought those performed about the same.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs

Viewing 7 posts - 1 through 7 (of 7 total)

You must be logged in to reply to this topic. Login to reply