Size of DB on physical disk doesn't match Disk Usage by Table report

-

April 23, 2025 at 3:55 am

Hi everyone

I am looking at the size of my db on disk (ie c-drive) and comparing it against SS report Disk Usage by Table. The size of the mdf file on my storage drive is close to 37 GB. The sum of all tables and indexes (columns Data (KB) + Indexes (KB)) on the Disk Usage by Table report is close to 11 GB. I am sure there are some overhead items that are not included in the report but does that overhead amount to close to 26 GB?? Seems really high. Does the size of the DB need to be reduced somehow so it reflects reality? I remember when I used MS Access the db would keep growing in size even if data was removed. I had to reduce the size of the Access DB so it reflected reality. Is SS the same way? It keeps growing out of touch with reality and then it has to be reduced to bring the numbers back to reality?

Thank you

-

April 23, 2025 at 6:49 am

SQL will not release space automatically to match what the internal size is.

If you want to release the space back to the OS you need to manually and consciencely issues a DBCC SHRINKFILE or DBCC SHRINKDATABASE command.

BUT be warned, shrinking causes fragmentation and other internal data movement as it tries to move data around the data files, so you will have to rebuild indexes you feel are over fragmented, which will cause the files to grow again after you have shrunk them, so you are then in a catch 22 situation. Shrink -> Rebuild -> Grow -> Shrink -> Rebuild -> Grow.

You really should be doing some data modelling about how much size increase on a daily basis you are increasing by, once you know daily you add 1GB of data for example, you know in 3 months you need 90GB space, 1 year 365GB space, if you're not going to fill that 26GB in the next 6 - 12 months, then yeah possible cause to shrink it, but if you will use it, just leave it.

Adding space, shrinking space is a very expensive operation.

Remember also that the database grew that large for a reason, if you can't find the reason, then maybe shrinking isnt an option as if you cant fix the cause of the database growing, then it will just grow again the next time that routine runs.

Maybe you did a data purge and it cleaned up a lot of space, OK that could be cause to then shrink but it again goes back to knowing your growth and seeing if you will need that space in the near future.

DON'T just shrink for the sake of shrinking, you need to look at EVERYTHING going on.

Sure if you google for "gotchas of using DBCC SHRINKFILE" or "gotchas of using DBCC SHRINKDATABASE" you will get a lot of results.

-

April 23, 2025 at 6:17 pm

Thanks for the reply. Very helpful.

Is there a way to look at the system to see why the increase is happening? A more "scientific" answer to figure out the root cause? Maybe a query?

I have created this db as a test db. I have a SSIS package that downloads data and runs a bunch of SP. To date, all work on the db has been testing in nature. I will add data, delete data, create index, remove index etc just to test out different things and their impact on SP performance. This sort of testing is largely done. All that is left to do is remove the test data and replace it with production data.

I was doing some analysis on projected storage needs. If I use the Disk Usage by Table report as a basis for analysis then I have enough storage space to store the new production data. If I use size on disk metric then I don't have enough space. That is why find out the "true" size of the db is crucial for me. I suspect that the Disk Usage by Table report is more accurate assessment vs the size on disk metric based on what you have said about SS not releasing data.

Do you have a suggested query I can run to get SS to release the data that it doesn't need? You suggested DBCC SHRINKFILE or DBCC SHRINKDATABASE command but I do not know what parameters to use.

Thank you in advance for your help.

- This reply was modified 9 months, 2 weeks ago by water490. Reason: fixed a typo

-

April 23, 2025 at 6:32 pm

I found queries to help

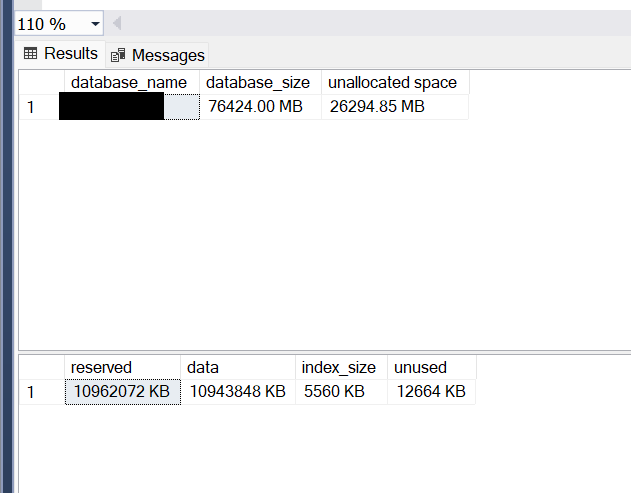

How do I interpret the results? I ran sp_spaceused to get this:

The MDF is close to 37GB and LDF is close to 40GB which gives the db size of about 77 GB (pretty close to above screenshot figure).

-

April 24, 2025 at 6:58 am

Well everything needs space, all the data you have been loading, deleting, changing, indexes added / deleted it will have all taken space. When you drop that index which took 10GB of log space to build and 15GB of data space to store, SQL won't release that space back.

So it sounds like this is all natural growth based on development.

If your now ready to production'ise it, create a blank DB with the final structure and then use that new DB as your prod DB and profile disk / table usage every day for a month, then you have data then to extrapolate a years worth of potential growth.

-

April 24, 2025 at 9:18 pm

The "Used but Unallocated Space" is usually because of a "wonderful" feature known as "Fast Inserts". It is meant to provide a performance gain when doing inserts containing a huge number of rows.

What it does is it doesn't even look to see if pages are available when inserting new data. It just allocates new extents (8 pages) as a bare minimum ... even for... just one row. And the front end of a whole lot of applications makes the mistake of using the "insert bulk" feature (not to be confused with "Bulk Insert") and it creates an 8 page extent (64KB) for any new row, even it if only has 1 byte of user information.

If you check for the unallocated space by table (either by script or through the built in "Disk Usage by Top Tables" report in the object explorer), you can see the amount of "unused" space per table. Read about trace flag number "692" at the following link to learn more about the problem that "Fast Inserts" causes, although they mention nothing of the "insert bulk" issue that I cited.

The only way that I know of to recover that space is to do some index maintenance on the tables that have the problem. If it's on a HEAP, you'll need to do an ALTER TABLE REBUILD.

I don't know of a way to turn that off for an individual database. It's a much larger problem the Microsoft suggests though. I turned it off server-wide by invoking trace flag 692 as a part of the server startup parameters.

To prevent the problem,

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 25, 2025 at 2:25 am

I used DBCC SHRINKFILE (1,0) to shrink the MDF file. The size of the MDF file is about 20GB. How long should this command take to run? Should it be quick? Should it take long time? This command has been running for over 30 minutes and still not done yet. I just don't know if this is normal or not.

As a reference point, I used DBCC SHRINKFILE (2,1024) to shrink the LDF file. The file is about 20 GB. It finished in about 3 seconds. So not sure why MDF is taking so long. Maybe it is normal given the structural differences between MDF and LDF.

Any guidance on this would be greatly appreciated.

- This reply was modified 9 months, 2 weeks ago by water490.

-

April 25, 2025 at 6:01 am

minutes, hours, days, weeks, depends how much data on the right of the file it has to move to the left and how fast your storage IO subsystem can move the data.

Viewing 8 posts - 1 through 8 (of 8 total)

You must be logged in to reply to this topic. Login to reply