Rebuild vs Reorganize

-

December 9, 2019 at 12:00 am

Comments posted to this topic are about the item Rebuild vs Reorganize

-

December 9, 2019 at 7:31 am

Simple yet tricky question. I messed up between REBUILD Clustered Index and DROP/CREATE Clustered Index.

However, it refreshed the learning. Nice question !

-

December 9, 2019 at 3:14 pm

Thanks for this instructive question. I've seen rebuild and reorg statements all the time yet got it wrong.

-- webrunner

-------------------

A SQL query walks into a bar and sees two tables. He walks up to them and asks, "Can I join you?"

Ref.: http://tkyte.blogspot.com/2009/02/sql-joke.html -

December 9, 2019 at 6:03 pm

I beg to differ on the "As compared to the rebuild index operation, reorganize index is less resource-consuming operation" assertion. That is not a clear-cut assertion either way. From a transaction log standpoint, reorganize index generates a lot more small transactions and can lead to substantially more resource-consumption than a rebuild. Rebuilds are generally faster, generate more sequential I/O in larger chunks rather than more random I/O in smaller chunks, and fix-up the upper level pages. The main advantage to reorganize is that it is online for Standard Edition and that it can be interrupted without losing work on versions prior to SQL 2017 (SQL 2017 introduced pausing and resuming rebuilds). I'm not sure how pausing/resuming interacts with an offline index rebuild.

- This reply was modified 6 years, 2 months ago by t.ovod-everett. Reason: Clarify sequential vs. random

-

December 11, 2019 at 5:24 am

Enjoyed this question, thanks Sergey

____________________________________________

Space, the final frontier? not any more...

All limits henceforth are self-imposed.

“libera tute vulgaris ex” -

December 24, 2019 at 4:51 am

t.ovod-everett wrote:I beg to differ on the "As compared to the rebuild index operation, reorganize index is less resource-consuming operation" assertion. That is not a clear-cut assertion either way. From a transaction log standpoint, reorganize index generates a lot more small transactions and can lead to substantially more resource-consumption than a rebuild. Rebuilds are generally faster, generate more sequential I/O in larger chunks rather than more random I/O in smaller chunks, and fix-up the upper level pages. The main advantage to reorganize is that it is online for Standard Edition and that it can be interrupted without losing work on versions prior to SQL 2017 (SQL 2017 introduced pausing and resuming rebuilds). I'm not sure how pausing/resuming interacts with an offline index rebuild.

Lordy...THANK YOU, THANK YOU, THANK YOU!!! There's someone else out there that understands that REORGANIZE is NOT the tame little kitty that it has been advertised to be. It also doesn't do what people think it does when it comes to following the Fill Factor. It cannot make extra pages and so while it may pack less dense pages up to the the Fill Factor, it needs to do so on a whole lot of pages or the critical area between the Fill Factor and being 100% full is never cleared. The bottom line there is that you've removed critical free space from the index at the worst time possible and that's going to perpetuate the need for page splits. Right about the time the index has "recovered" by page splitting to make some free space, fragmentation from the page splits dictates that REORGANIZE needs to be executed again, with the same awful results. It's like a bad drug habit... the more you use, the more you need it, the more you use it, ad infinitum.

p.s. I got the question right because I knew that's what they were looking for, even though that particular answer is far from being correct. How far? I had a 146 GB Clustered index that had 12 % logical fragmentation caused by deletes. It caused my 20GB log file to explode to 227 GB and took an hour and 21 minutes. Since I did this on a test box, I was able to do a restore and try it again with REBUILD. The log file grew from 20 GB to only 37 GB (I wasn't doing backups) and it only took 12 minutes in the Bulk Logged Recovery Model. Doing the restore one more time, I also did a REBUILD in the Full Recovery Model. Of course the log file grew by the same amount as the size of the Clustered Index and it took longer (about the same amount of time as the reorg) because the REBUILD wasn't minimally logged anymore but the log file still didn't suffer anywhere near the growth caused by REORGANIZE.

I've also proven that it's frequently better to just stop doing index maintenance if you can't do REBUILDs and comes damned close to not being not worth it if you do ONLINE rebuilds.

To wit, I went more than 3 years with no regular index maintenance. The only things I hit were tables that suffered DELETEs and then it was only to recover disk space. My overall CPU usage dropped from 22% across 16 core/32 threads to 8% in 3 months and stayed there the rest of the time. The only thing I did other than fixing some of the indexes with DELETEs was to rebuild statistics on a regular basis.

Now, I'm not recommending doing no index maintenance. It was a grand experiment that I could afford to make and I've ended the experiment in the last couple of month. I've since started doing my own brand of index maintenance (you won't find the word REORGANIZE or ONLINE anywhere in the code). I've been able to save quite a bit of disk space and the average CPU has dropped a bit more (about 1%). The disk savings (and related memory usage) are what's really worth it. If you want speed, rebuilding the indexes only helps a little (unless you've totally inverted your indexes using a DBCC SHRINK or the deletes have taken all pages down below 30%). True speed comes from just one place and that's fixing queries. Yes... index maintenance helps some but nothing like a code fix.

This is also one of the many reasons why I don't do anything with the log file that requires the Full Recovery Model 100% of the time. I don't know the exact mechanism for it but the infrastructure group uses what they are calling "SAN replication" instead of relying on the database log file. It gives me a great amount of flexibility like rebuilding a 146 GB Clustered Index in 12 minutes with a nearly trivial amount of log file space used.

And, yeah... I sometimes have to wait for a quiet time on the larger indexes. NOT using ONLINE saves a remarkable amount of time and does a better job at defragging which means the time between REBUILDs is a little longer.

And if you're stuck with a system that uses GUID PKs, NEVER REORGANIZE. Start of with an 81% Fill Factor and adjust up to 91% or down to 71% and do your REBUILDs as soon as you go over 1% fragmentation. You'll be amazed at how long you can go between REBUILDs. I'm talking weeks and months without ANY page splits, good or bad (you do have to watch for "ExpAnsive" updates and LOBs. LOBs can also trap "short rows" between "long rows" and be even less than 1% full and, not, that's NOT a misprint).

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

December 24, 2019 at 8:56 am

You gave great insights on the Index Maintenance, Rebuild and Reorganize. Thanks for your time spent on the experiments and as a result of which we have such a wonderful explanation.

I do agree with you. Code Fix should be considered the first solution instead of REBUILD/REORGANIZE. Somehow, there is lack of knowledge I guess that can be filled with some good article. Please see if you can spend some time to write some article/series of articles with steps to identify the code problem and possible solutions to fix those code problems. It would be a great contribution to DBA community !

Thanks !

-

December 31, 2019 at 7:33 am

Jeff Moden wrote:And if you're stuck with a system that uses GUID PKs, NEVER REORGANIZE. Start of with an 81% Fill Factor and adjust up to 91% or down to 71% and do your REBUILDs as soon as you go over 1% fragmentation. You'll be amazed at how long you can go between REBUILDs. I'm talking weeks and months without ANY page splits, good or bad (you do have to watch for "ExpAnsive" updates and LOBs. LOBs can also trap "short rows" between "long rows" and be even less than 1% full and, not, that's NOT a misprint).

This i can relate to. My previous contract insists on using GUIDs for CLUSTERED PRIMARY INDEXES and, no matter what a DBA tells them, they refuse to listen, but still struggle with page splitting and performance issues. they also have automated Index maintenance and creation jobs running that:

> Reorganise all indexes on a daily basis, and

> create every index that gets entered into the missing index DMV (just in case it might be used again) (on one of the busiest tables in their database, the table had 395 nonclustered indexes, the majority of these having been used only once since creation).

____________________________________________

Space, the final frontier? not any more...

All limits henceforth are self-imposed.

“libera tute vulgaris ex” -

January 6, 2020 at 6:25 pm

Brahmanand Shukla wrote:I do agree with you. Code Fix should be considered the first solution instead of REBUILD/REORGANIZE. Somehow, there is lack of knowledge I guess that can be filled with some good article. Please see if you can spend some time to write some article/series of articles with steps to identify the code problem and possible solutions to fix those code problems. It would be a great contribution to DBA community !

To be fair, Jeff Moden has been writing articles for and providing insights to the SQL community for decades - he's definitely in my pantheon! If identifying and fixing poorly performing code while guaranteeing perfect behavioral compatibility with the assumptions of the application were a simple matter, SQL Server would be doing it automatically already! Jeff Moden is famous for teaching people how to avoid RBAR (Row By Agonizing Row - his Modenism), but developers have to be ready to listen and do the hard work of transforming their code, doing the required performance analysis and testing, and identifying the preferred alternative given the trade-offs involved.

-

January 7, 2020 at 11:44 pm

Thank you for the extremely kind and generous words but we have a difference in languages and understanding here. I've "talked" with Brahmanand through other methods and he is a gentleman and honestly meant it as a compliment (he thinks I'm capable). He's new to this forum and just doesn't know the past of any of us here.

He also doesn't realize the breadth of the task and that none of us know it all. We could all get together and write a 4 foot thick book on fixing and optimizing code and... still miss a whole lot.

To that same point, someone recently stated that you needed a whole lot of experience to solve "random complicated problems". My retort was that's how you get experience. If you already have experience, it will be neither random nor complicated. 🙂

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

November 25, 2020 at 9:38 am

Thank you for pointing this out to anyone! Optimizing queries and keep your statistics up to date is far more efficient for the performance. The impact of fragmentation is even less when you are using SSD drives.

/Håkan Winther

MCITP:Database Developer 2008

MCTS: SQL Server 2008, Implementation and Maintenance

MCSE: Data Platform -

November 26, 2020 at 2:16 am

SQLWinther wrote:Thank you for pointing this out to anyone! Optimizing queries and keep your statistics up to date is far more efficient for the performance. The impact of fragmentation is even less when you are using SSD drives.

Like I said in one of my previous posts on this thread... I do agree that logical fragmentation is a lot more painless than it used to be but you still can't afford to ignore logical fragmentation entirely. Expect for one certain Insert/Update pattern of indexes (which I call "Sequential Silos" for short), fragmentation occurs because of the "bad" type of page splits. "Bad" page splits usually cause one page to spit to two with each being approximately 50% full. Pages cannot be operated on unless they are in memory. In this single example, you're wasting 50% of your memory and the comparatively expensive SSDs. With skewed page splits, some pages can help you waste 90% of your memory in some areas as empty but allocated space.

I'll emphasis a couple of different but very related points again, as well.

- It's better to do no index maintenance and only update stats than it is to do index maintenance incorrectly.

- If you're following the current industry-wide best practices of doing index maintenance (Reorganize between 5 and 30% logical fragmentation and Rebuild at >30%) or doing index maintenance on indexes that have a "0" (default) Fill Factor, then you're doing index maintenance incorrectly.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

November 26, 2020 at 3:21 pm

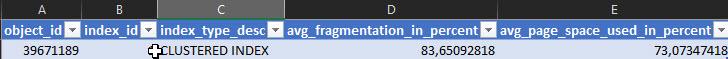

I totally agree with you that a lot of half full pages is bad for disk storage, memory consumption and reading a lot of extra pages due to the low density. But if you have indexes that look like this, with 83% fragmenation and avg_page_space_used_in_percent = 73%. you probably will not see a big improvement by rebuilding the index on SSD drives.

And what is the effect of page compression as it is still compressed in the cache? Do you suffer from the extra free space in the page?

/Håkan Winther

MCITP:Database Developer 2008

MCTS: SQL Server 2008, Implementation and Maintenance

MCSE: Data Platform -

November 29, 2020 at 10:39 pm

SQLWinther wrote:I totally agree with you that a lot of half full pages is bad for disk storage, memory consumption and reading a lot of extra pages due to the low density. But if you have indexes that look like this, with 83% fragmenation and avg_page_space_used_in_percent = 73%. you probably will not see a big improvement by rebuilding the index on SSD drives.

And what is the effect of page compression as it is still compressed in the cache? Do you suffer from the extra free space in the page?

Hi Håkan... thanks for your reply.

If you have a page density of only 73%, you not wasting just 27% of your RAM. You're actually wasting (100/73-1)*100 or 36.986% of both your RAM and SSD space. That's more than a 3rd. If your target Fill Factor is only 80%, then you're "only" wasting (80/73-1)*100 or 9.589%. That might be ok if the index has an evenly distributed insert order but it would be a mistake (and so would the Fill Factor of 80) if the index was an "ever-increasing" index that suffered only from "near end of index ExpAnsive Updates", in which case you'd either need to create a work around to eliminate the expansion or rebuild your index to a Fill Factor of 97 (just so you can tell what it is in the future by looking at only the Fill Factor) to prevent the totally wasted free space in the first 80 or 90% of the index. If your interested on how much low page density or unnecessarily low Fill Factors can cost you, here's a short article on the subject.

https://www.sqlservercentral.com/articles/rebuild-index

I mostly agree that 83% fragmentation doesn't matter (especially on an OLTP table that usually suffers only single row reads) once it's in memory but, although it's affect is much smaller on SSDs, you still have the issue with Read Aheads for non-OLTP functionality slowing you down a bit (although it's better to examine segment size rather than fragmentation for such a thing). It may not seem to matter much if you're looking only at a single index or even all the indexes on a single table but it does add up. So while an ounce of prevention may no longer be worth a pound of cure, it' can still be worth the ounce or two of cure, especially on systems that aren't dedicated only to OLTP.

I'll also agree that nothing is a panacea for indexes and access rates, etc. You have to know about the individual indexes AND their INSERT/UPDATE patterns to make a good decision but it's frequently a good thing to repair page density.

And, to be sure, I went from Jan 2016 'til early in 2020 without doing any index maintenance (except for one 250GB clustered index that was being deleted from, and yeah, I rebuilt stats on a regular basis) and, just like you say, because of SSDs, it really didn't affect performance much (there was some measurable improvement but it wasn't Earth shattering by any means) when I went back and rebuilt the ones that were logically fragmented. I did, however, save a shedload of space and it allowed much more of the databases to be in-memory all the time and that did make a fair bit more than just a trivial improvement for reporting and other multi-row non-OLTP processes.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 14 posts - 1 through 14 (of 14 total)

You must be logged in to reply to this topic. Login to reply