Finding a String Anywhere in Another String

-

August 2, 2018 at 2:25 pm

Jeff Moden - Thursday, August 2, 2018 9:02 AMhakan_l_borg - Thursday, August 2, 2018 12:09 AMThats not what I said either.Understood but a whole lot of people take it the way Luis stated.

To be quite honest, I dont understand what you mean then. Partitioning is primarily used for speeding up queries. Dont see any other reason than that for using the technique and if used correctly it certainly does just that.

-

August 2, 2018 at 3:47 pm

hakan_l_borg - Wednesday, August 1, 2018 9:58 AMHi Luis, well the APS sure ships as a parallellism solution to query perf issues so not sure what you mean?hakan_l_borg - Thursday, August 2, 2018 2:25 PMJeff Moden - Thursday, August 2, 2018 9:02 AMhakan_l_borg - Thursday, August 2, 2018 12:09 AMThats not what I said either.Understood but a whole lot of people take it the way Luis stated.

To be quite honest, I dont understand what you mean then. Partitioning is primarily used for speeding up queries. Dont see any other reason than that for using the technique and if used correctly it certainly does just that.

Actually, partitioning frequently slows things down. Parallelism can also slow things down. Your original post said...

Have you considered trying parallellism such as partition the table or similar to speed things up further

... and you followed that up with...well the APS sure ships as a parallellism solution to query perf issues

... and that's all what Luis was responding to. Neither parallelism nor partitioning is a panacea of performance and frequently works the opposite to actually slows things down. Even MPP appliances aren't a panacea because, in order for them to work correctly, you actually have to make a paradigm shift in the code that will live on them.--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 2, 2018 at 4:05 pm

Jeff Moden - Thursday, August 2, 2018 3:47 PMhakan_l_borg - Wednesday, August 1, 2018 9:58 AMHi Luis, well the APS sure ships as a parallellism solution to query perf issues so not sure what you mean?hakan_l_borg - Thursday, August 2, 2018 2:25 PMJeff Moden - Thursday, August 2, 2018 9:02 AMhakan_l_borg - Thursday, August 2, 2018 12:09 AMThats not what I said either.Understood but a whole lot of people take it the way Luis stated.

To be quite honest, I dont understand what you mean then. Partitioning is primarily used for speeding up queries. Dont see any other reason than that for using the technique and if used correctly it certainly does just that.

Actually, partitioning frequently slows things down. Parallelism can also slow things down. Your original post said...

Have you considered trying parallellism such as partition the table or similar to speed things up further

... and you followed that up with...well the APS sure ships as a parallellism solution to query perf issues

... and that's all what Luis was responding to. Neither parallelism nor partitioning is a panacea of performance and frequently works the opposite to actually slows things down. Even MPP appliances aren't a panacea because, in order for them to work correctly, you actually have to make a paradigm shift in the code that will live on them.Well, you can write most queries in a poor way if you dont know what youre doing I guess. Bit dont see this discussion going any further so have a great day Sir.

-

August 2, 2018 at 7:30 pm

Heh... tough crowd! 😉 Have a good one!

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 4, 2018 at 11:06 am

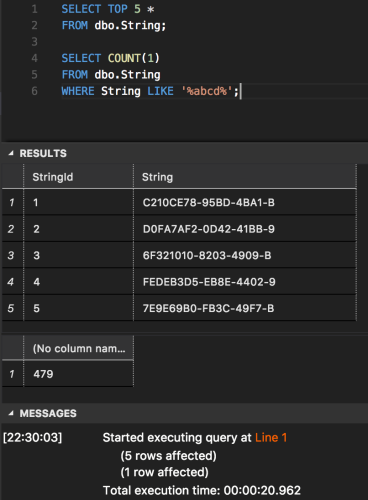

Janeta Parakosova - Thursday, August 2, 2018 1:34 PMHi Jeff,I used GUIDs to generate random strings with LEFT(NEWID(), 20) for the article. I downgraded the SQL Azure instance to the S0 tier since I wrote the article and the query now takes around 20 seconds.

The "sequential StringId" I referred to is the identity column.

Again, thanks for the article and thanks for posting the above, which was a real help.

You said that you were at the S2 level and now you've downgraded to the S0 level. I'm not an Azure user but, OMG, Azure is apparently terrible for performance even on such simple things as even the first tests on the 5 Million row table. That's what really caught my eye about this article... 14 seconds to do a handful of Index Seeks on a well indexed 5 million row table? That's crazy and so I wanted to check it out.

Since I'm both shocked at the poor performance of Azure (14 seconds divided by 235ms is 60 times slower!!!) and I was doing the testing anyway, I figured I'd post my test harness to allow people easily do their own testing and to see that the method you posted really does work even when Azure is such a dog. BigGrin

First, you just have to know I'm going to use a "Tally Table"-like function to do the splits to create the StringSplit table. It also come in handy as the row source for the "Pseudo-Cursor" that generates the original 5 Million row String table. Here's the code to create that. It comes in real handy for a whole lot of things.

CREATE FUNCTION [dbo].[fnTally]

/**********************************************************************************************************************

Purpose:

Return a column of BIGINTs from @ZeroOrOne up to and including @MaxN with a max value of 10 Quadrillion.Usage:

--===== Syntax example

SELECT t.N

FROM dbo.fnTally(@ZeroOrOne,@MaxN) t

;Notes:

1. This code works for SQL Server 2005 and up.

2. Based on Itzik Ben-Gan's cascading CTE (cCTE) method for creating a "readless" Tally Table source of BIGINTs.

Refer to the following URL for how it works. http://sqlmag.com/sql-server/virtual-auxiliary-table-numbers

3. To start a sequence at 0, @ZeroOrOne must be 0 or NULL. Any other value that's convertable to the BIT data-type

will cause the sequence to start at 1.

4. If @ZeroOrOne = 1 and @MaxN = 0, no rows will be returned.

5. If @MaxN is negative or NULL, a "TOP" error will be returned.

6. @MaxN must be a positive number from >= the value of @ZeroOrOne up to and including 10 Quadrillion. If a larger

number is used, the function will silently truncate after 10 Quadrillion. If you actually need a sequence with

that many values, you should consider using a different tool. ;-)

7. There will be a substantial reduction in performance if "N" is sorted in descending order. If a descending

sort is required, use code similar to the following. Performance will decrease by about 27% but it's still

very fast especially compared with just doing a simple descending sort on "N", which is about 20 times slower.

If @ZeroOrOne is a 0, in this case, remove the "+1" from the code.DECLARE @MaxN BIGINT;

SELECT @MaxN = 1000;

SELECT DescendingN = @MaxN-N+1

FROM dbo.fnTally(1,@MaxN);8. There is no performance penalty for sorting "N" in ascending order because the output is explicity sorted by

ROW_NUMBER() OVER (ORDER BY (SELECT NULL))Revision History:

Rev 00 - Unknown - Jeff Moden

- Initial creation with error handling for @MaxN.

Rev 01 - 09 Feb 2013 - Jeff Moden

- Modified to start at 0 or 1.

Rev 02 - 16 May 2013 - Jeff Moden

- Removed error handling for @MaxN because of exceptional cases.

Rev 03 - 07 Sep 2013 - Jeff Moden

- Change the max for @MaxN from 10 Billion to 10 Quadrillion to support an experiment.

This will also make it much more difficult for someone to actually get silent truncation in the future.

**********************************************************************************************************************/

(@ZeroOrOne BIT, @MaxN BIGINT)

RETURNS TABLE WITH SCHEMABINDING AS

RETURN WITH

E1(N) AS (SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL

SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1) --up to 10 rows

, E4(N) AS (SELECT 1 FROM E1 a, E1 b, E1 c, E1 d) --up to 10 Thousand rows

,E16(N) AS (SELECT 1 FROM E4 a, E4 b, E4 c, E4 d) --up to 10 Quadrillion rows

SELECT N = 0 WHERE ISNULL(@ZeroOrOne,0)= 0 --Conditionally start at 0.

UNION ALL

SELECT TOP(@MaxN) N = ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) FROM E16

;Here's the code to do the whole shebang. Details, of course, are in the comments.

-- REF: http://www.sqlservercentral.com/articles/Performance+Tuning/174811/

-- DROP TABLE dbo.String, StringSplit -- manual prep for a rerun

--NOTE: Just to set expectations so you don't panic during the runs...

-- The first table of 5 million rows takes 23 seconds to create.

-- The second table of 100 million rows takes about 9.6 minutes.

CHECKPOINT

;

--=============================================================================

-- This just creates the first table and populates it as prescribed by the

-- author using only T-SQL so folks don't have to buy a special tool.

--=============================================================================

RAISERROR ('

========== Create 5M row String table ========================================'

,0,0) WITH NOWAIT

;

--===== Start the timers

SET STATISTICS TIME ON

;

--===== This is the CREATE TABLE statement from the article with some minor

-- formatting changes to suit my personal preferences.

CREATE TABLE dbo.String

(

StringId INT NOT NULL PRIMARY KEY IDENTITY(1,1)

,String VARCHAR(20) NOT NULL

)

;

--===== This takes 18 seconds on my little i5 laptop with 4GM dedicated to SQL

-- Server 2008. My target database is in the SIMPLE Recovery Model so that

-- I can also take advantage of "Minimal Logging" for this exercise.

INSERT INTO dbo.String WITH (TABLOCK)

(String)

SELECT String = LEFT(NEWID(),20)

FROM dbo.fnTally(1,5000000)

OPTION (RECOMPILE)

;

--===== Turn off the timers

SET STATISTICS TIME OFF

;

GO

--=============================================================================

-- These are the first two queries from the article that took 14 seconds

-- each for the author. Again, the only change I've made is formatting

-- according to personal preference. I've also wrapped the code in SET

-- STATISTICS to measure CPU and duration.

--

-- Do note the extreme differences in the execution times that are coming

-- off my 6 year old laptop compared to the author's 14 seconds for each.

-- This is not the author's fault. It's Azure's fault.

--

-- If that's what Azure does on even such simple things, then I want no

-- part of it. :(

--=============================================================================

RAISERROR ('

========== Initial Tests ====================================================='

,0,0) WITH NOWAIT

;

--===== Start the timers

SET STATISTICS TIME ON

;

--===== Execute the test code

SELECT COUNT(1)

FROM dbo.String

WHERE String LIKE 'abcd%'

;

SELECT COUNT(1)

FROM dbo.String

WHERE String LIKE '%abcd%'

;

--===== Turn off the timers

SET STATISTICS TIME OFF

;

--=============================================================================

-- Add the index cited in the article and execute the test code again.

-- Things run as expected with the trailing wildcard running in sub-second

-- times and only slightly less CPU time with the same duration for the

-- leading/trailing wild card example.

--=============================================================================

RAISERROR ('========== Create index on String table =========================='

,0,0) WITH NOWAIT

;

--===== Start the timers

SET STATISTICS TIME ON

;

--===== Add the index (takes about 7 seconds to add this index)

CREATE NONCLUSTERED INDEX ix_nc_test ON dbo.String (String)

;

--===== Turn off the timers

SET STATISTICS TIME OFF

;

RAISERROR ('

========== Tests after added index ==========================================='

,0,0) WITH NOWAIT

;

--===== Start the timers

SET STATISTICS TIME ON

;

--===== Execute the test code

SELECT COUNT(1)

FROM dbo.String

WHERE String LIKE 'abcd%'

;

SELECT COUNT(1)

FROM dbo.String

WHERE String LIKE '%abcd%'

;

--===== Turn off the timers

SET STATISTICS TIME OFF

;

--=============================================================================

-- Now we'll create and populate the dbo.StringsSplit table using the

-- data from the original dbo.String table as the data source.

-- This creates 100 Million rows in about 9 minutes on my laptop.

--=============================================================================

RAISERROR ('

========== Create 100M row StringSplit table ================================='

,0,0) WITH NOWAIT

;

--===== Start the timers

SET STATISTICS TIME ON

;

--===== Create the table using the code from the article

CREATE TABLE dbo.StringSplit

(

StringId INT NOT NULL

,StringSplit VARCHAR(20) NOT NULL

)

;

--===== Add the Clustered Index, also from the article.

CREATE CLUSTERED INDEX ix_c_test ON dbo.StringSplit (StringSplit)

;

GO

--===== Split the data as prescribed in the article.

-- Again, using "Minimal Logging" here for performance.

INSERT INTO dbo.StringSplit WITH (TABLOCK)

(StringId,StringSplit)

SELECT s.StringId

,StringSplit = SUBSTRING(s.String,t.N,8000)

FROM dbo.String s

CROSS APPLY dbo.fnTally(1,LEN(s.String))t

-- ORDER BY StringSplit

OPTION (RECOMPILE)

;

--===== Turn off the timers

SET STATISTICS TIME OFF

;

CHECKPOINT

;

--=============================================================================

-- These are the final two queries from the article.

-- The author reports that the first query, which is the last query from

-- above. takes 14 seconds and the new StringSplit table takes 250ms.

--

-- Again, if that's what Azure does on even such simple things, then I

-- want no part of it because the execution times on my 6 year old laptop

-- simply blow both of those times away. :(

--=============================================================================

RAISERROR ('

========== Tests after NGram creation ========================================'

,0,0) WITH NOWAIT

;

--===== Start the timers

SET STATISTICS TIME ON

;

--===== Execute the test code (old method)

SELECT COUNT(StringId)

FROM dbo.String

WHERE String LIKE '%abcd%'

;

--===== Execute the test code (new method using stringSplit table)

SELECT COUNT(DISTINCT StringId)

FROM dbo.StringSplit

WHERE StringSplit LIKE 'abcd%'

;

--===== Turn off the timers

SET STATISTICS TIME OFF

;Here are the results from my 6 year old laptop using SQL Server 2008.

========== Create 5M row String table ========================================

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 80 ms.SQL Server Execution Times:

CPU time = 5132 ms, elapsed time = 23117 ms.(5000000 row(s) affected)

========== Initial Tests =====================================================

(1 row(s) affected)

SQL Server Execution Times:

CPU time = 732 ms, elapsed time = 227 ms.(1 row(s) affected)

SQL Server Execution Times:

CPU time = 2497 ms, elapsed time = 681 ms.

========== Create index on String table ==========================

SQL Server parse and compile time:

CPU time = 0 ms, elapsed time = 1 ms.SQL Server Execution Times:

CPU time = 22681 ms, elapsed time = 6685 ms.SQL Server Execution Times:

CPU time = 22681 ms, elapsed time = 6728 ms.========== Tests after added index ===========================================

(1 row(s) affected)

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 0 ms.(1 row(s) affected)

SQL Server Execution Times:

CPU time = 2543 ms, elapsed time = 672 ms.========== Create 100M row StringSplit table =================================

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 47 ms.SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 35 ms.

SQL Server parse and compile time:

CPU time = 16 ms, elapsed time = 65 ms.SQL Server Execution Times:

CPU time = 458003 ms, elapsed time = 577079 ms.(100000000 row(s) affected)

========== Tests after NGram creation ========================================

(1 row(s) affected)

SQL Server Execution Times:

CPU time = 2560 ms, elapsed time = 684 ms.(1 row(s) affected)

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 0 ms.Looking forward to anyone giving it a go, especially on Azure because, right now, Azure is looking like a non-option to me. It's just stupid how slow stuff ran for the author (and it's not the author's fault!!!) on Azure.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 5, 2018 at 3:40 am

The Azure S tier instances are very low spec and are no match for a multi-core laptop in any kind of performance testing 😉

😎 -

August 5, 2018 at 5:39 am

Eirikur Eiriksson - Sunday, August 5, 2018 3:40 AMThe Azure S tier instances are very low spec and are no match for a multi-core laptop in any kind of performance testing 😉

😎Interesting. Thanks, Eirikur. I'll have to look into what the various Azure tiers are. If you have a recommended link for such a thing, that would save some time.

I still can't believe that MS would even bother offering something that runs 60 to 253 times slower than a 6 year old laptop. Heh... and to think there's actually multiple levels in such a tier. I guess the "S" stands for "Slow".

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 5, 2018 at 7:12 am

Jeff Moden - Sunday, August 5, 2018 5:39 AMEirikur Eiriksson - Sunday, August 5, 2018 3:40 AMThe Azure S tier instances are very low spec and are no match for a multi-core laptop in any kind of performance testing 😉

😎Interesting. Thanks, Eirikur. I'll have to look into what the various Azure tiers are. If you have a recommended link for such a thing, that would save some time.

I still can't believe that MS would even bother offering something that runs 60 to 253 times slower than a 6 year old laptop. Heh... and to think there's actually multiple levels in such a tier. I guess the "S" stands for "Slow".

Hi Jeff, this is a good place to start, things do change quite quickly and one has to spend some time keeping up to it. Word of advice, if you come across anything older than six months, discard it as it'll most likely be obsolete.

😎With "S" tiers only mounting up to a handful of DTUs, "Slow" is more appropriate than "Standard" 😛

My advice is to start with the free subscription and play around, just remember to turn off the instance when not using it, Azure as all other cloud service providers work on a WYPIWYG (What You Pay Is What You Get) which translates to WYGIWYP (What You Get Is What You Pay), so even if not using the instance but leaving it running, will incur charges. -

August 5, 2018 at 8:11 am

Eirikur Eiriksson - Sunday, August 5, 2018 7:12 AMJeff Moden - Sunday, August 5, 2018 5:39 AMEirikur Eiriksson - Sunday, August 5, 2018 3:40 AMThe Azure S tier instances are very low spec and are no match for a multi-core laptop in any kind of performance testing 😉

😎Interesting. Thanks, Eirikur. I'll have to look into what the various Azure tiers are. If you have a recommended link for such a thing, that would save some time.

I still can't believe that MS would even bother offering something that runs 60 to 253 times slower than a 6 year old laptop. Heh... and to think there's actually multiple levels in such a tier. I guess the "S" stands for "Slow".

Hi Jeff, this is a good place to start, things do change quite quickly and one has to spend some time keeping up to it. Word of advice, if you come across anything older than six months, discard it as it'll most likely be obsolete.

😎With "S" tiers only mounting up to a handful of DTUs, "Slow" is more appropriate than "Standard" 😛

My advice is to start with the free subscription and play around, just remember to turn off the instance when not using it, Azure as all other cloud service providers work on a WYPIWYG (What You Pay Is What You Get) which translates to WYGIWYP (What You Get Is What You Pay), so even if not using the instance but leaving it running, will incur charges.Thanks, Eirikur. I've been there before and saw nothing of "S Tier" nor anything having to do with performance.

Looking at the pricing link on that page, is the "S Tier" considered to be the "General Purpose" pricing?

Seriously... in light of the revelation of slowness that's been discovered on this thread, how in the heck do you know what you're actually getting. This whole thing reminds me of the Gillette company... "Give them the razor, sell them the blades... and if they want good blades, charge them double".

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 5, 2018 at 8:48 am

Jeff Moden - Sunday, August 5, 2018 8:11 AMEirikur Eiriksson - Sunday, August 5, 2018 7:12 AMJeff Moden - Sunday, August 5, 2018 5:39 AMEirikur Eiriksson - Sunday, August 5, 2018 3:40 AMThe Azure S tier instances are very low spec and are no match for a multi-core laptop in any kind of performance testing 😉

😎Interesting. Thanks, Eirikur. I'll have to look into what the various Azure tiers are. If you have a recommended link for such a thing, that would save some time.

I still can't believe that MS would even bother offering something that runs 60 to 253 times slower than a 6 year old laptop. Heh... and to think there's actually multiple levels in such a tier. I guess the "S" stands for "Slow".

Hi Jeff, this is a good place to start, things do change quite quickly and one has to spend some time keeping up to it. Word of advice, if you come across anything older than six months, discard it as it'll most likely be obsolete.

😎With "S" tiers only mounting up to a handful of DTUs, "Slow" is more appropriate than "Standard" 😛

My advice is to start with the free subscription and play around, just remember to turn off the instance when not using it, Azure as all other cloud service providers work on a WYPIWYG (What You Pay Is What You Get) which translates to WYGIWYP (What You Get Is What You Pay), so even if not using the instance but leaving it running, will incur charges.Thanks, Eirikur. I've been there before and saw nothing of "S Tier" nor anything having to do with performance.

Looking at the pricing link on that page, is the "S Tier" considered to be the "General Purpose" pricing?

Seriously... in light of the revelation of slowness that's been discovered on this thread, how in the heck do you know what you're actually getting. This whole thing reminds me of the Gillette company... "Give them the razor, sell them the blades... and if they want good blades, charge them double".

Select the "Single Database" option and then the "DTU" option, that will give you the three tiers, Basic, Standard and Premium (Cough cough, more like a domestic business "class").

😎The main thing is to manage the resource usage, scale up and down as needed and think of a database instance more like a ad-hock/scheduled resource than cast iron. Have had clients where getting them to destroy/suspend/scale instances was saving them many $100Ks a year compared to running on own infrastructure, large portion being the licensing costs. I'm looking forward to the "Managed Instances", would advice anyone doing a serious migration to either get a support agreement (if you say please to Microsoft) or wait until it's out of preview, almost 100% compliant to on-prem instances.

-

August 5, 2018 at 11:31 am

Eirikur Eiriksson - Sunday, August 5, 2018 8:48 AMJeff Moden - Sunday, August 5, 2018 8:11 AMEirikur Eiriksson - Sunday, August 5, 2018 7:12 AMJeff Moden - Sunday, August 5, 2018 5:39 AMEirikur Eiriksson - Sunday, August 5, 2018 3:40 AMThe Azure S tier instances are very low spec and are no match for a multi-core laptop in any kind of performance testing 😉

😎Interesting. Thanks, Eirikur. I'll have to look into what the various Azure tiers are. If you have a recommended link for such a thing, that would save some time.

I still can't believe that MS would even bother offering something that runs 60 to 253 times slower than a 6 year old laptop. Heh... and to think there's actually multiple levels in such a tier. I guess the "S" stands for "Slow".

Hi Jeff, this is a good place to start, things do change quite quickly and one has to spend some time keeping up to it. Word of advice, if you come across anything older than six months, discard it as it'll most likely be obsolete.

😎With "S" tiers only mounting up to a handful of DTUs, "Slow" is more appropriate than "Standard" 😛

My advice is to start with the free subscription and play around, just remember to turn off the instance when not using it, Azure as all other cloud service providers work on a WYPIWYG (What You Pay Is What You Get) which translates to WYGIWYP (What You Get Is What You Pay), so even if not using the instance but leaving it running, will incur charges.Thanks, Eirikur. I've been there before and saw nothing of "S Tier" nor anything having to do with performance.

Looking at the pricing link on that page, is the "S Tier" considered to be the "General Purpose" pricing?

Seriously... in light of the revelation of slowness that's been discovered on this thread, how in the heck do you know what you're actually getting. This whole thing reminds me of the Gillette company... "Give them the razor, sell them the blades... and if they want good blades, charge them double".

Select the "Single Database" option and then the "DTU" option, that will give you the three tiers, Basic, Standard and Premium (Cough cough, more like a domestic business "class").

😎The main thing is to manage the resource usage, scale up and down as needed and think of a database instance more like a ad-hock/scheduled resource than cast iron. Have had clients where getting them to destroy/suspend/scale instances was saving them many $100Ks a year compared to running on own infrastructure, large portion being the licensing costs. I'm looking forward to the "Managed Instances", would advice anyone doing a serious migration to either get a support agreement (if you say please to Microsoft) or wait until it's out of preview, almost 100% compliant to on-prem instances.

So, between the S tier and the P tier, how many DTUs do you need to be cable to get the same performance that you might get out of a bloody 6 year old laptop? :blink:

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 11 posts - 16 through 26 (of 26 total)

You must be logged in to reply to this topic. Login to reply