Dimensional Modeling Case Study Part 2 - Days Dimension

-

February 7, 2024 at 12:00 am

Comments posted to this topic are about the item Dimensional Modeling Case Study Part 2 - Days Dimension

-

February 7, 2024 at 3:47 pm

I have used a similar approach to help show work orders that had been open a long time to stand-out more easily yet more orderly. Worked great.

-

February 7, 2024 at 6:59 pm

I like this a lot. And will definitely use it, thank you! But this sentence in the article had me scratching my head trying to understand what you were trying to achieve:

The script will generate results comprising the initial 31 rows, as illustrated below.

... thankfully when I ran the script I did get the result that I expected (365 * 5 rows, not 31 rows). So I think you meant to say :

The initial 31 rows generated in the script's results are illustrated below.

Or maybe you edited out a section of the article indicating that you would add a TOP 31 clause to generate a partial result for illustrative purposes?

Sorry to be persnickety, but I got to that sentence and thought I had completely misunderstood the code and the previous sections of the article.

Thank you again.

-

February 8, 2024 at 12:26 am

I've always had a bit of an issue with Data Warehouses and some of the techniques of using dimension tables... especially temporal dimension tables. While this is a very nicely written article, I see no particular advantage to having such a table when a well written iTVF would provide the same information in a very high performance, read-less manner. In fact, the very code used to create the table is very close to being perfect to form the iTVF and would not need the penalty of either having a table or using a recursive CTE. They'd just be direct calculations where you give it two dates or a number of days as columns in a set and it returns a row for each row in the set.

Again, I have no issues with the great job the author has done with this article. I just don't understand what looks like a fairly significant resource usage and performance disadvantage. And, please, I DO get that "well... everyone does data warehouses this way" but I disagree with that concept just as I have with many other concepts that seem slow, cumbersome, or just flat out unnecessary.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 8, 2024 at 12:42 am

You may have never seen a good data warehouse. The advantages over standard reporting procedures are significant. Calculations such as YTD, PTYD and the comparisons of the two, rolling averages of all kinds, as well as the freedom for the business user to get at the data in the way that they see fit--that works for them. You seem pretty set in your opinion, so I won't try to change your mind. But for others this can be a differing opinion.

-

February 8, 2024 at 1:05 am

Fair point @jeff, but I think Ron and many other people who build DWs (or Power BI developers?) are more concerned with making the data, and potential calculations, as transparent for self-service reporting, Excel imports, whatevs, than they are with the perf, resource, and space tradeoffs of a dimension table. I'm going to guess that the "Cloud-native" developers skew more in Ron's direction.

I personally do it both ways, depending on circumstances and expected usage.

There is a lot more to be said here, on both sides. This could be a very fun thread.

In any case, I congratulate the author for having (mostly) presented the basic ingredients of a common and useful data analysis scenario (data bands) with clarity. The ideas here can be applied (as you note, Jeff) with multiple strategies. Next time I need to do some bucketing/banding, I will definitely return to this article, and possibly everyone's comments here, to help me think about whether I'm designing my solution as cleanly and extensibly as I can.

-

February 8, 2024 at 2:39 am

RonKyle wrote:You may have never seen a good data warehouse. The advantages over standard reporting procedures are significant. Calculations such as YTD, PTYD and the comparisons of the two, rolling averages of all kinds, as well as the freedom for the business user to get at the data in the way that they see fit--that works for them. You seem pretty set in your opinion, so I won't try to change your mind. But for others this can be a differing opinion.

Thanks for taking the time to provide some feedback, Ron. I'm all for learning something new (especially new to me). I'm just not seeing quantifiable advantages and I'd seriously (with absolutely no sarcasm or irony intended) love to see someone do a head-to-head test. If anyone has a link to such a thing, I'd spend some good time studying it and trying it out.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 8, 2024 at 3:03 am

Lisa Slater Nicholls wrote:Fair point @jeff, but I think Ron and many other people who build DWs (or Power BI developers?) are more concerned with making the data, and potential calculations, as transparent for self-service reporting, Excel imports, whatevs, than they are with the perf, resource, and space tradeoffs of a dimension table. I'm going to guess that the "Cloud-native" developers skew more in Ron's direction.

I personally do it both ways, depending on circumstances and expected usage.

There is a lot more to be said here, on both sides. This could be a very fun thread.

In any case, I congratulate the author for having (mostly) presented the basic ingredients of a common and useful data analysis scenario (data bands) with clarity. The ideas here can be applied (as you note, Jeff) with multiple strategies. Next time I need to do some bucketing/banding, I will definitely return to this article, and possibly everyone's comments here, to help me think about whether I'm designing my solution as cleanly and extensibly as I can.

And this is why I truly love the environment here on SSC. We have a great article with people having different opinions and we're talking about them. I'm particularly grateful because so many people in other places simply say "Well.... if the advantages of these long standing methods aren't obvious, you're an idiot or seriously uneducated".

I've seriously not ever seen anyone do a head-to-head comparison of even doing a single table-to-method comparison. It reminds me a bit about all the BBFAATT out there about index maintenance. Until the last year or so, if you look at any of it, people way that index maintenance using the ol' 5/30 method is super important and that, YES, logical fragmentation CAN cause performance issues and that's the reason why you need to do index maintenance, etc, etc, ad infinitum... and yet, no one publishing those article ever proves that logical fragmentation causes any significant performance issues.

That's the same thing I'm seeing about data warehouses... and this article is a perfect example. Which is better? And easy to use table or an easy to use function? And, yep.... "Better" has many facets to consider... accuracy, performance, resource usage, easy of usage, etc.

I'd really like to see a comparison but there never seem to be any. Guess I'll have to make one. If you and/or Ron or anyone else would like to have some "fun" together, someone define a simple usage of this temporal range table from the article, I'll build what you folks would say is a typical usage table and a related function, and then let's test and learn together.

And, if you really want to have some fun, I have a bit of code that will setup a simple but kind-of large table (100 million rows, which isn't "large" by today's standards but suffices for some forms of testing). I can tell you up front that I do reporting on the table 7 different ways from Sunday in anywhere from 18ms (milliseconds) to 120ms and it requires no data warehouse and is always almost instantly up to date and supports drilldowns to single days for GUIs. It would be interesting to see how someone would do such a thing using a data warehouse.

And, nope... I'm not sure about any of it because I don't really have a clue about how to do something like that in a data warehouse.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 8, 2024 at 3:09 am

And, sorry... I forgot that it's a bit new... "BBFAATT" is pronounced as "bee-bee-fat" and is my acronym for "Books, Blogs, Forums, Articles, AI, `Tubes, and Talks".

Heh.. and if sarcasm is intended, the "fat" part of the pronunciation can be bleated like a goat. 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 8, 2024 at 4:47 am

Yep, Jeff, here's the very crux of it:

"Better" has many facets to consider... accuracy, performance, resource usage, easy of usage, etc.

I'm going to say a lot of things below, because I enjoy the exercise, but they all boil down to a higher-level question: what constitutes success? First you have to define that, and then you know what you really need to measure, and can figure out how. One of the other great things about this community is that it has a wide variety of practitioners, with different job requirements or success metrics.

We all would enjoy the results of head-to-head perf and resource tests for general knowledge, but those results would not necessarily speak to Ron's point, or mine, unless they showed very dramatic differences, and even then, we'd still take them with a grain of salt when deciding what to use, right? Because... how do those differences contribute to our success? And what else does?

Here's a recent case in point, although it has nothing to do with DWs and reporting:

My better half and I had an almost violent disagreement because he felt that a RBAR** implementation of a particular process was the better way to go than the set-based equivalent. This was very unlike him. I didn't want to give in, because of course this is a religious issue, but eventually acknowledged that he was right. (I apologize for the heresy.)

Both versions were accurate. His reasons included the following arguments in favor of the RBAR version:

- <li style="list-style-type: none;">

- <li style="list-style-type: none;">

- it was more easily unit-testable and loggable at a fine grain;

- its logic was more exactly congruent to the VB code it was replacing, so he could get sign-off from the business knowing they understood what they were agreeing to;

- it would be easier for developers of different types to understand and extend the business logic once it was written;

and -- very, very important --

- while marginally slower than the set-based version, it was plenty fast enough.

Here's a more BI-related case in point:

I used to work for a university; almost by definition, my users were a lot of very smart people who are not SQL-adept, have deep business domain knowledge, and present a limitless pool of questions. The appropriate solution for said limitless pool was to create a self-service architecture these people could leverage.

Of course, when I created SSAS models and datasets, a reporting warehouse, etc, I wanted them all to be as efficient as possible. But I didn't always care if they were the most efficient implementation that could possibly be created. I cared that they would be used, and useful, in a self-service framework.

It would be great if the most efficient, and most formally correct, implementation was also always the easiest to understand and work with. It just isn't always so, especially not with the wide variety of end-user reporting tools and UIs that MS and everybody else is busy coming up with.

How do you know when it is, or isn't? This is a hugely important question, and the answers are theoretically testable. But you need to think like a sociologist to get there. Your tests will require a lot more than a sample table and a couple of queries.

I have the utmost respect, almost reverence, for the rigor of your testing, Jeff, and your committment to doing it. By going through this exercise I am agreeing that everything should be tested, and testable, even when it's difficult.

So... Let's talk about what measurement might look like, in my university case, just for kicks.

I would have needed to set up two implementations, both accessible to users and their tools, both able to generate the same results, both adequately supplied with user documentation. Then chronicle stats for each on questions like :

- <li style="list-style-type: none;">

- <li style="list-style-type: none;">

- "over a period of <x> months, how many end users successfully answered their own questions without talking to the help desk, and how fast" and

- "what proportion of federal and state reporting requirements were met using the DW" and

- "how many grant $ were generated with the support of DW-provided graphs and statistics versus hand-gathered numbers", and

- "how adeptly or quickly did the public relations staff find what they needed to know to answer media questions", etc.

- If a single pool of users had access to both implementations one would also record their preferences, by which ones they used more frequently, analyze this by user type, etc.

Also, very important "were there any real issues of accuracy in either system?" (If there were, start over.)

I admit I didn't take the several years out to do this kind of discovery testing before providing a useful solution to my users, so I don't have those answers for you. But I did have years of experience working with users and reporting behind me, and I'm guessing Ron might too. I relied on that experience when designing the data sources I exposed to my users.

Maybe this is all a way of answering your implied question about WHY you haven't seen the head-to-head comparisons you are thinking of. Testing isn't besides the point to people who create DWs (at least good ones), but testing the right things is hard and takes a lot of time.

Thank you for your patience, if you have read this far.

** speaking of Modenisms, I doubt BBFAATT is going to attain the same ubiquity as RBAR, but nice try, Jeff <gd&r>.

- This reply was modified 2 years ago by Lisa Slater Nicholls.

- This reply was modified 2 years ago by Lisa Slater Nicholls.

-

February 8, 2024 at 5:41 am

Heh... no... I agree... BBFAATT will never be able to compete with RBAR.

Also, sorry about your argument with set-based v.s. RBAR thing. I'd have to see what the two things look like to make a comment there because a lot of people don't actually get the set-based stuff right and, at other times and as you say, it was close enough for him and you.

Getting back to the subject, Ron suggested that I was pretty well set in my opinion and that he wasn't going to try to change my opinion. I'm suggesting that my opinion can be changed if we do some testing. It also seems like others are set in their ways and, perhaps, I'm wasting my time because they don't want to change their opinion.

And, I don't actually have a problem with that. 😀

Shifting gears a bit and citing your wonderful feedback, you wrote...

Then chronicle stats for each on questions like :

"over a period of <x> months, how many end users successfully answered their own questions without talking to the help desk, and how fast" and

"what proportion of federal and state reporting requirements were met using the DW" and

"how many grant $ were generated with the support of DW-provided graphs and statistics versus hand-gathered numbers", and

"how adeptly or quickly did the public relations staff find what they needed to know to answer media questions", etc.

If a single pool of users had access to both implementations one would also record their preferences, by which ones they used more frequently, analyze this by user type, etc.

Did you find it necessary to use temporal range tables, like the one in this article, to solve for those questions? That's really what started this whole conversation.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 8, 2024 at 6:27 pm

Getting back to the subject, Ron suggested that I was pretty well set in my opinion and that he wasn't going to try to change my opinion. I'm suggesting that my opinion can be changed if we do some testing. It also seems like others are set in their ways and, perhaps, I'm wasting my time because they don't want to change their opinion.

My opinion is based on my observations of users using cubes that I or others have designed. It gives them a flexibility a standard report does not provide them. Unfortunately I can't think of a way to do an apples-to-applies comparison for reports that have different sources.

-

February 8, 2024 at 8:07 pm

> Did you find it necessary to use temporal range tables, like the one in this article, to solve for those questions? That's really what started this whole conversation.

Of course I wouldn't find it necessary. My point was that my USERS found it necessary to use temporal range tables, for THEIR questions, and that their questions were a very open-ended set. **

OK, caveat: I said "necessary" here, Jeff, because you did, but the truth is that without some complex social data available, such as was suggested by the metric-accumulating questions I listed, we'll never know whether temporal range tables were truly "necessary" for users to ask and answer their questions or just very, very preferable to them. But I can assure you that the latter, at least, is true.

In many of the jobs I've had, and this is also what Ron is talking about, the kind of flexibility that allows users to answer their own questions, and not be restricted to the answers to specific questions that we can provide via SQL or well-parameterized but canned reports for them, is extremely important. Enabling them to do it easily and quickly, even with a sense of discovery and delight, is a lot more important to them -- and hence to my success -- then the exact speed of their analysis queries.

Yes, when building datasources for user access, we have to balance out our priorities, so that what we provide is performant within some reasonable guidelines. But performance and resource efficiency will only get us so far. We have to build something they will come to.

Your responsibilities, and mine, and Ron's, and our criteria for success are all different, because (once again) this community has room for participants with lots of different job descriptions. I don't know Ron, but his words ring true to me, and I have experienced the same thing that he describes.

I don't think we're (any of us) looking to "change each other's opinions" here. I don't even think it's important. I learn from you every time you write, Jeff, and I bet Ron does too, and what we learn helps us build things that are used, and useful.

End of sermon.

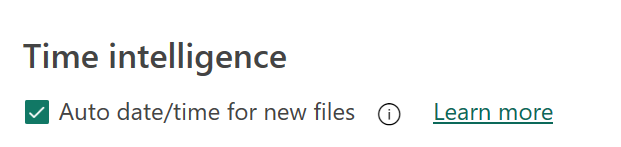

I'm going to switch gears here slightly to acknowledge that the very worst thing in Power BI, and the default setting that everybody should change immediately when they install the Desktop authoring environment, is this one:

... mindlessly doing cr*p like this is a good way to (a) kill perf and (b) give temporal dimensions a permanent bad name. Thanks, Microsoft!

And, seriously, thank you as always Jeff, for reminding us to test our assumptions on a regular basis.

** Off the top of my head, on a given day, different university users would be asking random temporal-related stuff like this, and you can probably see that the banding or buckets will change depending on the question:

- how did COVID lockdown periods affect retention rates?

- what are the diversity differences between classes of different years in the nursing school, and is this different at all in different age groups?

- how has the number of individuals who decline to state their gender changed over time?

- did the years in which admissions' applications were test-optional differ, along many different demographic axes, from those in which admissions was test-required?

- what percentage of the staff audits at least 2 university classes, over decades? What days of the week are most likely to see staff enrollment in courses?

- grouping financial aid guidelines according to a timeline of government and university policy shifts, what patterns do we see in admissions and retention, and are there differences in these patterns based on demographics?

- ....

-

February 9, 2024 at 5:50 am

Thank you all for your interest in my article. I truly appreciate your comments, as they help me strike a proper balance between function/sproc driven and model-driven approaches. In my opinion, there are different perspectives to consider when addressing solutions to a problem. In my real-world work experience, the temporal banding problem was raised by a data engineer proposing to store a set of bandings with 3-month increments into a dimension table to segment patient counts by banded wait days.

The initially proposed solution began with a model-driven approach involving some convoluted data engineering work to consume the banding data. As a dimensional modeler, a Data Warehouse (DW) approach is my natural choice for solving such problems, shifting the bulk of the work to ETL from the reporting process.

I am particularly intrigued by Jeff's idea of implementing the task as a functional module that behaves like a dimension dataset, or a runtime dimension if you will, during reporting time. Given that the existing frontend consists of more than a thousand stored procedures, I would not mind adopting a function/proc driven approach to tackle this banding problem using Jeff's method, if our data engineer decides to pursue that direction.

In the next series of case studies, I will discuss a solution to a 'what-if' business requirement where a user input will define bandings, which will then be read back as parameters for an Inline Table-Valued Function (iTVF) or stored procedure if we want to load the bandings into a generic dimension table to be consumed on the fly. While this solution may not integrate well with a downstream semantic layer or other BI visualization tools, it should work effectively for a reporting system driven by stored procedures.

The model-driven approach may not be better than, if not as good as, a function-driven approach for a single report request, particularly in terms of performance. It is definitely less flexible, as the bandings are predefined and stored in a static dimension table compared to parameterized bandings on the fly. I believe it ultimately comes down to the trade-off between ease of use and flexibility when focusing solely on dimension design. Performance gains on the DW frontend mainly come from proper aggregation and column store indexing, albeit at the cost of extra ETL time and loss of granularity.

However, without a well-designed user-facing data model, each stored procedure becomes a set of siloed SQL scripts. How can we effectively understand and maintain the reporting system without delving into hundreds of stored procedures? I believe a star schema with around 10 dimension tables referenced by a central fact table is much more readable.

I want to extend my gratitude to Lisa and Ron for sharing their insights from their DW experience. Indeed, there are numerous challenges in this fast-changing field.

-

February 9, 2024 at 6:01 pm

Hi Hang Liu,

Thank you again for your article and for this neat summary of the questions and trade-offs. I'm glad you are continuing the series.

>While this solution may not integrate well with a downstream semantic layer or other BI visualization tools, it should work effectively for a reporting system driven by stored procedures.

Well... I use a sort of a compromise, which in my own experience works pretty well without duplicating logic.

Here's what I (really) do: the logic is in a view, which may leverage a TVF if the logic is complex. Often, the logic is not complex -- for example, federal compliance reporting has a lot of different age-grouping requirements depending on the question, and these different sets are pretty arbitrary. I'll add a typical example below.

Once captured there, it's available for stored procedures or ad-hoc queries, canned reports, etc, that would be written by an IT professional.

But the views are the source for the dimensions in cubes and models accessible to end users for self-service reporting as well. So, in essence they are persisted, but only in the models (which are really efficient for this persistence), and the ETL time as well as ETL development time costs are minimal.

So, I've never really felt I needed to make a choice between the two approaches.

What I learned from your article was a cleaner way to think about temporal banding problems. Then, as Jeff indicated, your code would slot into a TVF very neatly.

Typical/runnable example of arbitrary banding as it would be captured in a view. When used in SQL queries or adding an FK to a dimension in a DW fact table, the join is usually on <source table>.Age between RangeStart and RangeEnd.

with bands (RangeStart, RangeEnd)

as (

select 1, 17

union all

select 18, 24

union all

select 25, 39

union all

select 40, 999

union all

select null, null

)

select row_number() over (

order by isnull(RangeStart, 10000)

) as RangeIndex,

RangeStart,

RangeEnd,

coalesce('Under ' + cast(RangeMin + 1 as varchar(3)),

cast(RangeMax as varchar(3)) + ' and Above',

cast(RangeStart as varchar(3)) +

' - ' + cast(RangeEnd as varchar(3)),

'Age Unknown') as RangeName

from bands

left join (

select min(RangeEnd) as RangeMin, null as RangeMax

from bands

union all

select null, max(RangeStart)

from bands

) minmax on RangeMin = RangeEnd

or RangeMax = RangeStart;

Viewing 15 posts - 1 through 15 (of 22 total)

You must be logged in to reply to this topic. Login to reply