Basic Always On Groups Randomly Stop Synchronizing


January 22, 2026 at 4:20 am

In one of my environments I have three pairs of SQL Server 2022 (CU18) Standard Edition servers running Always On Basic Availability Groups (BAGs). Randomly, one or more of the BAGs stops synchronizing, and the only way we've found to resolve it is to remove the database from the BAG and add it back again.

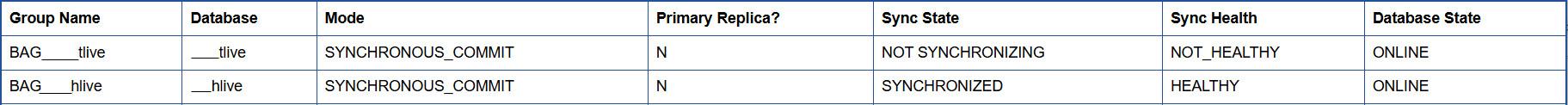

The screenshot above shows the error information we have: one BAG is NOT_HEALTHY, while the other three are fine (only two of the four BAGs are shown).

We thought it might be network-related, so for one pair of servers we removed the node the sysadmins thought was the problem and added a new node with a new IP address, etc. That didn't fix it. It's been suggested it may be a SAN issue, but then why only one database out of four on this cluster, and only one out of several on the other clusters? If the SAN were the issue, I would expect it to affect multiple BAGs. And if it were the SAN, why is it always the secondary that has the problem? On some days the primary replicas are running on the nodes in the secondary environment, and we still don't get issues on those primaries.
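If the cause were network-related, SQL Server usually records it against the replica connection rather than only in the cluster log. A minimal sketch, assuming it is run on the primary replica of the affected BAG (the primary reports on all replicas): it lists each replica's connection state with its last connection error, then the state and port of the local database mirroring endpoint that AG data movement uses. The choice of columns is an assumption about what is most useful here; run the endpoint query on every node.

```sql
-- Replica-level view: is each replica connected, and what was its last connection error?
-- Run on the primary, which reports on all replicas in the AG.
SELECT
    ag.name                            AS ag_name,
    ar.replica_server_name,
    ars.role_desc,
    ars.connected_state_desc,
    ars.synchronization_health_desc,
    ars.last_connect_error_number,
    ars.last_connect_error_description,
    ars.last_connect_error_timestamp
FROM sys.dm_hadr_availability_replica_states AS ars
JOIN sys.availability_replicas AS ar ON ar.replica_id = ars.replica_id
JOIN sys.availability_groups   AS ag ON ag.group_id   = ars.group_id
ORDER BY ag.name, ar.replica_server_name;

-- Endpoint check: the mirroring endpoint should be STARTED on every replica.
SELECT e.name, e.state_desc, e.role_desc, t.port
FROM sys.database_mirroring_endpoints AS e
JOIN sys.tcp_endpoints AS t ON t.endpoint_id = e.endpoint_id;
```

A recent last_connect_error_timestamp that lines up with the NOT_HEALTHY event would point back at the network; an empty error column would weaken that theory.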

I recall that back in the SQL Server 2012 days, when IPv6 was new, we had issues and had to disable IPv6 to get a stable AG. I was under the impression this had been resolved, though, and I haven't seen any recent reports of it.

We haven't found anything meaningful in the Failover Cluster Manager error log when the AG goes belly up, apart from "The Cluster service failed to bring the clustered role 'BAG_tlive' completely online or offline. One or more resources may be in a failed state. ...", which is not very enlightening. We can't identify the resource that "may be" in a failed state!

At the moment I'm thinking of asking the sysadmins to disable IPv6! Are there any other things I could look at?

Leo

Nothing in life is ever so complicated that with a little work it can't be made more complicated.

January 22, 2026 at 2:50 pm

My advice - check all of the logs. On the primary and on the secondary. The SQL logs, the event logs, every log you can find. Start with the Cluster Manager Error log so you can find the exact time of failure, then move on to the SQL Server logs on both systems and check the log at that time for any errors. If nothing stands out, check the event logs on the servers themselves for anything happening at that time.
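Alongside the SQL Server error log, every AG replica writes to the built-in AlwaysOn_health Extended Events session, which records role changes, connection errors, and data-movement suspensions. A minimal sketch for pulling the events around a failure, assuming the .xel files are still in the instance's default LOG folder (so a bare file pattern resolves there; use the full path otherwise). The time window is a hypothetical example, and XE timestamps are UTC. Run it on both the primary and the secondary.

```sql
-- Read the AlwaysOn_health XE files for a window around the failure.
-- @from/@to are hypothetical values; XE event timestamps are in UTC.
DECLARE @from datetime2 = '2026-01-22T03:00:00',
        @to   datetime2 = '2026-01-22T05:00:00';

WITH xe AS (
    SELECT
        object_name             AS event_name,
        CAST(event_data AS xml) AS event_xml
    FROM sys.fn_xe_file_target_read_file(N'AlwaysOn_health*.xel', NULL, NULL, NULL)
)
SELECT
    event_xml.value('(/event/@timestamp)[1]', 'datetime2') AS event_time_utc,
    event_name,
    event_xml   -- full payload: error numbers, replica and database names
FROM xe
WHERE event_xml.value('(/event/@timestamp)[1]', 'datetime2') BETWEEN @from AND @to
ORDER BY event_time_utc;
```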

I would not recommend making any changes to the server itself as you indicated that it is only affecting 1 instance and rebuilding that instance had no effect. As long as the configuration of the server hosting that instance matches the other servers where problems don't exist, I would not change things. For example, if 3 of your servers have IPv6 disabled and only 1 has it enabled AND the one that has it enabled is the problematic one, then I'd disable it. Otherwise, I wouldn't make that change.
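One way to do that comparison from inside SQL Server is a sketch like the following, assuming you run it on a node of each cluster and compare the results side by side. It shows the subnets the cluster exposes to SQL Server (is_ipv4 = 0 marks an IPv6 subnet) and the state of each cluster member. It only covers what the cluster reports, not every NIC setting on the host.

```sql
-- Cluster networks as SQL Server sees them: one row per member per subnet.
-- is_ipv4 = 0 means the subnet is IPv6; compare this output across clusters.
SELECT member_name,
       network_subnet_ip,
       network_subnet_prefix_length,
       is_public,
       is_ipv4
FROM sys.dm_hadr_cluster_networks
ORDER BY member_name, is_ipv4 DESC;

-- Node membership and state, to confirm the clusters are configured alike.
SELECT member_name, member_type_desc, member_state_desc, number_of_quorum_votes
FROM sys.dm_hadr_cluster_members;
```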

I'd also look for a pattern in the failures: is it every day at midnight (for example), Monday at 2 PM, or some other predictable time? If so, it is very likely a scheduled task kicking off that is breaking things. By any chance, is the antivirus on that server configured to exclude the .mdf, .ndf, and .ldf files? Or, better yet, to exclude the folders containing the SQL Server data?
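For the scheduled-task angle, SQL Agent jobs such as index maintenance or backups are easy to rule in or out. A minimal sketch that lists the jobs whose run overlapped a window around a failure, using msdb job history (which stores dates and times as integers in server local time). The window is hypothetical, and this does not cover Windows Task Scheduler, AV scans, or SAN-side snapshots.

```sql
-- SQL Agent jobs whose run overlapped a window around the failure.
-- @from/@to are hypothetical; Agent history is recorded in server local time.
DECLARE @from datetime = '2026-01-22T03:30:00',
        @to   datetime = '2026-01-22T04:30:00';

WITH runs AS (
    SELECT
        j.name       AS job_name,
        h.run_status,  -- 0 = failed, 1 = succeeded, 2 = retry, 3 = canceled
        -- run_date is yyyymmdd and run_time is hhmmss, both stored as int
        DATETIMEFROMPARTS(h.run_date / 10000, h.run_date / 100 % 100, h.run_date % 100,
                          h.run_time / 10000, h.run_time / 100 % 100, h.run_time % 100, 0) AS started_at,
        -- run_duration is hhmmss as int; convert it to seconds
        h.run_duration / 10000 * 3600 + h.run_duration / 100 % 100 * 60 + h.run_duration % 100 AS duration_s
    FROM msdb.dbo.sysjobhistory AS h
    JOIN msdb.dbo.sysjobs       AS j ON j.job_id = h.job_id
    WHERE h.step_id = 0  -- job outcome rows only
)
SELECT job_name, run_status, started_at,
       DATEADD(second, duration_s, started_at) AS ended_at
FROM runs
WHERE started_at <= @to
  AND DATEADD(second, duration_s, started_at) >= @from
ORDER BY started_at;
```

If the same job shows up around several failures, that is the pattern worth chasing.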

If none of the above helps, I'd check the official Microsoft documentation before implementing anything: https://learn.microsoft.com/en-us/troubleshoot/sql/database-engine/availability-groups/troubleshooting-availability-group-failover

Microsoft has some pretty good guidance on how to troubleshoot AG issues.

I do not recommend making "random changes" (such as disabling IPv6 on ONE server), as that is just guessing at the problem. I prefer to determine the cause of the problem and correct the issue itself. It MAY be IPv6, but disabling IPv6 MAY just hide the underlying problem or delay the NOT_HEALTHY state.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum, you shouldn't blindly follow it. Always test on a test server to see if there are any negative side effects before making changes to live systems!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system.

January 30, 2026 at 5:40 am

If your Basic Always On database randomly stops syncing, check sys.dm_hadr_database_replica_states to see which database is stuck. Look at the SQL Server, Windows, and cluster logs for network or cluster issues, and make sure your AG endpoints are reachable. If data movement has been suspended, you can usually restart it by running ALTER DATABASE [YourDB] SET HADR RESUME on the affected replica. Also keep an eye on I/O, CPU, or network spikes, because these often cause synchronization to pause; once everything is stable, the database should start syncing again.
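The first and last steps of that advice can be sketched as follows, assuming it is run on the primary (a secondary reports only its own databases). The query is read-only: it lists databases that are not HEALTHY or are suspended, with the suspend reason and the send/redo queue sizes. The RESUME statement is left commented out on purpose, and [YourDB] is a placeholder; it only helps when is_suspended = 1.

```sql
-- Which AG databases are unhealthy or suspended, and why.
-- Run on the primary to see every replica.
SELECT
    ag.name                  AS ag_name,
    ar.replica_server_name,
    adc.database_name,
    drs.synchronization_state_desc,
    drs.synchronization_health_desc,
    drs.is_suspended,
    drs.suspend_reason_desc,
    drs.log_send_queue_size, -- KB of log not yet sent to the secondary
    drs.redo_queue_size,     -- KB of log received but not yet redone
    drs.last_hardened_time
FROM sys.dm_hadr_database_replica_states AS drs
JOIN sys.availability_groups            AS ag  ON ag.group_id           = drs.group_id
JOIN sys.availability_replicas          AS ar  ON ar.replica_id         = drs.replica_id
JOIN sys.availability_databases_cluster AS adc ON adc.group_database_id = drs.group_database_id
WHERE drs.synchronization_health_desc <> N'HEALTHY'
   OR drs.is_suspended = 1
ORDER BY ag.name, ar.replica_server_name;

-- If is_suspended = 1, resume data movement on the replica hosting the
-- suspended database (run it there). [YourDB] is a placeholder.
-- ALTER DATABASE [YourDB] SET HADR RESUME;
```

If the database is NOT_HEALTHY but not suspended, RESUME will not help, and the connection and log checks earlier in the thread are the better next step.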
