AVG based on condition

-

July 22, 2019 at 11:30 am

Hi, i need the AVERAGE flow between two dates: TODAY() and the most recent date that respect this condition Flow less than 20 for at leat 5 days in a row (could be more than 5 days).

Here's a sample:

TIMESTAMP FLOW

18/07/2019 100

17/07/2019 110

16/07/2019 120

15/07/2019 120

14/07/2019 110

13/07/2019 13

12/07/2019 120

11/07/2019 120

10/07/2019 100

09/07/2019 12

08/07/2019 10

07/07/2019 100

06/07/2019 120

05/07/2019 3

04/07/2019 5

03/07/2019 6

02/07/2019 4

01/07/2019 3

30/06/2019 300

29/06/2019 200

?The result for this sample is the AVG of the flow between 18/07/2019 and 05/07/2019.

Thank you in advance

-

July 22, 2019 at 2:41 pm

comment deleted

- This reply was modified 6 years, 7 months ago by Jonathan AC Roberts.

-

July 22, 2019 at 2:41 pm

Hi Likiraght and welcome aboard!

Your question is good and so is your test data but you'll find that almost everyone likes to test their coded solutions before they post a reply. They (including me) don't want to have to take the time to convert your test data to code and many heavy hitters on this forum will simply skip over posts that don't provide the test data in a "readily consumable" format.

With that thought in mind, please read'n'heed the article at the link in my signature line below. It'll really help us help you in the future.

Since you're brand new here, I've converted your test data into "readily consumable" code for you as follows (it's just one of many methods that result in the same thing... "readily consumable" test data):

--===== Create the given test data in a readily consumable format.

-- You should do this whenever you post test data.

IF OBJECT_ID('tempdb..#TestData','U') IS NOT NULL

DROP TABLE #TestData

;

SELECT [TimeStamp] = CONVERT(DATE,v.BrFrDate,103)

,Flow = CONVERT(INT,v.Flow)

INTO #TestData

FROM (VALUES

('18/07/2019',100)

,('17/07/2019',110)

,('16/07/2019',120)

,('15/07/2019',120)

,('14/07/2019',110)

,('13/07/2019',13)

,('12/07/2019',120)

,('11/07/2019',120)

,('10/07/2019',100)

,('09/07/2019',12)

,('08/07/2019',10)

,('07/07/2019',100)

,('06/07/2019',120)

,('05/07/2019',3)

,('04/07/2019',5)

,('03/07/2019',6)

,('02/07/2019',4)

,('01/07/2019',3)

,('30/06/2019',300)

,('29/06/2019',200)

)v(BrFrDate,Flow)

;Here's one solution to your problem... it uses a rather old fashion method of subtracting a partitioned ever-increasing number from the date to form group "numbers", which appear to be dates but are just a way of grouping rows. I've also taken the liberty to include a few more things that I think they may ask you for in the future.

WITH cteGrp AS

(--==== Substact an increasing number of days from the TimeStamp to form groups of dates based on

-- whether or not they have a FLOW of < 20.

SELECT GrpNum = DATEADD( dd

,-ROW_NUMBER() OVER (PARTITION BY CASE WHEN Flow >= 20 THEN 1 ELSE 0 END

ORDER BY [TimeStamp])

,[TimeStamp])

,[TimeStamp]

,Flow

FROM #TestData

)

,cteCnt AS

(--===== Determine a count for each group so we can figure out if there are 5 or more in a group.

SELECT GrpNum

,GrpCnt = COUNT(*) OVER (PARTITION BY GrpNum)

,[TimeStamp]

,Flow

FROM cteGrp

)

,cteCutOffDate AS

(--==== Find the maximum TimeStamp of any group having a FLOW of <20 for 5 days or more

SELECT CutOffDate = MAX([TimeStamp])

FROM cteCnt

WHERE Flow < 20 AND GrpCnt >=5

)

SELECT StartDate = MIN([TimeStamp])

,EndDate = MAX([TimeStamp])

,AvgFlow = AVG(Flow+0.0)

,DaysMeasured = COUNT(*)

,TotalFlow = SUM(Flow)

FROM #TestData td

WHERE [TimeStamp] > (SELECT CutOffDate FROM cteCutOffDate)

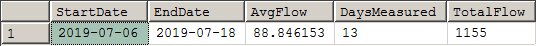

;For the test data you provided, that results in the following:

I suppose there's probably a simpler solution that uses Lead/Lag but I'm currently working on a machine that only has SQL Server 2008 on it and so don't have the ability to use Lead/Lag.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

July 22, 2019 at 2:48 pm

p.s. If you'd like to learn more about the very useful method of conditionally grouping dates by simple subtraction of an increasing number, please see the following article.

https://www.sqlservercentral.com/articles/group-islands-of-contiguous-dates-sql-spackle

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

July 22, 2019 at 3:23 pm

A couple of other points.

- Always use a temp table or a table variable when providing sample data.

- It obviates the need for cleanup.

- Use an international date format instead of a localized date format.

- YYYYMMDD

- There is newish syntax that greatly simplifies conditionally dropping a table. It's much more succinct and descriptive.

DROP TABLE IF EXISTS #TestData

Here is my take.

-- I used #flows rather than #TestData.

WITH low_flows AS

(

SELECT *, CASE WHEN MAX(FLOW) OVER( ORDER BY f.[TIMESTAMP] ROWS BETWEEN 4 PRECEDING AND CURRENT ROW) < 20 THEN 1 ELSE 0 END AS low_flow

FROM #flows AS f

)

, last_low_flows AS

(

SELECT *, MAX(CASE WHEN lf.low_flow = 1 THEN lf.[TIMESTAMP] END) OVER() AS last_low_flow

FROM low_flows AS lf

)

SELECT AVG(llf.FLOW), llf.last_low_flow, MAX(llf.[TIMESTAMP])

FROM last_low_flows AS llf

WHERE llf.[TIMESTAMP] BETWEEN llf.last_low_flow AND GETDATE()

GROUP BY llf.last_low_flow;And here is a comparison of the scans and reads:

DREW

Table 'Worktable'. Scan count 3, logical reads 45, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table '#flows. Scan count 1, logical reads 1, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

JEFF

Table '#TestData'. Scan count 2, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Worktable'. Scan count 3, logical reads 65, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.J. Drew Allen

Business Intelligence Analyst

Philadelphia, PA - Always use a temp table or a table variable when providing sample data.

Viewing 5 posts - 1 through 5 (of 5 total)

You must be logged in to reply to this topic. Login to reply