Have you ever made sausage? The steps that go into making it are gut wrenching to some, but the end product is delicious when properly made. The steps below have a strong resemblance to making sausage and the outcome was as good as any Uzinger's sold at a Brewer's game.

The Ground Rules

- One SSIS server to host development, QA and Test for a set of application database servers.

- Once a package is promoted to development, the internals of the package should never be touched as it is moved up the environments.

- The package must be location aware to minimize the possibility of environments getting mixed up

- The packages are stored on the file system

- The configurations are stored in a database

- SSIS packages are called via jobs (via schedules or applications that start the job).

Disclaimer

I came up with all the brilliant ideas expressed here, except the ones I stole from my coworkers and people who share their knowledge all over the web and in print. In case you have been hiding in a cave, or your company took away Internet for the past few years - checkout Jamie Thomson's old blog when he worked at Conchango - it's chock full of really good stuff.

Architecture

The architecture needs to be explained first. As I wrote above, we support development, QA, and test on one SSIS server and production on another. However, this solution will work on more or less environments (I am thinking if you have a lot more, you either like to promote code, or you work for the government and are into job justification). We utilize domain accounts for the SSIS packages credentials. This allows us to keep from dealing with the password storage hassles/security risks and allows us to utilize a common setup across our application databases. The appropriate ETL account is setup on the same environment servers with access to an ETL "schema" so that any ETL work can use that schema. This keeps permissions simple and easy to manage. In addition, the ETL account allows us to use that account as a method to differentiate the SSIS_Configurations records for that particular environment.

Service Accounts

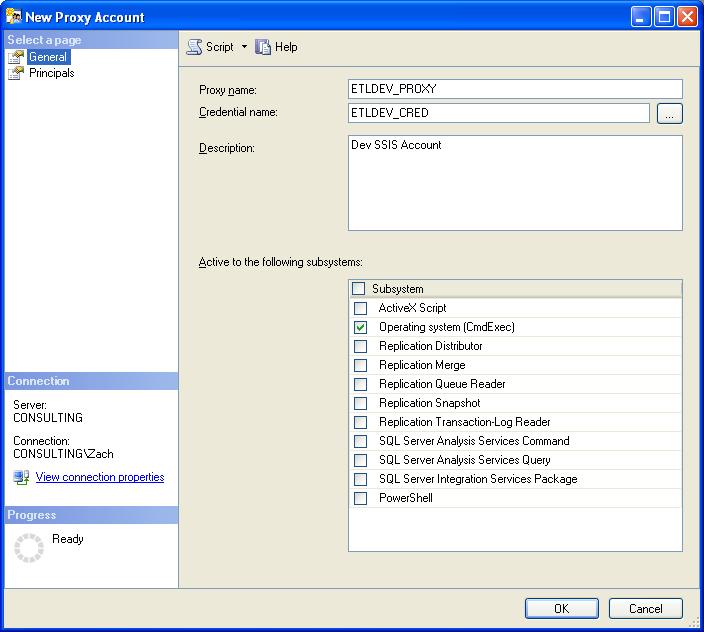

A network account was created for each environment we needed to support (ETLDev, ETLQA, ETLTest, ETLProd). I added the accounts to the SSIS server and created a credential for each one and tied them to a proxy with access to the CMDExec on the server.

Each network account has access to read the c:\ETL\Config directory and explicitly given access (read/modify, etc) to its corresponding folder structure. This actually helps keep everyone out of trouble. The ETLTest proxy could never access the QA environment packages, eliminating the potential confusion and problems associated with that. In the company I work for, we have several instances of different business units in each environment, so we utilize scripts to set baseline security on all instances. In this case we created an ETL "Schema" on each instance, and automatically created the proper security per corresponding environment with the login's role having access to the schema. We then standardized security on the databases for the ETL accounts and developers knew exactly what permissions the accounts had natively, and anything developed in the ETL space would work as well.

This whole "architecture" resolves around the Domain Accounts. When a package is called from a job, the Run As is set to the appropriate environment account. This account then runs the package and provides the context for the package to know what environment its being run for (so it can find the right configurations).

File Structure

The file structure is the standard setup that is recommended all over the web - a picture is worth a few words, at least this one:

Configuration

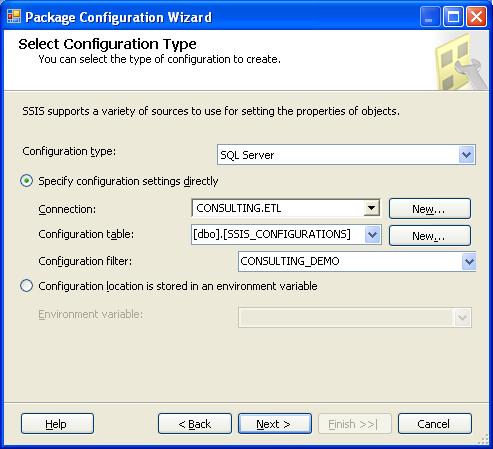

The experts suggested indirect configuration was the way to go and after some experience I agree. The setup that is recommended:

- Create an environment variable that contains a path to the .dtsconfig file

- Create the .dtsconfig file and add a connection that points to the database where the configurations live

- Setup the configurations database (the meat and potatoes of this article).

This setup really lends to flexibility in changing databases or file paths should the need arise.

Now for the hacks to the configuration table. First we start out by making two changes to the main table.

CREATE TABLE [dbo].[SSIS_Configurations_Storage](

[ConfigurationId] [int] IDENTITY(1,1) NOT NULL,

[ConfigurationFilter] [nvarchar](255) NOT NULL,

[ConfiguredValue] [nvarchar](255) NULL,

[PackagePath] [nvarchar](255) NOT NULL,

[ConfiguredValueType] [nvarchar](20) NOT NULL,

[ServiceAccount] [nvarchar](128) NULL,

CONSTRAINT [PK_SSIS Configurations] PRIMARY KEY CLUSTERED

(

[ConfigurationId] ASC

) ON [PRIMARY]

) ON [PRIMARY]

The surrogate key just provides a clean way to handle moving the configurations from one environment to the next, and the ServiceAccount attribute is needed to work with the environments. Next, we need to create a view that acts as the table that BIDS will see during the configuration setup in the package.

CREATE VIEW [dbo].[SSIS_CONFIGURATIONS]

AS

SELECT ConfigurationFilter, ConfiguredValue, PackagePath, ConfiguredValueType

FROM dbo.SSIS_Configurations_Storage

WHERE (ServiceAccount = CURRENT_USER)

GO

We then create a trigger on this view , this allows your normal configuration process to works, but gathers the current user who saved the configuration. When moving from one environment to the next, a simple application or stored procedure masking the credentials can make this pretty easy. I built a simple c# app to allow for viewing, editing and saving as the ETLQA, ETLTest, etc user to simplify it even further.

CREATE TRIGGER [dbo].[SSIS_CONFIGURATIONS_ins]

ON [dbo].[SSIS_CONFIGURATIONS]

INSTEAD OF INSERT

AS

BEGIN

SET NOCOUNT ON; INSERT INTO dbo.SSIS_Configurations_Storage

([ConfigurationFilter]

,[ConfiguredValue]

,[PackagePath]

,[ConfiguredValueType]

,[ServiceAccount])

SELECT ConfigurationFilter

,ConfiguredValue

,PackagePath

,ConfiguredValueType

,CURRENT_USER

FROM inserted END

Alright, now the bulk of the hacks are completed its time to setup a job to run a package - its the easy part, and if conventions are followed is extremely quick to duplicate for your next environment after the job is set up the first time.

Job Naming

To make this work aesthetically, all job's are named in the Hungarian notation style (No, I don't advocate Hungarian notation typically, nor use it in my own applications). This serves two purposes, it groups jobs together and is further simplified when scripting out the first version of the job and replacing the "dev" with "test" for the entire job. Do this simple CTRL + H command changes the path to the SSIS package, the credential name, the job owner and the job name. Convention over configuration.

devConsultingLoadDemo

qaConsultingLoadDemo

testConsultingLoadDemo

The useful part of this scheme with the environment folder permissions is that if you change one path, but not the credential you will get a permission denied error accessing the folder!

In Closing

Its a pretty ugly and bizarre setup but it does work well if your environment fits the bill. If you get promoted for this implementation; claim its your own and you can owe me a beer. 😉 If you have a better idea, please leave it in the forums or if public humiliation isn't your thing, put it in a private message and send it off to me.