Microsoft Fabric has continued to evolve since its general availability in November 2023. Developers have asked for a command line interface (CLI) to manage the objects in Fabric, and Microsoft responded by releasing a preview of the Fabric Command Line Interface (CLI) on April 1, 2025. Please see the announcement on the Fabric Blog for more information. As a data architect, I build both Lakehouses and Warehouses for clients. How can we use this new interface to manage a Fabric Lakehouse?

Business Problem

The Fabric Command Line Interface (CLI) is a module that can be downloaded and installed into a Python environment. Today, we are going to explore how to install, configure, and use the command line to manage a couple of different Fabric Lakehouses.

Install and configure

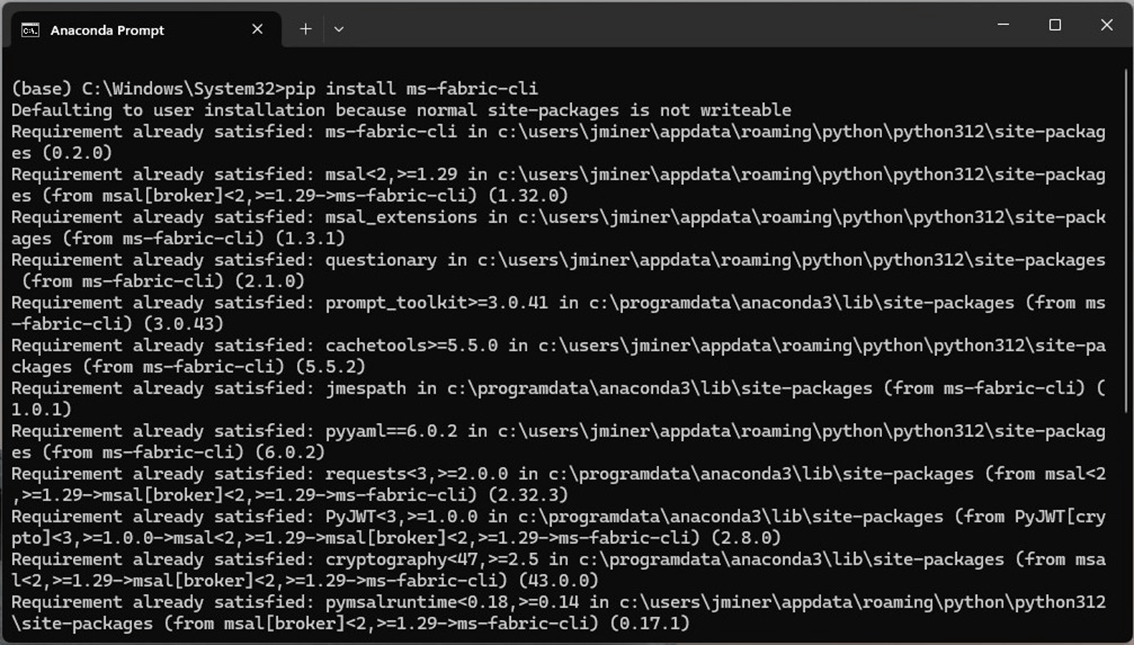

Anaconda Python is a popular software distribution that contains the Python language, the Spyder editor, Jupyter Notebooks, and the Anaconda prompt. Please see the website for all the details on this free software. I assume you have downloaded and installed Anaconda. The next step is to install the “ms-fabric-cli” package using the pip command. The image below shows the output of a typical installation. Use the “--quiet” option to suppress the output.

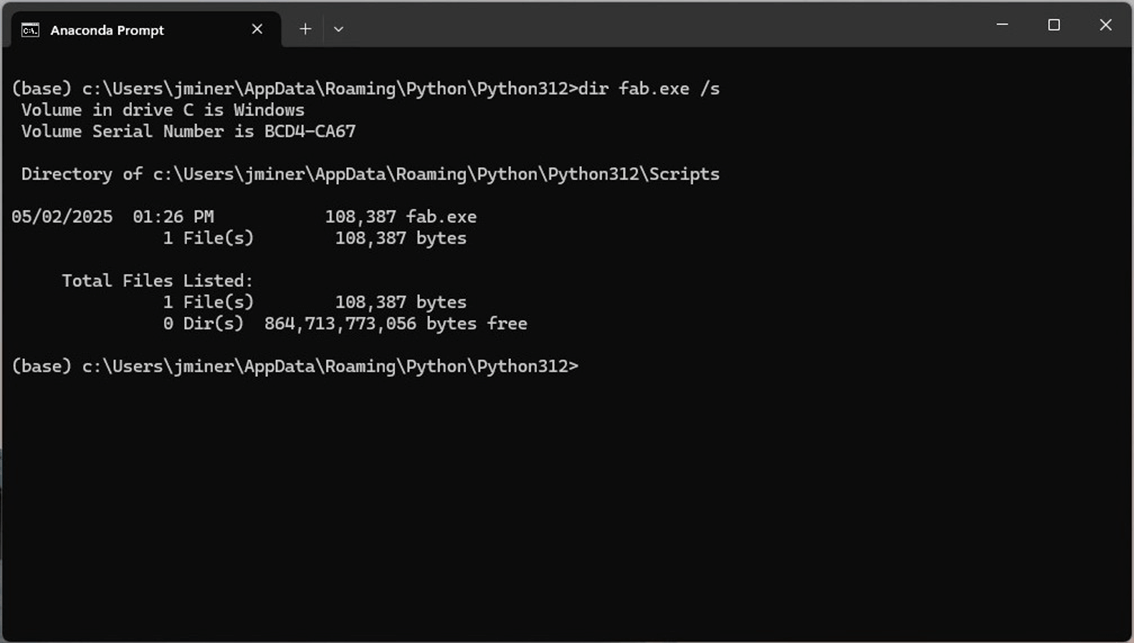

If we look for the location of the fab.exe program, we can see that it was installed under my local user profile. Because that folder is not on the PATH, this is a problem if we want to call the CLI from any directory.

One way to fix this issue is to run a batch file that appends the above directory to the PATH variable using the set command. Please see the DOS commands below on how to do this. The change only lasts while the Anaconda prompt remains open.

```bat
REM
REM -- add Fabric CLI to path --
REM
set PATH=%PATH%;c:\users\jminer\appdata\roaming\python\python312\Scripts
```

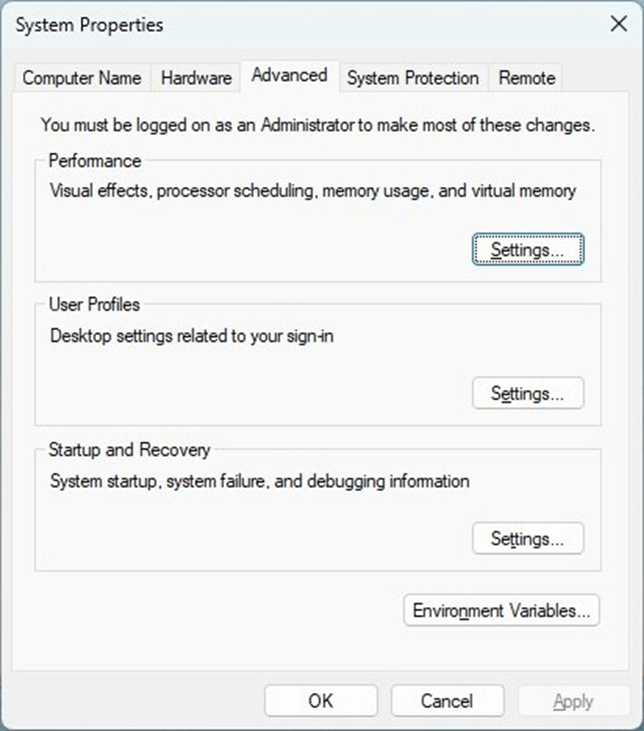

A better solution is to use the advanced system properties section of the Control Panel to make a persistent change to the environment variable. In my organization, I do not have administrative rights to the computer. However, I can get an audited command prompt that does have elevated privileges. Use the following Windows command to bring up the required window.

```bat
REM
REM -- open advanced properties --
REM
SystemPropertiesAdvanced
```

The image below shows the Advanced tab of the System Properties dialog in the Control Panel.

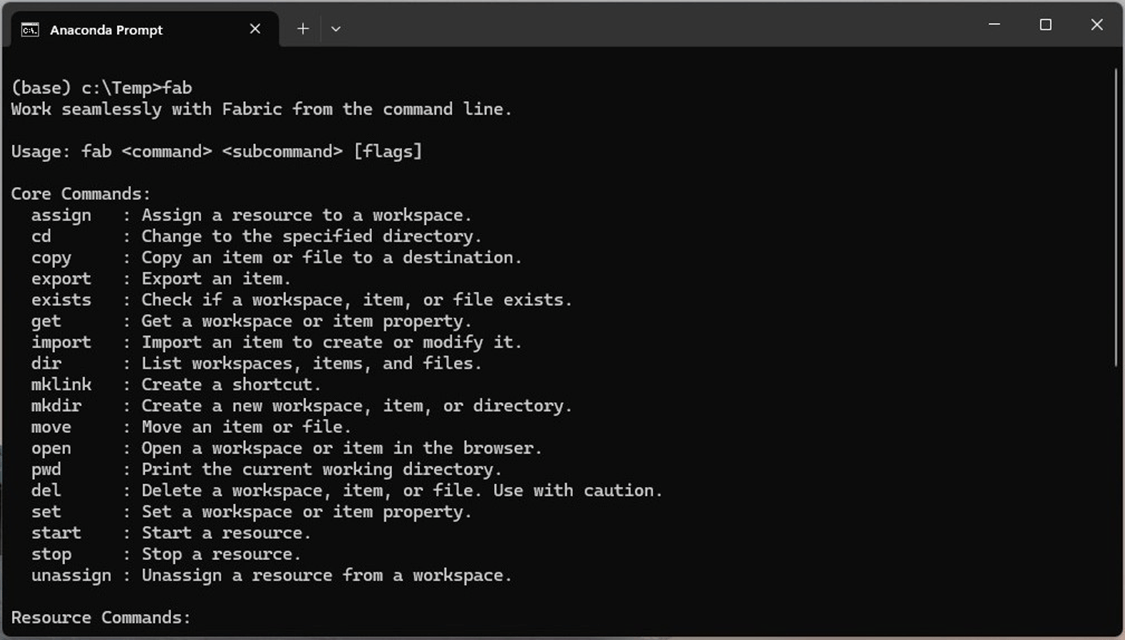

Regardless of technique, please update the PATH variable now. The image below shows that the updated PATH variable is working, since I am executing fab.exe from the c:\temp directory. If we do not pass a command to the executable, it displays the help page.

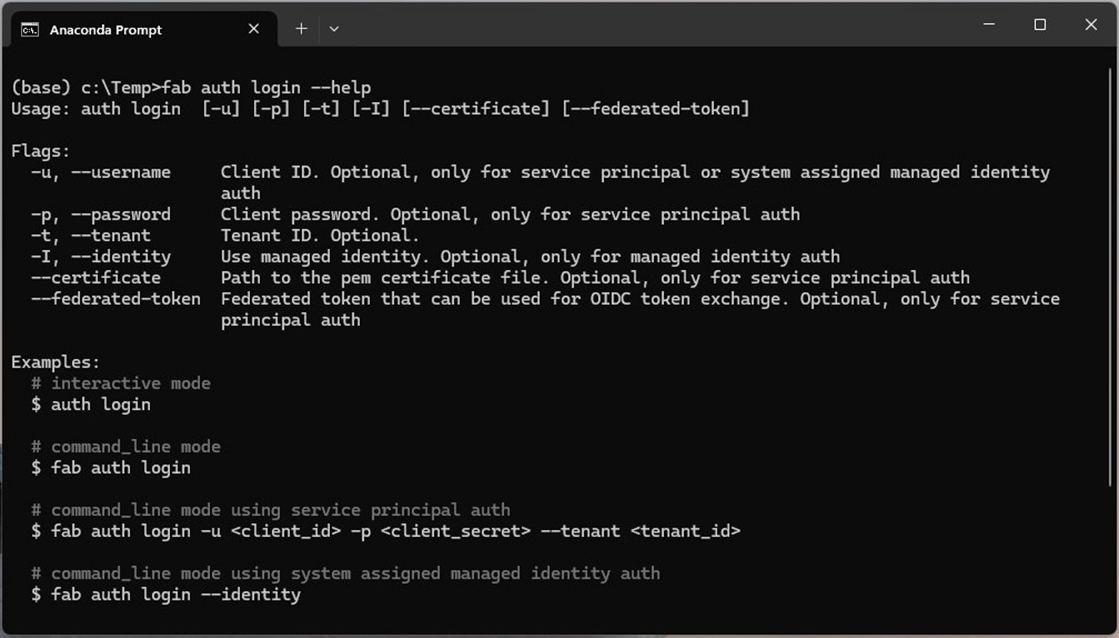

For each command, we can ask for additional help. The window below shows how to authenticate from a remote computer to Microsoft Fabric. Today, we are going to use the interactive method.

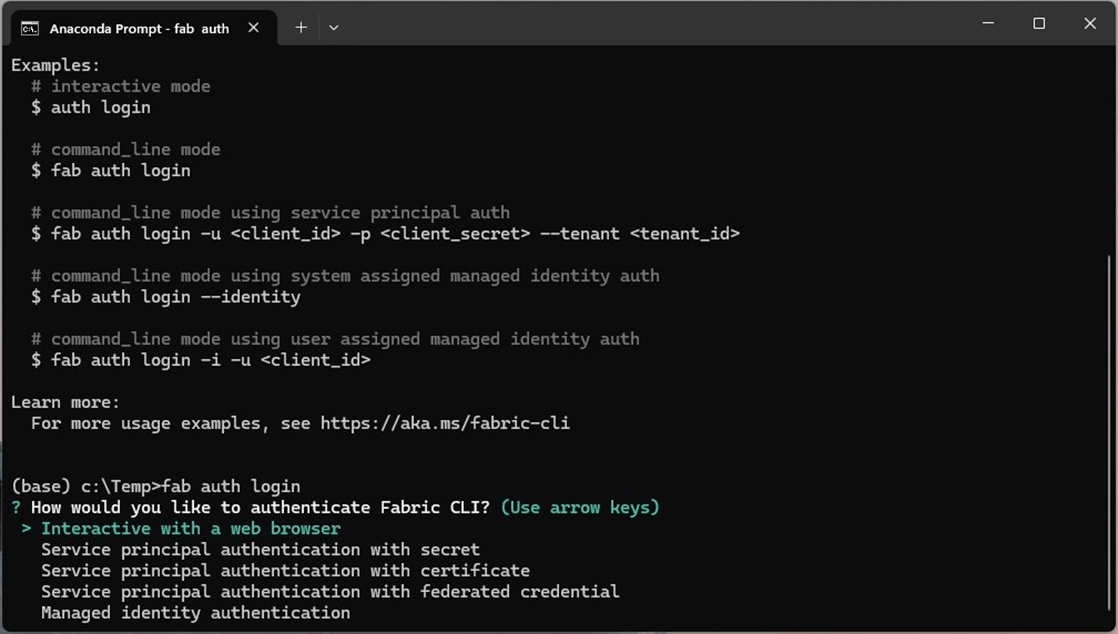

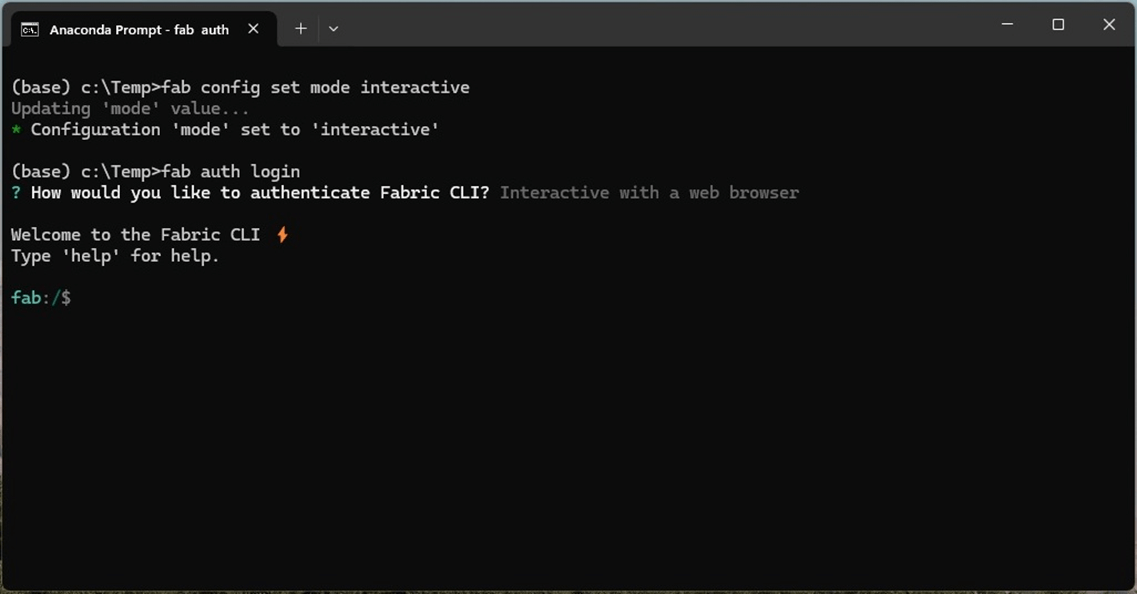

The “fab auth login” command prompts the user to choose an authentication method. In our case, we are going to use the web browser, which follows the typical login pattern of username, password, and multi-factor authentication.

To recap, installing and configuring the Microsoft Fabric Command Line Interface (CLI) is not hard.

Non-Interactive Mode

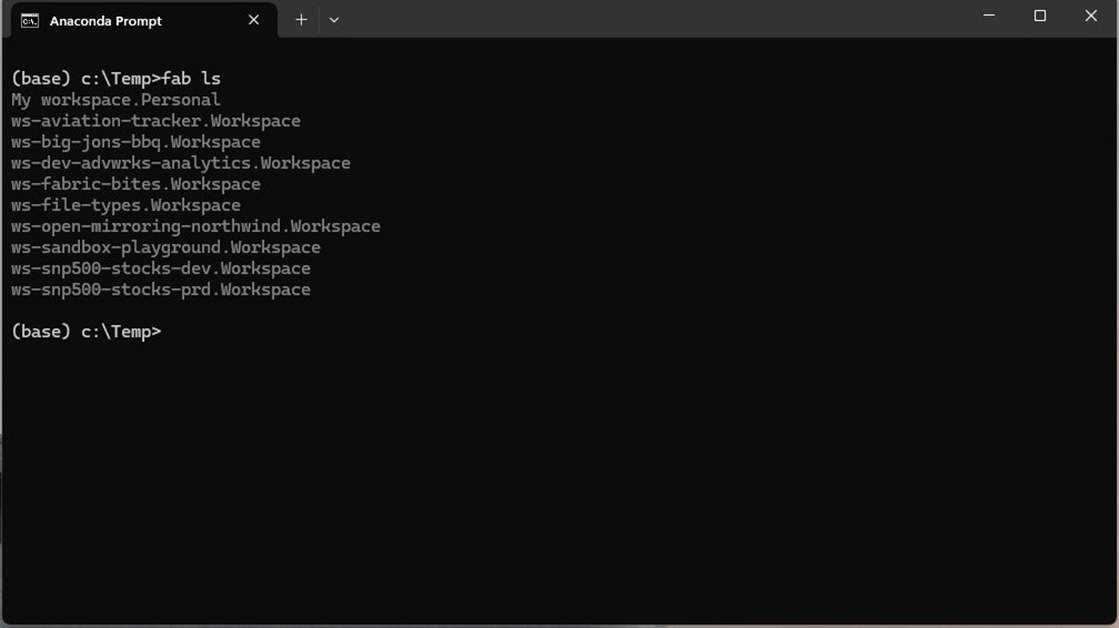

By default, the CLI runs in non-interactive mode and does not keep track of prior commands, so each command stands on its own. Let us see how we can use CLI commands to get details about the Aviation Tracker Lakehouse. The ls command lists details about Fabric objects. A listing at the root level of the tenant shows all the workspaces that we have access to.

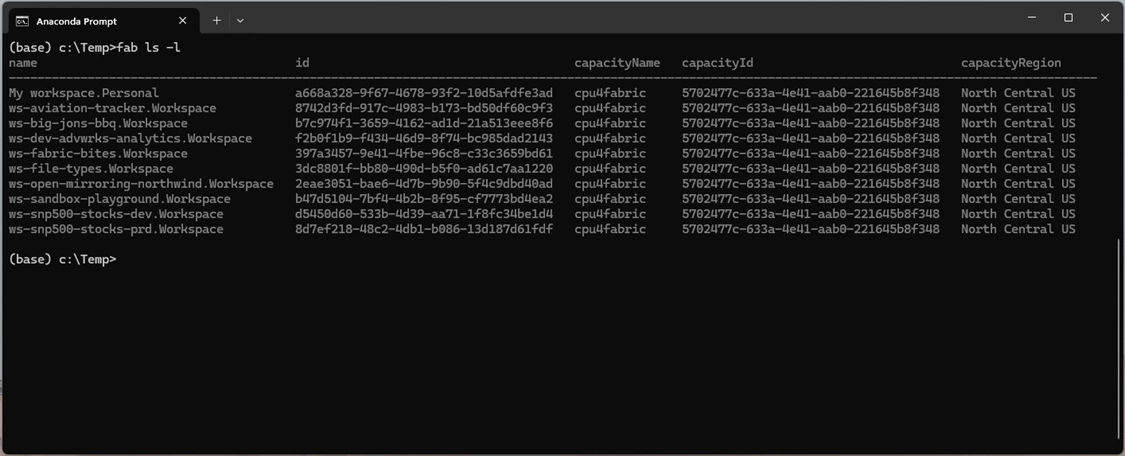

The addition of the -l switch displays additional information such as the workspace identifier and the capacity id for each workspace.

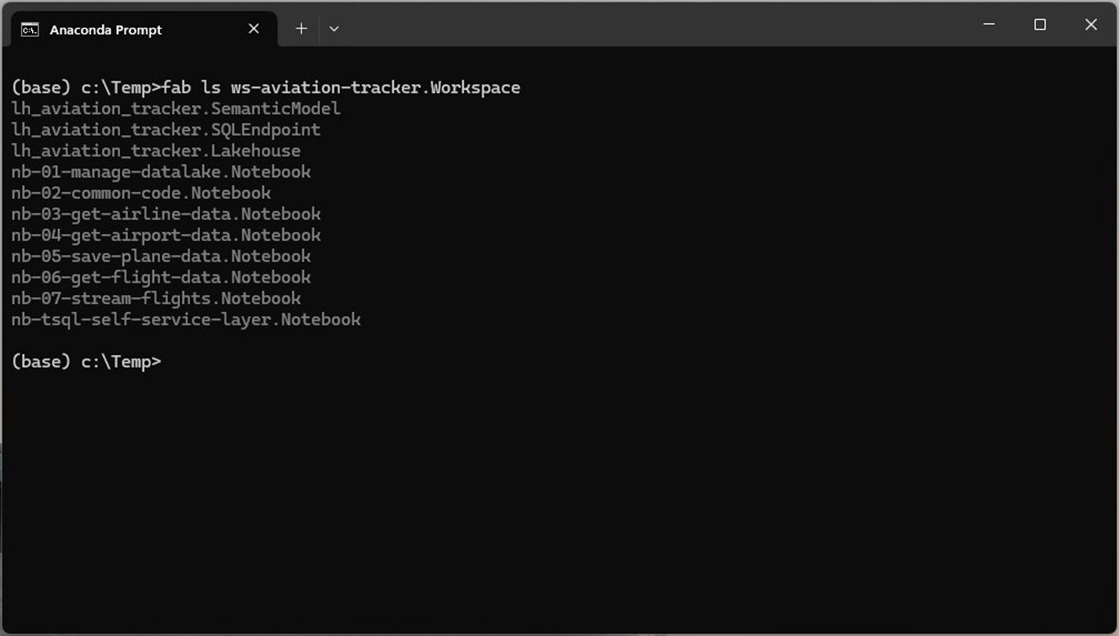

Because we are using the non-interactive interface of the Fabric CLI, every call must use a fully qualified path. For example, a listing of the “ws-aviation-tracker” workspace shows all objects in the workspace. This includes the Lakehouse, the Semantic Model, the SQL endpoint, and all the notebooks.

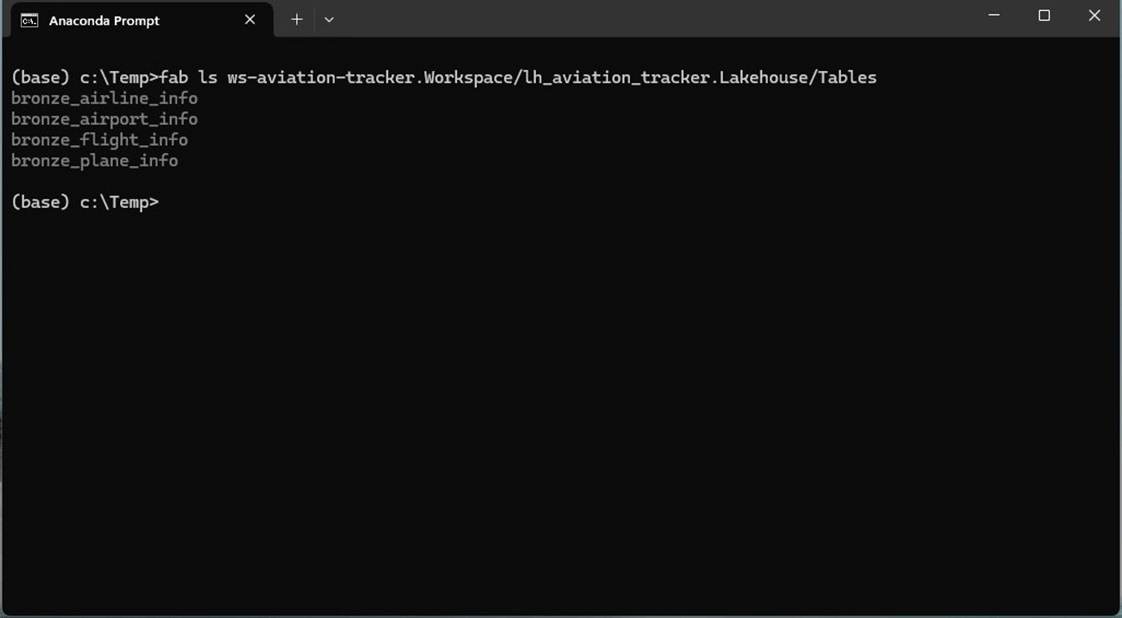

If we add the name of the Lakehouse and the Tables folder to the fully qualified name, we can see all the tables in the lakehouse. We have bronze quality data for airlines, airports, flights, and planes.

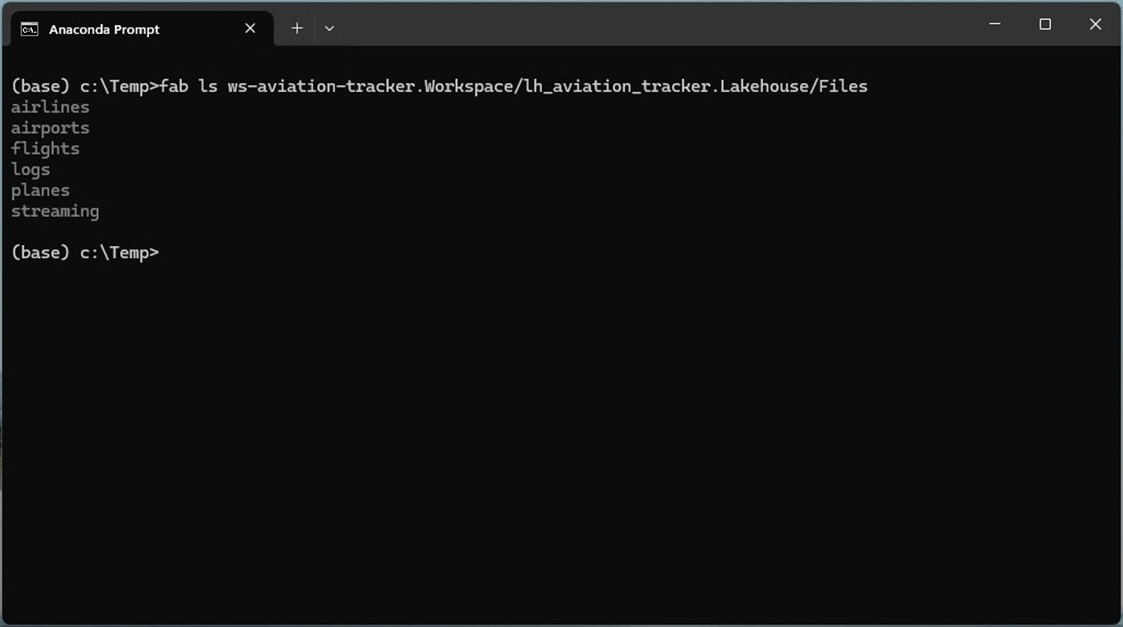
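Since every non-interactive call needs the full path, it can help to build those paths in a script instead of typing them. The sketch below assumes the CLI's `<name>.<ItemType>` path convention (for example, `ws-aviation-tracker.Workspace`). The lakehouse name `lh_aviation_tracker` is a hypothetical placeholder because the article does not spell it out, and `fab_path`/`fab_ls` are illustrative helper names.

```python
import subprocess
from typing import Optional, Sequence


def fab_path(workspace: str, item: Optional[str] = None,
             item_type: str = "Lakehouse", folders: Sequence[str] = ()) -> str:
    """Build a fully qualified path such as ws.Workspace/lh.Lakehouse/Tables."""
    parts = [f"{workspace}.Workspace"]
    if item is not None:
        parts.append(f"{item}.{item_type}")
    parts.extend(folders)
    return "/".join(parts)


def fab_ls(path: str, long: bool = False) -> str:
    """Run 'fab ls' (adding -l when long=True) and return its text output."""
    args = ["fab", "ls", "-l", path] if long else ["fab", "ls", path]
    result = subprocess.run(args, check=True, capture_output=True, text=True)
    return result.stdout


if __name__ == "__main__":
    # 'lh_aviation_tracker' is a hypothetical lakehouse name.
    tables = fab_path("ws-aviation-tracker", "lh_aviation_tracker", folders=["Tables"])
    print(tables)  # ws-aviation-tracker.Workspace/lh_aviation_tracker.Lakehouse/Tables
```

With the CLI on the PATH, `print(fab_ls(tables, long=True))` would then list the tables with the extra detail that the -l switch provides.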

If we change the root folder in the lakehouse to Files, we can see the different file folders.

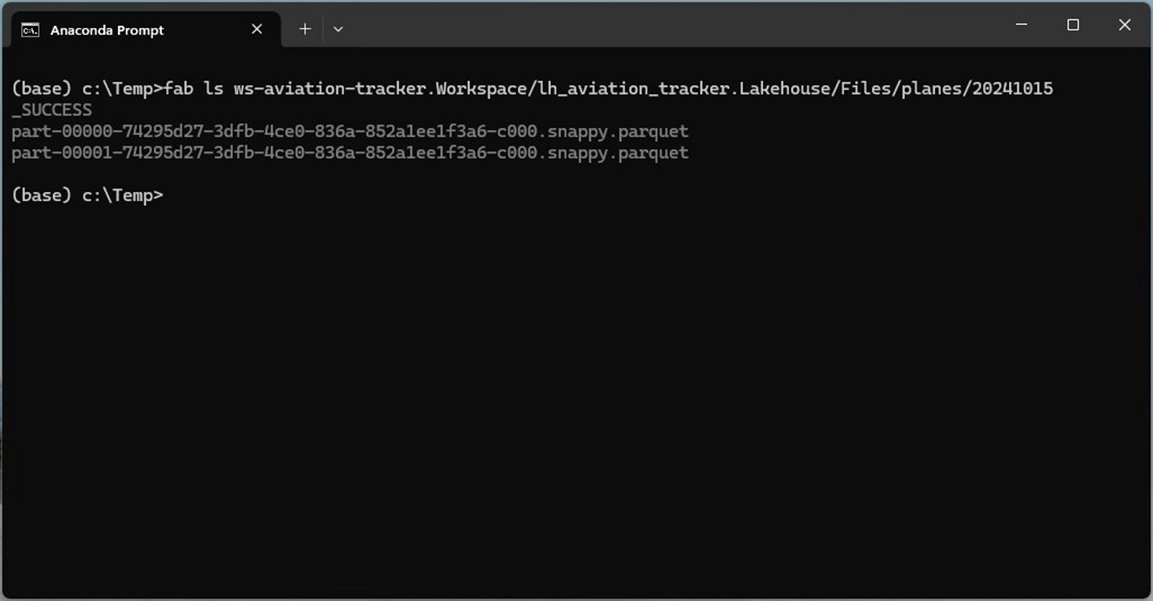

The plane folder contains dimensional data about airplanes. We can see that a download of this information from the Internet (Web) was stored in a folder named 20241015. The folder name is the download date in sortable (yyyymmdd) form, and the folder contains a parquet file that has two partitions.
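Because the download folders are named with a sortable yyyymmdd date, picking the most recent download only requires parsing the folder names. A small sketch; apart from 20241015, the folder names used below are illustrative, and `latest_download` is a hypothetical helper.

```python
from datetime import datetime
from typing import Iterable, Optional


def latest_download(folder_names: Iterable[str]) -> Optional[str]:
    """Return the newest folder named as a yyyymmdd date, or None if there is none."""
    dates = []
    for name in folder_names:
        if len(name) != 8 or not name.isdigit():
            continue  # skip folders that are not date-named
        try:
            dates.append(datetime.strptime(name, "%Y%m%d"))
        except ValueError:
            continue  # eight digits but not a real date, e.g. 20241345
    return max(dates).strftime("%Y%m%d") if dates else None


if __name__ == "__main__":
    print(latest_download(["20240901", "20241015", "readme"]))  # 20241015
```

Since the names are zero-padded, a plain string sort would order them correctly too; parsing simply filters out folders that are not valid dates.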

In short, getting information about objects in the Microsoft Fabric ecosystem can be done with the ls command.

Downloading files

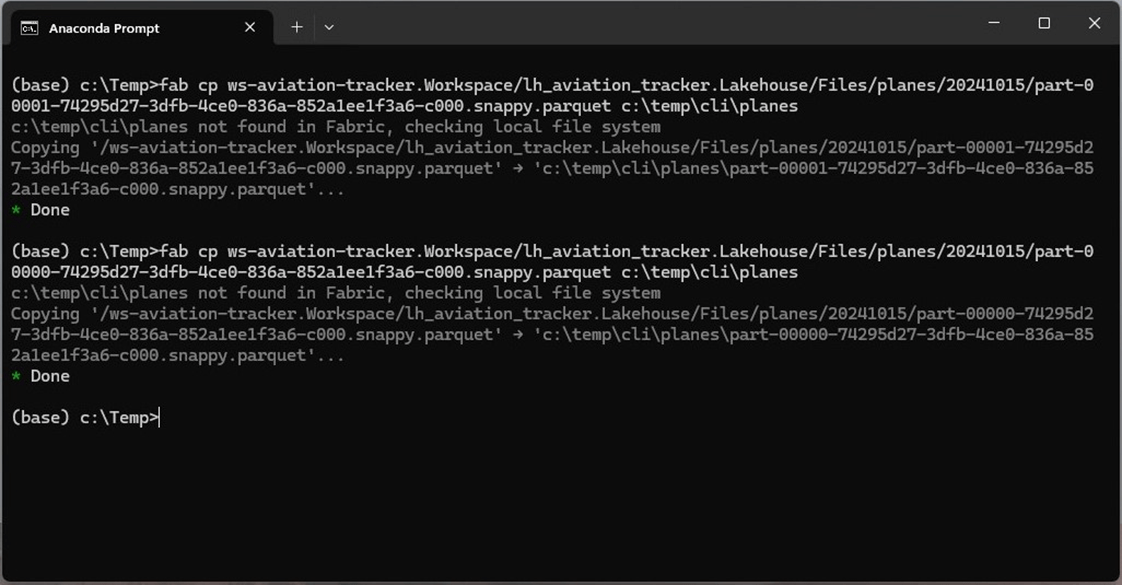

Just like in Linux, the copy command (cp) can be used to download files from Fabric to your local computer. If we specify two Fabric paths, the command copies files from one ADLS Gen2 storage location to another. The image below shows that we can download the plane parquet files to the local path “c:\temp\cli\planes”.

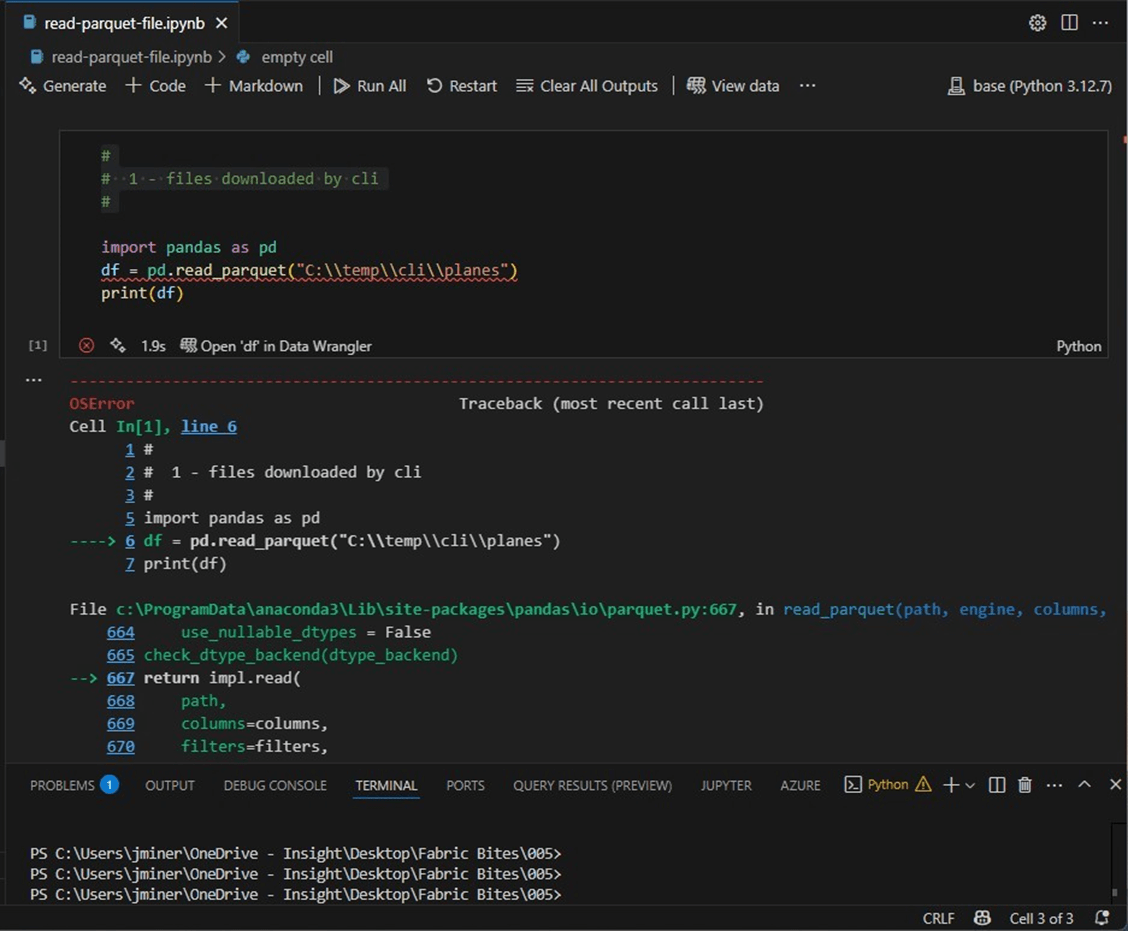

The quickest way to verify the integrity of the downloaded files is to write a quick program in VS Code. The image below shows a Jupyter notebook that uses the pandas library to read the files and display the data. Unfortunately, the command fails.

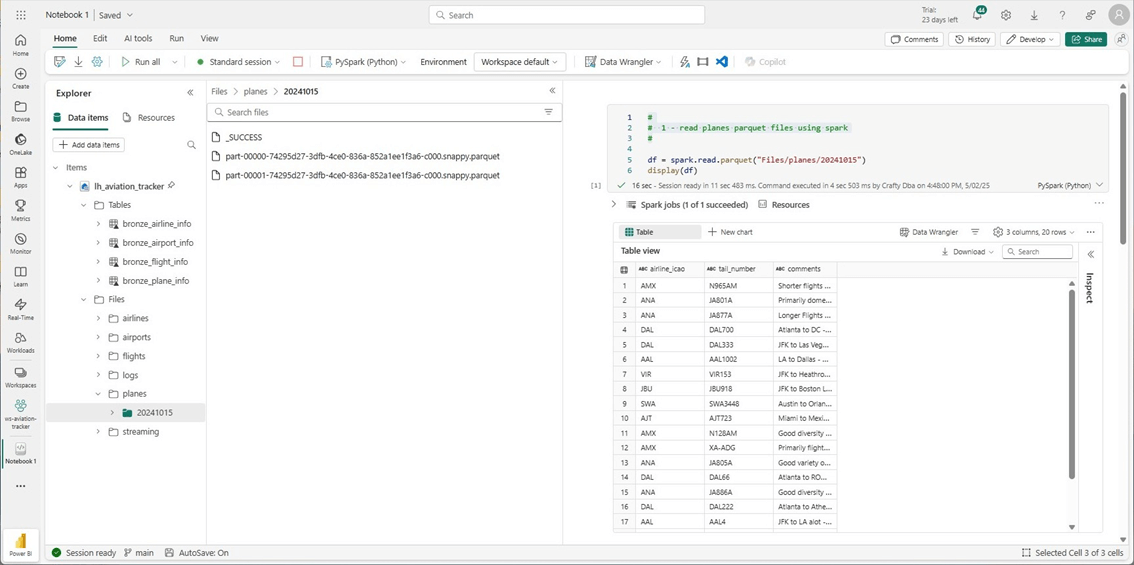

To find the root cause of the issue, we must investigate the problem. Are the source files in Fabric correct? The code below uses Spark to read the parquet files into a dataframe and display its contents. This code works perfectly.

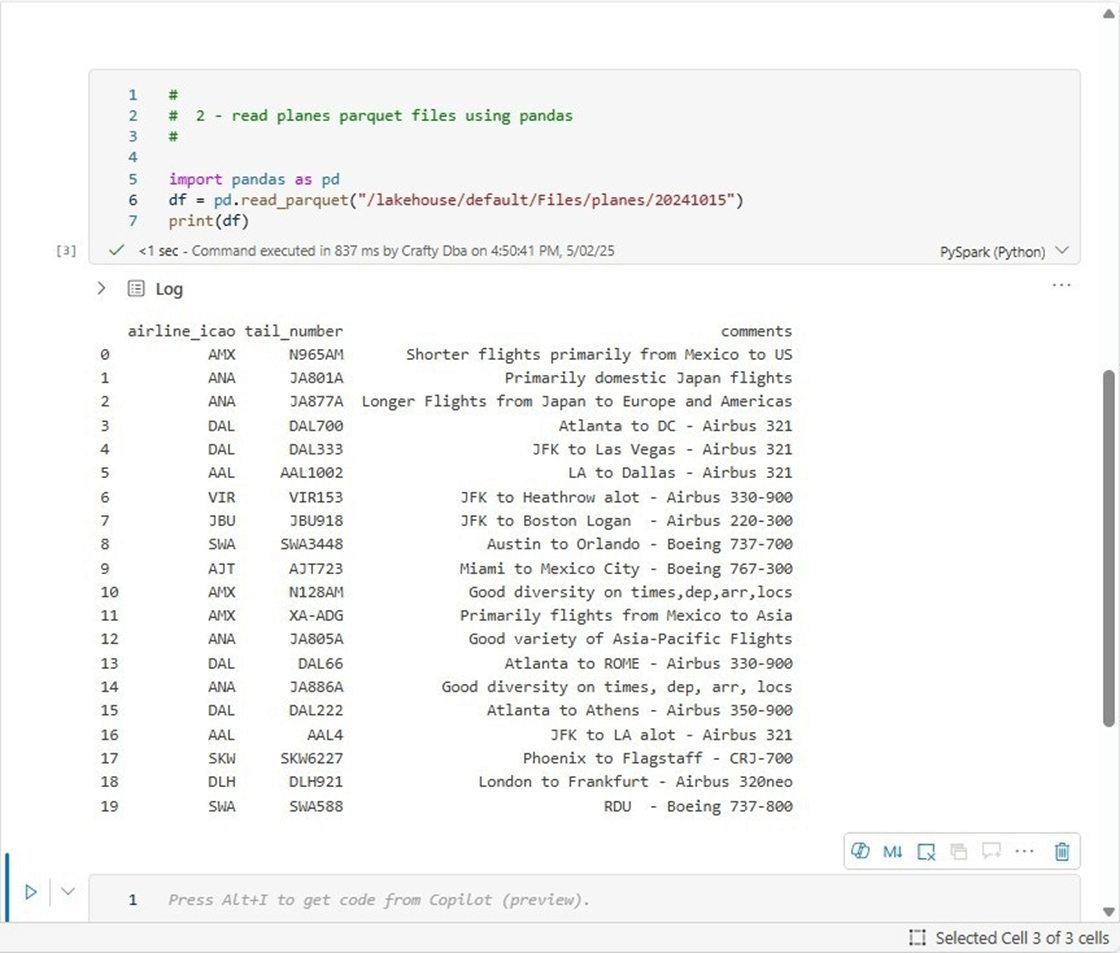

Let us repeat the test using the pandas library. The output below is correct.

At this point, the issue appears to be with the Fabric Command Line Interface. The copy command bug has been reported on the Fabric Ideas website as well as to the Microsoft product team. My educated guess is that the download does not preserve the file in its binary format. The opposite of downloading files is uploading files, and we will explore that functionality with a text file in the next section.
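One cheap way to test the guess that the download damages binary data is to check the Parquet framing: a valid Parquet file begins and ends with the 4-byte magic string `PAR1`. The sketch below flags downloaded files that fail that check. Passing it does not prove a file is intact, but failing it shows the bytes no longer form a valid Parquet file. The helper names are hypothetical, and the folder is the download path from the article.

```python
import os
from pathlib import Path
from typing import List

PARQUET_MAGIC = b"PAR1"


def looks_like_parquet(path: Path) -> bool:
    """True if the file starts and ends with the 4-byte Parquet magic 'PAR1'."""
    # Smallest possible framing: header magic + 4-byte footer length + footer magic.
    if path.stat().st_size < 12:
        return False
    with path.open("rb") as fh:
        head = fh.read(4)
        fh.seek(-4, os.SEEK_END)
        tail = fh.read(4)
    return head == PARQUET_MAGIC and tail == PARQUET_MAGIC


def suspect_files(folder: Path) -> List[Path]:
    """Return the *.parquet files under folder that fail the magic-byte check."""
    if not folder.is_dir():
        return []
    return [p for p in sorted(folder.rglob("*.parquet")) if not looks_like_parquet(p)]


if __name__ == "__main__":
    # Download folder used in the article; adjust to your own path.
    for bad in suspect_files(Path(r"c:\temp\cli\planes")):
        print("Not a valid parquet file:", bad)
```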

Uploading files

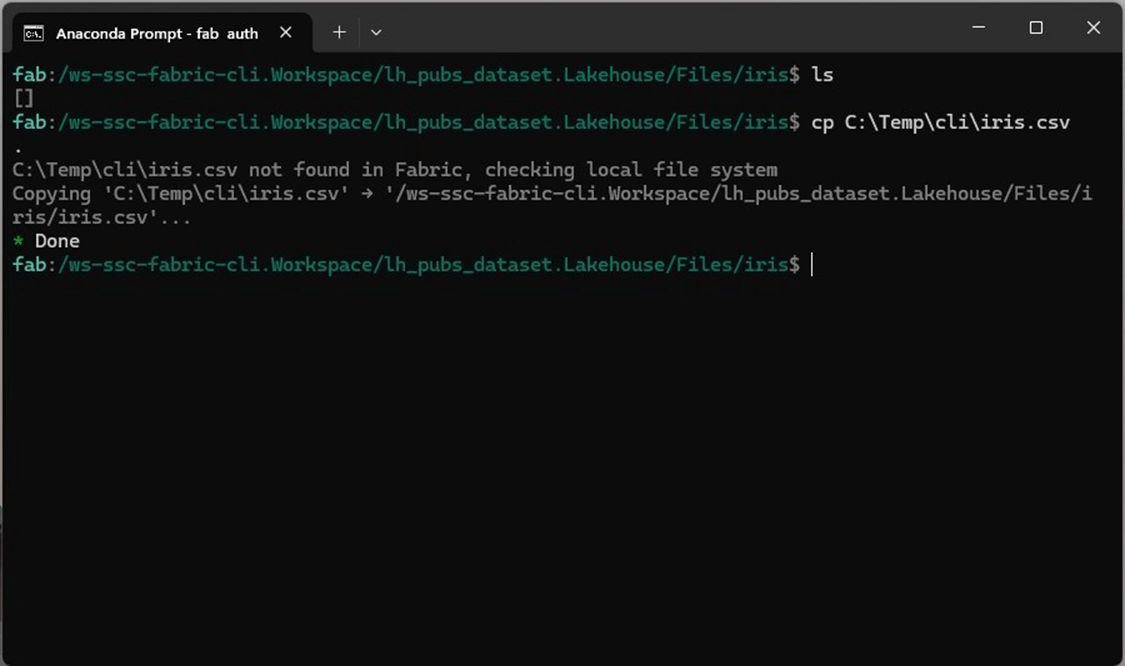

Again, the copy command (cp) can be used to upload files from your local computer to Microsoft Fabric. Fisher’s Iris dataset is very popular in machine learning. One can easily see that the petal and sepal width and length of the flowers can be used to cluster the data into three distinct groups by species. I have downloaded and stored the csv file in the c:\temp\cli directory. Additionally, I have a lakehouse named “lh_pubs_dataset” that is deployed in the “ws-ssc-fabric-cli” workspace. Since this is just a quick test, I am going to reuse this existing lakehouse.

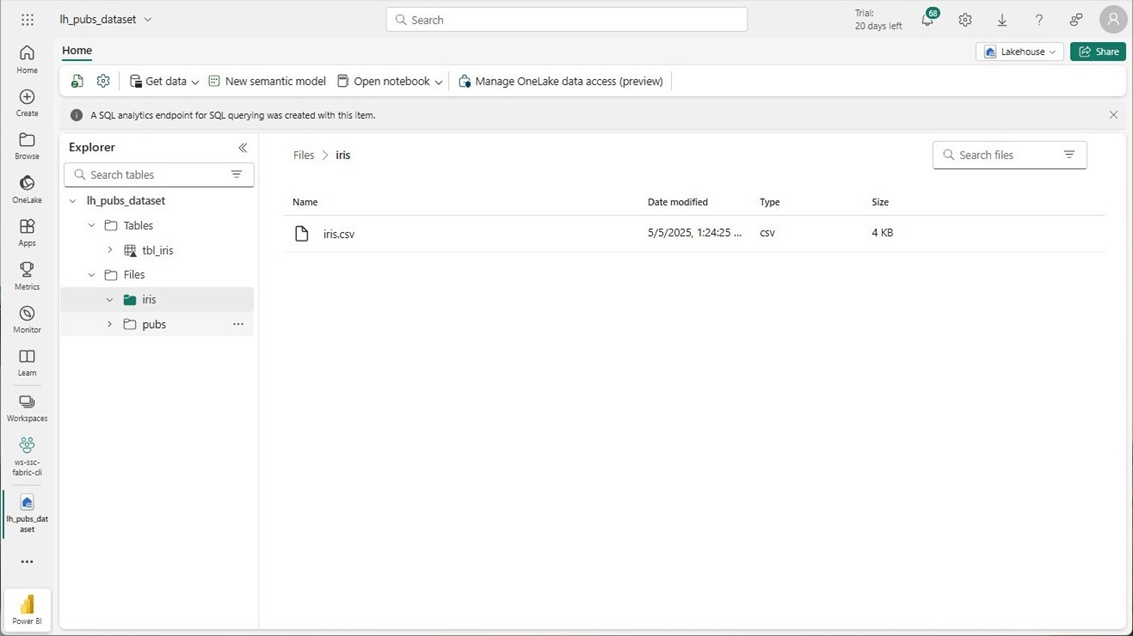

The above image shows the iris csv file has been uploaded to the iris folder. The image below shows the file that exists in the lakehouse folder named iris.

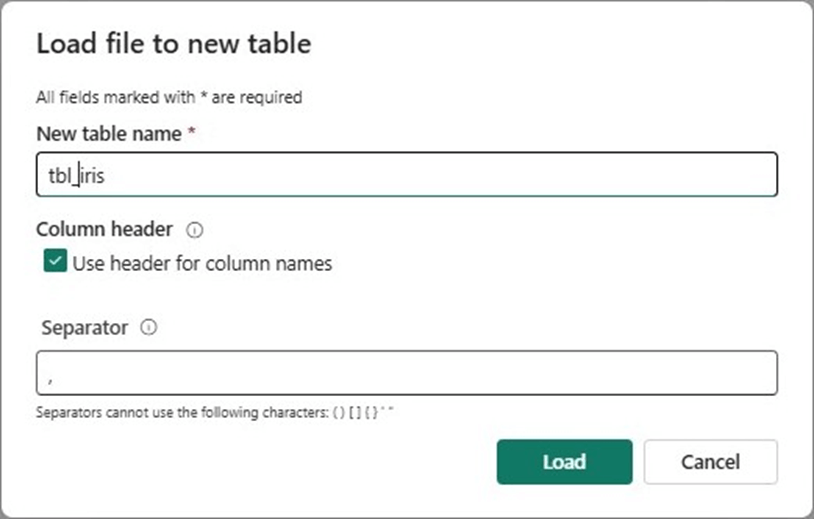

Spoiler alert: we are going to right-click on the csv file and load it into a table. The above image shows this has been done already. The image below shows the details on how to load a file into a table.

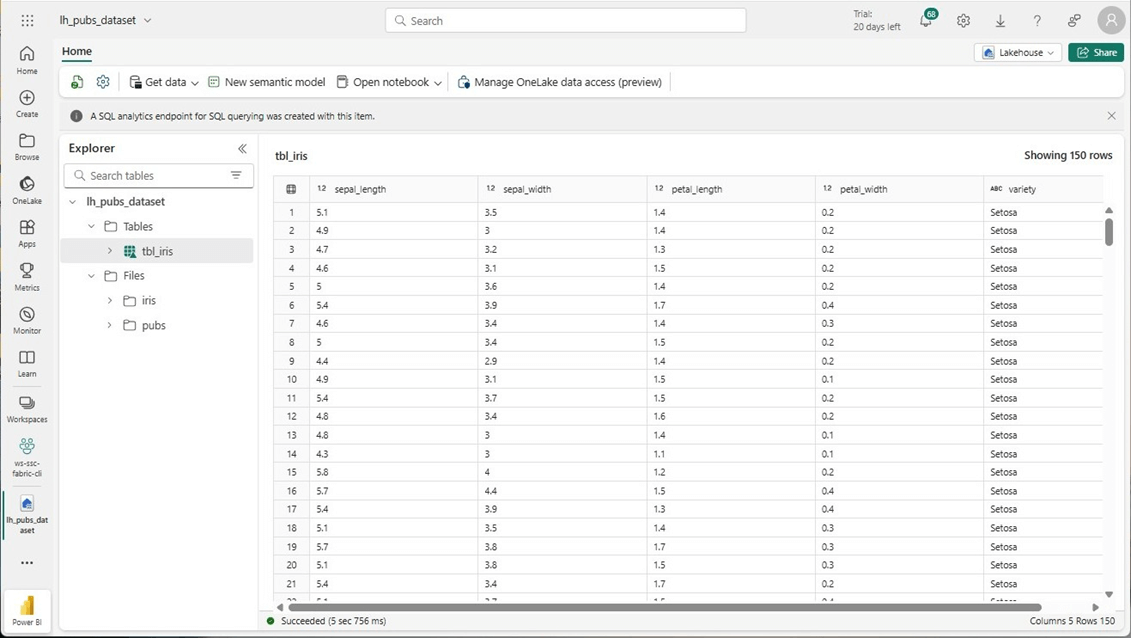

Finally, we want to view the data. We can click on the table to list the 150 rows in the iris dataset.

The copy command of the Fabric CLI seems to work fine with text files. I am looking forward to the bug fix from the Microsoft product team so that this functionality also works with binary files. Right now, Fabric natively supports loading both CSV and parquet files into tables. In the next section, we will explore the interactive version of the CLI.
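When the fix arrives, a round trip makes an easy regression test: upload a file with cp, download it again into a separate folder, and compare checksums. Below is a sketch of the comparison half using SHA-256 from the standard library; the upload and download are assumed to have been run separately with the CLI, and the helper names are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks (1 MiB by default) so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_bytes(original: Path, round_tripped: Path) -> bool:
    """True when the round-tripped copy is byte-for-byte identical to the original."""
    return sha256_of(original) == sha256_of(round_tripped)
```

The same check works for the csv file and for the parquet files. A byte-level comparison also catches subtle text changes, such as line endings being rewritten, that a quick visual inspection of the data could miss.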

Interactive Mode

The goal in the next two sections is to create a new workspace, assign a capacity to the workspace, create a new lakehouse, create a directory structure for the publisher tables, upload parquet files using Azure Storage Explorer, and create delta tables based upon the input files. We are using the sample pubs database from SQL Server, which was created in 1996. This section focuses on the workspace tasks.

The wonderful thing about interactive mode is that your location in the Fabric ecosystem is preserved between commands. Moving around the ecosystem is done with the change directory (cd) command. Use the config set command to switch the mode to interactive. Please note that the prompt changes when you are in this mode.

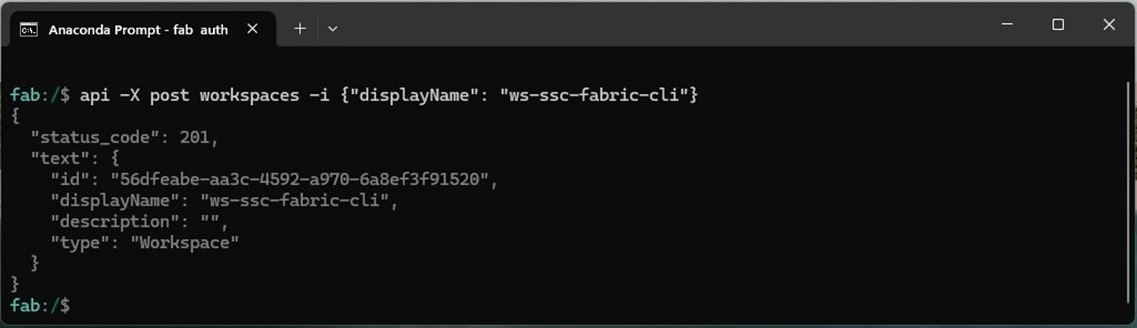

I did not realize that a create command existed, since its documentation is hidden under the examples. The old-school technique is to call the REST API with the correct inputs. The api command can be used with the correct method and URL path. The image below shows a POST call to create a new workspace named “ws-ssc-fabric-cli.” Please see the documentation for details on creating a workspace.

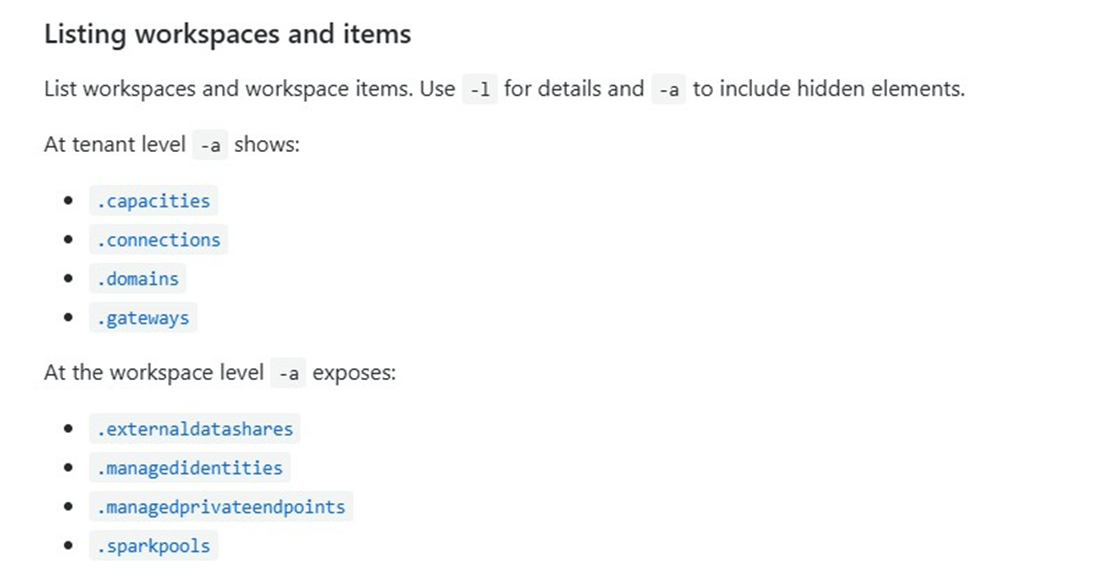
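The request itself is small: a POST to the `workspaces` endpoint with a JSON body carrying the display name. The sketch below only builds the method, endpoint, and body; it assumes the Fabric REST API's Create Workspace field names (`displayName`, optional `description`) and that `fab api` takes the method via `-X` and the body via `-i`, so please confirm both against the documentation before relying on them. The helper name is hypothetical.

```python
import json
from typing import Optional, Tuple


def create_workspace_request(name: str, description: Optional[str] = None) -> Tuple[str, str, str]:
    """Return (method, endpoint, JSON body) for the Fabric 'Create Workspace' API."""
    body = {"displayName": name}
    if description:
        body["description"] = description
    # Endpoint is relative to https://api.fabric.microsoft.com/v1/
    return "post", "workspaces", json.dumps(body)


if __name__ == "__main__":
    method, endpoint, body = create_workspace_request("ws-ssc-fabric-cli")
    # Assumption: 'fab api' takes the HTTP method via -X and the body via -i.
    print(" ".join(["fab", "api", "-X", method, endpoint, "-i", f"'{body}'"]))
```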

There are some hidden objects that can be listed at the tenant and workspace levels. Please see the image below for details.

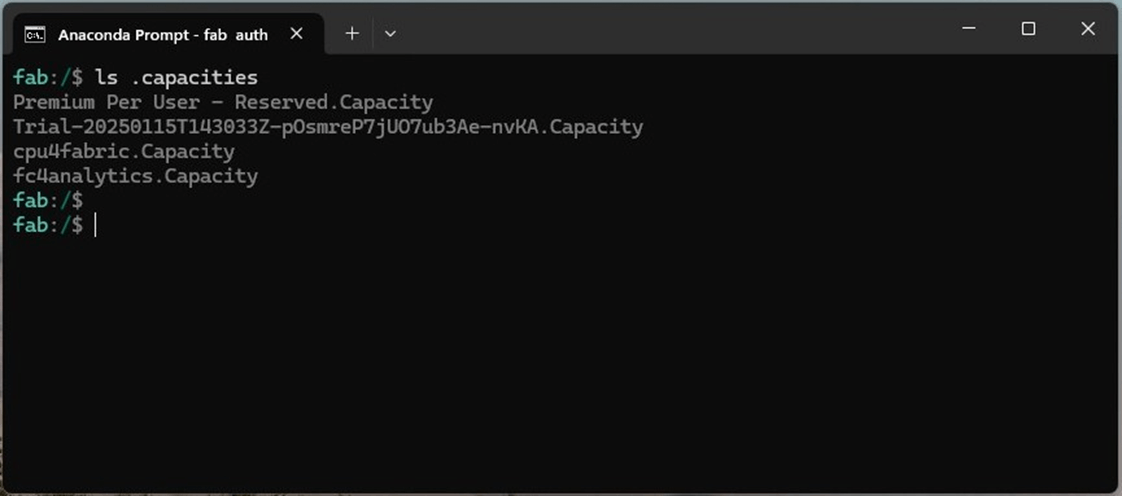

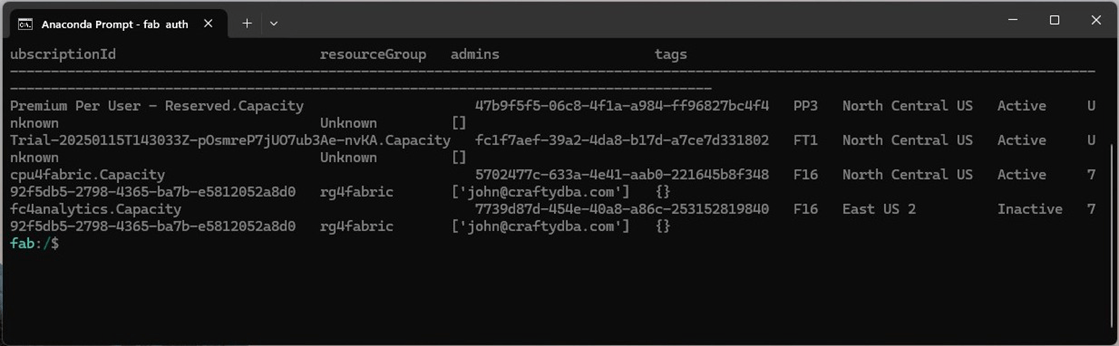

We want to get details about the “cpu4fabric” capacity. The image below shows two dedicated capacities.

We can use the -l option to get details such as the capacity id. We will need the cpu4fabric capacity id and the ws-ssc-fabric-cli workspace id to make the REST API call.

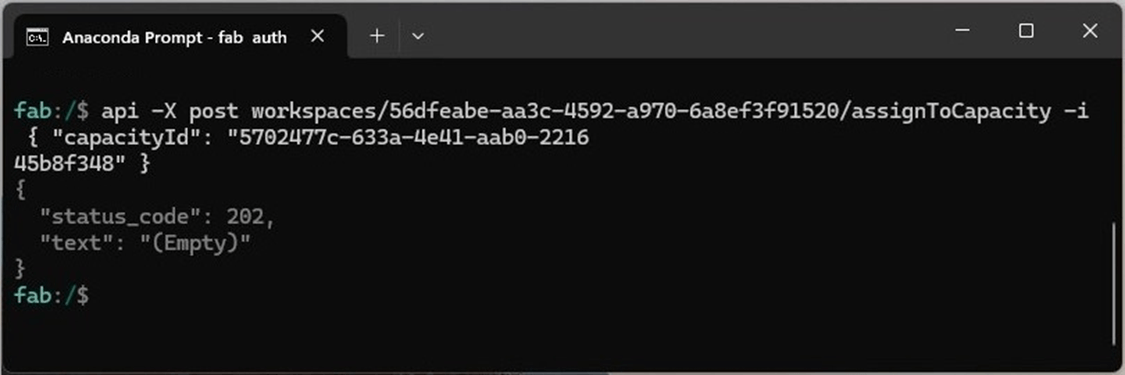

The image above shows the details of each capacity. The image below shows a call to the Assign To Capacity REST API. A return code of 202 means the operation has been submitted (accepted). Please see the documentation for details on assigning a capacity to a workspace.

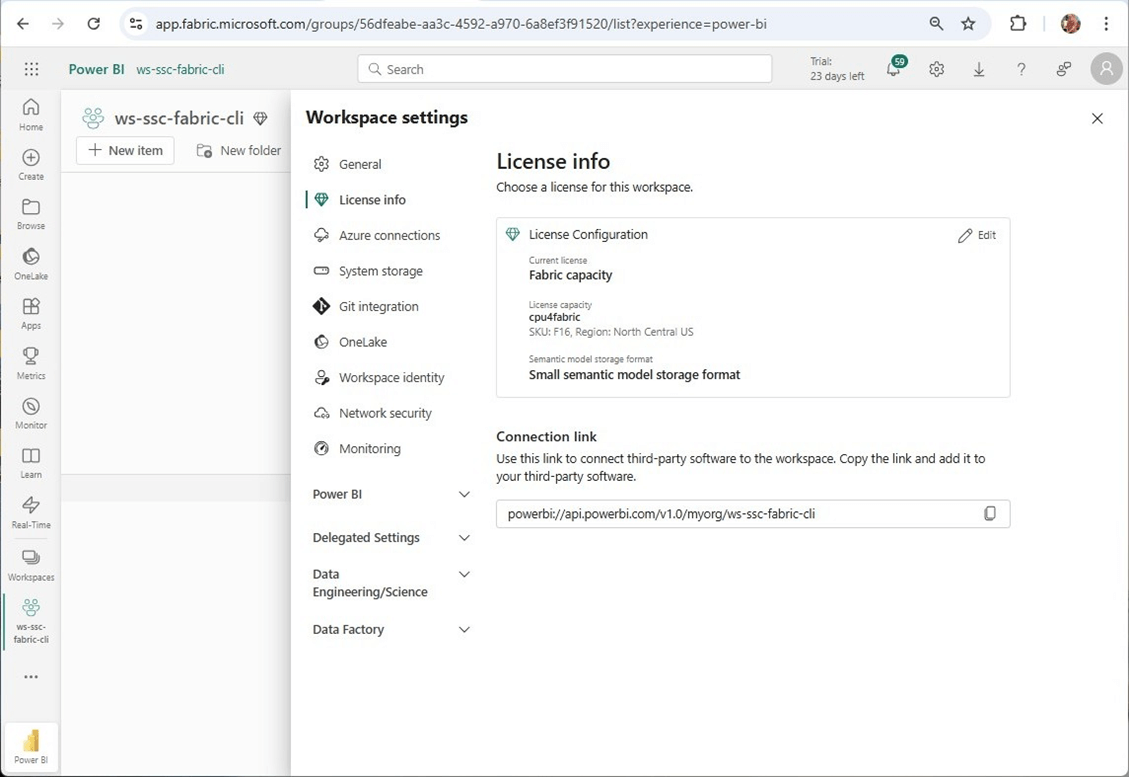
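The assign call needs exactly the two identifiers gathered with `ls -l`: the workspace id goes in the URL and the capacity id goes in the body. A sketch that builds the request and rejects malformed ids before anything is sent; the endpoint and `capacityId` field follow my reading of the Assign To Capacity reference, and the ids in any example are placeholders.

```python
import json
import uuid
from typing import Tuple


def assign_capacity_request(workspace_id: str, capacity_id: str) -> Tuple[str, str, str]:
    """Return (method, endpoint, JSON body) for 'Workspaces - Assign To Capacity'."""
    # uuid.UUID raises ValueError on a malformed id; str() normalizes it to lowercase.
    ws = str(uuid.UUID(workspace_id))
    cap = str(uuid.UUID(capacity_id))
    endpoint = f"workspaces/{ws}/assignToCapacity"
    return "post", endpoint, json.dumps({"capacityId": cap})
```

As noted above, a 202 response only means the request was accepted; checking the workspace settings afterwards confirms that the assignment actually completed.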

We can go to the licensing information section of the workspace settings to validate that the capacity was assigned.

Why did we assign a capacity to the workspace? We cannot do any data engineering in Fabric without a capacity, whereas Power BI reports can be created with only a Premium Per User (PPU) or Pro license.

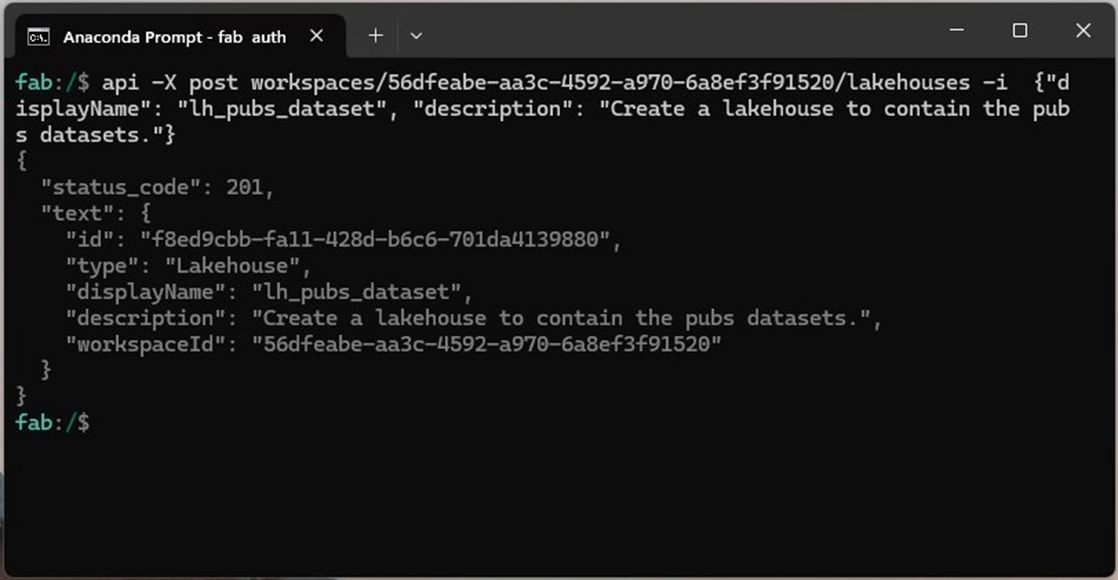

Managing a Lakehouse

The first step is to deploy the lakehouse. Again, there is a create command that is part of the Fabric CLI, but for now we are going to call the REST API to create the lakehouse. The body (input) is a JSON document that contains the name and the description of the lakehouse. Please see the documentation for details and the image below for a sample execution. After executing the command, we have a lakehouse named lh_pubs_dataset.

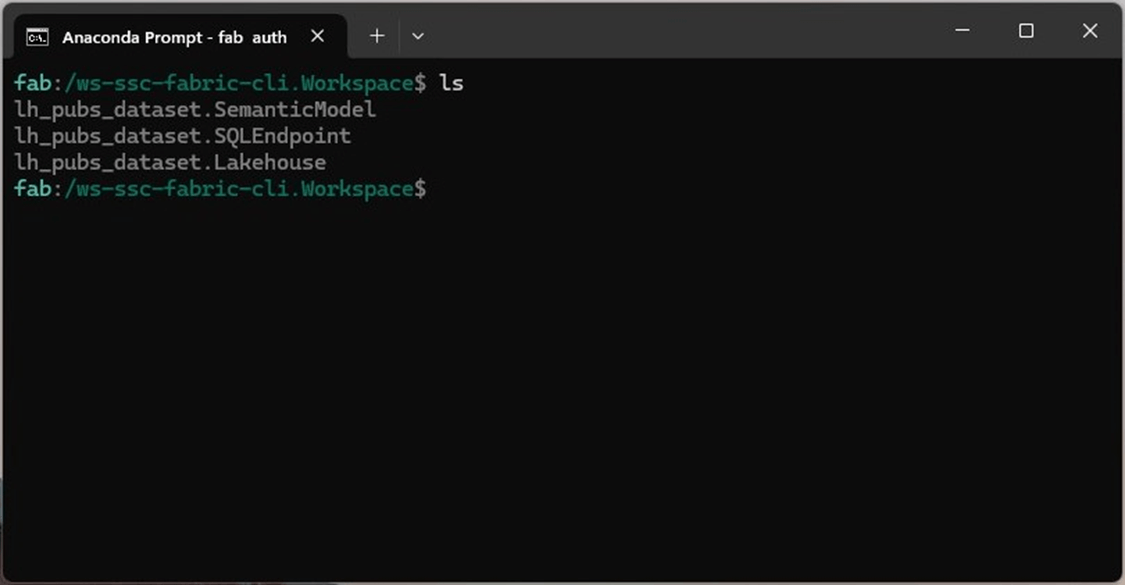
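The lakehouse call follows the same pattern as the workspace call, except the endpoint is scoped to the workspace. A minimal sketch, assuming the Create Lakehouse endpoint `workspaces/{workspaceId}/lakehouses` with `displayName` and `description` fields; the workspace id and description text below are placeholders, and the helper name is hypothetical.

```python
import json
from typing import Tuple


def create_lakehouse_request(workspace_id: str, name: str, description: str = "") -> Tuple[str, str, str]:
    """Return (method, endpoint, JSON body) for the Fabric 'Create Lakehouse' API."""
    body = {"displayName": name}
    if description:
        body["description"] = description
    return "post", f"workspaces/{workspace_id}/lakehouses", json.dumps(body)


if __name__ == "__main__":
    # Placeholder workspace id and hypothetical description text.
    print(create_lakehouse_request("00000000-0000-0000-0000-000000000000",
                                   "lh_pubs_dataset", "SQL Server pubs sample data"))
```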

Use the change directory (cd) command to move to the new workspace and list the objects it contains. We can see in the image below that we have a lakehouse, a semantic model, and the SQL endpoint.

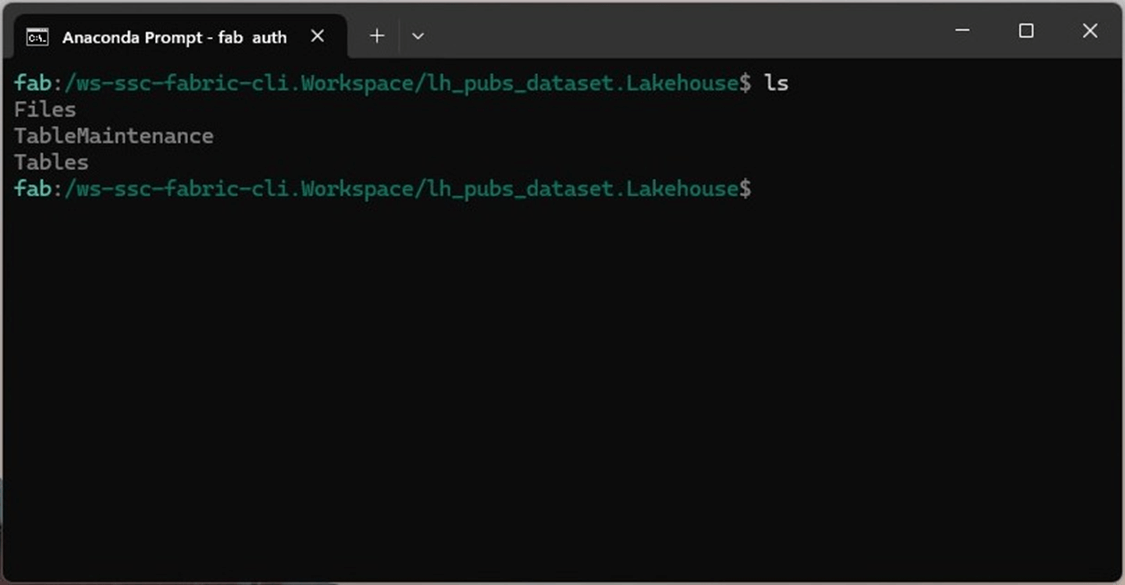

If we drill into the lh_pubs_dataset object, we can see three items that are part of every lakehouse: the Files, Tables, and Table Maintenance objects.

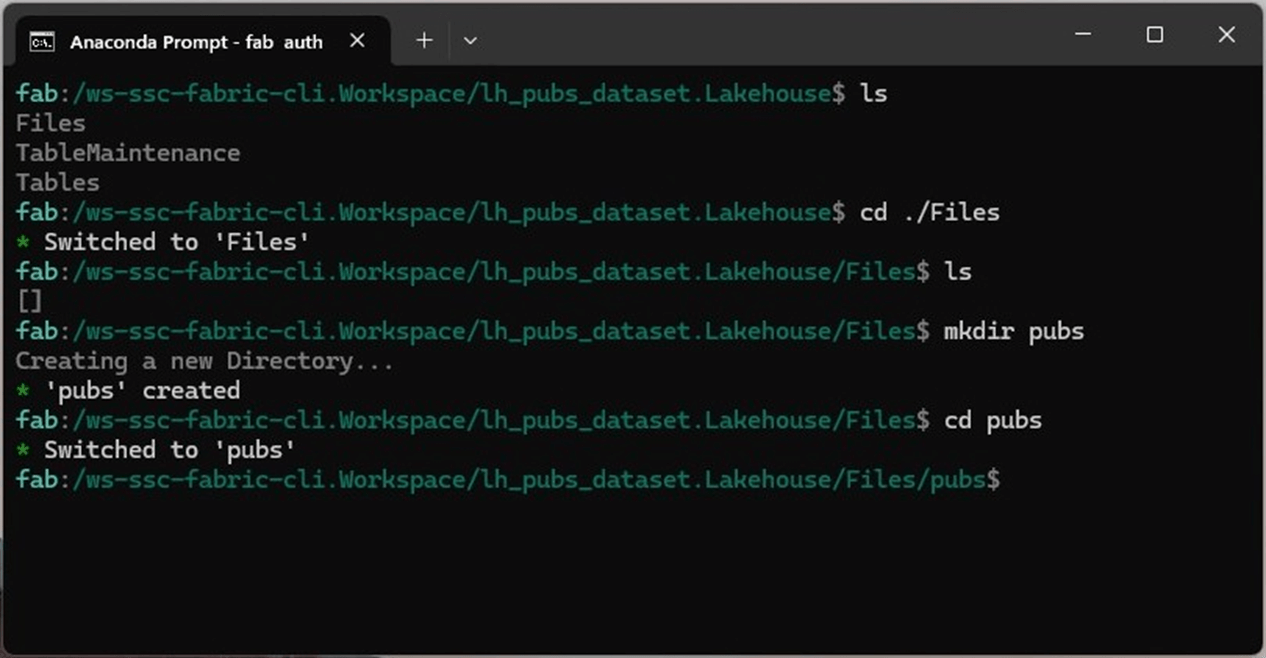

We are going to create a main folder under Files called pubs. The mkdir Fabric CLI command is an alias for create.

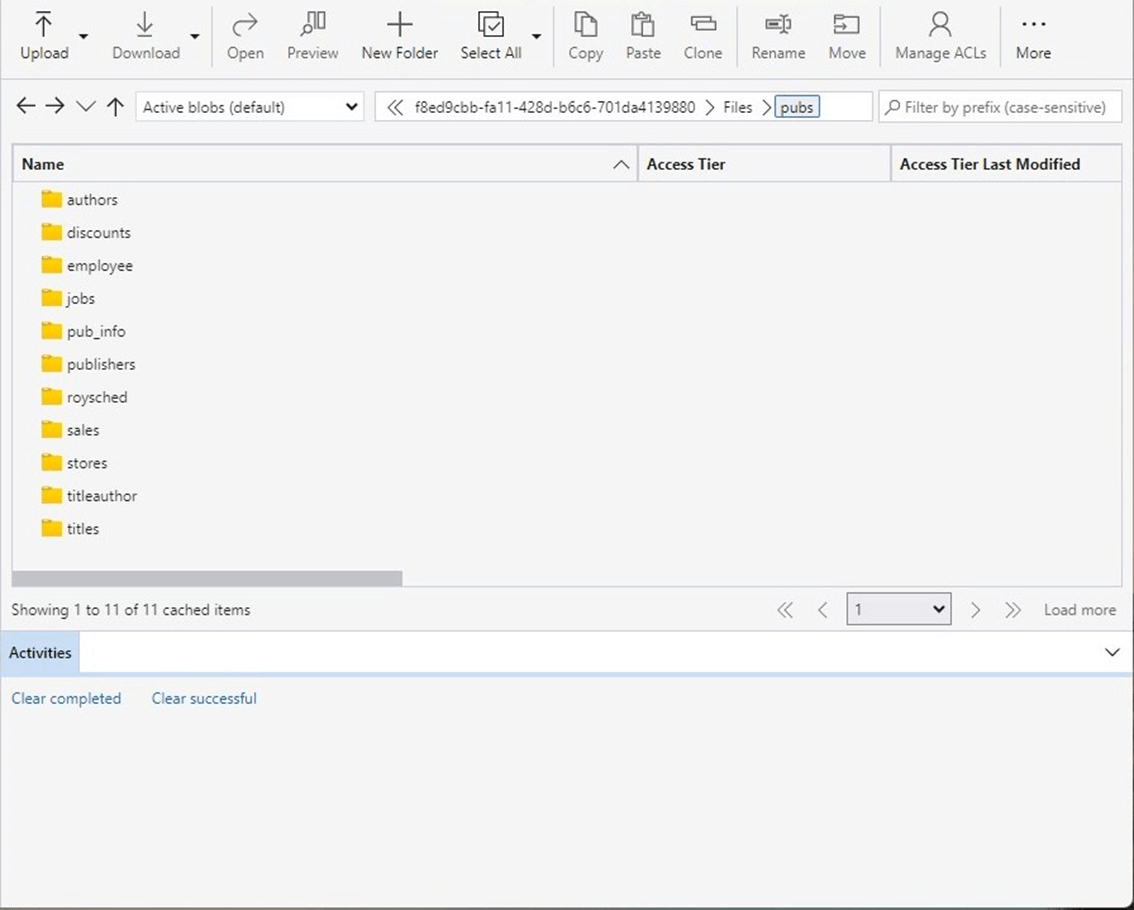

Since we know that binary copy in the Fabric CLI is currently buggy, we are going to use Azure Storage Explorer to upload a predefined folder structure that contains parquet files for each table.

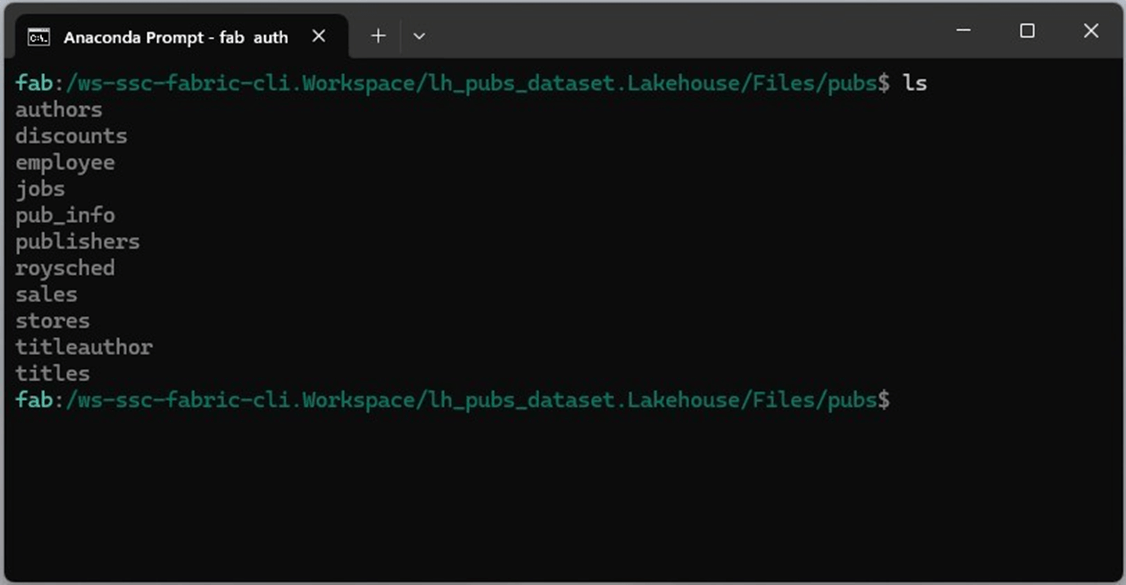

If we do a listing of the pubs directory, we can see all the folders have been uploaded.

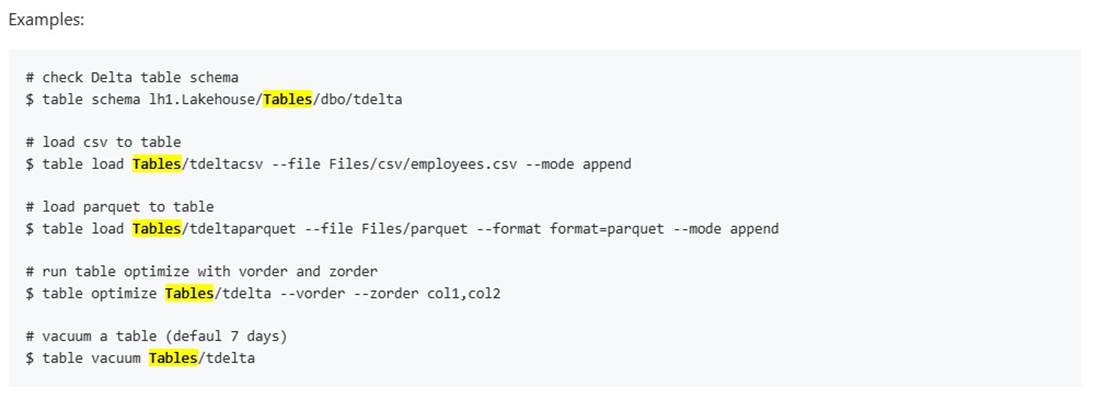

The last step is to create tables based upon the parquet files. Looking at the Fabric CLI documentation shown in the image below, we should be able to use the syntax from the third example.

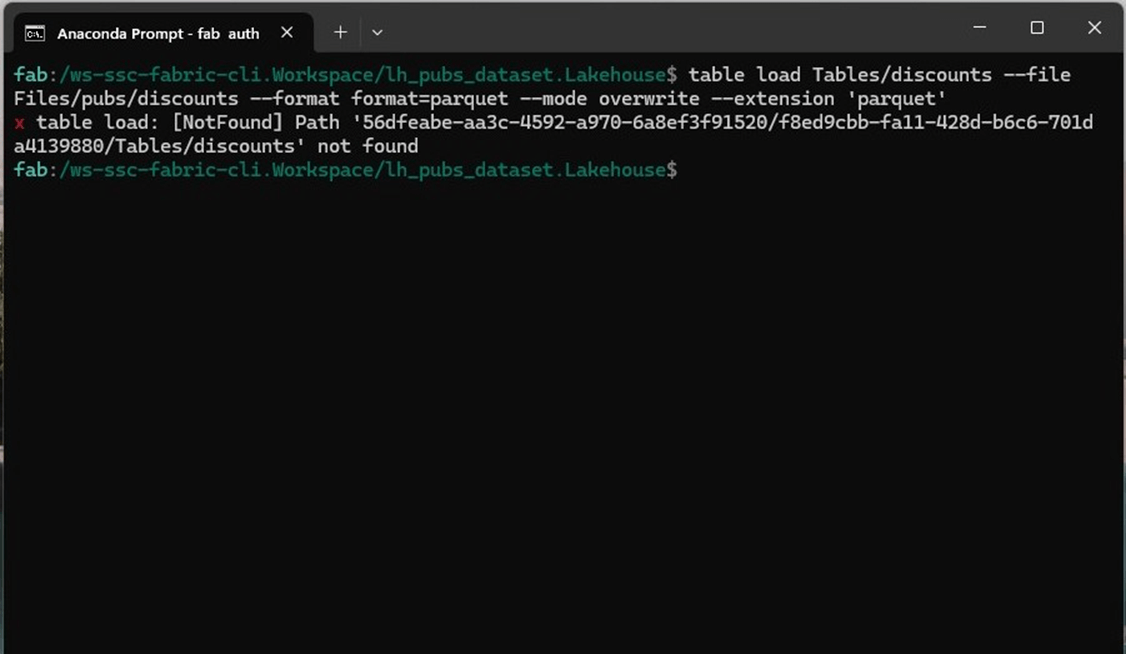

Unfortunately, I have discovered another bug. The load table bug has been reported to the Fabric Ideas website as well as the Microsoft product team.

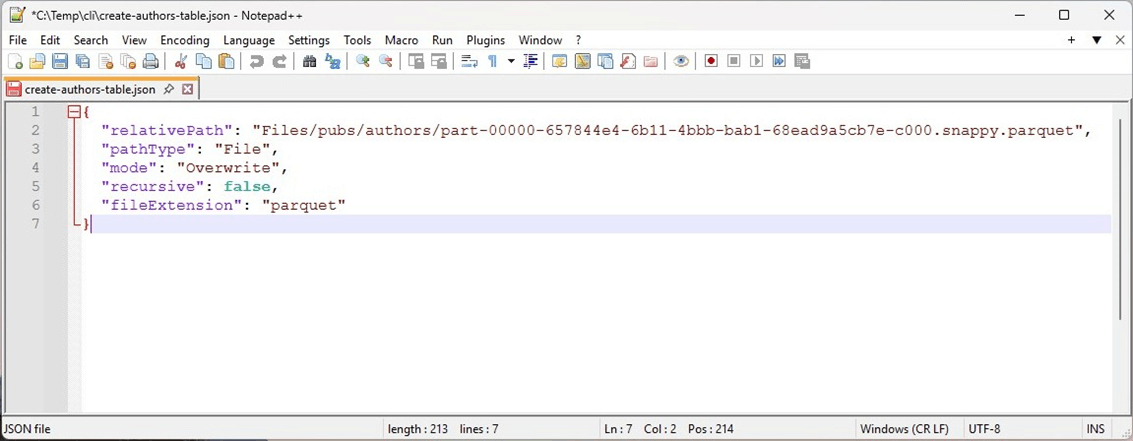

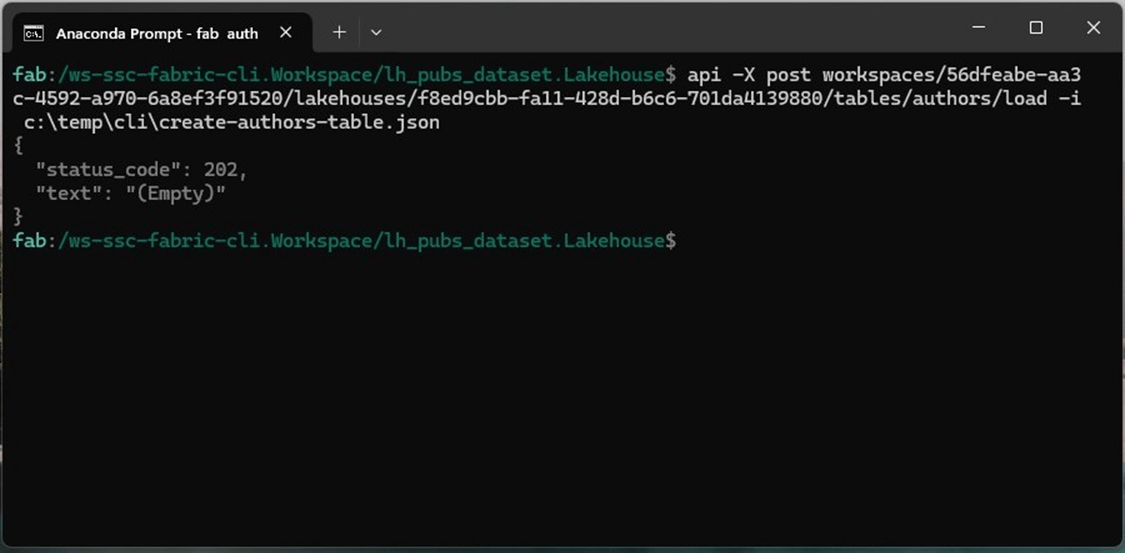

The command works only if the table already exists. Let us use the api command to create a workaround to load the authors table. Since the JSON document is quite large, I am going to store it in a file. The image below shows the details needed for the REST API call.

The command below submits a request to create the delta table based on the parquet files. The HTTP return code of 202 tells me that the command has been accepted.

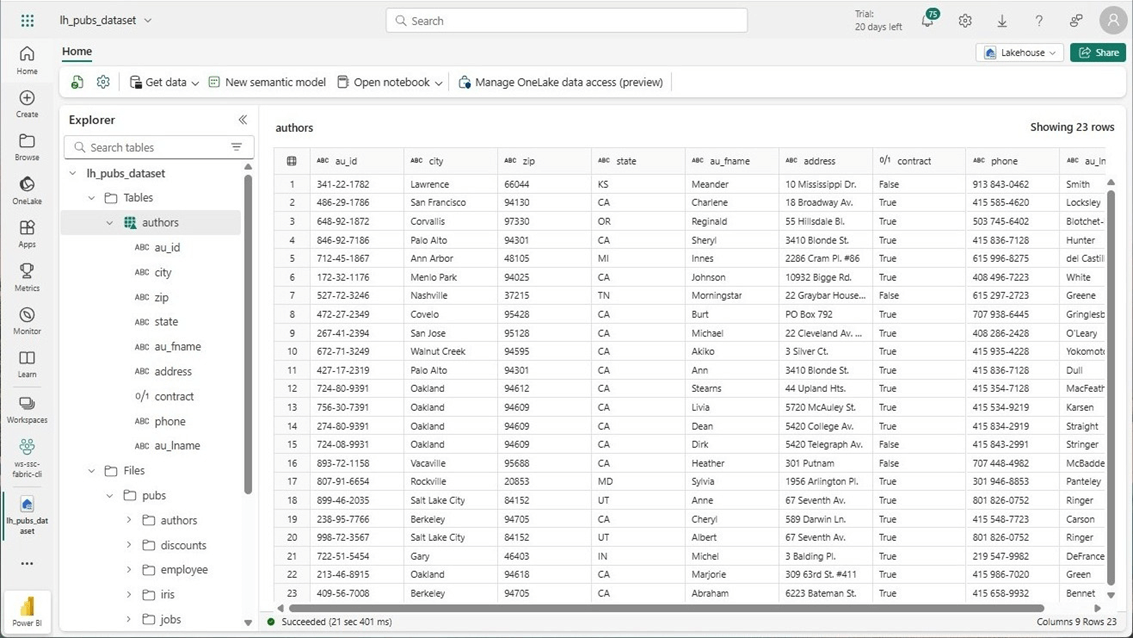
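The same workaround can be scripted: build the Load Table request for a table and save the body to a JSON file. The field names below (`relativePath`, `pathType`, `mode`, `formatOptions.format`) reflect my understanding of the Lakehouse Load Table REST reference and should be checked against it. The ids are placeholders, and `Files/pubs/authors` is a hypothetical folder path for the authors table.

```python
import json
from pathlib import Path
from typing import Any, Dict, Tuple


def load_table_request(workspace_id: str, lakehouse_id: str, table: str,
                       relative_path: str) -> Tuple[str, str, Dict[str, Any]]:
    """Return (method, endpoint, body) for the Lakehouse 'Load Table' API."""
    endpoint = f"workspaces/{workspace_id}/lakehouses/{lakehouse_id}/tables/{table}/load"
    body = {
        "relativePath": relative_path,           # path inside the lakehouse, e.g. under Files/
        "pathType": "Folder",                    # each pubs table is a folder of parquet files
        "mode": "Overwrite",                     # replace any existing rows
        "formatOptions": {"format": "Parquet"},
    }
    return "post", endpoint, body


def write_body(body: Dict[str, Any], target: Path) -> Path:
    """Save the request body as pretty-printed JSON, as the article does for large payloads."""
    target.write_text(json.dumps(body, indent=2), encoding="utf-8")
    return target


if __name__ == "__main__":
    # Placeholder ids and a hypothetical folder path for the authors table.
    method, endpoint, body = load_table_request(
        "00000000-0000-0000-0000-000000000000",
        "11111111-1111-1111-1111-111111111111",
        "authors",
        "Files/pubs/authors",
    )
    print(method, endpoint)
    print(json.dumps(body, indent=2))
```

Looping over the table names under the pubs folder would produce one request per table, which is the part of the deployment that currently has to be worked around.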

After a few minutes, the request is processed and the authors table appears. The image below shows the records in the table, viewed with the explorer in the Microsoft Fabric interface.

The commands to create and load a lakehouse exist in the Microsoft Fabric Command Line Interface. However, two existing bugs are showstoppers: we need the ability to upload and download binary files as well as to create tables. Since this is a preview, I expect the bugs to be fixed soon.

Summary

There are two tools that I will use in the future to manage Microsoft Fabric. If I need to write something that will be executed inside Fabric, I suggest using the Semantic Link and Semantic Link Labs libraries. On the other hand, the Microsoft Fabric CLI is a great tool for executing commands from outside the ecosystem.

Today, we created a new workspace, assigned an existing capacity to the workspace, created a new lakehouse, created a directory structure for the publisher tables, uploaded parquet files using Azure Storage Explorer, and created delta tables based upon the input files. This is a typical deployment pattern for an existing system that has dimensional data. During the exploration, a couple of bugs were discovered in the preview release of the product. Once these bugs are squashed, the Microsoft Fabric CLI will be ready to automate deployments of Lakehouse objects.

I hope you enjoyed this article. Next time, we will investigate what features are available in the command line interface to manage Fabric Warehouses.