Introduction

You are a SQL Developer or BI architect, and you get news that your scheduled production ETL Job(s) that were running “perfectly” are taking “longer” and “longer” to run. The problem is there are over 300 individual child SSIS packages that stage data, load a Data Warehouse (WH), load Data Marts, process SSAS Cubes and manage your Database and Cube Partitions.

Some of the questions that could be asked would be: What is “longer”, or what is the benchmark for that complaint? Which of the ETL phases and individual packages within the ETL phases are causing the job to take longer? Which SSIS tasks and pipeline components on the individual Dataflow tasks are causing the packages to take longer? Or could this simply be as a result of increase in amount of data being pulled?

In part 1, we looked at some prerequisites for setting up a comprehensive ETL Audit system namely:

- Gathering all your SSIS Package information such as Packages, Tasks and Pipeline Component Names and Ids.

- Enabling the minimal necessary Logging required to Capture ETL Execution Cycle.

Before we proceed we are going to outline a hypothetical business scenario and sample ETL architecture that we will use for all demonstration going forward.

This is a three part series on ETL auditing. The various articles cover:

- Part 1 – Introduction to ETL Auditing

- Part 2 – Auditing Simple Setups

- Part 3 – Auditing Complex Setups

Sample ETL Project

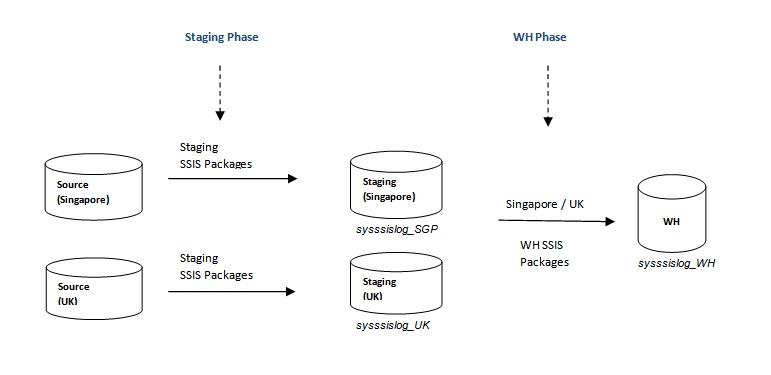

To demonstrate some complexities in setting up ETL audit systems using the SSIS logging option, we are going to assume an ETL Process that loads a Data Warehouse (WH) for a Company with two businesses, one in Singapore and another in the UK, each with a separate data source. From the two sources, data is first staged on separate databases before it is finally moved into the WH. The ETL setup for the two businesses is as shown fig 2 below.

Fig 1: Showing our hypothetical ETL Setup.

Fig 1: Showing our hypothetical ETL Setup.

Auditing Data Staging Phase

In this part of the series we are going to focus on ETL processes in the Staging Phase of the architecture shown in fig 1. In the Staging Phase, data is pulled from two separate sources into two separate staging databases on two different servers. Each of the staging databases has a SSIS log table into which logging is enabled for the set of packages that loads that particular staging database.

From the ETL architecture shown on fig 1, let’s further analyze and summarize what’s involved in the staging phase.

Staging Phase:

- Data coming from the Singapore and UK sources is staged separately on different servers for the two systems.

- The same or different set of SSIS Packages are deployed to each of the two staging servers to load data from Source to Staging.

- Logging is enabled for each set of the Staging Package into a separate SSIS log table namely sysssislog_SGP and sysssislog_UK for the Singapore and UK staging databases respectively.

The key point to note here is that log events pertaining to each source system is logged into a separate logging table, in other words all execution cycles in the sysssislog_SGP log table can be attributed to Singapore staging. Similarly, every event logged into sysssislog_UK will belong to the UK staging phase.

Establishing Execution Framework within the Staging Phase

To be able to enable a well-coordinated audit system we must outline the ETL process lifecycles within the staging phase in our ETL set up. For the purposes of this exercise, we are going further assume that;

- The WH is of a daily latency. This means that in a day we expect two separate and successful batch executions in the Staging Phase, one from the Singapore source database to the Singapore staging database and another from the UK source database to the UK staging database.

- A successful batch execution is when both the first and last package loading each staging phase successfully completes together in a run.

- ETL batch execution is restarted if a package fails before the completion of the first and last package together.

All source, staging and WH databases are SQL Server databases.

Estimating Batch Execution Cycles

As defined above, a successful batch execution is when both the first and last package in each staging phase successfully completes together in a run.

To determine execution intervals for successful staging batches, we will estimate the time between the first event logged by the “First Package” and the last event logged by the “Last Package” excluding failed execution. Note that it is important that you identify the first and last packages in any of your SSIS setup that you consider a batch. This information can be obtained in many ways, but in these demos I identified them by name, for instance the logic in listing 1 below will output all successful Singapore batches in the staging phase. In the 'First Package Name' will represent the actual name of the first package in my batch process.

Listing 1: SQL Script to capture Batch Execution Cycles by source system from an SSIS Log table.

with FailedExecs

as

(

select Distinct

s.executionid

from dbo.sysssislog_Singapore s

where event='OnError'

),

FirstPackage

as

(

select

ROW_NUMBER() over ( partition by s.sourceid

order by min(s.Starttime) ) Batchid

,min(s.Starttime) as StartTime

,cast(convert(char(8),min(s.Starttime) ,112) as int) as StartDatekey

,s.executionid

,s.source

from dbo.sysssislog_Singapore s

where s.source like 'First Package Name'

and event not like ('User:%')

and s.executionid not in (select executionid from FailedExecs)

group by s.executionid ,s.source,s.sourceid

),

LastPackage

as

(

select

ROW_NUMBER() over ( partition by s.source

order by max(s.Endtime) ) Batchid

,max(s.Endtime) as EndTime

,cast(convert(char(8),min(s.Endtime) ,112) as int) as EndDatekey

,s.executionid

,s.source

from dbo.sysssislog_Singapore s

where s.source like 'Last Package Name'

and event not like ('User:%') --Note

and s.executionid not in (select executionid from FailedExecs)

group by s.executionid ,s.source,s.sourceid

)

-- insert into aud.ETLPhaseBatches

select Distinct

N'Staging' as [ETLPhase]

,N'Singapore' as [SourceSystem]

,f.Batchid as BatchId

,f.StartTime as StartTime

,f.StartDatekey as StartDatekey

,l.EndTime as EndTime

,l.EndDatekey as EndDatekey

--,d.DayNumberDesc

--,d.WeekNumberDesc

--,d.MonthNumberDesc

-- ,d.YearNumberDesc

,datediff (second,f.StartTime,l.EndTime ) as EstBatchExecTime_Sec

,datediff (MINUTE,f.StartTime,l.EndTime ) as EstBatchExecTime_Min

from FirstPackage f

inner join LastPackage l

on f.Batchid=l.Batchid

--inner join [Dim].[Date] d

--on d.DateISOName=f.StartDatekeyFrom the logic it means that if you run 10 successful batches from Singapore source to Singapore staging in 10 days, the result will be 10 batch ids each representing a day’s execution. The logic also returns the estimated time it took for each of the batches to complete, the day the execution occurred and the actual time each batch started and completed.

Note that by replacing sysssislog_SGP with dbo.sysssislog_UK and the Source column name 'Singapore' with 'UK' in the logic all the staging batches for UK source can also be obtained.

It is also important to note that logic will work for any SSIS logging table regardless of the ETL phase as long as one set of packages are logging data into a particular log table from one source. Let’s now look at some other scenarios:

- Where you have only one package in an ETL phase and

- Where you have a one parent package calling and processing many child packages either sequentially or in parallel.

In the first case the one package in the phase becomes both the “First Package Name” and “Last Package Name” in the logic in listing1. Similarly in the second case by replacing “First Package Name” and “Last Package Name” with the parent package name, the logic will capture the execution cycles for all the child packages called by the parent.

Package Execution Context

It very important to note that when you have a setup where a parent package calls a child package and logging is enabled on both packages, a single event is logged twice for the child package. The first time, the entries are logged under the execution context of the child package. The second time, the entries are logged under the execution context of the parent package.

This can present a problem in establishing executions cycles, since they represent duplicate entries in that regard and has to be eliminated. Log entries that are logged by a child package under the execution context of a parent package contain a User: prefix in the event field, the clause

Where event not like ('User:%')

is added to all the scripts to excludes these logs in the execution times estimation process.

Now assuming this information has been collected for Singapore and UK into one table aud.ETLPhaseBatches , let’s see what we can do with it.

Batch Reports

The very essence of capturing audit trails is to be able to generate report which will help you answer some of the questions we outlined at the beginning.

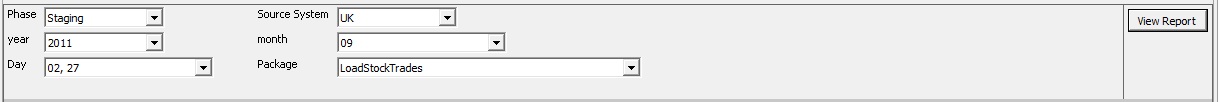

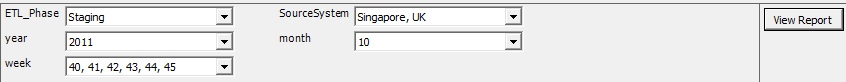

The logic in listing1 above can be joined to a time dimension table (commented out in the logic) to generate various batch reports. By joining the logic to a time dimension will allow you to generate a time parameterized report for various phases and sources. Fig 2 shows report parameters for the “Singapore” and “UK” systems in the staging ETL phase.

Fig 2: Batch Cycle Report Parameters

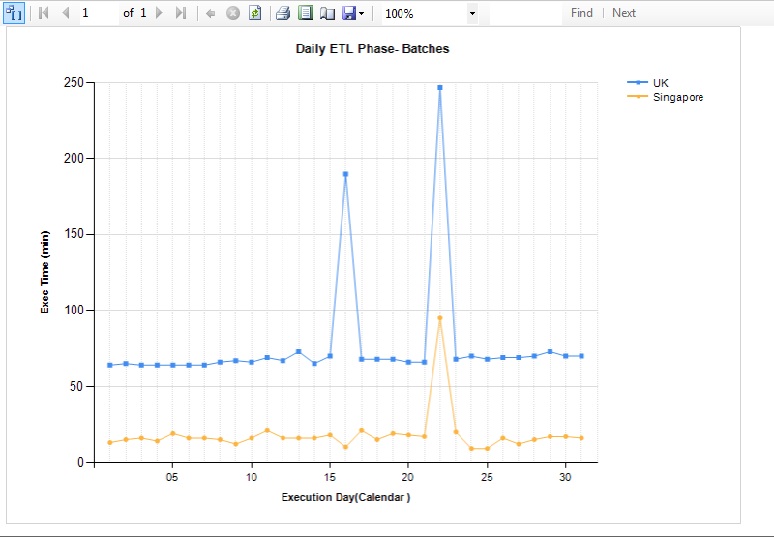

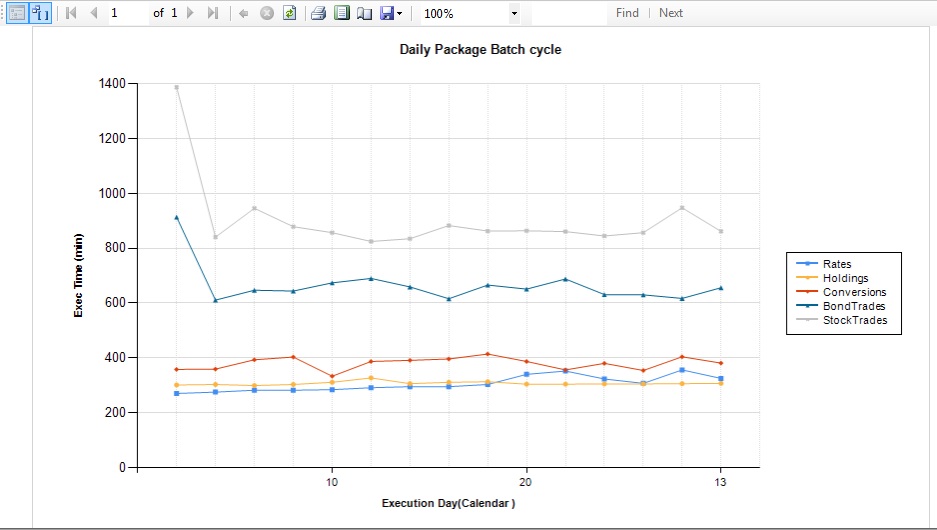

The resulting sample batch report graph is displayed in fig 3 below.

Fig 3: Parameterized report showing Daily batch execution cycles for ETL Phases and source System within a Period of time

The report illustrates daily batch execution cycles for the “Singapore” and “UK” systems in the staging ETL phase. The graph shows two spikes in the batch executions where the staging packages took 5 times the average batch execution time to complete on the 16th and 22nd of October for the UK load. A similar spike is observed for the Singapore load on the 22th but not on the 16th. I will leave you to draw some conclusions but after such an observation, you may like to know what package execution times could have contributed to the 16th spike for UK if it wasn't say network related. that is what we going to do in the next section.

Estimating Package Execution intervals

In the previous section we outlined all batches and estimated the execution time interval for each batch. In this section we will estimate the execution time interval for packages within each of the batches. To estimate this metric for packages we will estimate the time between the first and last event logged by each package. The script to estimate these time intervals is shown in listing 2 below.

Listing 2: SSIS Package Batches cycles by source system from SSIS Log

with FailedExecs

as

(

select Distinct

s.executionid

from sysssislog_Singapore s

where event='OnError'

),

PkgBatches

as

(

select

ROW_NUMBER() over ( partition by s.sourceid

order by min(s.Starttime) ) Batchid

,cast(convert(char(8),min(s.Starttime) ,112) as int) as Datekey

,s.sourceid

,s.executionid

,p.PackageName

from sysssislog_Singapore s

inner join Aud.DimSSISPackage p

on s.sourceid =p.PackageId

where s.executionid not in (select executionid from FailedExecs)

and s.event not like ('User:%')

group by s.executionid,s.sourceid ,p.PackageName

)

-- insert into aud.PackageBatches

select

N'Staging' as [ETLPhase]

,N'Singapore' as [SourceSystem]

,p.PackageName

,s.sourceid

,s.executionid

,P.Batchid as BatchId

,min(s.starttime) as starttime

,Max(s.endtime) as endtime

,p.Datekey

,d.DayNumberDesc

,d.WeekNumberDesc

,d.MonthNumberDesc

,d.YearNumberDesc

,datediff (second, min(s.starttime) , Max(s.endtime)) as EstExecTime_Sec

,datediff (minute, min(s.starttime) , Max(s.endtime)) as EstExecTime_Min

from sysssislog_Singapore s

inner join PkgBatches p

on s.executionid=p.executionid

and s.sourceid =p.sourceid

inner join [Dim].[Date] d

on d.DateISOName=p.Datekey

where s.executionid not in (select executionid from FailedExecs)

group by

p.Datekey

,s.sourceid

,s.executionid

,p.PackageName

,p.Batchid

,year(s.StartTime)

,month(s.StartTime)

,day(s.StartTime)

,d.DayNumberDesc

,d.WeekNumberDesc

,d.MonthNumberDesc

,d.YearNumberDesc The key piece in Listing 2 is that we join the log table to Aud.DimSSISPackage table which holds package information such as packages names and their related IDs (Refer to the “Gathering SSIS Package Information” section in Part 1) of our ETL setup.

Assuming this information has been collected for Singapore and UK into one table, aud.PackageBatches, by joining the two tables loaded in listing 1 and listing 2, Package executions can be matched to phase batches as shown in listing 3 below.

Listing 3: Matching Package to Phase Batches.

select a.ETLPhase ,a.SourceSystem ,a.PackageName ,a.Batchid as PackageBatchId ,a.starttime ,a.endtime ,a.p.Datekey ,a.d.DayNumberDesc ,a.d.WeekNumberDesc ,a.MonthNumberDesc ,a.YearNumberDesc ,a.EstExecTime_Sec as PackageEstExecTime_Sec ,b.BatchID as PhaseBatchID From aud.PackageBatches a inner join from aud.ETLPhaseBatches b on a.ETLPhase=b.ETLPhase and a.SourceSystem=b.SourceSystem and a.starttime>=b.starttime and a.endtime <=b.Endtime

Package Level Reporting

Note that the logic in listing 2 above has been joined to a time dimension table. By joining the logic to a time dimension will allow you to generate a time parameterized package level report for various phases and sources. Fig 4 below shows as sample report parameters for the top 5 Packages for the “UK” staging phase within a certain period of time.

Fig 4: Report Parameters for Top 5 Packages based on averaged Execution Cycle intervals.

Fig 5 below displays a sample report of the parameters on fig 4 above.

Fig 5: Showing the Package Execution Cycles for UK Package in the Staging Phase with the Top 5 average execution times.

What can be deduced from the sample graph in fig 5? Apart from the first day of the month where the StockTrades and BondTrades Packages loading the UK staging database showed spikes in execution times there is nothing unusual about execution cycles for all five packages in that month. The spikes on the first could be attributed to month-end activities from the sample data used.

Estimating Task level Execution intervals

To estimate the execution cycle interval for tasks, we will estimate the time between the first event logged by each task and the last event logged by the same task. Note that we will exclude all failed executions in both cases. The script to do this is in Listing 4 below.

Listing 4: SSIS Task Batches cycles by source system from SSIS Log

with FailedExecs

as

(

select Distinct

s.executionid

from sysssislog_Singapore s

where event='OnError'

),

TaskBatches

as

(

select

N'Staging' as [ETLPhase]

,N'Singapore' as [SourceSystem]

ROW_NUMBER() over ( partition by s.sourceid

order by min(s.Starttime) ) Batchid

,cast(convert(char(8),min(s.Starttime) ,112) as int) as Datekey

,s.sourceid

,s.executionid

,t.TaskName

,p.PackageName

from sysssislog_Singapore s

inner join Aud.DimSSISPackageTasks t

on t.TaskId=s.sourceid

inner join Aud.DimSSISPackage p

on t.PackageId=p.PackageId

where s.executionid not in (select executionid from FailedExecs)

and s.event not like ('User:%')

group by s.executionid,s.sourceid ,t.TaskName,p.PackageName

)

--insert into Aud.ETLTaskBatches

select

t.[ETLPhase]

,t.[SourceSystem]

,t.TaskName

,t.PackageName

--,s.sourceid

--,s.executionid

,t.Batchid as BatchId

,min(s.starttime) as starttime

,Max(s.endtime) as endtime

,t.Datekey

,d.DayNumberDesc

,d.WeekNumberDesc

,d.MonthNumberDesc

,d.YearNumberDesc

,datediff (second, min(s.starttime) , Max(s.endtime)) as EstExecTime_Sec

,datediff (minute, min(s.starttime) , Max(s.endtime)) as EstExecTime_Min

from sysssislog_Singapore s

inner join TaskBatches t

on s.executionid=t.executionid

and s.sourceid =t.sourceid

inner join [Dim].[Date] d

on d.DateISOName=t.Datekey

group by

t.Dateke

,s.sourceid

,s.executionid

,t.Batchid

,t.TaskName

,t.PackageName

,year(s.StartTime)

,month(s.StartTime)

,day(s.StartTime)

,d.DayNumberDesc

,d.WeekNumberDesc

,d.MonthNumberDesc

,d.YearNumberDescThe key piece in Listing 4 is that we join the log table to Aud.DimSSISPackage table which holds all packages names and their related IDs and also to the Aud.DimSSISPackageTasks table which holds all task information such as task Names, task IDs and their related package IDs (Refer to the “Gathering SSIS Package Information” section in Part 1) of our ETL setup.

Task Level Reporting

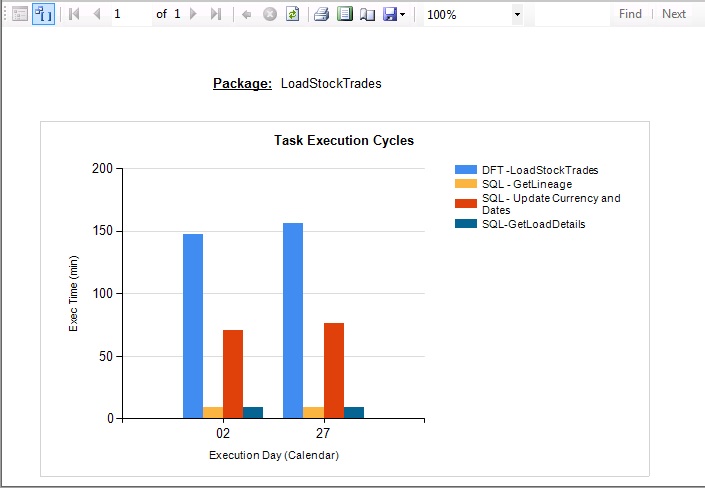

Lets say from analysis in the previous sections you been able to identify some problem packages taking to long to run. The task level report will help us identify the tasks on such packages whose execution cycle that could be the reason why the packages are taking long to run.

By joining the logic in Listing 4 to a time dimension will allow you to generate a time parameterized SSIS Task report for packages in any ETL Phase. Fig 6 shows a sample report parameters for tasks on a package called LoadStockTrades that loads data into “UK” staging database in the staging ETL phase.

Fig 6: SSIS Task Cycle Report Parameters

Fig 7 below displays a sample report of the parameters on fig 6 above.

Fig 7: A Parameterized report showing Daily Task Execution cycles for LoadStockTrades Package that loads data into the Staging Phase for the UK System.

The sample report Fig 7 shows observations of a LoadStockTrades Package on two particular days 02/09/11 and 02/09/11, on these day it can be observed that the Dataflow task DFT-LoadStockTrades took well over 2hrs to run on each of those days.

Auditing Pipeline Components

My approach to logging is, minimal initial event logging as much as possible especially in production and enabling more logging (pipeline component logging) for troubleshooting. In other words, identify Dataflow tasks with performance issues using the task level execution cycles described above and then enable further pipeline logging specifically on those Dataflow tasks for monitoring and troubleshooting.

There are many custom DataFlow tasks and event logs that can be used to monitor and adjust performance, Check MSDN for a complete list and description.

To enable pipeline logging, select specific Dataflow tasks and select the necessary pipeline event. Some of the events monitor components that might cause memory leaks, or track how long it takes for components to run. A useful event to enable in relation to execution cycle time performance related issues is the PipelineComponentTime. These logs report the time that each component in the data flow spends on each of the five major processing steps namely Validate, PreExecute, PostExecute, ProcessInput and PrimeOutput. Refer to MSDN for a complete description of these processing steps.

Summary

In part 2 we’ve just looked at how to use SQL scripts and SSIS log tables to generate various forms of reports that can be used to evaluate ETL batch, SSIS package and task execution cycles. This part also focused only on scenarios where one data source or one source system logs data into one SSIS log table as seen at the staging phase of our hypothetical ETL architecture in Fig1.

In part 3 we are going to look at the warehouse (WH) phase of this architecture. Note that in the WH Phase, events are logged into one SSIS log table sysssislog_WH for both the UK and Singapore systems by the same set of packages. The problem is that, should you just query the sysssislog_WH table you can not tell the event logs that belong to Singapore and those that belong to the UK system. Thus in cases where multiple systems events are logged into one log table, even if you are able outline consecutive batches you cannot tell which batches belong to which system. Let’s say on one day Singapore was loaded into the WH first and on another day UK was loaded first, how do you tell the flip flop from the logged data? What if you have more than two source systems with such possible flip flops? In Part 3 we will look at how to resolve such complexities using such log tables.

This is a three part series on ETL auditing. The various articles cover:

- Part 1 – Introduction to ETL Auditing

- Part 2 – Auditing Simple Setups

- Part 3 – Auditing Complex Setups