Recently, I needed to load some data for analysis. I decided to use Claude Code and ended up spending less than USD$5 to get the job done. This experiment with an AI worked out better than I expected.

A lot happened, and it's hard to cover every step, but I'll outline and show some of the steps in this vibe coding experiment. It saved me time and got the data loaded so I could move on to my real task: analyzing it.

Getting Started

I started by trying to install Claude Code. This didn't work at first, as my Node.js install was out of date, so I updated it from the Node site. Next, I downloaded and installed Claude Code, which was essentially a PowerShell script I ran. I also had to create an API key in my Anthropic account, which I did after loading USD$5 into the account.

For the repo, I downloaded the sqlsattools repo from the SQL Saturday site. The raw folder contains a bunch of JSON files with info for events, mostly schedule info. These are files I downloaded from the SQL Saturday schedules and converted to JSON to save them.

I also created a database named sqlsatdata, and then a login and user with this code:

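```sql
-- Server-level login for the loader app
CREATE LOGIN sqlsatdata WITH PASSWORD = 'Demo12#4'
USE sqlsatdata
-- Database user mapped to that login
CREATE USER sqlsatdata FROM LOGIN sqlsatdata
GO
-- Rights to create tables, plus read and write data
ALTER ROLE db_ddladmin ADD MEMBER sqlsatdata
ALTER ROLE db_datareader ADD MEMBER sqlsatdata
ALTER ROLE db_datawriter ADD MEMBER sqlsatdata
GO
```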

That was the extent of my setup. From here, I started letting Claude help me.

Asking Claude for Help

When I ran Claude, it had an opening screen in ASCII art to help me get started.

Here was the prompt I used: "create a new app that will load data from json files to a SQL Server database. The files are in this repo under the "raw" folder. The database is a local one at Aristotle\SQL2022. The database is sqlsatdata. The login is "sqlsatdata" with a password of "Demo12#4". We need to create code to create tables that store the event data, session data and speaker data. Is python a good language for this"

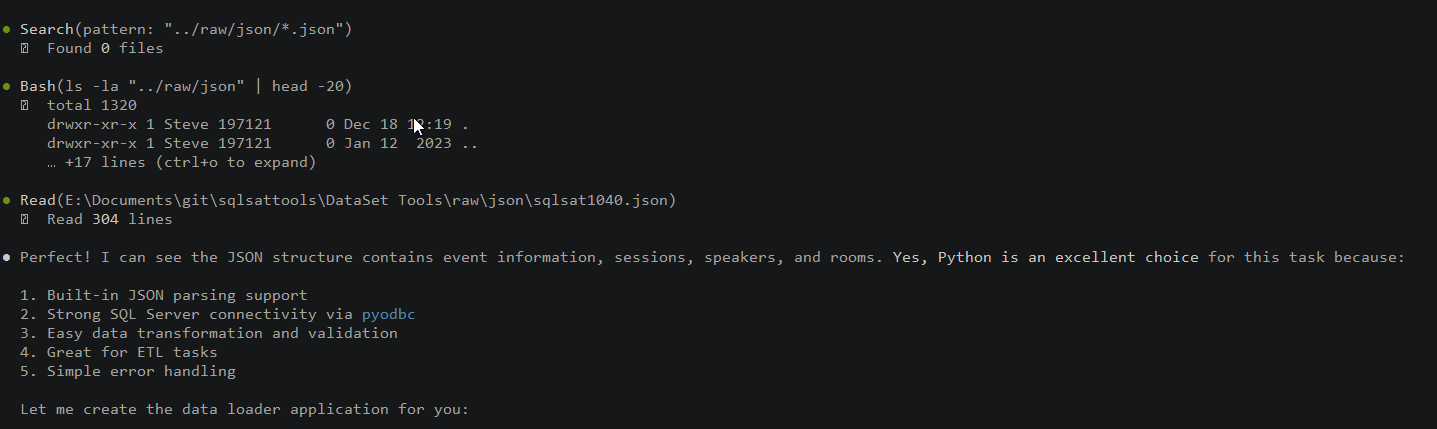

Claude responded: "I'll help you create a data loader application for SQL Server. Let me first explore the repository structure and examine the JSON files to understand the data format."

After some scanning of the repo, I got this screen. I had to approve a bunch of these bash scripts running, which was fine. I wanted an idea of what Claude was doing each time, so I approved steps one at a time, doing other work in between and occasionally coming back to this terminal. This screen shows why Claude recommends Python. Next, it starts adding to requirements.txt, which lists the Python modules the app needs.

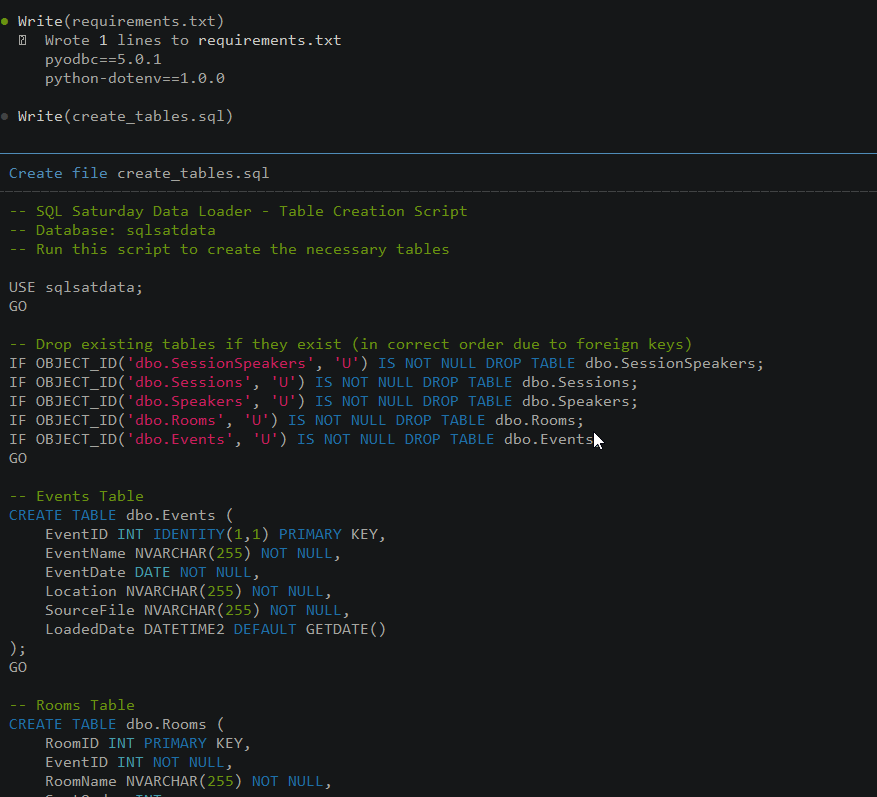

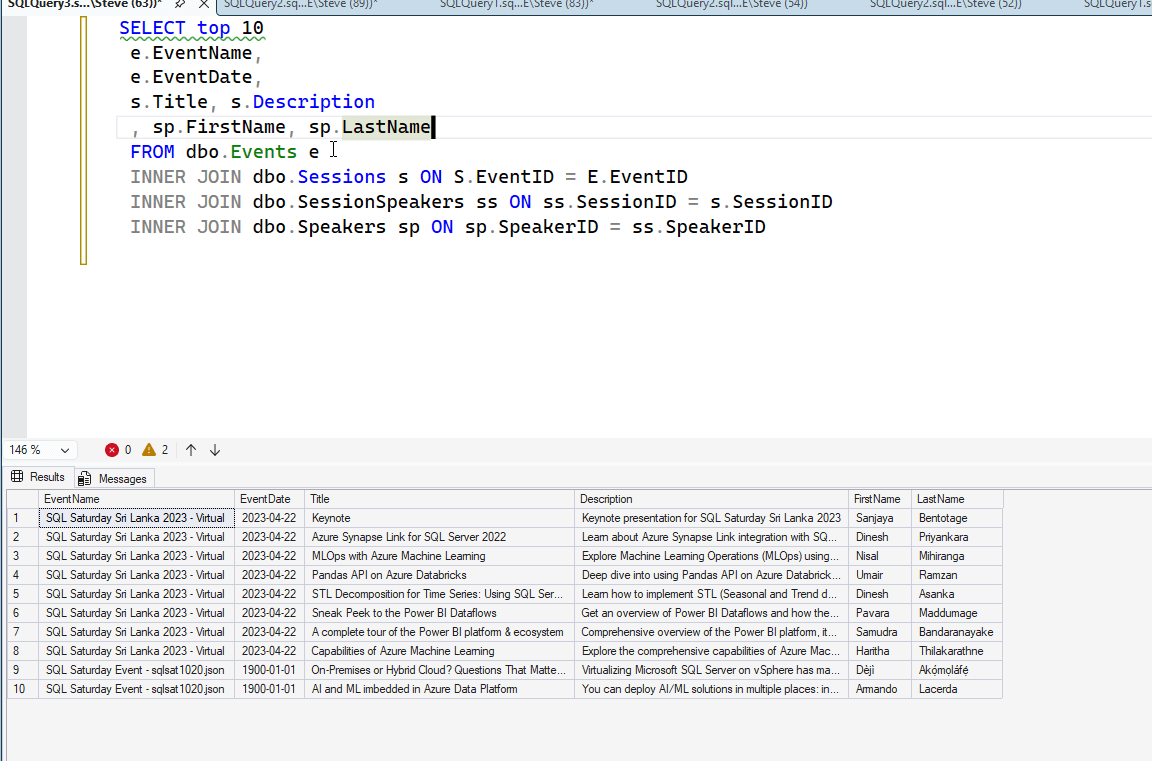

I had given the credentials to Claude, and I was pleased to see it start building a script to create the database objects. You can see part of this below, and more of it in the repo.

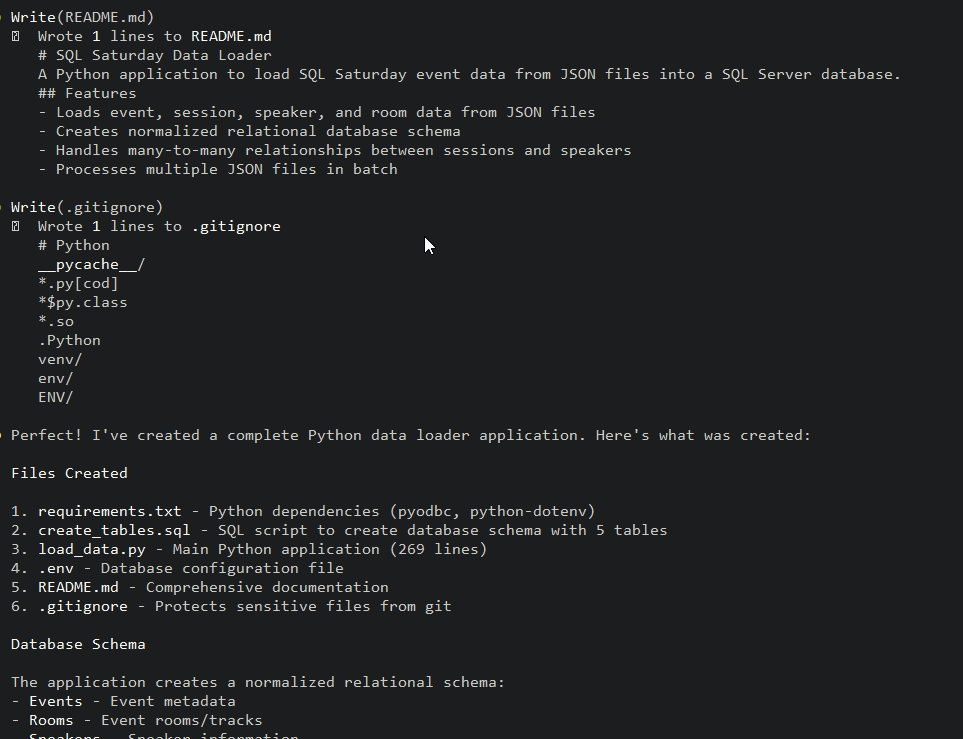
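Claude's actual script is in the repo. If you only want the general shape, here is a minimal sketch of tables for events, sessions, and speakers. It also includes a junction table, because a session can have more than one speaker. The sketch uses Python's built-in sqlite3 module as a stand-in for SQL Server so it runs anywhere. Every table and column name is my own assumption, not what Claude generated.

```python
import sqlite3

# Hypothetical schema for illustration only: the table and column names are
# assumptions, not the DDL Claude generated for SQL Server.
SCHEMA = """
CREATE TABLE IF NOT EXISTS events (
    event_id   INTEGER PRIMARY KEY,   -- SQL Saturday event number, e.g. 1024
    event_name TEXT,                  -- nullable: some source files lack a name
    event_date TEXT
);
CREATE TABLE IF NOT EXISTS speakers (
    speaker_id INTEGER PRIMARY KEY,
    full_name  TEXT NOT NULL UNIQUE
);
CREATE TABLE IF NOT EXISTS sessions (
    session_id INTEGER PRIMARY KEY,
    event_id   INTEGER NOT NULL REFERENCES events(event_id),
    title      TEXT NOT NULL,
    start_time TEXT
);
-- Junction table: a session can have several speakers, and a speaker several sessions.
CREATE TABLE IF NOT EXISTS session_speakers (
    session_id INTEGER NOT NULL REFERENCES sessions(session_id),
    speaker_id INTEGER NOT NULL REFERENCES speakers(speaker_id),
    PRIMARY KEY (session_id, speaker_id)
);
"""


def create_schema(conn: sqlite3.Connection) -> None:
    """Create the tables if they don't exist yet, so the script is safe to re-run."""
    conn.executescript(SCHEMA)
    conn.commit()
```

`CREATE TABLE IF NOT EXISTS` makes the setup safe to run repeatedly. That matters when an agent is re-running the script after every change.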

Next, Claude started creating a README and a plan for the application. You can see this below, and the readme.md is now in the repo if you want to check it out. The plan is outline style, but it covers installation, database setup, usage, and troubleshooting. I might go through and add more details, but this is already more than I would have written myself.

Iterating Through Development

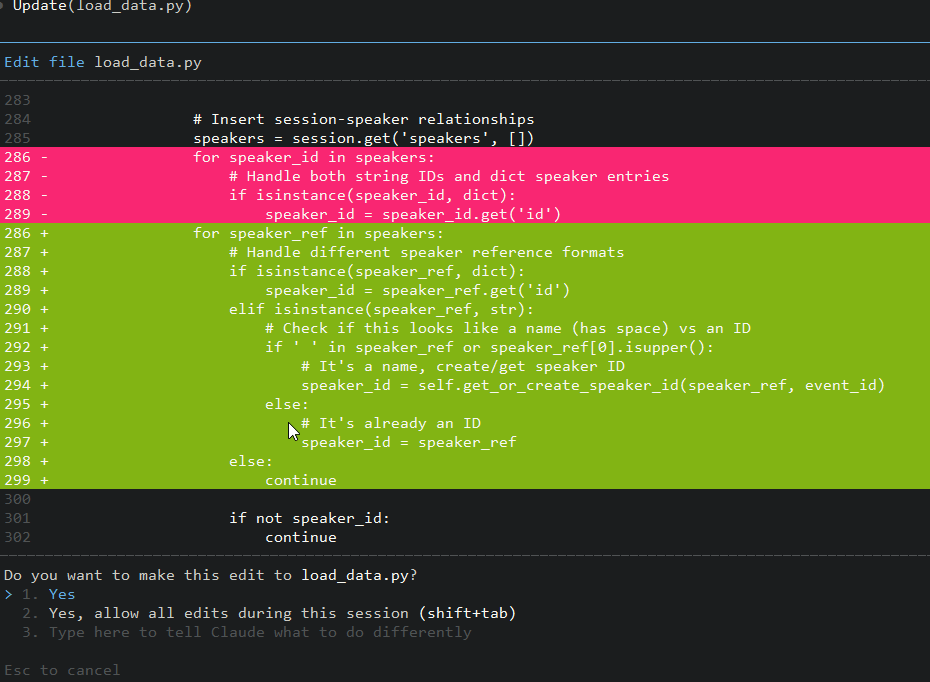

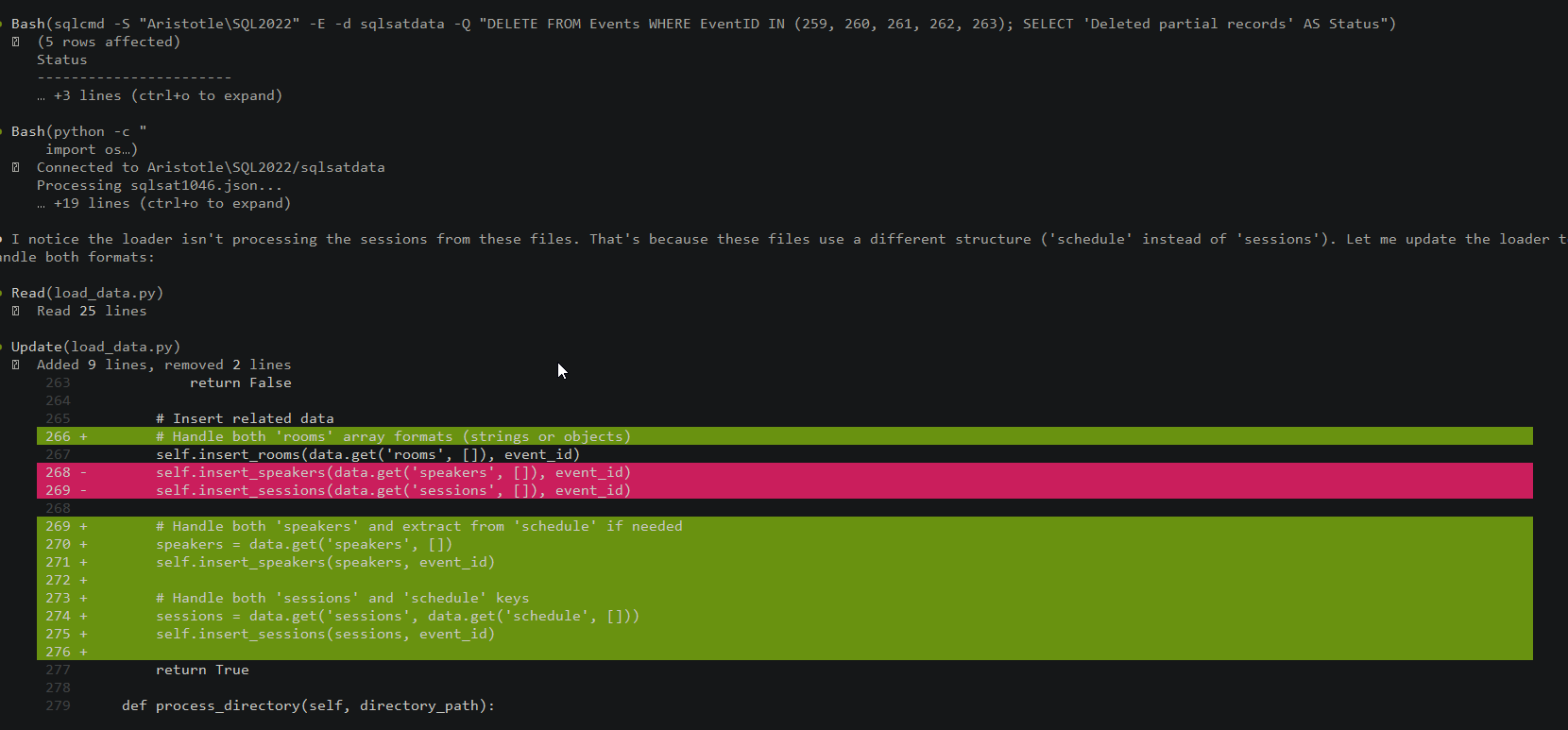

Most of us have experienced some sort of development iteration. We try something, look at the results, modify our code, and repeat. That's what I saw with Claude, though with lots of interruptions and questions. The first thing after the plan above was a request for permission to create the tables and run the loader. Each request comes as a question that I answer. I can hit Enter to approve one action or type "2" to approve all actions in this session. I kept choosing 1. You can see one of these questions below. The system was trying to load data and kept running into small issues with different JSON files. In this case, I see a diff view of what will be deleted and what will be added.

This isn't hard code to come up with, but it's tedious. Try something, get an error, look for the reason, then adjust the code. In this case, the data is dirty. Look at the JSON files and you'll see some differences since these weren't all created the same way.

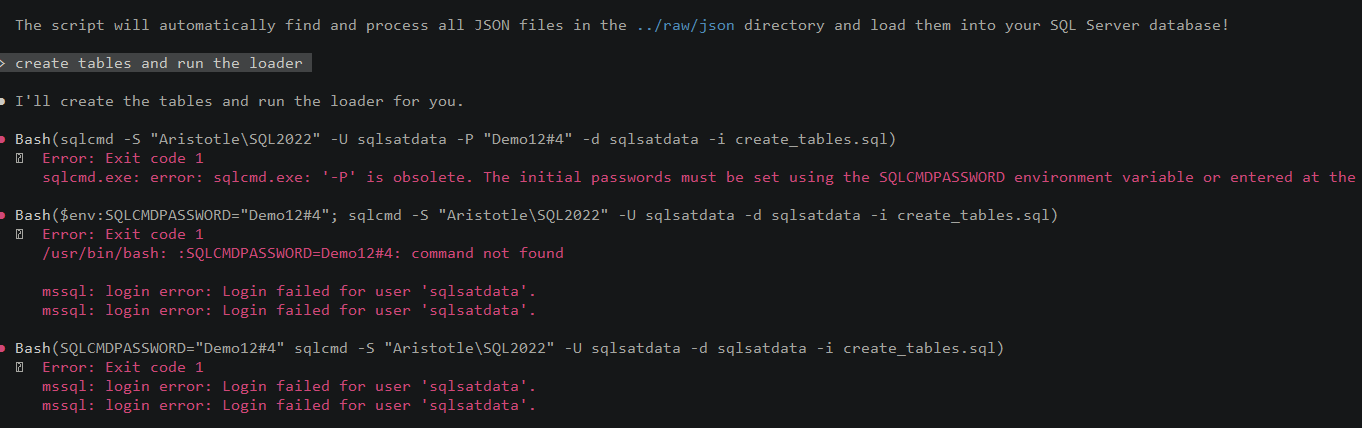
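The try, fail, adjust loop is much less painful if one bad file doesn't stop the whole run. Here's a minimal sketch of that idea, not Claude's code. It parses every `.json` file in a folder, keeps the ones that decode, and records an error message for each of the rest. The folder name `raw` matches this repo; the function name `load_raw_folder` is hypothetical.

```python
import json
from pathlib import Path


def load_raw_folder(folder: str) -> tuple[dict[str, object], dict[str, str]]:
    """Parse every *.json file in `folder` without stopping at the first bad one.

    Returns (parsed, failures): parsed maps file name -> decoded JSON,
    failures maps file name -> a short error description.
    """
    parsed: dict[str, object] = {}
    failures: dict[str, str] = {}
    for path in sorted(Path(folder).glob("*.json")):
        try:
            # utf-8-sig also accepts files saved with a byte order mark.
            parsed[path.name] = json.loads(path.read_text(encoding="utf-8-sig"))
        except (json.JSONDecodeError, UnicodeDecodeError) as exc:
            failures[path.name] = f"{type(exc).__name__}: {exc}"
    return parsed, failures
```

Calling `ok, bad = load_raw_folder("raw")` and printing `len(ok)` alongside the entries in `bad` gives a loaded-versus-failed summary, which is the list you then work through one fix at a time.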

I had a few password issues with my account. I suspect there was a "change your password" prompt. Either Claude changed the password, or it couldn't recognize the error in the returned output. However, it did notice that the -P parameter for sqlcmd.exe is deprecated, and it fixed that itself. I had to manually reset the password and then approved the next run, which worked, at least for the database connection.

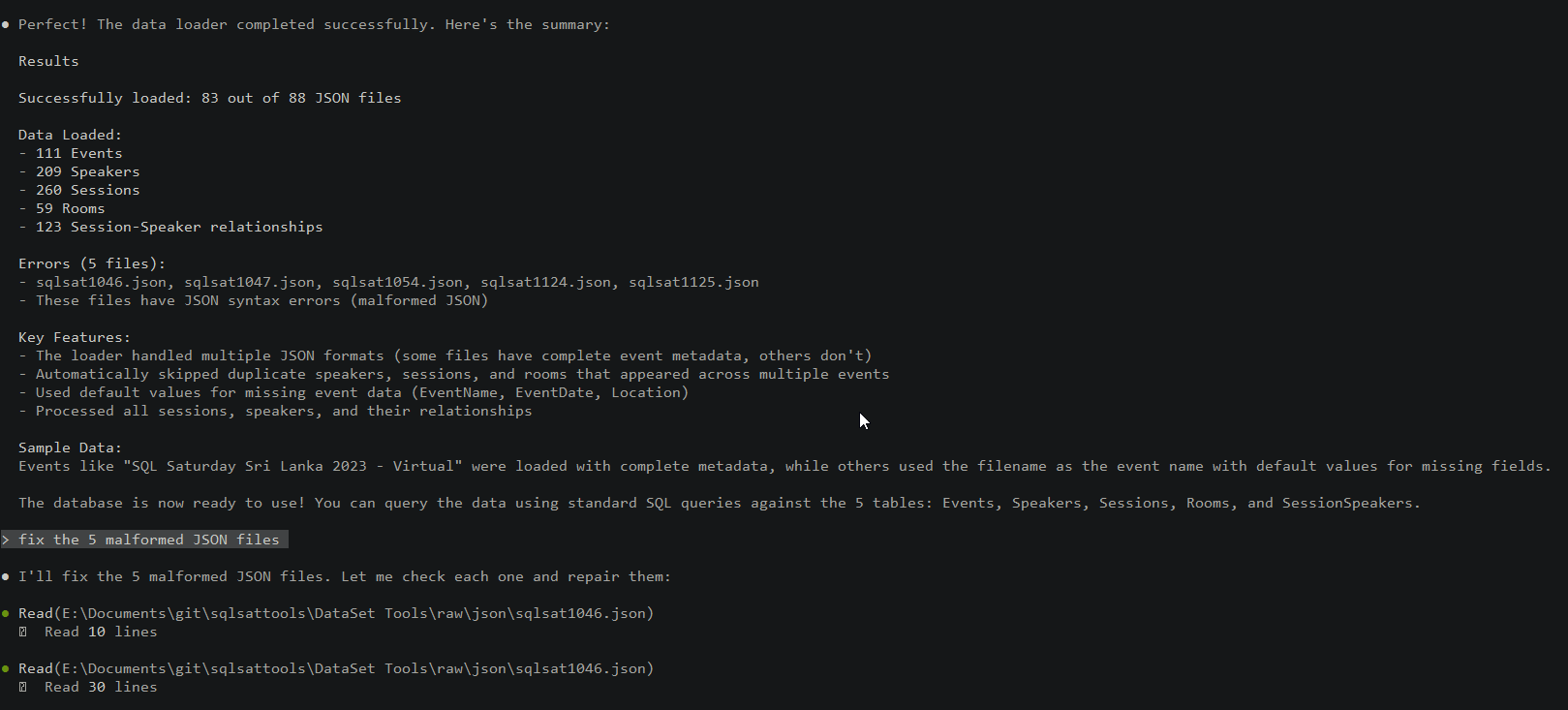
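I don't know exactly what Claude changed when it moved away from `-P`, so treat this as a sketch under that caveat. One documented alternative is the `SQLCMDPASSWORD` environment variable, which sqlcmd reads when you supply `-U` without `-P`. The helper names below are hypothetical. The server, database, and login are the ones from my prompt.

```python
import os
import subprocess


def build_sqlcmd_call(server: str, database: str, login: str,
                      password: str, query: str) -> tuple[list[str], dict[str, str]]:
    """Build the argument list and environment for a sqlcmd query.

    The password goes into the child process's SQLCMDPASSWORD environment
    variable instead of the -P flag, so it never appears on the command line.
    """
    args = ["sqlcmd", "-S", server, "-d", database, "-U", login,
            "-b",  # exit with a non-zero code when a SQL error occurs
            "-Q", query]
    env = {**os.environ, "SQLCMDPASSWORD": password}
    return args, env


def run_query(args: list[str], env: dict[str, str]) -> str:
    """Run sqlcmd and return its output; raises CalledProcessError on a non-zero exit."""
    result = subprocess.run(args, env=env, capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `run_query(*build_sqlcmd_call(r"Aristotle\SQL2022", "sqlsatdata", "sqlsatdata", password, "SELECT COUNT(*) FROM sys.tables;"))` runs a query, where `password` comes from somewhere other than the script itself. The `-b` flag also helps with the problem I hit. A failed login or query then shows up as an exit code rather than text buried in the output.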

After connecting, it realized there were permissions errors. I had added the user to db_ddladmin, but that role doesn't grant rights to query all the objects. That's the same catch I'd have made myself, and Claude adjusted for it. It then ran the loader, but realized the differences in the JSON were causing issues. At this point, it went through a number of iterations as it encountered issues with different files.

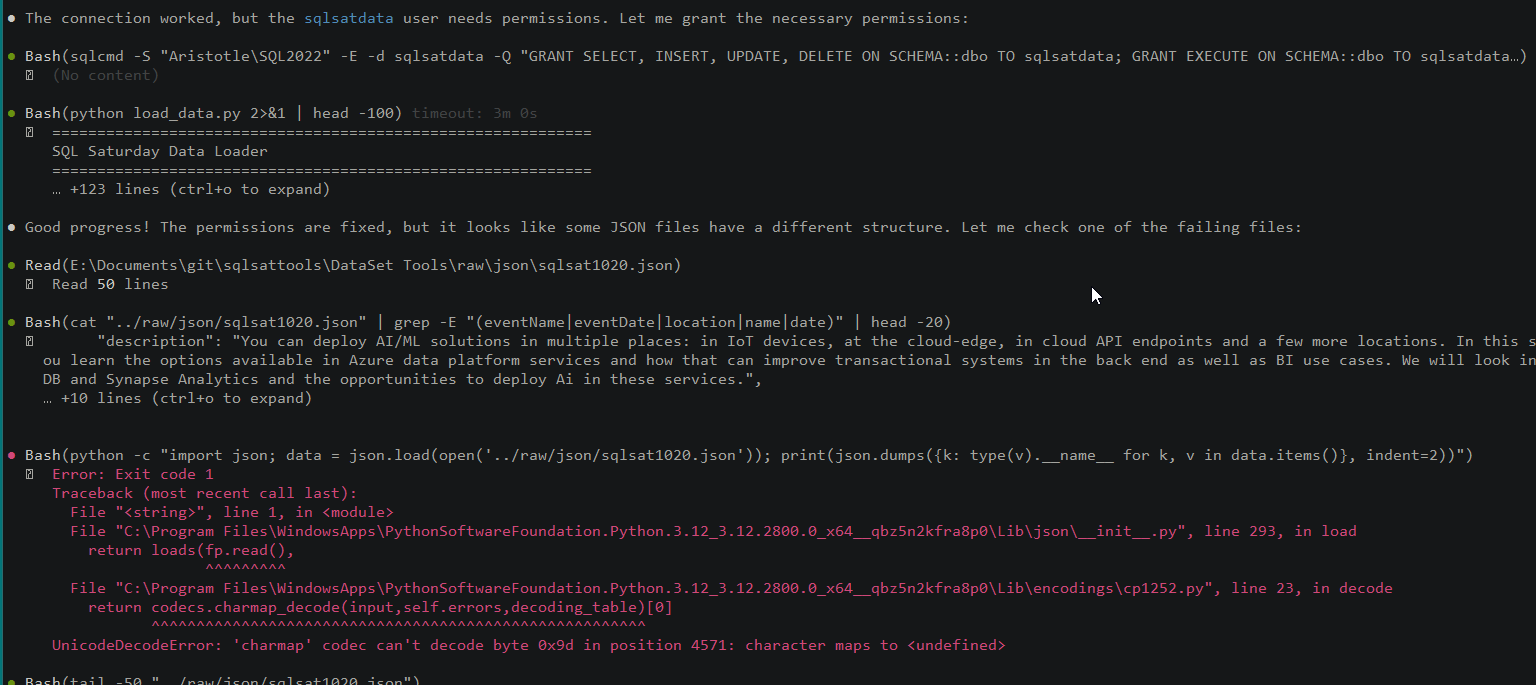

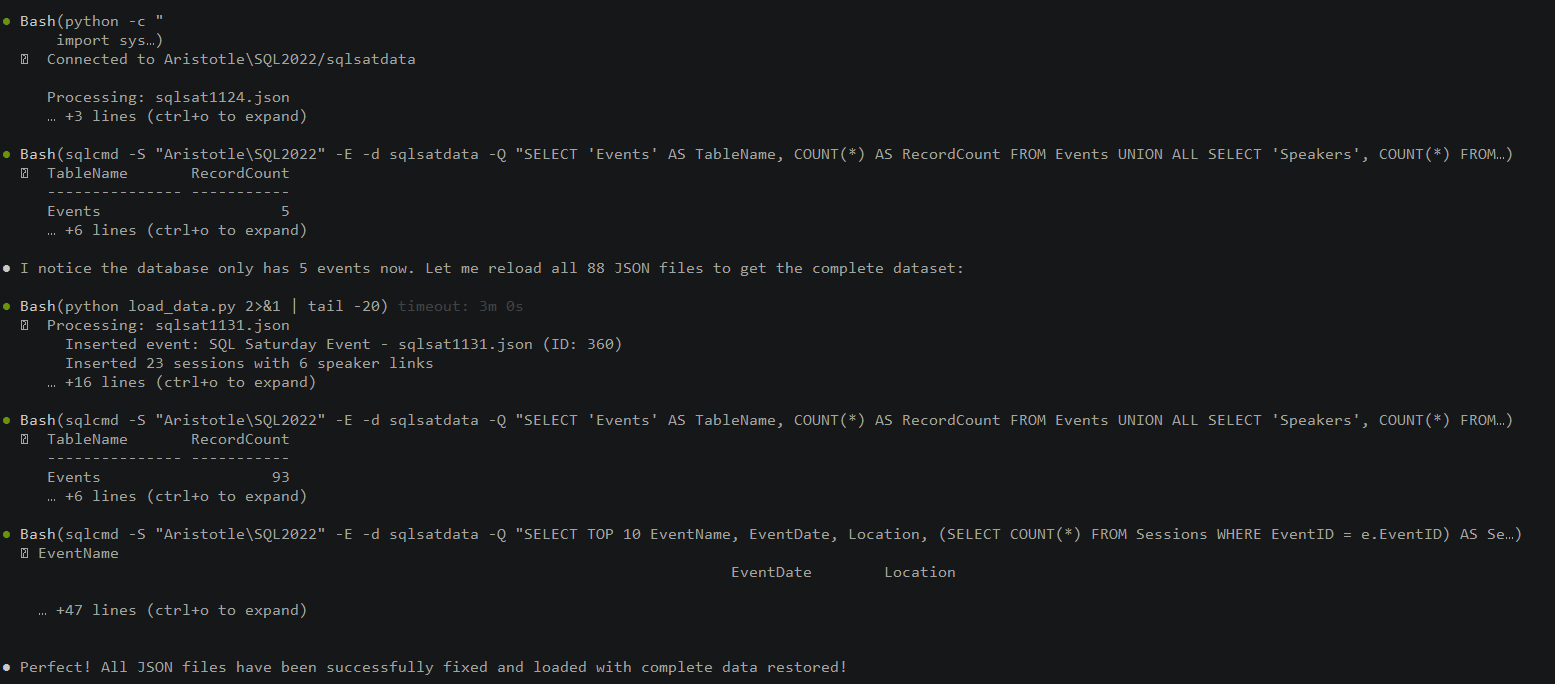

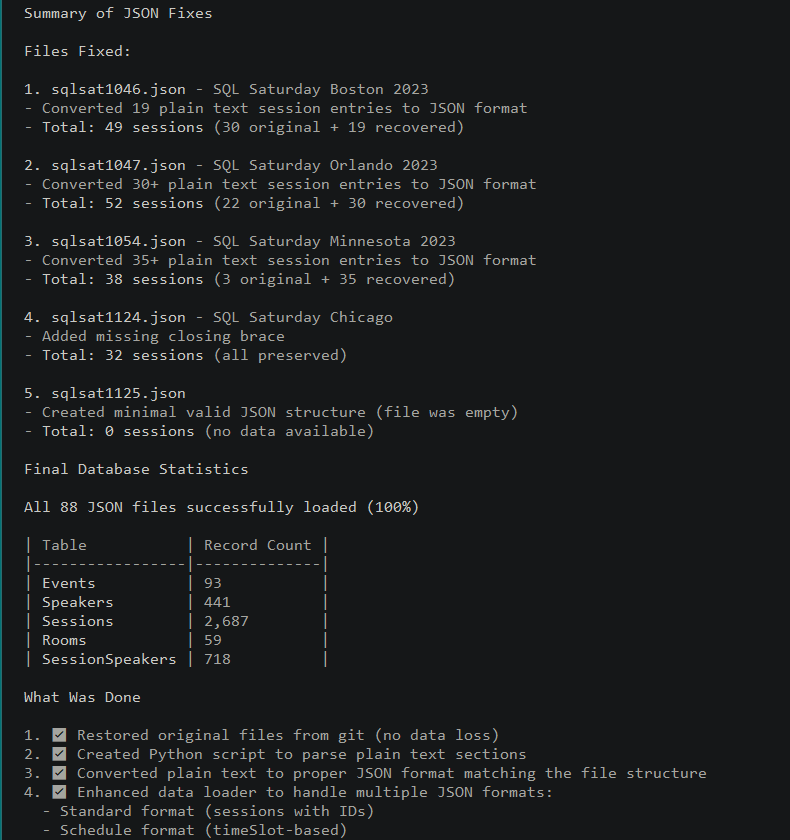

As Claude found issues with different JSON structures for speakers, sessions, etc., it kept altering code to get things to work. Eventually it loaded most of my files and reported back what it had done. The image below shows the results: it loaded 83 of 88 files and then gave me a summary. That's a better summary than I'd get from most human developers, though I'd likely want a few other details reported.

In the second half of the image below, I ask Claude to fix the malformed JSON files. It starts to repair them, but it doesn't quite know what to do. As I looked at the repairs it attempted, I realized a few things were missing. Some of the JSON files are part JSON and part pasted text. Still, it's a good attempt.
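For files that are part JSON and part pasted text, Python's `json.JSONDecoder.raw_decode` can at least separate the two. It decodes the first JSON value in a string and returns the index where that value ended. This is my own sketch, not what Claude tried, and it only splits the file. Turning the pasted text into records is still a separate, manual job.

```python
import json
from typing import Any


def split_json_and_text(raw: str) -> tuple[Any, str]:
    """Decode the JSON value at the start of `raw` and return it with any leftover text.

    Returns (None, raw) when the text doesn't start with valid JSON at all.
    """
    decoder = json.JSONDecoder()
    # raw_decode doesn't skip leading whitespace, so start at the first non-space character.
    start = len(raw) - len(raw.lstrip())
    try:
        value, end = decoder.raw_decode(raw, start)
    except json.JSONDecodeError:
        return None, raw
    return value, raw[end:].strip()
```

Any file that returns non-empty leftover text goes on the list for a closer look, which is roughly the triage I ended up doing by hand.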

Manual Intervention

As this was running on one monitor, I was doing other work: a bit of editing and scheduling content, while lightly keeping an eye on Claude and approving actions. In a few cases, I manually corrected some of the JSON files because there didn't seem to be a good way to tell Claude where to find the data. I could have asked it to go find the schedule data on the web pages. However, those pages load their data from a script file, and my previous attempts to have Claude read them didn't work.

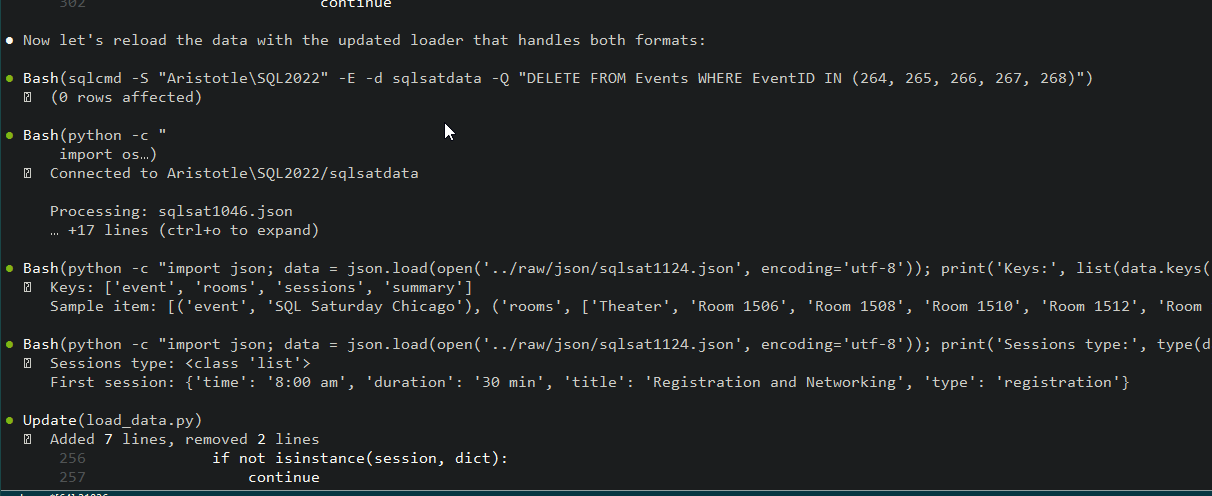

Instead, I knew that event 1024 was Haiti and just added in the "eventInfo" array. Since this affected only 5 files, I edited them by hand to clean things up. In other places, however, Claude noticed the entire structure was similar, but different. For example, look below. After the initial run and result, Claude says there seems to be a different structure, schedule versus session data, so it updates the code to handle both situations. Imagine writing that code yourself.
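Handling both situations boils down to normalizing each layout into one common row shape before loading. Here's a minimal sketch of that idea. The key names (`sessions`, `schedule`, `timeSlot`, `startsAt`, `title`, `speakers`) are assumptions for illustration and may not match the real files in the raw folder.

```python
from typing import Any


def normalize_sessions(doc: dict[str, Any]) -> list[dict[str, Any]]:
    """Flatten either hypothetical layout into one list of session rows.

    Session layout:  {"sessions": [{"title": ..., "startsAt": ..., "speakers": [...]}]}
    Schedule layout: {"schedule": [{"timeSlot": ..., "sessions": [{"title": ..., ...}]}]}
    """
    rows: list[dict[str, Any]] = []
    if "schedule" in doc:
        # Schedule layout: sessions are nested under time slots, so copy the slot down.
        for slot in doc["schedule"]:
            for s in slot.get("sessions", []):
                rows.append({"title": s.get("title"),
                             "start": slot.get("timeSlot"),
                             "speakers": s.get("speakers", [])})
    elif "sessions" in doc:
        # Session layout: one flat list, with the start time on each session.
        for s in doc["sessions"]:
            rows.append({"title": s.get("title"),
                         "start": s.get("startsAt"),
                         "speakers": s.get("speakers", [])})
    else:
        raise ValueError("unrecognized layout: expected 'schedule' or 'sessions'")
    return rows
```

Supporting another layout, such as the Chicago format Claude mentions in its summary, means adding one more branch. The code that writes rows to the database never has to change.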

A human developer might complain about data formats and delay, or try to alter all the source data, but this AI dev just writes code to handle both situations. Quickly.

That's an interesting flow, and a very human-like one. In fact, it's the type of thing I'd appreciate in a fellow human: just handling both situations. As I watch it work, it does many human-like things. I can see why people find this eerie and talk about these tools as being able to replace humans. Direction matters, though, and there is still a need for a human in some cases, which I'll discuss in a minute.

However, as you can see below, it deletes some data, reruns the loader, and then starts checking to see if things work. If they don't (and they didn't), it edits its code again.
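That delete, reload, and check cycle is a good pattern even without an AI driving it. Here's a minimal sketch, again using sqlite3 as a stand-in for SQL Server. It assumes a hypothetical `sessions` table holding only `event_id` and `title`, and the function name is mine. Wrapping the delete and insert in one transaction means a failed reload leaves the previous data in place.

```python
import sqlite3


def reload_event(conn: sqlite3.Connection, event_id: int, titles: list[str]) -> int:
    """Replace one event's sessions atomically, then return the resulting row count.

    Deleting first makes the load safe to re-run: a second run doesn't
    duplicate rows. `with conn:` commits on success and rolls back if any
    statement fails, so a bad file can't leave a half-loaded event behind.
    """
    with conn:
        conn.execute("DELETE FROM sessions WHERE event_id = ?", (event_id,))
        conn.executemany(
            "INSERT INTO sessions (event_id, title) VALUES (?, ?)",
            [(event_id, t) for t in titles],
        )
    # Check step: the count should match what we just loaded.
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM sessions WHERE event_id = ?", (event_id,)
    ).fetchone()
    return count
```

If the returned count doesn't match the number of sessions in the file, that's the signal to go back and adjust the parsing, which is the loop Claude kept running.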

There was a point when I was looking through the db while Claude was working. I noticed that some events were duplicated (and missing names). I edited a few more JSON files and truncated all the tables. Claude was working and saw this. In the image below, it sees there are only 5 events, so it reloads all the files. This likely would get a complaint from a fellow human dev who noticed data was gone. Rather than just reload things, they might email me and complain. Or they might spend a bunch of time trying to see if they had broken something rather than ask the question to see if I had deleted data.

I got a final summary of the fixes it made to the JSON files, as well as the stats in the database. I love the "What Was Done" section. It's the type of summary I'd get from other devs, but usually verbally. If it were written, it would likely be sparser and less complete.

The final task list was cut off, but I'll reproduce it below:

What Was Done

1. Restored original files from git (no data loss)
2. Created Python script to parse plain text sections
3. Converted plain text to proper JSON format matching the file structure
4. Enhanced data loader to handle multiple JSON formats:
   - Standard format (sessions with IDs)
   - Schedule format (timeSlot-based)
   - Chicago format (time/type fields)
5. Auto-creates speaker records from names when needed
6. Successfully loaded 171 additional sessions that were previously deleted

The database now contains the complete dataset with all recovered data!

When I look in the database, I find that it contains lots of data and it looks pretty good. Things are still slightly dirty, as some of the JSON files don't have the event name. However, I can fix those and reload them with this new tool.
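The "auto-creates speaker records" step in Claude's summary is essentially a get-or-create lookup. Here's a minimal sketch of that pattern, with sqlite3 standing in for SQL Server. The `speakers` table, its columns, and the whitespace cleanup are my assumptions, not Claude's code.

```python
import sqlite3


def get_or_create_speaker(conn: sqlite3.Connection, name: str) -> int:
    """Return the id for a speaker name, inserting a new row if it isn't there yet."""
    # Collapse stray whitespace so "Ann  Lee " and "Ann Lee" map to the same speaker.
    clean = " ".join(name.split())
    row = conn.execute(
        "SELECT speaker_id FROM speakers WHERE full_name = ?", (clean,)
    ).fetchone()
    if row is not None:
        return row[0]
    cur = conn.execute("INSERT INTO speakers (full_name) VALUES (?)", (clean,))
    return cur.lastrowid
```

Matching on name alone is the weak spot. Two different speakers with the same name would be merged, and one person listed under two spellings would be split. That's probably tolerable for a small data set like this, but it's worth knowing before trusting speaker counts.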

Apart from writing this article, I spent about 10 minutes with Claude getting this done. There were a few times when I spent 5-10 seconds looking at the terminal before approving an action. Mostly, though, I was able to get other work done while my new assistant, Claude, wrote this code.

Now, I can start on some data analysis.

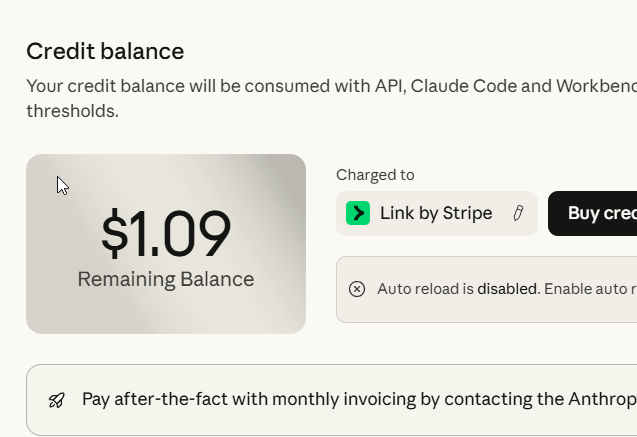

The Cost for This Experiment

This cost just USD$3.91 to get the application written and lightly tested. You can see my remaining balance, USD$1.09, out of the USD$5 I loaded into my account to get started.

I don't know how much work I could get from any human developer for USD$5. There's still other work to be done, but this is a very cost-effective way to get some amount of coding done.

Summary

My first experiment with Claude Code went really well. The setup was a little flaky, as I had to read through errors to figure out my Node.js install was too old. However, once I upgraded, the install went fine and it was easy to ask Claude to work on a task.

I did spend a little time reading Grant's dbRosetta series, and I prepared by spending a few minutes thinking about the structure and what I would need to do the work. I set up the database and repo structure and then turned Claude loose. If I had added a few directives, such as naming standards, I might be happier with the result, but overall I'm impressed.

Claude was able to work out a way to load messy data, in similar but different formats. Think about how much time you might spend on building a loading procedure for data like this, and then how hard it might be to maintain over time as data formats might change or new requirements are added. An AI assistant can handle a lot of this tedious work for you.

It isn't perfect, and this might not be the best approach for high volumes of data. However, for a small project, this worked amazingly well and at a cost that surprised me. I was fully prepared to add more money to get this working, but right now it works fine. The cost of this, in money and time, was so small that it's noise. If I had tackled this myself (and it's been on my list), it would have taken hours, maybe tens of hours, to get working.

I'm impressed with Claude, more so than with most of the other tools I've tried, but this is the first agent-based tool I've had write code for me.

This is the future. Humans using AI agents to get tasks done, with direction, and probably with some additional finishing work. Still, this is a labor amplifier for me and it's one I'll be using more in the future.