Transactional Replication has poor Plan

-

April 23, 2018 at 8:02 am

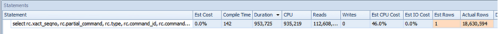

For some reason we are getting a real poor plan on our Replication server... transactional. (I have no idea why the picture is being shrunk down so much.)

Any ideas on why replication is doing such a poor job on estimating the rows?

Second Question... I thought SQL Server 2014 was supposed to use 100 rows as min, not the old 1 row.select rc.xact_seqno, rc.partial_command, rc.type, rc.command_id, rc.command, rc.hashkey, NULL, NULL, NULL, NULL, NULL, NULL, rc.article_id from MSrepl_commands rc with (INDEX(ucMSrepl_commands)) JOIN dbo.MSsubscriptions s with (INDEX(ucMSsubscriptions)) -- At end, we use the FASTFIRSTROW option which tends to force -- a nested inner loop join driven from MSrepl_commands ON (rc.article_id = s.article_id AND rc.publisher_database_id=s.publisher_database_id ) where s.agent_id = @agent_id and s.status = @active_status and rc.publisher_database_id = @publisher_database_id and rc.xact_seqno > @last_xact_seqno and rc.xact_seqno <= @max_xact_seqno and -- If log based transaction, we do -- 1. only select tran later than sub pub seqno -- 2. only select tran later than ss_cplt_seqno (((rc.type & @snapshot_bit) <> @snapshot_bit and rc.xact_seqno > s.publisher_seqno and rc.xact_seqno > s.ss_cplt_seqno ) or -- If snapshot transaction, we do -- 1. filter out the snapshot transactions that were inserted later that is not -- the subscription's snapshot transaction -- 2. filter out trigger generation command for non synctran subscription. -- Note: don't do loop back detection. ((rc.type & @snapshot_bit) = @snapshot_bit and ((rc.xact_seqno >= s.subscription_seqno and rc.xact_seqno <= s.ss_cplt_seqno) or (rc.xact_seqno in (select subscription_seqno from @snapshot_seqnos) and (rc.type & ~@snapshot_bit) in (25, 50, 51, 52, 53, 54, 55, 56 ,57, 58))) and -- Command type list must match that in sp_MSdrop_article (s.update_mode <> @read_only or rc.type <> @synctran_type))) and -- Filter out the new command types that we have introduced after 9.0 (@compatibility_level >= 10000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (105)) and -- Filter out the new command types that we have introduced after 8.0 (@compatibility_level >= 9000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (13, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 31, 32, 33, 47, 100, 101, 102, 104)) and -- Filter out the new command types that we have introduced after 7.0 (@compatibility_level > 7000000 or (rc.type & ~@snapshot_bit) not in (25, 40, 45, 50, 51, 52, 53, 54, 55, 56 ,57, 58, 60, 68, 69)) order by rc.xact_seqno, rc.command_id asc OPTION (FAST 1)

-

April 23, 2018 at 3:04 pm

dwilliscp - Monday, April 23, 2018 8:02 AMAny ideas on why replication is doing such a poor job on estimating the rows?

Second Question... I thought SQL Server 2014 was supposed to use 100 rows as min, not the old 1 row.1. Statistics are not updated for some time.

2. You can use plan guides to assign a more efficient plan if you have one. -

April 24, 2018 at 5:11 am

dwilliscp - Monday, April 23, 2018 8:02 AM

For some reason we are getting a real poor plan on our Replication server... transactional. (I have no idea why the picture is being shrunk down so much.)

Any ideas on why replication is doing such a poor job on estimating the rows?

Second Question... I thought SQL Server 2014 was supposed to use 100 rows as min, not the old 1 row.select rc.xact_seqno, rc.partial_command, rc.type, rc.command_id, rc.command, rc.hashkey, NULL, NULL, NULL, NULL, NULL, NULL, rc.article_id from MSrepl_commands rc with (INDEX(ucMSrepl_commands)) JOIN dbo.MSsubscriptions s with (INDEX(ucMSsubscriptions)) -- At end, we use the FASTFIRSTROW option which tends to force -- a nested inner loop join driven from MSrepl_commands ON (rc.article_id = s.article_id AND rc.publisher_database_id=s.publisher_database_id ) where s.agent_id = @agent_id and s.status = @active_status and rc.publisher_database_id = @publisher_database_id and rc.xact_seqno > @last_xact_seqno and rc.xact_seqno <= @max_xact_seqno and -- If log based transaction, we do -- 1. only select tran later than sub pub seqno -- 2. only select tran later than ss_cplt_seqno (((rc.type & @snapshot_bit) <> @snapshot_bit and rc.xact_seqno > s.publisher_seqno and rc.xact_seqno > s.ss_cplt_seqno ) or -- If snapshot transaction, we do -- 1. filter out the snapshot transactions that were inserted later that is not -- the subscription's snapshot transaction -- 2. filter out trigger generation command for non synctran subscription. -- Note: don't do loop back detection. ((rc.type & @snapshot_bit) = @snapshot_bit and ((rc.xact_seqno >= s.subscription_seqno and rc.xact_seqno <= s.ss_cplt_seqno) or (rc.xact_seqno in (select subscription_seqno from @snapshot_seqnos) and (rc.type & ~@snapshot_bit) in (25, 50, 51, 52, 53, 54, 55, 56 ,57, 58))) and -- Command type list must match that in sp_MSdrop_article (s.update_mode <> @read_only or rc.type <> @synctran_type))) and -- Filter out the new command types that we have introduced after 9.0 (@compatibility_level >= 10000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (105)) and -- Filter out the new command types that we have introduced after 8.0 (@compatibility_level >= 9000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (13, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 31, 32, 33, 47, 100, 101, 102, 104)) and -- Filter out the new command types that we have introduced after 7.0 (@compatibility_level > 7000000 or (rc.type & ~@snapshot_bit) not in (25, 40, 45, 50, 51, 52, 53, 54, 55, 56 ,57, 58, 60, 68, 69)) order by rc.xact_seqno, rc.command_id asc OPTION (FAST 1)

You're getting a one row estimate because you're using a query hint that tells the optimizer to only use one row.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

April 24, 2018 at 11:53 am

dwilliscp - Monday, April 23, 2018 8:02 AM

For some reason we are getting a real poor plan on our Replication server... transactional. (I have no idea why the picture is being shrunk down so much.)

Any ideas on why replication is doing such a poor job on estimating the rows?

Second Question... I thought SQL Server 2014 was supposed to use 100 rows as min, not the old 1 row.select rc.xact_seqno, rc.partial_command, rc.type, rc.command_id, rc.command, rc.hashkey, NULL, NULL, NULL, NULL, NULL, NULL, rc.article_id from MSrepl_commands rc with (INDEX(ucMSrepl_commands)) JOIN dbo.MSsubscriptions s with (INDEX(ucMSsubscriptions)) -- At end, we use the FASTFIRSTROW option which tends to force -- a nested inner loop join driven from MSrepl_commands ON (rc.article_id = s.article_id AND rc.publisher_database_id=s.publisher_database_id ) where s.agent_id = @agent_id and s.status = @active_status and rc.publisher_database_id = @publisher_database_id and rc.xact_seqno > @last_xact_seqno and rc.xact_seqno <= @max_xact_seqno and -- If log based transaction, we do -- 1. only select tran later than sub pub seqno -- 2. only select tran later than ss_cplt_seqno (((rc.type & @snapshot_bit) <> @snapshot_bit and rc.xact_seqno > s.publisher_seqno and rc.xact_seqno > s.ss_cplt_seqno ) or -- If snapshot transaction, we do -- 1. filter out the snapshot transactions that were inserted later that is not -- the subscription's snapshot transaction -- 2. filter out trigger generation command for non synctran subscription. -- Note: don't do loop back detection. ((rc.type & @snapshot_bit) = @snapshot_bit and ((rc.xact_seqno >= s.subscription_seqno and rc.xact_seqno <= s.ss_cplt_seqno) or (rc.xact_seqno in (select subscription_seqno from @snapshot_seqnos) and (rc.type & ~@snapshot_bit) in (25, 50, 51, 52, 53, 54, 55, 56 ,57, 58))) and -- Command type list must match that in sp_MSdrop_article (s.update_mode <> @read_only or rc.type <> @synctran_type))) and -- Filter out the new command types that we have introduced after 9.0 (@compatibility_level >= 10000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (105)) and -- Filter out the new command types that we have introduced after 8.0 (@compatibility_level >= 9000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (13, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 31, 32, 33, 47, 100, 101, 102, 104)) and -- Filter out the new command types that we have introduced after 7.0 (@compatibility_level > 7000000 or (rc.type & ~@snapshot_bit) not in (25, 40, 45, 50, 51, 52, 53, 54, 55, 56 ,57, 58, 60, 68, 69)) order by rc.xact_seqno, rc.command_id asc OPTION (FAST 1)

That's just the execution of sp_MSget_repl_commands and it is written with OPTION (FAST 1).

Refer to this article for some troubleshooting steps to try - I would guess the section Distribution Agent Reader Latency applies:

Transactional Replication ConversationsSue

-

May 7, 2018 at 12:21 pm

Grant Fritchey - Tuesday, April 24, 2018 5:11 AMdwilliscp - Monday, April 23, 2018 8:02 AM

For some reason we are getting a real poor plan on our Replication server... transactional. (I have no idea why the picture is being shrunk down so much.)

Any ideas on why replication is doing such a poor job on estimating the rows?

Second Question... I thought SQL Server 2014 was supposed to use 100 rows as min, not the old 1 row.select rc.xact_seqno, rc.partial_command, rc.type, rc.command_id, rc.command, rc.hashkey, NULL, NULL, NULL, NULL, NULL, NULL, rc.article_id from MSrepl_commands rc with (INDEX(ucMSrepl_commands)) JOIN dbo.MSsubscriptions s with (INDEX(ucMSsubscriptions)) -- At end, we use the FASTFIRSTROW option which tends to force -- a nested inner loop join driven from MSrepl_commands ON (rc.article_id = s.article_id AND rc.publisher_database_id=s.publisher_database_id ) where s.agent_id = @agent_id and s.status = @active_status and rc.publisher_database_id = @publisher_database_id and rc.xact_seqno > @last_xact_seqno and rc.xact_seqno <= @max_xact_seqno and -- If log based transaction, we do -- 1. only select tran later than sub pub seqno -- 2. only select tran later than ss_cplt_seqno (((rc.type & @snapshot_bit) <> @snapshot_bit and rc.xact_seqno > s.publisher_seqno and rc.xact_seqno > s.ss_cplt_seqno ) or -- If snapshot transaction, we do -- 1. filter out the snapshot transactions that were inserted later that is not -- the subscription's snapshot transaction -- 2. filter out trigger generation command for non synctran subscription. -- Note: don't do loop back detection. ((rc.type & @snapshot_bit) = @snapshot_bit and ((rc.xact_seqno >= s.subscription_seqno and rc.xact_seqno <= s.ss_cplt_seqno) or (rc.xact_seqno in (select subscription_seqno from @snapshot_seqnos) and (rc.type & ~@snapshot_bit) in (25, 50, 51, 52, 53, 54, 55, 56 ,57, 58))) and -- Command type list must match that in sp_MSdrop_article (s.update_mode <> @read_only or rc.type <> @synctran_type))) and -- Filter out the new command types that we have introduced after 9.0 (@compatibility_level >= 10000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (105)) and -- Filter out the new command types that we have introduced after 8.0 (@compatibility_level >= 9000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (13, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 31, 32, 33, 47, 100, 101, 102, 104)) and -- Filter out the new command types that we have introduced after 7.0 (@compatibility_level > 7000000 or (rc.type & ~@snapshot_bit) not in (25, 40, 45, 50, 51, 52, 53, 54, 55, 56 ,57, 58, 60, 68, 69)) order by rc.xact_seqno, rc.command_id asc OPTION (FAST 1)

You're getting a one row estimate because you're using a query hint that tells the optimizer to only use one row.

This query I captured.. but was created by Replication.. so I have no idea why it is generating a query with a hint.

-

May 7, 2018 at 12:25 pm

Sue_H - Tuesday, April 24, 2018 11:53 AMdwilliscp - Monday, April 23, 2018 8:02 AM

For some reason we are getting a real poor plan on our Replication server... transactional. (I have no idea why the picture is being shrunk down so much.)

Any ideas on why replication is doing such a poor job on estimating the rows?

Second Question... I thought SQL Server 2014 was supposed to use 100 rows as min, not the old 1 row.select rc.xact_seqno, rc.partial_command, rc.type, rc.command_id, rc.command, rc.hashkey, NULL, NULL, NULL, NULL, NULL, NULL, rc.article_id from MSrepl_commands rc with (INDEX(ucMSrepl_commands)) JOIN dbo.MSsubscriptions s with (INDEX(ucMSsubscriptions)) -- At end, we use the FASTFIRSTROW option which tends to force -- a nested inner loop join driven from MSrepl_commands ON (rc.article_id = s.article_id AND rc.publisher_database_id=s.publisher_database_id ) where s.agent_id = @agent_id and s.status = @active_status and rc.publisher_database_id = @publisher_database_id and rc.xact_seqno > @last_xact_seqno and rc.xact_seqno <= @max_xact_seqno and -- If log based transaction, we do -- 1. only select tran later than sub pub seqno -- 2. only select tran later than ss_cplt_seqno (((rc.type & @snapshot_bit) <> @snapshot_bit and rc.xact_seqno > s.publisher_seqno and rc.xact_seqno > s.ss_cplt_seqno ) or -- If snapshot transaction, we do -- 1. filter out the snapshot transactions that were inserted later that is not -- the subscription's snapshot transaction -- 2. filter out trigger generation command for non synctran subscription. -- Note: don't do loop back detection. ((rc.type & @snapshot_bit) = @snapshot_bit and ((rc.xact_seqno >= s.subscription_seqno and rc.xact_seqno <= s.ss_cplt_seqno) or (rc.xact_seqno in (select subscription_seqno from @snapshot_seqnos) and (rc.type & ~@snapshot_bit) in (25, 50, 51, 52, 53, 54, 55, 56 ,57, 58))) and -- Command type list must match that in sp_MSdrop_article (s.update_mode <> @read_only or rc.type <> @synctran_type))) and -- Filter out the new command types that we have introduced after 9.0 (@compatibility_level >= 10000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (105)) and -- Filter out the new command types that we have introduced after 8.0 (@compatibility_level >= 9000000 or (rc.type & ~@snapshot_bit & ~@postcmd_bit) not in (13, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 31, 32, 33, 47, 100, 101, 102, 104)) and -- Filter out the new command types that we have introduced after 7.0 (@compatibility_level > 7000000 or (rc.type & ~@snapshot_bit) not in (25, 40, 45, 50, 51, 52, 53, 54, 55, 56 ,57, 58, 60, 68, 69)) order by rc.xact_seqno, rc.command_id asc OPTION (FAST 1)

That's just the execution of sp_MSget_repl_commands and it is written with OPTION (FAST 1).

Refer to this article for some troubleshooting steps to try - I would guess the section Distribution Agent Reader Latency applies:

Transactional Replication ConversationsSue

Thanks for the link.. looking over and it could help with diag other issues.. but even though the table has an up to date statistic.. and only the clustered index.. still does not explain why this query would run so long.. on that day.. and suck up so much resources.. ok the fact that it was expecting one 1 row is likely the reason.. in my view.. but again I have no idea why SQL Server's replication would be generating SQL Plan that was so bad.

-

May 7, 2018 at 12:57 pm

dwilliscp - Monday, May 7, 2018 12:25 PMThanks for the link.. looking over and it could help with diag other issues.. but even though the table has an up to date statistic.. and only the clustered index.. still does not explain why this query would run so long.. on that day.. and suck up so much resources.. ok the fact that it was expecting one 1 row is likely the reason.. in my view.. but again I have no idea why SQL Server's replication would be generating SQL Plan that was so bad.

There isn't really one single thing you can look at to find the exact cause. The section in that blog explains things other than statistics. You need to go through those, identify where the latency seems to be and look at the suggested workaround in the article.

That section explains the different phases of the Distribution agent reader and gives examples for the different things to check such as:

Large batch of transactions is mentioned and various ways to check for that.

Suggests looking at the query statistics for that stored procedure.

Suggests testing the reading thread with an example and instructions to look for blocking, scans, timeouts as well as a large number of cmds.

Shows how to look at the completion times for different phases in the Agent Log to help identify where things are slow

It looks like it is likely related to a very large transaction and that it had millions of cmds to execute. Or the disks may be so slow that the cmds are getting backed up. As noted in the article, you want to check the disks for the distribution databases. For a large transaction, it mentions that you would want to consider replicating the stored procedure execution instead of the results. Refer to this article for more information:

Publishing Stored Procedure Execution in Transactional ReplicationSue

Viewing 7 posts - 1 through 7 (of 7 total)

You must be logged in to reply to this topic. Login to reply