sql backup for big database in 4 steps

-

April 7, 2021 at 6:15 pm

Hello,

Can someone help me with code for sql backup for big database in 4 steps so it will not take a long time to do a back up.

Thank you

-

April 8, 2021 at 2:27 am

Just as a side bar, a search engine search for "backup database to multiple files sql server" yields a large number of articles on the subject.

From that lot, the simplest introduction to the technique of backing up to multiple files appears in the following article.

A word of caution... backing up to multiple files doesn't automatically mean that it will improve the performance of your backups or your restores. Check it as a single file backup and as a multi-file backup. Then, practice doing restores because the only reason to do backups is to have successful restores when things go haywire. And, make sure that your backups go off-site to help protect against things like ransomware, etc (knock on wood).

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 8, 2021 at 3:08 am

(1) Have you page compressed any (or better yet all) of the really large tables? And used COMPRESS/DECOMPRESS if it's clob data? [If you're on SQL 2016+ then even Standard Edition has full compression capability. If you're on less than 2016, you'd need Enterprise Edition. I'm pointing that out because I realize that sometimes people post to a higher version than they have because there tends to be more activity on newer-version forums.]

(2) If not, are you using WITH COMPRESSION on the backups? For data not already page compressed, that typically drastically speeds up the BACKUP!

So what should you do first? Use backup compression if not using data compression.

But you really should use page compression if it's available in your SQL version/edition. And, compression or not, you always have the option to move some/most of the tables to other db(s), which you can then back up on different schedules and/or with different methods, as you prefer. Each db would then be smaller than the current combined db, of course.

For example, say you decide to page compress 40% of the tables in the db (you could end up with higher/lower %, but the principle is the same). You might then move the other 60% of tables to another db. But won't that require code changes, which you don't want? No, you don't want to have to change your SQL code, it should continue to work just fine.

To do that, you might, for example, take these steps:

(11) Compress all tables in the maindb that you decide to compress.

(12) Backup the maindb and restore it to, say, maindb2.

(13) Drop the compressed tables from maindb2.

(14) Generate 'CREATE SYNONYM' statements for the non-compressed tables in maindb to point them to the same table names but in the dbmain2 db, like: CREATE SYNONYM dbo.table_name FOR maindb2.dbo.table_name

(15) DROP the non-compressed tables from maindb.

(16) Run the gen'd code in dbmain to create the synonyms for the non-compressed tables pointing to dbmain2, same table name.

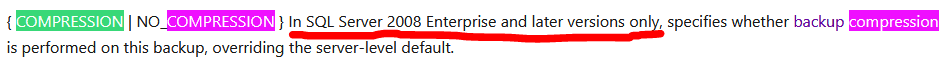

(17) From then on, BACKUP dbmain WITH NO_COMPRESSION, BACKUP dbmain2 WITH COMPRESSION.

I realize this isn't necessarily 100% detailed or clear. Thus, if you decide to try this, let me know and I can provide more details as needed.

Note that you can move tables to diff db(s) like above even if you decide not to, or can't, compress any of the tables.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

-

April 8, 2021 at 4:23 am

If you move tables to another database, remember that DRI (Declared Referential Integrity, like FKs, etc) won't work across databases.

I totally agree with Page/Row Compression, compressed columns using the COMPRESS/DECOMPRESS function, and compressed backups. Compressed backups are only available in the Enterprise Edition for production environments (and Developer's Edition for development/testing environments).

Also, if you have any large WORM tables (Write Once, Read Many) such as Audit, History, Transaction, etc, tables, consider temporally partitioning them (I prefer partitioned views but most people consider that to be a heterodoxical recommendation) to have one file, say per month, with one (again, per month) file group and set the older files groups to READ_ONLY, back them up one final time, and then set your backups to only backup Read/Write files groups.

I have a 2TB history table that they won't let me get rid of anything in it... it only takes me a little over 10 minutes to backup that database at night because of the Read_Only temporal partitioning I've done and I've set the backups to only backup Read/Write file groups.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 8, 2021 at 7:39 am

Jeff Moden wrote:.... Compressed backups are only available in the Enterprise Edition for production environments (and Developer's Edition for development/testing environments)....

Compressed backups are available on every edition except Express Edition ! ( since SQL 2008 )

However, I think since SQL 2014 SP1 you have a system setting to enable it by default !

EXEC sys.sp_configure N'backup compression default', N'1'

RECONFIGURE

GOJohan

Learn to play, play to learn !Dont drive faster than your guardian angel can fly ...

but keeping both feet on the ground wont get you anywhere :w00t:- How to post Performance Problems

- How to post data and code to get the best help- How to prevent a sore throat after hours of presenting ppt

press F1 for solution, press shift+F1 for urgent solution 😀

Who am I ? Sometimes this is me but most of the time this is me

-

April 8, 2021 at 12:56 pm

Oh BTW, On top of compression, you may also want to fiddle around with following settings to see what works best for you !

--Media Set Options

BLOCKSIZE = { blocksize | @blocksize_variable }

--Data Transfer Options

BUFFERCOUNT = { buffercount | @buffercount_variable }

| MAXTRANSFERSIZE = { maxtransfersize | @maxtransfersize_variable }Please do always use ! ( it is a first line of defense to avoid loading/restoring bad backup data )

--Error Management Options

CHECKSUMJohan

Learn to play, play to learn !Dont drive faster than your guardian angel can fly ...

but keeping both feet on the ground wont get you anywhere :w00t:- How to post Performance Problems

- How to post data and code to get the best help- How to prevent a sore throat after hours of presenting ppt

press F1 for solution, press shift+F1 for urgent solution 😀

Who am I ? Sometimes this is me but most of the time this is me

-

April 8, 2021 at 2:54 pm

Johan Bijnens wrote:Jeff Moden wrote:.... Compressed backups are only available in the Enterprise Edition for production environments (and Developer's Edition for development/testing environments)....

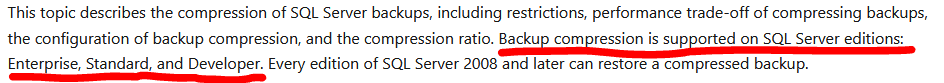

Compressed backups are available on every edition except Express Edition ! ( since SQL 2008 ) ref: https://docs.microsoft.com/en-us/sql/relational-databases/backup-restore/backup-compression-sql-server

However, I think since SQL 2014 SP1 you have a system setting to enable it by default !

EXEC sys.sp_configure N'backup compression default', N'1'

RECONFIGURE

GOLordy... there it is in black and white. From the link you posted...

I've been using the Enterprise edition forever. I had to look it up last night and, just so you don't think I'm losing my mind (although I've confirmed that's apparently started to happen 😀 ), here's the link and the screenshot of what I read in that link...

https://docs.microsoft.com/en-us/sql/t-sql/statements/backup-transact-sql?view=sql-server-ver15

I read that as meaning "Enterprise Only" for the later versions, as well.

Shifting gears a bit, your "BTW" on the Media Set and Data Transfer options is spot on. I'd forgotten that I'd tested settings for my environment and ended up with the following settings... which really did help a lot with backup performance.

BUFFERCOUNT=17

MAXTRANSFERSIZE=1048576

We recently added some memory to the server and the infrastructure group made some changes on the network. The backup speeds haven't changed but, perhaps, it's time to re-evaluate those settings and, maybe, finally convince them that changing the BLOCKSIZE from the default isn't going to mess anything up.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 8, 2021 at 2:58 pm

Johan Bijnens wrote:Oh BTW, On top of compression, you may also want to fiddle around with following settings to see what works best for you !

--Media Set Options

BLOCKSIZE = { blocksize | @blocksize_variable }

--Data Transfer Options

BUFFERCOUNT = { buffercount | @buffercount_variable }

| MAXTRANSFERSIZE = { maxtransfersize | @maxtransfersize_variable }Please do always use ! ( it is a first line of defense to avoid loading/restoring bad backup data )

--Error Management Options

CHECKSUMExcellent point! I've had great results from adjusting those settings for db backups as well.

SQL DBA,SQL Server MVP(07, 08, 09) "It's a dog-eat-dog world, and I'm wearing Milk-Bone underwear." "Norm", on "Cheers". Also from "Cheers", from "Carla": "You need to know 3 things about Tortelli men: Tortelli men draw women like flies; Tortelli men treat women like flies; Tortelli men's brains are in their flies".

Viewing 8 posts - 1 through 8 (of 8 total)

You must be logged in to reply to this topic. Login to reply