Should I listen to this recommendation to change data lengths for consistency during ETL?

-

April 4, 2017 at 3:09 pm

This tidbit has shown up in an online (recorded) course I'm doing on ETL, discussing the importance of converting data types for consistency. I haven't heard this before, and I'd like a second opinion:

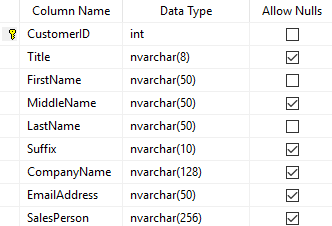

The source table:

The instructor identifies that the columns have different data lengths, and calls it a mistake, stating "there's no consistency here", and suggests that if they used 8 characters for the title, and 128 for company name, it would have been better to use 64 for the first name, or 10 for the title, and 150/200 for the company name. The given reason is that multiple data types that are not consistent can make researching and programming more tricky and introduce opportunities for errors.

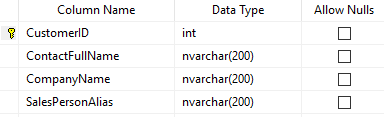

The tutor then goes on to produce the below destination table, based on the above source. SalesPersonAlias is a sub-string of the source SalesPerson, and ContactFullName is the concatenation of FirstName + " " + LastName.

The commentary: "This time my table has consistent column types, which is nvarchar 200, 200, 200 which is just a number I chose, a personal preference. I like 100, 200, 300; nvarchar will collapse to it's smallest size needed and it has very low overhead." "<referring to ContactFullName above> instead of being at 101 which is all it would take for the original source data - 50 for the first name, 50 for the last name and 1 for the space in between - I'll just convert to 200".

Is changing column lengths in the Data Warehouse to longer than the possible source data length to ensure data length consistency a best practice in ETL design? Is the tutor's criticism of the source data lengths something you'd share?

Regards,

Andrew -

April 4, 2017 at 3:59 pm

I wouldn't call it a "mistake" as the instructor did, but I do like to pad source strings a little to account for future growth. Source applications can sometimes change the way they enforce string lengths, and I don't want to have to change ETL processes each time that happens.

I also prefer to use somewhat consistent lengths for strings, or at least consistent increments (i.e. 5, 10, 50, 100, 200, etc.). With that being said, I wouldn't make something like a Title a varchar(200), even for the sake of consistency...a title will never be that long and I think some common sense is also in order.

I think everyone has their own preferences when it comes to these things, and I agree with consistency although I think the scenario you have described above may take it a bit far. I will usually sacrifice consistency for the sake of performance or complexity (specific use-cases), but not implement it blindly just for the sake of consistency either. I guess consistency means different things to different people...

My last comment, albeit from left-field and not directly related to your question, is that I would not use nvarchar in the DW unless I really need it.

-

April 4, 2017 at 4:16 pm

I personally disagree with your professor. While having space for longer things is not a horrible idea, there is no benefit to having it larger than it needs to be. Using his logic, why not use nvarchar(8000) for all of your data types? You'd never have problems with not enough space.

And depending on your table, you can quickly overflow the max size of a row by having too wide of columns.A good read on it is actually in this forum where VARCHAR(50) vs VARCHAR(8000) was discussed:

https://www.sqlservercentral.com/Forums/Topic303089-149-1.aspxTo summarize what I think are the 3 most important points from that thread:

1 - This has not touched the theoretically most important reason; in an RDBMS your data model implies the correct data domains and straight out violating it by defining a data column to have a different domain then the data model is just wrong. (posted by Hans Lindgren)

2 - One thing not mentioned is you can't index the VARCHAR(8000). The maximum number of bytes in an index is 900. (posted by Chris Nicholas)

3 - The performance difference you might see are in the cases the SQL Query Engine decides to plan for 'worst case scenarios'. When this happens it does not use statistics for a column but by brute force it generates a query plan in which the datasize of the column (for ALL records) are assumed to be the same as the defined MAX LENGTH. And hence it allocates memory and other resources to cope with this. And, as you might already have realized, this can be fatal for your performance if you only have strings of maximum datasize=50 but defined length=8000... (posted by Hans Lindgren)But, to me anyways, it just feels sloppy doing that. Like if you know a column will never have over 10 characters in it, but the values will range from 1 to 10, why have it set to store up to 200?

And it brings up more questions for me:

1 - why is your professor using nvarchar for all of his values? That is likely overkill for what he is storing in the table when VARCHAR would likely cover everything he is storing. Plus that is rather short email addresses being only 50 characters long

2 - why is your professor duplicating data in the database? The second table he is creating could easily be replaced with a view

3 - when he says "consistency", is he implying that your table should be all the same data type excpet for the ID? If so, that is soooooooooooooooooo wrong. Would he store a datetime value as a nvarchar?The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

April 4, 2017 at 10:26 pm

The instructor is partially correct. Consistency is vitally important for speed of development, more error free code, and for the sake of performance especially when it comes to the creation of proper indexes and writing SARGable code.

On the other hand, the instructor is horribly incorrect in stating the following and I've emphasized the problem...

This time my table has consistent column types, which is nvarchar 200, 200, 200 which is just a number I chose, a personal preference. I like 100, 200, 300;

Do you see the problem? Let's get 5, 10, 20, or 50 Developers making the same kinds of decisions based on their personal preferences and you end up with a bloody nightmare of possibly impossible combinations to index and the possibility of having nothing but a raft of implicit conversions and a lot of non SARGable code not to mention the problems when someone's larger data is directed to another table with smaller columns and it doesn't fit.

How do you solve the problem? You make a plan at the beginning of the project, no matter how big or small, that provides specifications that everyone must follow to a "T".

I also think it interesting that the instructor said nothing of incorrectly using names for the company and sales person contacts instead of IDs from another properly normalized table nor did he mention that if you're going to duplicate data for the sake of formatting data, that you should be consistent with your naming (considering the source, ContactFullName should probably be CustomerFullName, instead) and the columns should be index-able, automatically updated, persisted computed columns in the original table instead of being in a separate table that will need separate updates to keep current with any Inserts or Updates to the Customer table.

To summarize my opinion, consistency is important and must be enforced by a "standards" document for the project and necessary duplication of data must be made as automatic as possible. You might even want to build some UDT aliases to make it all a bit more standard still.

I'll also state that anyone that thinks that 50 characters for an email column is good enough, they're in for a very rude awakening. 😉

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

April 5, 2017 at 1:07 am

I think the others have hit on it pretty well so far. I believe this instructor has missed the mark. Making every column the same length is the same kind of thing that EntityFramework loves to do. That's not a good thing.

I also hate to see the default of 50 in the length for the datatypes. That just means no thought was put into the size of the field.

I agree with Jeff, the project should match the standards doc that the team comes up with and agrees to adhere to. Otherwise there is great potential for all sorts of variances in the project.

Jason...AKA CirqueDeSQLeil

_______________________________________________

I have given a name to my pain...MCM SQL Server, MVP

SQL RNNR

Posting Performance Based Questions - Gail Shaw[/url]

Learn Extended Events -

April 5, 2017 at 3:16 am

The source database is the Microsoft sample database AdventureWorksLT 2012. I'm not sure why everything the user can enter is NVARCHAR, why the email length is 50, why there's only DATETIME (no DATETIME2) and why some of the tables are over-simplified and not properly normalized.

I believe the destination table is a simplified loading table in a staging database, intended to show the different (consistent) data lengths, that's why the data is duplicated from the source (rather than a view).

Martin, that's a good idea - using consistent increments to data lengths when one needs chosen would add some predictability and standardization to the database. Thanks also for the reminder to be on the lookout for unnecessary NVARCHAR.

bmg002, I had the notion that setting the data length to 200 when it's maximum can only be 101 was stripping domain knowledge, thanks for finding the words. Thanks for the VARCHAR(8000) tips - I wasn't aware VARCHAR(MAX) could result in the engine using worst-case scenario query plans. The instructor isn't suggesting data types all be the same - I think he perhaps just chose a bad (overzealous) example.

Jeff and SQLRNNR, amen - I've noted the need for a department-wide standards document for a while, and am working on this - I'll expand that to common column data types and lengths. One of our internally developed databases has a slight variation to our non-verbal agreed naming standard, and it trips me up whenever I reference it, said standard would have helped avoid this.

Thanks for the assistance, as always, I appreciate the direction.

Andrew

-

April 5, 2017 at 7:25 am

Andrew P - Wednesday, April 5, 2017 3:16 AMThe source database is the Microsoft sample database AdventureWorksLT 2012. I'm not sure why everything the user can enter is NVARCHAR, why the email length is 50, why there's only DATETIME (no DATETIME2) and why some of the tables are over-simplified and not properly normalized.I think the reason is that it's not designed to be a perfect database. It's designed to be a typical database. 😉

Shifting gears, I feel no sense of loss in not seeing anything of DATETIME2. I think they really screwed it up by not allowing direct date math. To try and overcome some of the problems, they recently released a "DATEDIFFBIG" function that's totally unnecessary for DATETIME. because DATETIME allows for the same simple functionality MS EXCEL does... you can subtract a begin date from and end date to easily come up with a duration. It just needs to be formatted to be meaningful to most.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 7 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply