Reducing I/O reads and tempdb in Azure Severless SQL pool

-

January 7, 2023 at 1:05 pm

I've been using Azure Serverless SQL Pool for a couple of months now. I don't have much knowledge on tempdb and I/O reads. I came across the following question:

You are using a serverless SQL pool to query some Apache Parquet files. The files consist of tens of millions of rows of UTF-8-encoded company names, area names, and employees. The database is configured to use the default collation.

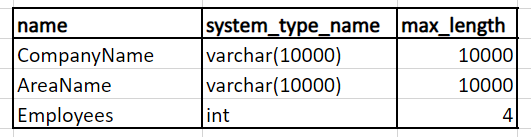

The queries use OPENROWSET and infer the schema shown in the following table.

Which of the following methods will help reduce the I/O and tempdb usage:

- Defining an external table for the Parquet files and updating the query to use the table.

- Using OPENROWSET WITH to explicitly specify the maximum length for CompanyName and AreaName.

- Defining a data source and view for the Parquet files. You recommend updating the query to use the view.

I'm under the impression that option 2 and 3 would help. Any clarification is appreciated.

- This topic was modified 3 years, 1 months ago by Dominic546.

-

January 8, 2023 at 1:10 pm

Thanks for posting your issue and hopefully someone will answer soon.

This is an automated bump to increase visibility of your question.

-

January 8, 2023 at 2:58 pm

Dominic546 wrote:I've been using Azure Serverless SQL Pool for a couple of months now. I don't have much knowledge on tempdb and I/O reads. I came across the following question:

You are using a serverless SQL pool to query some Apache Parquet files. The files consist of tens of millions of rows of UTF-8-encoded company names, area names, and employees. The database is configured to use the default collation. The queries use OPENROWSET and infer the schema shown in the following table.

Which of the following methods will help reduce the I/O and tempdb usage:

- Defining an external table for the Parquet files and updating the query to use the table.

- Using OPENROWSET WITH to explicitly specify the maximum length for CompanyName and AreaName.

- Defining a data source and view for the Parquet files. You recommend updating the query to use the view.

I'm under the impression that option 2 and 3 would help. Any clarification is appreciated.

Interesting question, 10000 character company and area names? (would not like to memorise those)

😎

Questions:

- Have you tried to define an external table with definitions closer to the real values, such as company name as nvarchar(250)?

- As the Parquet file format is a columnar storage format, transforming to row-type storage will expand the volume, is the file format and / or the target database the appropriate choices?

Viewing 3 posts - 1 through 3 (of 3 total)

You must be logged in to reply to this topic. Login to reply