How to backup a specific table to an external folder?

-

Hi everyone

My SS db has a bunch of tables that I don't use. I would like to archive them outside of SS vs deleting them for good. The table are quite big (about 4 million records). I have used Generate Scripts to create backup of table definitions and SP but never with data. If I use Generate Scripts for schema and data then the file that is created is about 14GB in size and is too big to open in SS. SS let me create the file but it won't let me open the file. My question is..Is there a way to back up the data and table definitions to an external destination (say external drive or another drive on the machine) so if in the future if I ever need it then I can retrieve the archived table?

Thank you

-

April 10, 2025 at 5:15 pm

You can export the data as text files as long as you re-import the files to check it goes smoothly. People often find commas in csv fields or unescaped double double quotes in their files which makes them difficult to import. Avoid the temptation to run a query with results to file, you need to export the data, not what the query looks like on your screen. It would be less work to create a new db, move the tables over and detach or backup the database. If you need the tables again simply restore, but again, verify the backup works before dropping the tables. I would avoid attempting to script the data inserts unless it's a 10 row table.

-

April 10, 2025 at 5:33 pm

Ed B wrote:You can export the data as text files as long as you re-import the files to check it goes smoothly. People often find commas in csv fields or unescaped double double quotes in their files which makes them difficult to import. Avoid the temptation to run a query with results to file, you need to export the data, not what the query looks like on your screen. It would be less work to create a new db, move the tables over and detach or backup the database. If you need the tables again simply restore, but again, verify the backup works before dropping the tables. I would avoid attempting to script the data inserts unless it's a 10 row table.

you bring up good points. I think the CSV output is too much hassle when it comes to re-importing the files. It is easier to have a new DB created to store archived tables. Creating the DB is easy. How exactly do I move table and indexes from DB1 to DB2?

- water490 wrote:Ed B wrote:

You can export the data as text files as long as you re-import the files to check it goes smoothly. People often find commas in csv fields or unescaped double double quotes in their files which makes them difficult to import. Avoid the temptation to run a query with results to file, you need to export the data, not what the query looks like on your screen. It would be less work to create a new db, move the tables over and detach or backup the database. If you need the tables again simply restore, but again, verify the backup works before dropping the tables. I would avoid attempting to script the data inserts unless it's a 10 row table.

you bring up good points. I think the CSV output is too much hassle when it comes to re-importing the files. It is easier to have a new DB created to store archived tables. Creating the DB is easy. How exactly do I move table and indexes from DB1 to DB2?

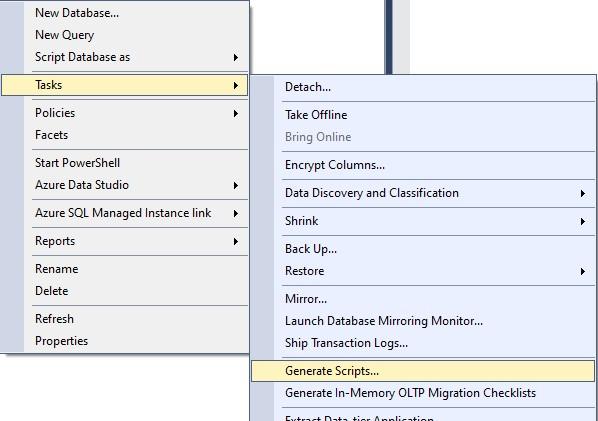

You can script the table definitions:

Then for each table you can just do:

insert into myNewDB.dbo.myTable select * from dbo.myTable;

For tables with identity columns you can use identity_insert

-

April 10, 2025 at 7:23 pm

Jonathan AC Roberts wrote:water490 wrote:Ed B wrote:You can export the data as text files as long as you re-import the files to check it goes smoothly. People often find commas in csv fields or unescaped double double quotes in their files which makes them difficult to import. Avoid the temptation to run a query with results to file, you need to export the data, not what the query looks like on your screen. It would be less work to create a new db, move the tables over and detach or backup the database. If you need the tables again simply restore, but again, verify the backup works before dropping the tables. I would avoid attempting to script the data inserts unless it's a 10 row table.

you bring up good points. I think the CSV output is too much hassle when it comes to re-importing the files. It is easier to have a new DB created to store archived tables. Creating the DB is easy. How exactly do I move table and indexes from DB1 to DB2?

You can script the table definitions:

The for each table you can just do:

insert into myNewDB.dbo.myTable select * from dbo.myTable;

For tables with identity columns you can use identity_insert

It worked. Thank you so much!

-

April 11, 2025 at 4:58 pm

If you think other systems will ever need the data, you could also look at exporting the table to parquet format. Using Python is the normal way to do this. If Polybase is installed even SQL Server can read a parquet file as an external table.

Reading and Writing Parquet Files in SQL Server - Simple Talk

Viewing 6 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply