DBCC Integrity Check Error - insufficient system memory in resource pool

-

April 27, 2020 at 12:27 am

We have Sql server STD 2012 SP2 and from last 3 days, we are getting error while running the DBCC Integrity Check for both User and system Databases, we are using Ola Maintenance solution script.

We have Total Physical Memory 8 GB, Total Virtual Memory 9.25 GB (showing in system Information Available Physical Memory 925 Mb and Available Virtual Memory 2.25 Gb).

MAX Memory configured as 4096 MB and MIN Memory 1024 KB)

We are seeing following error in sql server error log:

indent preformatted text by 4 spaces

Level 17, State 123, Server line 1 There is insufficient system memory in resource pool 'internal' to run this query.

Msg 8921, Level 16, State 1, Server ,Line 1 Check terminated. A failure was detected while collecting facts.

Possibly tempdb out of space or a system table is inconsistent. Check previous errors.

Outcome: Failed Duration: 02:55:03 DateTime: 2020-04-24 07:39:12

DateTime: 2020-04-24 07:39:12. Process Exit Code 1. The step failed.

When i checked Event log then i see the following error:

There is insufficient system memory in resource pool 'internal' to run this query.

We have TEMP DB configure as:

Size" 2046 MB and space available: 1360 MB

Temp Files: TempDB, TempDev2 and TempLog

We have only one real DB with 6 Gb.

Thanks for your help!

-

April 27, 2020 at 12:43 am

I have checked with following query:

We don't have Resource pool enabled:

SELECT is_enabled FROM sys.resource_governor_configuration;

It returns '0'

-

April 28, 2020 at 10:05 pm

your available physical memory is pretty low, and I am pretty sure that SQL won't run on Virtual memory. My guess here (without seeing your system) is you are out of memory.

How much of that 8GB is currently allocated to SQL Server? Do you have enough free memory for SQL to get up to 4GB?

I do not recommend dropping below 4GB (which is already quite low for SQL Server). I would look at offloading some of the other things from that system so SQL can get the full 4 GB you are promising to it or adding more memory so SQL can get the 4 GB.

Failing that, I would also check TEMPDB. You said the size and avialable, but is that for the tempdb data file or log file? Are those set to autogrow? do they both have enough free space?

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

April 29, 2020 at 1:47 am

Thanks Brian for your detail response.

We have total Memory 8 GB so i have increased MAX Memory to 6 GB and it's working fine right now. but we have also application running on so now just 2 GB left so don't know but mostly Sql server consuming as when i see the process in task manager, CPU was nothing much but memory was showing almost 86%.

I think probably i should add more RAM and apply the service pack might help.

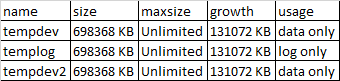

TEMP Files:

<!--more-->

name

size

maxsize

growth

usage

tempdev

698368 KB

Unlimited

131072 KB

data only

templog

698368 KB

Unlimited

131072 KB

log only

tempdev2

698368 KB

Unlimited

131072 KB

data only

Any other long term solution you are suggesting?

-

April 29, 2020 at 4:25 pm

I don't think telling SQL it can use more memory is the way to go when you are possibly having memory pressure already. If I understood you correctly you said you have an application that needs 2 GB and SQL is using 6 GB and you have a total of 8 GB. What memory will the OS use? You had under 1 GB free when SQL only had 4 GB. So when you had 6 GB (your applications and SQL), you had 1.1 GB used by the OS and other processes leaving you 0.9 remaining. NOTE that is assuming SQL was able to get the full 4 GB you said it could have. Now you bumped that up to 6 GB? That is not what I was recommending at all... That is the opposite of what I recommended.

A long term solution is getting more memory into the server. If you tell SQL it can have 4 GB of memory, it will operate with the expectation that if it needs that 4 GB, it can get it. So if SQL is running and only needing 1 GB of memory at the time it will use that. Then when it needs more it will ask the OS for more until it uses all of the 4 GB (technically it can go a little bit over). If it can't get the memory it needs for any reason, you may get errors or it may free some old stuff from memory.

If you think of memory like real money, imagine if someone told you they could loan you $40. They have a total of $80. They tell another 3 friends they can borrow $20. You only need 20 right away, the other 3 friends needed the 20 right away. You have no knowledge of how much the friends borrowed, but there is $0 left to borrow. You think you can have another $20, but when you ask for it, it is not there. So someone has to pay back $20 before you can borrow the $40. You have no knowledge of anyone else's borrowing habit or if they can pay it back, so the only thing you can do is pay back your $20 and borrow another. But you might not be able to do that. You may need to pay back $5 and borrow $5 and repeat until you get done with the $40 and can pay the whole thing back. SQL though, once it has the money never pays it back until you restart the service. If it can't get more, it will shuffle around the memory it has (free some plans from cache for example) to try to get enough so it can do what it needs to do. If it cannot get enough, you get errors. In the above example, you have told SQL it can have 6 GB of memory out of 8 GB and the OS now has 2 GB to use on everything else which may (likely IS) too low based on what you have said so far.

This is also assuming the problem you saw is with memory pressure. It could be something else (such as tempdb not having enough disk space to grow). The numbers you posted for TempDB size in the original post is the TOTAL tempDB size. So that 1360 MB free could be all in the data files, or could be a combination of data file and log. Do you have enough free space in tempdb log? I would hope you do, but would be worth checking out.

Do you have enough disk space left for tempDB (and other databases) to grow? do you have auto-grow turn on? do you have free space in your user database you are running dbcc checkdb on? do you have enough free space in the log of the database you are running dbcc checkdb on? Do you get the same error when NOT using Ola's script to run DBCC?

Do you have other things installed on that server that you can migrate to another server or that don't need to be installed on it? I am referring to any other applications including but not limited to SSIS, SSRS, Office, active directory, exchange, SSAS? Or an even more scary thought - is this a server that end users connect to directly (RDP)?

Is your antivirus on that server configured to not scan the database files?

Is your power settings set to "high performance"?

One of the first things I generally recommend (which a lot of people disagree with) is to stop using Ola's scripts; write your own. Ola's scripts are good if you need a "quick fix" to a system that has no backup and maintenance strategy, and they are good scripts when they work. But I generally find those "all-in-one" scripts end up being more headache than it is worth. Especially in an enterprise environment. Ola's scripts have "community support". If they fail, you are jumping on a forum like this hoping that a random stranger will take time to reply and help. His script is very lengthy, which isn't a bad thing if you understand it all, but I know I cannot support his scripts in my environment if it fails on me. On top of that, it has a lot of features I will never use. For example, at my workplace we have RedGate SQL Backup. I have no need for a script to contain native backup or Idera's backup solution or Quest or any other 3rd party backup solution. If I use Ola' script, it has scripts for all of the tools baked into it. If I write my own, I can tailor it for my needs. I may have tables where I know index fragmentation is expected and I can build up the maintenance scripts to ignore that tables fragmentation (not a good idea, but I could).

Plus if you write your own, you can have short, specific scripts that are easy to do repeated tests on. For example, with Ola's script, if there was a typo in it (there isn't as far as I am aware) that caused backups to run when you only wanted integrity checks, you submit the bug to Ola and when he has time, he updates his script to fix it. This could be hours, days, weeks, months or never if he decides to abandon it. If it is your script, and you wrote them to be specific, you KNOW your integrity check will only do an integrity check and will never accidentally do a backup at the same time unless you ran the backup script first (which I generally do).

I know this post is long, but I think I make some good points. You do not have to follow all of the above advice. I do not know your environment (servers, network, SAN, workstations, etc), so my advice may be wrong in your scenario. My advice is biased towards my work environment and past experiences. I wrote my own backup and maintenance scripts after hitting some limitations of the existing maintenance plans. The previous DBA's liked GUI's and thus liked maintenance plans. Drag and drop database backup and maintenance seemed nice and easy. Worked good enough for quite some time, but eventually I became the sole DBA, so I started looking into the limitations of maintenance plans which led me to Ola's script. But when I opened it and tried to read it, I realized that I could not adequately support it in an emergency so I took to working on my own backup and maintenance scripts. My opinion with SQL scripts is if I cannot support it when it fails, it doesn't go on the production server. This mentality has resulted in our developers (myself included) writing shorter stored procedures and if it needs to be long, we are getting better at commenting it. I am cautious about running any code I randomly find on the internet if I don't understand what it is doing. This applies to SQL as well as any other scripts I find online (bat file, powershell, shell scripts, vbs).

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum - you shouldn't blindly follow it. Always test on a test server to see if there is negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system. -

May 5, 2020 at 10:35 pm

Thanks Brian for your detailed information with very good example.

I understood that i need to add more memory as increasing MAX memory could create problem as OS and application have combined only 2 MB left.

I am also thinking to apply the service pack which might help.

For TEMPDB, You asked "Do you have enough disk space left for tempDB (and other databases) to grow? " as i mentioned about 2 GB total space and my job running mid night so there are no other processes running but how i can tell enough disk space left?

We have Auto Grow set up and by MB.

No other applications like SSIS, SSRS, Office, active directory, exchange, SSAS.

We have enough disk space on the drive.

Thank You!

Viewing 6 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply