Cloud Performance Considerations

-

October 27, 2025 at 7:04 pm

How important to SQL Server are the speed of the SSD and network latency?

Essentially, how does a cloud SSD at 125 MB/sec compare to a Gen 5 SSD that measures 14,000 MB/sec? Even if we max out Premium SSD v2 at 1,250 MB/sec, it's still less than 10% of that.

Also, how does a SQL Server running on the same physical server as an app server compare to VMs that may be in the same zone but are not necessarily on the same machine?

-

October 28, 2025 at 7:10 pm

Thanks for posting your issue and hopefully someone will answer soon.

This is an automated bump to increase visibility of your question.

-

October 29, 2025 at 6:45 pm

The speed of the SSD and network latency will determine the maximum performance of the SQL instance. My understanding is that if the network latency is 1 second, the SSD speed is 125 MB/sec, and you are pulling 125 MB of data out of SQL, it will take 3 seconds to complete (assuming nothing else is touching the disk): 1 second for the request due to network latency, 1 second to get the data, and 1 second of network latency to return the data.
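The back-of-envelope model above can be written as a tiny function. This is a minimal sketch assuming each leg (request latency, disk read at a constant throughput, response latency) happens strictly one after the other with no caching, overlap, or contention; `naive_query_time` is a hypothetical name used only for illustration.

```python
def naive_query_time(payload_mb: float, disk_mb_per_s: float, one_way_latency_s: float) -> float:
    """Estimate end-to-end time for one request under a purely serial model.

    Assumption: the request travels to the server, the data is read from
    disk at a fixed throughput, then the result travels back. Network
    transfer time for the payload itself is not modelled.
    """
    request = one_way_latency_s              # request reaches the server
    transfer = payload_mb / disk_mb_per_s    # read the data off the disk
    response = one_way_latency_s             # result returns to the client
    return request + transfer + response


# The example from the post: 125 MB at 125 MB/sec with 1 second of latency.
print(naive_query_time(125, 125, 1.0))  # 3.0
```

Real systems overlap these stages and latency is usually milliseconds, so treat this only as a way to see which term dominates.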

As for the speed of the cloud SSD at 125 MB/sec compared to the Gen 5 SSD at 14,000 MB/sec, the Gen 5 drive can read data about 112 times faster (14,000 / 125), assuming nothing else is using the disk.
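A quick check of the ratios between the throughput figures quoted in the question. This only compares the headline sequential MB/sec numbers; it says nothing about latency or IOPS, which can matter more for SQL Server workloads.

```python
GEN5_MB_S = 14_000          # Gen 5 SSD figure from the question
CLOUD_DEFAULT_MB_S = 125    # default cloud SSD figure from the question
CLOUD_MAX_MB_S = 1_250      # Premium SSD v2 ceiling quoted in the question


def speedup(fast: float, slow: float) -> float:
    """How many times faster the first throughput figure is than the second."""
    return fast / slow


print(round(speedup(GEN5_MB_S, CLOUD_DEFAULT_MB_S)))  # 112
print(round(100 * CLOUD_MAX_MB_S / GEN5_MB_S, 1))     # 8.9 (percent)
```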

It really depends on your system needs. It also depends on whether the quoted speeds are for reads, writes, or both. If you are building an OLAP server, you want fast reads. If you are building an OLTP server, you want fast writes. If it is a mix, then you need to find the sweet spot.

As for SQL running on the same physical server as the app vs. on different VMs and potentially different machines: every extra layer you put in adds network latency. If the app and database are on the same machine, you remove the network bottleneck. That being said, if the network is not a bottleneck (i.e., you have a 1 Gbps connection and you are maxing out at 10 Mbps), you will notice basically no difference having them on different VMs.

In the end, it REALLY falls under "it depends". A fast SSD behind a slow network means you'll have a bottleneck on the network side. For example, if the SSD can deliver 14,000 MB/sec (about 112 Gbps) AND it is on a SAN with a 10 Gbps connection, the fastest you will read or write to the disk is 10 Gbps (about 1,250 MB/sec). If the network is 10 Gbps and the disk is 125 MB/sec, the fastest you can read or write to the disk is 125 MB/sec.
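The "slowest link wins" rule can be made explicit by converting everything to one unit and taking the minimum. A minimal sketch, assuming decimal units (1 GB = 1,000 MB, 8 bits per byte) and ignoring protocol overhead; the function names are hypothetical.

```python
def mb_s_to_gbps(mb_per_s: float) -> float:
    """Megabytes per second to gigabits per second (decimal units)."""
    return mb_per_s * 8 / 1000


def gbps_to_mb_s(gbps: float) -> float:
    """Gigabits per second to megabytes per second (decimal units)."""
    return gbps * 1000 / 8


def effective_throughput_mb_s(*stage_limits_mb_s: float) -> float:
    """In a serial I/O path, the slowest stage caps the whole path."""
    return min(stage_limits_mb_s)


print(mb_s_to_gbps(14_000))                                  # 112.0
# Fast SSD behind a 10 Gbps SAN link: the link is the limit.
print(effective_throughput_mb_s(14_000, gbps_to_mb_s(10)))   # 1250.0
# 125 MB/sec disk behind the same link: the disk is the limit.
print(effective_throughput_mb_s(125, gbps_to_mb_s(10)))      # 125
```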

The first step with any project like this is to determine your current resource usage (CPU, memory, disk, network), then add overhead for growth; that's what you need. As for what will help performance, the faster the resources, the faster the SQL instance. As for VMs, it really depends on the network connection between them. If you set up a 10 Mbps NIC on your VM, then things will be slow.
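The sizing step above (measured usage plus growth overhead) is simple arithmetic. This sketch assumes you already have peak figures from your own monitoring; the resource names, the numbers, and the 50% headroom are all hypothetical placeholders, not recommendations.

```python
def required_capacity(current_peak: float, growth_headroom: float) -> float:
    """Current measured peak plus a growth margin (0.5 means 50%)."""
    if growth_headroom < 0:
        raise ValueError("headroom must be non-negative")
    return current_peak * (1 + growth_headroom)


# Hypothetical measured peaks; replace with your own monitoring data.
measured = {"cpu_vcores": 4, "memory_gb": 32, "disk_mb_s": 200, "network_mbps": 300}
plan = {name: required_capacity(value, 0.5) for name, value in measured.items()}
print(plan)  # {'cpu_vcores': 6.0, 'memory_gb': 48.0, 'disk_mb_s': 300.0, 'network_mbps': 450.0}
```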

One risk with having the database and app on the same server is that you are sharing resources. So if the server has 128 GB of RAM (for example), that NEEDS to be shared with the application AND the database and potentially even with the user sessions if they RDP in.

The above is all just my opinion on what you should do.

As with all advice you find on a random internet forum, you shouldn't blindly follow it. Always test on a test server to see if there are negative side effects before making changes to live!

I recommend you NEVER run "random code" you found online on any system you care about UNLESS you understand and can verify the code OR you don't care if the code trashes your system.

-

October 30, 2025 at 12:18 am

We are coming from on-premise with devices that are middle-spec. HP G9 or 10s. We have 3 servers - we only use 4vCPUs for our SQL Server.

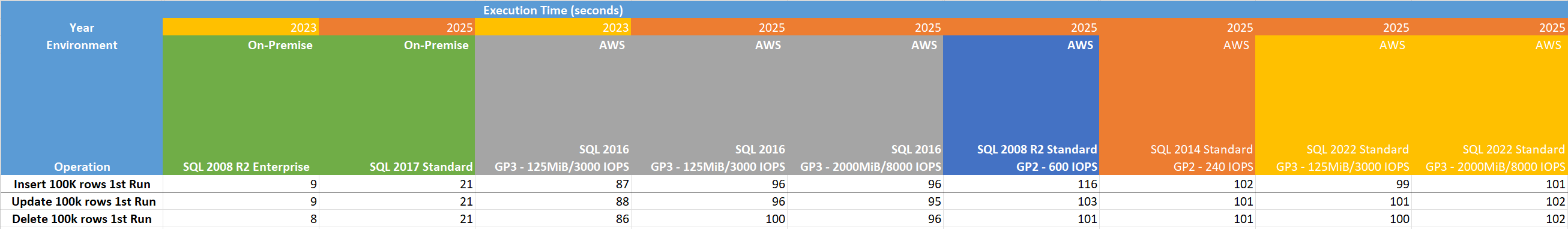

We are coming from on-premise with devices that are middle-spec. HP G9 or 10s. We have 3 servers - we only use 4vCPUs for our SQL Server.Attached is an image of testing using 4 AWS instances vs 2 of our on-premise instances.

- AWS M4-xlarge (SQL Server 2008 R2)

- AWS M5-xlarge (SQL Server 2016 Standard)

- AWS M4-large (SQL Server 2014)

- AWS R6-2Xlarge (SQL Server 2022)

Note the metronomic performance of AWS. On-premises is 5-10 times faster than any AWS setup.

On AWS, whether it's GP2 with 240 IOPS, GP2 with 600 IOPS, GP3 with 125 MiB/s and 3,000 IOPS, or GP3 with 2,000 MiB/s and 8,000 IOPS, the times are identical regardless of SQL Server version. How can the speed of the drive make no performance difference whatsoever?
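One factor that may be worth ruling out (an assumption, not a diagnosis): gp2 volumes get a baseline of 3 IOPS per GiB (minimum 100, maximum 16,000), and volumes smaller than 1,000 GiB can burst to 3,000 IOPS while they still have burst credits. A short test can therefore run at 3,000 IOPS on a "240 IOPS" gp2 volume. The 80 GiB and 200 GiB sizes below are inferred from 240 / 3 and 600 / 3; they are not stated in the post.

```python
GP2_IOPS_PER_GIB = 3
GP2_MIN_IOPS = 100
GP2_MAX_IOPS = 16_000
GP2_BURST_IOPS = 3_000            # burst ceiling for smaller gp2 volumes
GP2_BURST_SIZE_LIMIT_GIB = 1_000  # volumes below this size can burst


def gp2_baseline_iops(size_gib: int) -> int:
    """Sustained gp2 IOPS: 3 per GiB, clamped to the min/max."""
    return max(GP2_MIN_IOPS, min(GP2_MAX_IOPS, GP2_IOPS_PER_GIB * size_gib))


def gp2_peak_iops(size_gib: int, has_burst_credits: bool) -> int:
    """IOPS available right now, including burst if credits remain."""
    baseline = gp2_baseline_iops(size_gib)
    if has_burst_credits and size_gib < GP2_BURST_SIZE_LIMIT_GIB:
        return max(baseline, GP2_BURST_IOPS)
    return baseline


for size in (80, 200):  # sizes inferred from 240 and 600 IOPS
    print(size, gp2_baseline_iops(size), gp2_peak_iops(size, True))
# 80 240 3000
# 200 600 3000
```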

Also of note is the performance degradation across the board from 2023 to today without any changes - just AWS taking away performance without notice.

I am running DML from SSMS on the server against a table (without any indexes) with 100,000 rows, since the clouds are not really capable of high row counts and even 100,000 rows tests my patience. I should have done 10,000 rows 🙂

Tomorrow I'll test 80,000 IOPS, but if going from 240 to 3,000 IOPS or from 3,000 to 8,000 IOPS has no effect, then I'm pretty sure 80,000 won't do anything.

It should be noted that AWS GP3's 2,000 MiB/s is much higher than Azure's top 1,250 MB/s for Premium SSD v2, which is also machine dependent.

- This reply was modified 3 months, 3 weeks ago by MichaelT.

-

October 30, 2025 at 8:44 pm

Passmark Disk scores:

- 125 MiB/s, 3,000 IOPS (default for GP3): 1,100

- 2,000 MiB/s, 8,000 IOPS (max MiB/s, lowest IOPS): 8,491

- 2,000 MiB/s, 37,500 IOPS (max MiB/s, max IOPS for a 75 GB drive): 9,775

So Passmark is detecting an improvement, though not at a 1:1 ratio: going from 125 MiB/s to 2,000 MiB/s, the score jumped by nearly 8x.
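A quick check of the ratios quoted in this post, using the three Passmark scores listed above. Note that the first step changes both throughput (125 to 2,000 MiB/s) and IOPS (3,000 to 8,000), so it does not isolate either setting.

```python
# Passmark Disk scores from the post, keyed by (MiB/s, IOPS).
scores = {
    (125, 3_000): 1_100,
    (2_000, 8_000): 8_491,
    (2_000, 37_500): 9_775,
}

# Throughput raised 16x (and IOPS 3,000 -> 8,000).
throughput_gain = scores[(2_000, 8_000)] / scores[(125, 3_000)]
# IOPS raised at a fixed 2,000 MiB/s.
iops_gain_pct = (scores[(2_000, 37_500)] / scores[(2_000, 8_000)] - 1) * 100
provisioned_ratio = 2_000 / 125

print(f"score gain {throughput_gain:.2f}x vs provisioned {provisioned_ratio:.0f}x")
print(f"IOPS step gain {iops_gain_pct:.1f}%")
# score gain 7.72x vs provisioned 16x
# IOPS step gain 15.1%
```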

And the increase from 8,000 IOPS to 37,500 IOPS yielded a 15% improvement. These were the min and max IOPS for the 75 GB drive I used for test purposes, so I couldn't test 80,000, which is the absolute max for larger drives.
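The 8,000 and 37,500 bounds line up with two gp3 provisioning ratios, which this sketch assumes rather than quotes from the post: at most 500 IOPS per GiB of volume size, and at most 0.25 MiB/s of throughput per provisioned IOPS. The 80,000 IOPS and 2,000 MiB/s caps are the figures quoted in this thread; the function names are hypothetical.

```python
import math

GP3_BASELINE_IOPS = 3_000     # gp3 default IOPS
GP3_MAX_IOPS = 80_000         # absolute cap quoted in the thread
GP3_MAX_MIB_S = 2_000         # throughput cap quoted in the thread
GP3_IOPS_PER_GIB = 500        # assumed size-based IOPS ratio
GP3_MIB_S_PER_IOPS = 0.25     # assumed throughput-to-IOPS ratio


def gp3_max_iops(size_gib: int) -> int:
    """Highest IOPS you can provision for a volume of this size."""
    return min(GP3_MAX_IOPS, GP3_IOPS_PER_GIB * size_gib)


def gp3_min_iops_for_throughput(mib_s: float) -> int:
    """Lowest IOPS that still allows the requested throughput."""
    return max(GP3_BASELINE_IOPS, math.ceil(mib_s / GP3_MIB_S_PER_IOPS))


print(gp3_max_iops(75))                            # 37500
print(gp3_min_iops_for_throughput(GP3_MAX_MIB_S))  # 8000
print(gp3_max_iops(160))                           # 80000
```

Under these assumptions, a volume would need to be at least 160 GiB to provision the full 80,000 IOPS.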

I guess the million-dollar question is: how does AWS separate normal IO from database IO and throttle it, and why is that the case?

As expected, in the SQL Server test the IOPS increase from 8,000 to a whopping 37,500 yielded no discernible improvement, other than possibly 1-2 seconds out of 100.

