BULK INSERT question

-

June 29, 2025 at 11:27 pm

Hi everyone

I have a text file that I want to import into SS table. I can import the file but the problem is that order isn't preserved. Suppose the file has below entries:

Line1

Line2

Line3

When the above gets imported into SS table it is presenting them as

Line2

Line3

Line1

The order isn't preserved. I can't do sort on the table because the order of the lines in the text file don't follow any logical order that allows sorting. For example, line1 could be "DEF" and line2 could be "ABC". I can get line1 and line2 to import but once in the table I have no way of knowing what the original order was ("DEF" is first and "ABC" is second).

My proposed solution is to have a LOG_ID field that auto-increments each time a row is added and then instruct BULK INSERT to only load into LOG_ENTRY column. This isn't working. What can I do to fix it?

Table:

CREATE TABLE [dbo].[EventLog](

[LOG_ID] [int] IDENTITY(1,1) NOT NULL,

[LOG_ENTRY] [varchar](max) NULL

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

GOSP:

/****** Object: StoredProcedure [dbo].[WIP_UpdateEventLogTable] Script Date: 2025-06-29 4:30:48 PM ******/SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

ALTER PROCEDURE [dbo].[WIP_UpdateEventLogTable]

AS

DECLARE @FILEPATH VARCHAR(200)

SET @FILEPATH = 'C:\Documents\Log.txt'

EXEC ( 'BULK INSERT dbo.EventLog.Log_Entry

FROM ''' +

@FILEPATH +

''' WITH (FIRSTROW = 2, FIELDTERMINATOR = '','', ROWTERMINATOR = ''\n'', MAXERRORS = 0);'

)Error:

Msg 208, Level 16, State 82, Line 3

Invalid object name 'dbo.EventLog.Log_Entry'.

(1 row affected)

Completion time: 2025-06-29T16:32:53.7176295-07:00Thank you

-

June 30, 2025 at 1:43 am

Not sure what is going on here. I posted a reply twice but it never got posted. I will try again. Sorry for any spam.

I did some research and it looks like the above isn't really doable. If I am wrong please correct me.

Looks like the way to get this to work is with a FORMATFILE option. I created the file but when I try to use it I am getting an error:

Msg 4864, Level 16, State 1, Line 16

Bulk load data conversion error (type mismatch or invalid character for the specified codepage) for row 2, column 1 (LOG_ID).

Msg 7399, Level 16, State 1, Line 16

The OLE DB provider "BULK" for linked server "(null)" reported an error. The provider did not give any information about the error.

Msg 7330, Level 16, State 2, Line 16

Cannot fetch a row from OLE DB provider "BULK" for linked server "(null)".

Completion time: 2025-06-29T18:31:05.2699659-07:00Here is my code to run BULK INSERT:

DROP TABLE IF EXISTS #EventLog

CREATE TABLE #EventLog

(

[LOG_ID] [int] IDENTITY(1,1) NOT NULL,

[LOG_ENTRY] [varchar](max) NULL

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

BULK INSERT #EventLog

FROM 'C:\Users\Documents\Log.txt'

WITH ( FIRSTROW = 2,

FIELDTERMINATOR = '',

ROWTERMINATOR = '\r\n',

MAXERRORS = 0,

FORMATFILE = 'C:\Users\Format_File\EventLog.xml'

)

select*

from#EventLog -

June 30, 2025 at 1:45 am

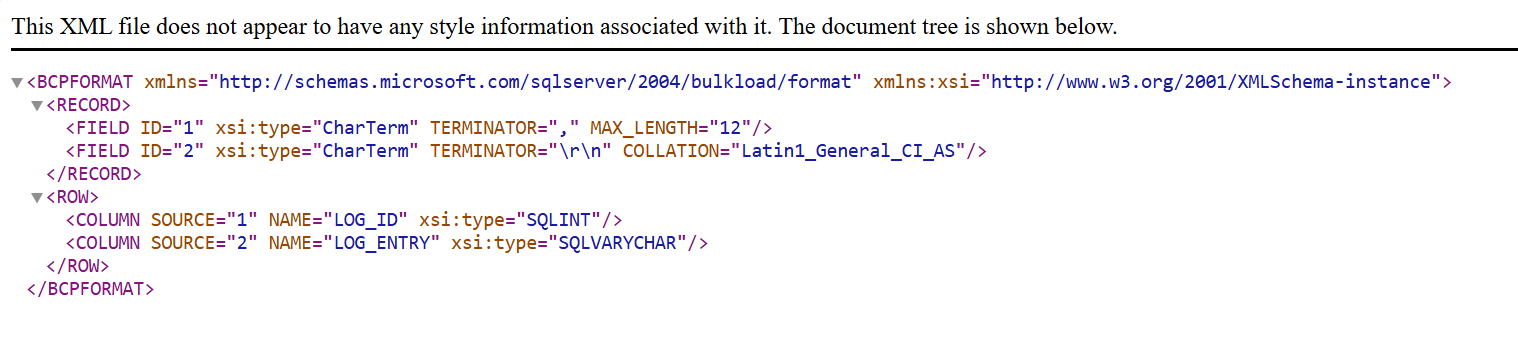

it won't let me post the formatfile so here is a picture of it:

-

June 30, 2025 at 6:49 am

Why not just add a number to each line before importing? With PowerShell:

Get-Content -Path "Original.txt" |

ForEach-Object { "$($_.ReadCount),"$_"" } |

Set-Content -Path "OriginalwithNumbers.csv"ps back-tick " to get an embeded double quote does not display here.

This is a tilde separated version:

Get-Content -Path "Original.txt" |

ForEach-Object { "$($_.ReadCount)~ $_" } |

Set-Content -Path "OriginalwithNumbers.csv"- This reply was modified 8 months, 1 weeks ago by Ken McKelvey.

- This reply was modified 8 months, 1 weeks ago by Ken McKelvey.

- This reply was modified 8 months, 1 weeks ago by Ken McKelvey.

-

June 30, 2025 at 7:30 am

create a view over that table without the identity column - then insert into the view instead of the table and see if result is what you wish for.

Ken solution is guaranteed to work but may have issues with huge files.

-

June 30, 2025 at 6:17 pm

Thank you both. The line numbers got added. I ran the BULK INSERT code and the entire text file got added to one row vs separate rows. I don't think SS can figure out the end of line characters. What could be the issue and what would be a fix?

Code:

DROP TABLE IF EXISTS #EventLog

CREATE TABLE #EventLog

(

[LOG_ENTRY] [varchar](max) NULL

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

BULK INSERT #EventLog

FROM 'C:\Users\Documents\Logs\IB Log Lined.txt'

WITH (

-- FIRSTROW = 2,

FIELDTERMINATOR = '',

ROWTERMINATOR = '\r\n',

MAXERRORS = 0

)

select*

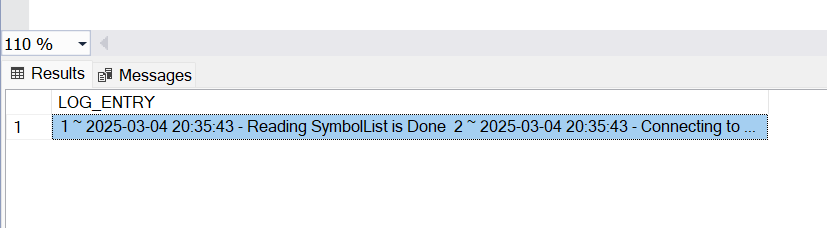

from #EventLogScreenshot:

Notice the ~ which is supposed to be on each line but in SS they are all combined:

- This reply was modified 8 months, 1 weeks ago by water490.

-

June 30, 2025 at 6:25 pm

using a hex editor see what is the true row delimiter.

\r\n is one, but it can also be \r or \n on their own. (you can also try each one individually)

-

June 30, 2025 at 9:21 pm

thank you both. the code is working great now.

-

June 30, 2025 at 9:39 pm

and working well with what solution?

Viewing 10 posts - 1 through 10 (of 10 total)

You must be logged in to reply to this topic. Login to reply