The first thing I did was search online to see whether anyone else had already shared their pain points and possible solutions for using Durable Functions in Azure Data Factory:

The first two ADF posts gave me some confidence that Durable Functions could be used in ADF. However, they only provided some screenshots, with no code examples and no pattern for passing input to a Durable Function and processing its output at the end, which was critical to my real project use case. I still give credit to both authors for sharing this information. The third post is one of many very detailed and well-written posts about Durable Functions, but none of them contained the information about ADF and the PowerShell code for my Function App that I was looking for. So this was my leap of faith: explore further and possibly create the ADF solution with Durable Functions that I needed.

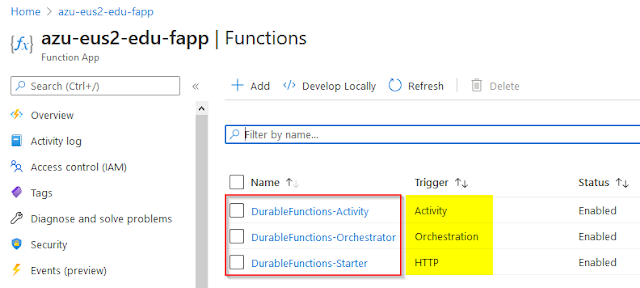

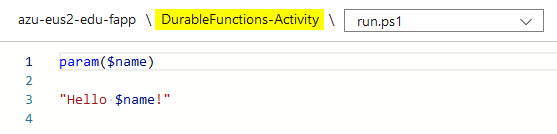

The sample code is a simple solution that outputs the names of several cities:

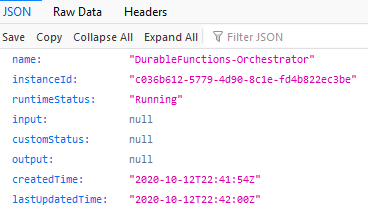

You can even test this whole solution and see the results. First, you need to trigger the Starter function. In a few seconds it will return a JSON output containing several URIs; one of them is the statusQueryGetUri, which reports the current status of the orchestration execution.

You can paste the value of this URI into your web browser to see how your durable function progresses over time.

It all looked good, but this still wasn't my use case. I really needed to be able to pass a JSON request with a parameter value to my Durable Function and have the function's output depend on that input parameter. I needed to change my durable function code, and I hadn't even started working in a data factory environment yet.

Alright, I made the following changes in my proof of concept Durable Function (my real use-case Azure Function business logic is far more complex).

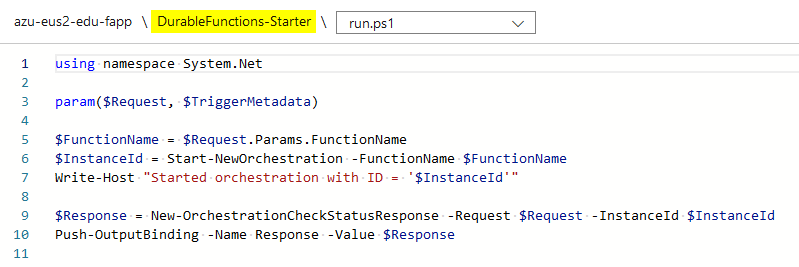

DurableFunctions-Starter

First, I passed the HTTP request as an input parameter to my orchestration function. This $Request also includes a JSON message body that carries all of the incoming parameters.

using namespace System.Net

param($Request, $TriggerMetadata)

$FunctionName = $Request.Params.FunctionName

#$InstanceId = Start-NewOrchestration -FunctionName $FunctionName

$InstanceId = Start-NewOrchestration -FunctionName $FunctionName -Input $Request

Write-Host "Started orchestration with ID = '$InstanceId'"

$Response = New-OrchestrationCheckStatusResponse -Request $Request -InstanceId $InstanceId

Push-OutputBinding -Name Response -Value $Response

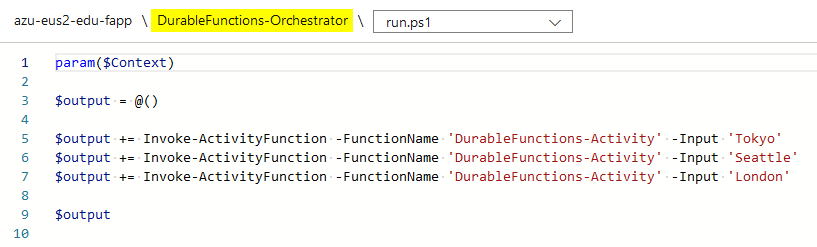

DurableFunctions-Orchestrator

Second, I gave the orchestration function a total makeover, replacing references to hard-coded parameters with the Request body ($Context.Input.Body) that is passed from my initial DurableFunctions-Starter function. It actually took a while to understand how to get to the Input reference from the incoming $Context parameter (time-saving tip 🙂).

using namespace System.Net

param($Context)

$output = @()

$output += Invoke-ActivityFunction -FunctionName 'DurableFunctions-Activity' -Input $Context.Input.Body

$output

DurableFunctions-Activity

The final change took place in the activity function: instead of outputting the incoming $name parameter, it now takes the new $Request parameter (you can keep $name or rename it any way you want; just don't forget to change it in the function bindings, i.e. the supporting function.json file).

The Activity function now does some processing: it takes the timezone element from the incoming $Request parameter and then generates the $response with the current UTC time converted to the requested timezone. Again, this isn't the real project use case that prompted this blog post; I'm just using this proof-of-concept code to show what can be done. The rest of the Azure Function/Durable Function coding is up to us to explore.

using namespace System.Net

param($Request)

$timezone = $Request.timezone

if ($timezone) {

$timelocal = Get-Date

$timeuniversal = $timelocal.ToUniversalTime()

# $timeuniversal is already UTC, so convert it straight to the requested time zone

$converted_time = [System.TimeZoneInfo]::ConvertTimeBySystemTimeZoneId($timeuniversal, $timezone)

Write-Host "Requested TimeZone: $timezone"

Write-Host "Local Time: $timelocal"

Write-Host "UTC Time: $timeuniversal"

Write-Host "Converted Time: $converted_time"

$response = "{""Response"":"""+$converted_time+"""}"

}

else {

$response = "{ ""Response"":""Please pass a request body""}"

}

# Outputting the result back to the Orchestrator

Write-Output $response
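The Activity code above builds its JSON by string concatenation and does not handle a timezone name that the host doesn't recognize. As a rough sketch only (the Convert-UtcToZone helper and its fixed date format are my own assumptions, not part of the original Function App), the same logic could be tried locally in plain PowerShell, letting ConvertTo-Json do the quoting:

```powershell
# Hypothetical helper, not part of the original Function App: converts a UTC
# time to the requested time zone and returns the result as a JSON string.
function Convert-UtcToZone {
    param(
        [string] $TimeZoneId,
        [datetime] $UtcNow = [datetime]::UtcNow
    )

    if ([string]::IsNullOrWhiteSpace($TimeZoneId)) {
        return @{ Response = 'Please pass a request body' } | ConvertTo-Json -Compress
    }

    try {
        # Mark the value as UTC so the two-argument overload converts from UTC.
        $utc = [datetime]::SpecifyKind($UtcNow, [System.DateTimeKind]::Utc)
        $converted = [System.TimeZoneInfo]::ConvertTimeBySystemTimeZoneId($utc, $TimeZoneId)

        # Assumed format; fixed so the text does not depend on the host culture.
        $text = $converted.ToString('yyyy-MM-dd HH:mm:ss', [cultureinfo]::InvariantCulture)
        return @{ Response = $text } | ConvertTo-Json -Compress
    }
    catch [System.TimeZoneNotFoundException] {
        # The host does not know this time zone ID.
        return @{ Response = "Unknown time zone: $TimeZoneId" } | ConvertTo-Json -Compress
    }
}

# Example call; 'UTC' is used here because it is a valid ID on Windows and Linux.
Convert-UtcToZone -TimeZoneId 'UTC'
```

Keeping the conversion in a small function like this makes it easy to check a timezone name before wiring it into the Activity function.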

Finally, whether you're developing your code in Visual Studio Code or directly in the Azure Portal (like me :-), it helps to test its functionality before attempting to use it in Azure Data Factory. Let’s do it.

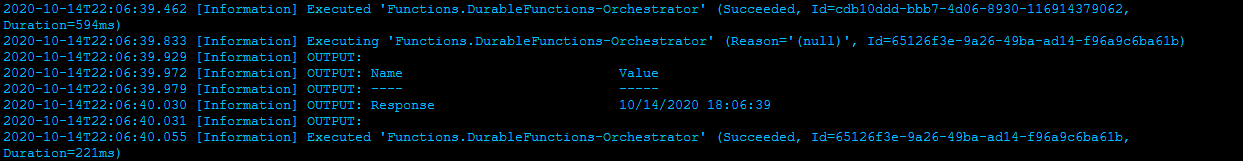

I go to the DurableFunctions-Starter function, pass ‘{"timezone": "Eastern Standard Time"}’ as the JSON request body, and click the Run button.

As a result, a few seconds later I get the following JSON response from my Starter durable function:

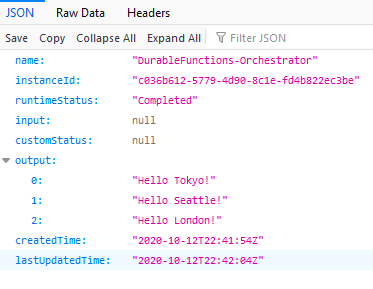

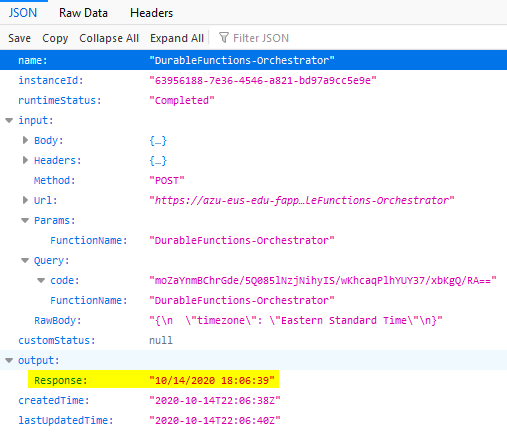

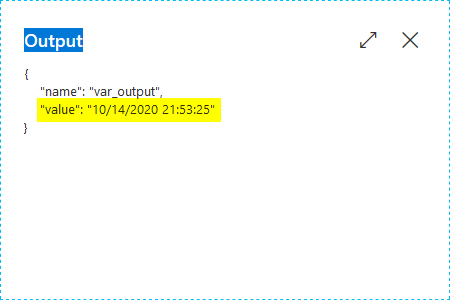

The Orchestration durable function returns the following output with the JSON Response value.

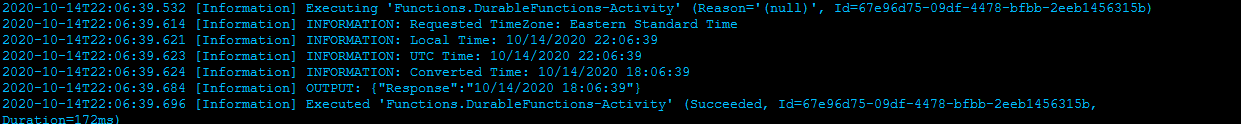

The Activity durable function provided me with more details on how it had processed the incoming "Eastern Standard Time" timezone parameter.

But, more than that, I can now use the highlighted "statusQueryGetUri" and check the status of my Durable function processing along with the returned output result in a browser:

This URI can be used in my data factory workflow logic; more ADF notes are coming...

Working model of an ADF pipeline to execute Azure Durable Functions

This is a working model of how I would create an ADF process to execute an Azure Durable Function and process the function's output.

1) Prepare JSON request body message

2) Trigger a durable function execution

3) Check the status of my durable function execution and move further when the status is “Completed”

4) Process the returned output result if needed

Now, I would like to inject a few more details to explain how it works:

(1) Prepare JSON request body message

This could be a simple Set Variable activity that defines a String variable holding your HTTP JSON message body. In my test case it was a simple text: ‘{"timezone": "Eastern Standard Time"}’.

(2) Trigger a durable function execution

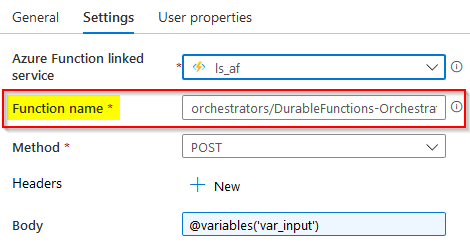

Here comes the interesting part. I thought that to execute a regular Azure Function I could just use its name, which works with no issues. However, a Durable Function is a different thing: referencing my DurableFunctions-Starter by name won’t work, and the call fails with a "Function App is not found" error message. Looking at the existing binding information of my Starter durable function, I can use its route parameter, which I need to construct with the full name of the Durable Function to run (i.e. the Orchestrator, because the Starter durable function can call multiple Orchestrator functions if needed).

So, instead of using DurableFunctions-Starter as the function name to trigger, I had to set it to orchestrators/DurableFunctions-Orchestrator in my ADF Azure Function activity.

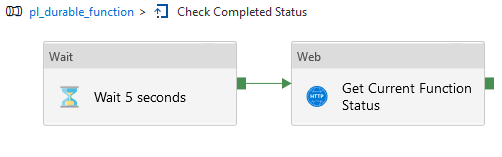
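To check the route outside of ADF, the same call can be approximated from PowerShell. This is only a sketch under a few assumptions: the New-StarterRequest helper and the example.azurewebsites.net host are placeholders of mine, the default /api route prefix is in use, and the Starter's route is orchestrators/{FunctionName} as suggested by the function name used above.

```powershell
# Hypothetical helper: builds the arguments for Invoke-RestMethod.
# The base URL is a placeholder; substitute your own Function App host.
function New-StarterRequest {
    param(
        [string] $BaseUrl,
        [string] $OrchestratorName,
        [hashtable] $Payload
    )

    @{
        # Assumed route of the Starter's HTTP binding: orchestrators/{FunctionName}
        Uri         = '{0}/api/orchestrators/{1}' -f $BaseUrl.TrimEnd('/'), $OrchestratorName
        Method      = 'Post'
        ContentType = 'application/json'
        # Serialize the parameters into the JSON message body.
        Body        = $Payload | ConvertTo-Json -Compress
    }
}

$request = New-StarterRequest -BaseUrl 'https://example.azurewebsites.net' `
    -OrchestratorName 'DurableFunctions-Orchestrator' `
    -Payload @{ timezone = 'Eastern Standard Time' }

$request.Uri
$request.Body
```

With a real host (and an access key, if the function requires one), the hashtable can be splatted as Invoke-RestMethod @request, and the reply should carry the statusQueryGetUri used in the next step.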

(3) Check the status of my durable function

This should be easy, since I already know that the output of my (2) Azure Function call will produce the "statusQueryGetUri", which I can check with a simple Web call. If it's not "Completed" yet, then I can wait a few seconds/minutes and repeat the web call.

The Web activity is configured to execute a GET request against the following URL: @activity('Azure Durable Function call').output.statusQueryGetUri. The statusQueryGetUri value is different every time you execute your Azure Function, so a dynamic setting is very helpful.

This cycle of checking the function call status, waiting, and checking again is repeated until the function is no longer in progress. In my Until activity this is expressed as @not(or(equals(activity('Get Current Function Status').output.runtimeStatus, 'Pending'), equals(activity('Get Current Function Status').output.runtimeStatus, 'Running'))), so the loop ends when the status reaches “Completed”, and also when it reaches any other status that is neither “Pending” nor “Running”.
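The same check-wait-repeat logic can be sketched in PowerShell, which is handy for trying it against a statusQueryGetUri before building the ADF loop. The Wait-Orchestration helper, its parameters, and the attempt limit are my own assumptions; the exit condition mirrors the Until expression (stop once the status is neither Pending nor Running).

```powershell
# Hypothetical helper mirroring the Until loop: polls until the runtime status
# is neither 'Pending' nor 'Running', or until the attempt limit is reached.
function Wait-Orchestration {
    param(
        [string] $StatusUri,
        [int] $DelaySeconds = 5,
        [int] $MaxAttempts = 60,
        # Injectable for testing; by default this performs a real HTTP GET.
        [scriptblock] $GetStatus = { param($uri) Invoke-RestMethod -Uri $uri -Method Get }
    )

    for ($attempt = 1; $attempt -le $MaxAttempts; $attempt++) {
        $status = & $GetStatus $StatusUri
        if ($status.runtimeStatus -notin 'Pending', 'Running') {
            return $status
        }
        Start-Sleep -Seconds $DelaySeconds
    }
    throw "Orchestration still in progress after $MaxAttempts attempts."
}

# Demo with a fake status source, so the sketch runs without a Function App.
$script:calls = 0
$fake = {
    param($uri)
    $script:calls++
    if ($script:calls -lt 3) { [pscustomobject]@{ runtimeStatus = 'Running' } }
    else { [pscustomobject]@{ runtimeStatus = 'Completed' } }
}

$final = Wait-Orchestration -StatusUri 'https://example.invalid/status' -DelaySeconds 0 -GetStatus $fake
$final.runtimeStatus
```

The attempt limit is there so a stuck orchestration cannot keep the loop running forever; in ADF, the Until activity's timeout setting plays a similar role.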

(4) Process the returned output result

As a result of a successful ADF pipeline run, I can extract my Durable Function's output Response value from the output of the "Get Current Function Status" activity: the expression @activity('Get Current Function Status').output.output.Response returns the Response with the converted time based on the initially requested time zone.
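Because the Until condition stops on any status other than Pending or Running, it may be worth confirming that the run actually completed before reading the Response. Here is a small PowerShell sketch of that check; the Get-OrchestrationResponse helper and the sample payload are illustrative assumptions, and the payload shape simply follows the output.Response path used in the ADF expression.

```powershell
# Hypothetical helper: returns the Response value only for a completed run.
function Get-OrchestrationResponse {
    param([Parameter(Mandatory)] $Status)

    if ($Status.runtimeStatus -ne 'Completed') {
        throw "Orchestration ended with status '$($Status.runtimeStatus)'."
    }

    # Same path as the second 'output' and 'Response' in the ADF expression.
    $Status.output.Response
}

# Illustrative payload only; a real one comes from the statusQueryGetUri call.
$sample = [pscustomobject]@{
    runtimeStatus = 'Completed'
    output        = [pscustomobject]@{ Response = '<converted time>' }
}

Get-OrchestrationResponse -Status $sample
```

In a pipeline, the equivalent would be a condition on runtimeStatus placed before the step that consumes the Response.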

Mission accomplished!

Closing notes

I think this has been the longest journey to create a blog post for me so far: a couple of weekends and a few more nights to create a working prototype of durable functions which can be executed in my Azure Data Factory workflow, and I can also pass incoming JSON formatted parameters and process returned results at the end.

I’m happy that it worked in the end. I’m also glad that this leap of faith was worth trying: the struggle with some not-well-documented concepts of durable functions and how they can be incorporated into Azure Data Factory, along with many hours of trial and error, eventually resulted in a successful “green” outcome 🙂

Feel free to share this post and send me your comments if your experience with Durable Functions was different than mine.

Now that I've finished writing this blog post, I will remember this whole journey of using Durable Functions in Azure Data Factory better: long-running Azure Function code can be supported in ADF! Well done, Microsoft, good job! 🙂