(2020-July-29) Microsoft widely advertises Azure Data Factory (ADF) as a code-free environment for building data integration solutions: https://azure.microsoft.com/en-us/resources/videos/microsoft-azure-data-factory-code-free-cloud-data-integration-at-scale/. I agree with and support this approach of using a drag-and-drop visual UI to build and automate data pipelines without writing code. However, out of curiosity, I also want to see whether I can recreate certain ADF operations by writing code.

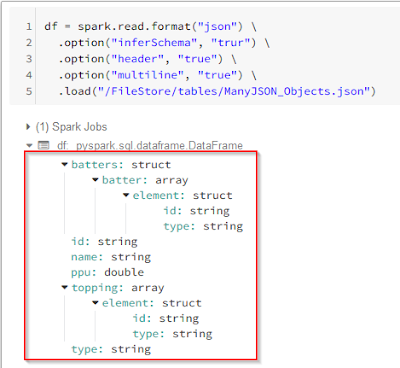

[
  {
    "id": "0001",
    "type": "donut",
    "name": "Cake",
    "ppu": 0.55,
    "batters": {
      "batter": [
        { "id": "1001", "type": "Regular" },
        { "id": "1002", "type": "Chocolate" },
        { "id": "1003", "type": "Blueberry" },
        { "id": "1004", "type": "Devil's Food" }
      ]
    },
    "topping": [
      { "id": "5001", "type": "None" },
      { "id": "5002", "type": "Glazed" },
      { "id": "5005", "type": "Sugar" },
      { "id": "5007", "type": "Powdered Sugar" },
      { "id": "5006", "type": "Chocolate with Sprinkles" },
      { "id": "5003", "type": "Chocolate" },
      { "id": "5004", "type": "Maple" }
    ]
  },
  {
    "id": "0002",
    "type": "donut",
    "name": "Raised",
    "ppu": 0.55,
    "batters": {
      "batter": [
        { "id": "1001", "type": "Regular" }
      ]
    },
    "topping": [
      { "id": "5001", "type": "None" },
      { "id": "5002", "type": "Glazed" },
      { "id": "5005", "type": "Sugar" },
      { "id": "5003", "type": "Chocolate" },
      { "id": "5004", "type": "Maple" }
    ]
  },
  {
    "id": "0003",
    "type": "donut",
    "name": "Old Fashioned",
    "ppu": 0.55,
    "batters": {
      "batter": [
        { "id": "1001", "type": "Regular" },
        { "id": "1002", "type": "Chocolate" }
      ]
    },
    "topping": [
      { "id": "5001", "type": "None" },
      { "id": "5002", "type": "Glazed" },
      { "id": "5003", "type": "Chocolate" },
      { "id": "5004", "type": "Maple" }
    ]
  }
]
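
Before touching ADF, it can help to see the shape of this sample in plain code. Each record carries two nested arrays: one wrapped in an object (`batters.batter`) and one directly on the record (`topping`). The following is only a minimal Python sketch for inspecting that nesting, not an ADF feature. The `summarize` helper and the trimmed `SAMPLE` string (two records, fewer toppings) are my own assumptions for illustration.

```python
import json

# Assumption: a trimmed copy of the sample document (first two donuts,
# fewer batters and toppings) so the snippet stays short and self-contained.
SAMPLE = """
[
  {"id": "0001", "type": "donut", "name": "Cake", "ppu": 0.55,
   "batters": {"batter": [{"id": "1001", "type": "Regular"},
                          {"id": "1002", "type": "Chocolate"}]},
   "topping": [{"id": "5001", "type": "None"},
               {"id": "5002", "type": "Glazed"}]},
  {"id": "0002", "type": "donut", "name": "Raised", "ppu": 0.55,
   "batters": {"batter": [{"id": "1001", "type": "Regular"}]},
   "topping": [{"id": "5001", "type": "None"}]}
]
"""


def summarize(donuts):
    """Hypothetical helper: one summary dict per record with the names of
    its nested batter and topping types."""
    return [
        {
            "name": d["name"],
            # "batters" is an object that wraps the actual array in "batter".
            "batters": [b["type"] for b in d["batters"]["batter"]],
            # "topping" is an array directly on the record.
            "toppings": [t["type"] for t in d["topping"]],
        }
        for d in donuts
    ]


donuts = json.loads(SAMPLE)
for row in summarize(donuts):
    print(row["name"], row["batters"], row["toppings"])
```

The two arrays sit at different depths, which is worth keeping in mind for any operation that has to reach into them.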