(2022-Jun-06) This blog post is a collaborative effort of several people, some of them I currently work with, and others' contributions came from their writing, so it’s more than natural to repeat Isaac Newton’s famous saying, “If I have seen further it is by standing on the shoulders of giants”. Recent reading into his biography introduced an idea that along with a noble concept to acknowledge the support of others, Isaac Newton might have mocked one of his rivals in the scientific field because of another’s person short stature.

Photo by Jean van der Meulen: https://www.pexels.com/photo/grey-concrete-columns-1547706/

Regardless of the actual reason for the scientific mind to formulate such a phrase, let’s get to the meta-driven Azure Data Factory pipelines. Previously, we talked about a rich set of features within a Data Copy activity (Part 1), along with a configuration database and pipeline framework that will support flexible Data Copy based data flow for various datasets (Part 2).

A journey between sourcing and target dataset goes through several linking points: first, they require data connector definitions (aka “linked services” in Azure Data Factory), then comes another very important step that can define a relationship between datasets’ attributes/columns. This is called mapping.

Picture this as a marriage counsellor for a data copy activity that guides both parties (source & target) in their marriage process. Azure Data Factory supports Default or Explicit mappings. Default mapping requires columns between both sides to be the same and no formal “marriage”/mapping agreement is required. Explicit mapping, on the other hand, allows defining such formal agreement where data types can be explicitly changed to a certain extent as well as column selection process provides more flexibility for the final target data transformation.

The use-case of being very picky/selective about which column to choose in your data flow might be very handy in your data warehouse solution. And here lies a problem when something is explicitly mapped: it is like written in stone, with each column-to-column stream being clearly defined.

But, the Microsoft product team is a group of smart people and they introduced parameterized column mapping (now you see, why this is a very valid topic for Part 3 of this blog series). This parameterized mapping allows JSON-formated values to define a complete list of column pairs between source and destination datasets.

For example, mapping between 3 columns may look like this:

{

"type":"TabularTranslator",

"mappings":[

{

"source":{

"name":"columnA"

},

"sink":{

"name":"columnA"

}

},

{

"source":{

"name":"columnB"

},

"sink":{

"name":"columnB"

}

},

{

"source":{

"name":"columnC"

},

"sink":{

"name":"columnC"

}

}

]

}If we can dynamically set this parameter value, then we create a process to formulate its value programmatically using external metadata.

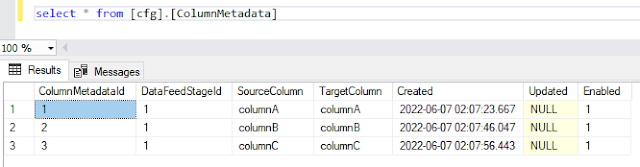

If we formulate this value programmatically using external metadata, then we can define an external column mapping table to host this data.

I used one of the examples of T-SQL stored procedures that can generate JSON output based on the column mapping table from this blog post (https://sqlitybi.com/dynamically-set-copy-activity-mappings-in-azure-data-factory-v2/) and slightly adjusted it.

CREATE PROCEDURE [cfg].[GetColumnMapping ]

@DataFeedStageId int

AS

BEGIN

DECLARE @json_construct varchar(MAX) = '{"type": "TabularTranslator", "mappings": {X}}';

DECLARE @json VARCHAR(MAX);

SET @json = (

SELECT

c.[SourceColumn] AS 'source.name',

c.[TargetColumn] AS 'sink.name'

FROM [cfg].[ColumnMetadata] as c

WHERE c.DataFeedStageId = @DataFeedStageId

FOR JSON PATH );

SELECT REPLACE(@json_construct,'{X}', @json) AS json_output;

ENDThis stored procedure allows me to generate the JSON output I need to pass to my Data Copy mapping parameterized value:

Working on another project, I was late to know about some of the T-SQL improvements that can simplify a table column(s) conversation into a single varchar string, starting from the SQL Server 2016. There is a very good STRING_AGG T-SQL function that can be used for this, so my initial stored procedure was adjusted this way:

CREATE PROCEDURE [cfg].[GetColumnMapping ]

@DataFeedStageId int

AS

BEGIN

DECLARE @json_construct varchar(MAX) = '{"type": "TabularTranslator", "mappings": [{X}]}';

DECLARE @json VARCHAR(MAX);

SET @json = (

SELECT STRING_AGG('{"source":{"name":"' + c.[SourceColumn] + '"},"sink":{"name":"' + c.[TargetColumn] + '"}}', ',')

FROM [cfg].[ColumnMetadata] as c

WHERE c.DataFeedStageId = @DataFeedStageId

);

SELECT REPLACE(@json_construct,'{X}', @json) AS json_output;

END

GOIn both cases, the generated JSON output was the same, and I could pass it to the parameterized data factory mapping of my data copy activity, which I could reuse for different datasets. Another goal for metadata-driven pipelines in Azure Data Factory was achieved!