Here’s an interesting issue that recently came up. We were seeing very high compilations and recompilations on a server to the point that it started causing us some very serious issues (admittedly this wasn’t the sole issue but it was certainly a contributing factor, the other factors were also very interesting so I might look at those in another post).

After looking in the plan cache we could see a very high number of single use plans for a particular stored procedure. Now as you probably know, SQL will usually cache an execution plan and use it over and over whenever a particular query runs. SQL’s lazy and it doesn’t want to bother compiling queries unless it really has to.

So what was going on, why wasn’t SQL able to reuse the cached plan? My first thought was that the proc used OPTION (RECOMPILE), but in this case it wasn’t.

The culprit turned out to be a temp table. But before we look at the specific issue that I was facing, let’s take a look at the following stored proc. Yes, I know it’s totally contrived but it’ll serve the purpose for this demo.

Using an Internally Declared Temp Table

--internal temp table

CREATE PROC TempTableTest1

AS

BEGIN

CREATE TABLE #temptable

(col1 INT NOT NULL)

INSERT INTO #temptable VALUES(1)

SELECT *

FROM #temptable

JOIN sys.databases ON col1 = database_id

END

GO

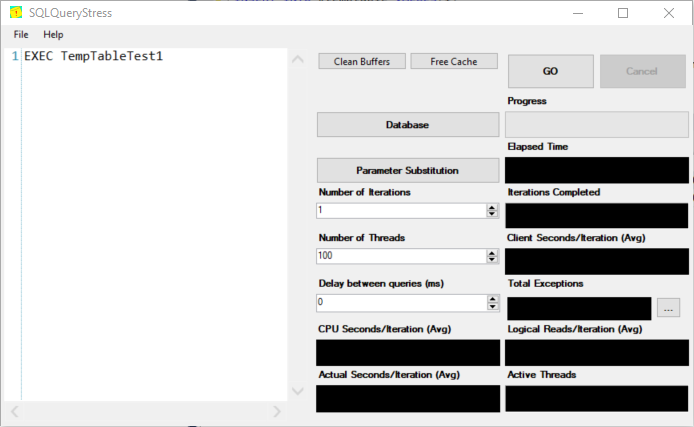

Now we’ve created that, let’s simulate a bunch of users all running it at the same time. I’m going to spark up SQL Query Stress to do this. If you haven’t seen Query Stress, you can download it from here.

Now that’s run, let’s check out our plan cache…

SELECT creation_time, statement_start_offset, statement_end_offset, execution_count, text

FROM sys.dm_exec_query_stats

CROSS APPLY sys.dm_exec_sql_text(sql_handle)

WHERE sql_handle = 0x030018005EBD931242EFA700C6AD000001000000000000000000000000000000000000000000000000000000

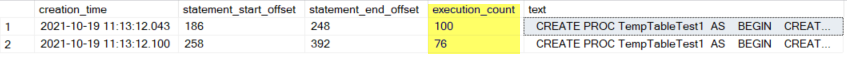

Great, so we can clearly see that SQL’s able to reuse that execution plan absolutely fine. Temp tables in stored procs themselves clearly aren’t a problem.

Now take a look at the following proc, this is similar to the one that gave us such a headache.

Using an Externally Declared Temp Table

--external temp table

CREATE PROC TempTableTest2

AS

BEGIN

SELECT *

FROM #temptable

JOIN sys.databases ON col1 = database_id

END

GO

What’s the difference here?

The big difference is that the temp table is declared outside of the stored procedure and then accessed from within. Let’s run that same test with the 100 simulated users for this. We’ll use the same temp table definition as previously but this time we’re going to need to create and populate it before we execute the stored proc.

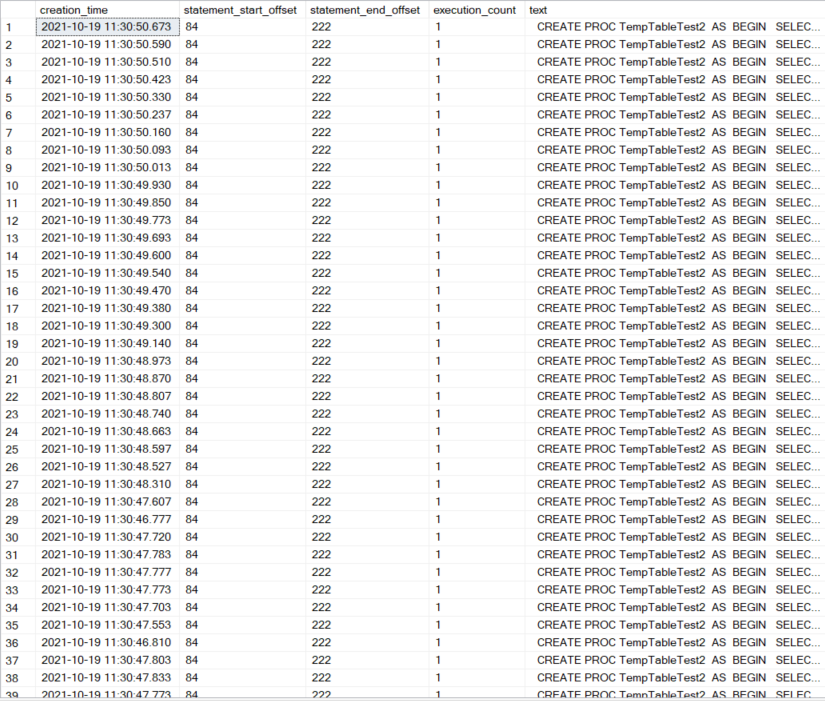

Now let’s check that cache again…

Whoa there! Now that’s looking very different isn’t it?! This time, instead of SQL reusing the same plan each time the proc runs it has had to compile a whole new plan even though it’s executing what is essentially the same code.

So what’s going on here?

The answer lies with the fact that you’re using a temp table which has been defined externally to the proc.

SQL has no way of knowing if the schema of the temp table is the same in each run. Imagine what would happen if a user decided to run the proc after declaring the temp table with two or more columns (and it’d be totally valid for them to do so). Any execution plans that were generated for our example of one column wouldn’t work.

Because of this, SQL can’t trust the plans that it’s got cached so is forced to compile a new plan every time it’s executed.

But What’s the Impact?

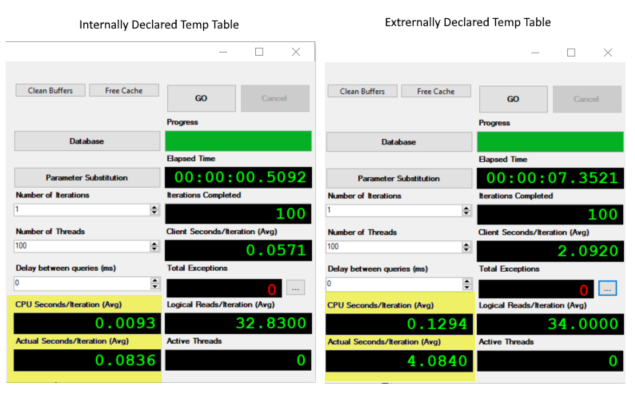

The next question that I’ve got is does this really matter to us? Is it really going to cause us a problem? Let’s check out the average CPU time for them both (BTW, SQL Query Stress is great here because it gives us some really useful information).

Check out the CPU Seconds/Iteration!

That’s the overhead due to the compilations and it’s a massive difference. If this happens to be something that is getting run constantly, very regularly or perhaps it happens to be a very complex query taking up more compile resource I think you can see how this could start to hurt your performance.

Another side effect of this is that it could also cause your cache to become bloated, this can force other plans out of cache with the knock on effect that those queries that have had their plans forced out of cache will now also have to be recompiled.

So What Can We Do About It?

If this is something that’s giving you pain, there are a couple of things that you could do to fix it.

You could create a physical table and use that rather than a temp table, doing that SQL will know what the schema of the table is and will be able to reuse the plan.

That’s got its own potential pit fall and could cause you a problem if you’ve got a number of users executing the proc simultaneously. You’ll need to figure a way of making sure the the proc only reads the rows that are relevant to its own run. I’ll leave that one with you.

Another possible solution is to do away with the temp table and pass in a table variable instead. Yes, I know they come with their own issues but if you really need to be doing this then they may be the easiest way to go.

The first thing that you’re going to need to do is create a table type.

CREATE TYPE TempTableType AS TABLE (Col1 INT NOT NULL)

Because we’re now using a table type, SQL will know the schema and if our theory to this point holds water, should be able to reuse the plan.

Let’s change our proc to use a temp table parameter…

--table variable

CREATE PROC TempTableTest3 @tablevariable TempTableType READONLY

AS

BEGIN

SELECT *

FROM @tablevariable

JOIN sys.databases ON col1 = database_id

END

GO

Now, we’ll simulate that 100 user load again but using the new proc.

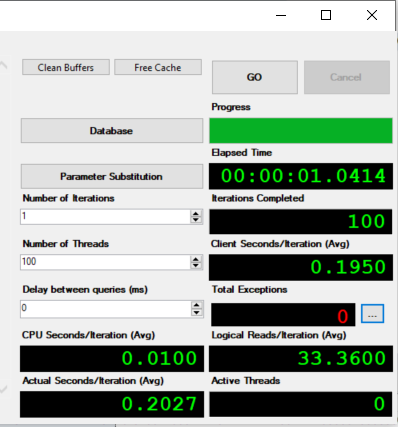

Before we look at the cache, let’s just look at the run time stats.

Interesting, that’s comparable to the sort of performance that we had with the internally declared temp table.

So now for the plan cache…

execution_count = 100

…and we’ve got ourselves plan reuse again!

BEWARE – You Mileage May Vary

I’m sure that I don’t need to tell you this but the example that I’ve given is very simple with a low row count. Table variables have their own issues, mainly around statistics although they do play better in SQL2019 with deferred compilation they’re still not perfect so this is something that you should test thoroughly.