Unity Catalog in Databricks provides a single place to create and manage data access policies that apply across all workspaces and users in an organization. It also provides a simple data catalog for users to explore. So when a client wanted to create a place for statisticians and data scientists to explore the data in their data lake using a web interface, I suggested we use Databricks with Unity Catalog.

New account management and roles

There are some Databricks concepts that may be new to you when you use Unity Catalog in Azure. While Databricks workspaces are mostly the same, you now have account-level (organization-level) roles. This is necessary because Unity Catalog spans workspaces.

Users and service principals created in a workspace are synced to the account as account-level users and service principals. Workspace-local groups are not synced to the account. There are now account-level groups that can be used in workspaces.

To manage your account, you can go to https://accounts.azuredatabricks.net/. If you log in with an AAD B2B user, you’ll need to open the account portal from within a workspace. To do this, go to your workspace and select your username in the top right of the page to open the menu. Then choose the Manage Account option in the menu. It will open a new browser window.

Requirements

To create a Unity Catalog metastore you’ll need:

- A Databricks workspace configured to use the Premium pricing tier

- A storage account with the hierarchical namespace enabled

- Azure Active Directory Global Administrator privileges (at least temporarily, or borrow your AAD admin for 5 minutes during setup).

- Contributor or Owner role in a resource group where you can create an Access Connector for Azure Databricks

The pricing tier is set on the basics page when creating the Databricks workspace.

High-level steps

- Create a storage container in your ADLS account.

- Create an access connector for Azure Databricks.

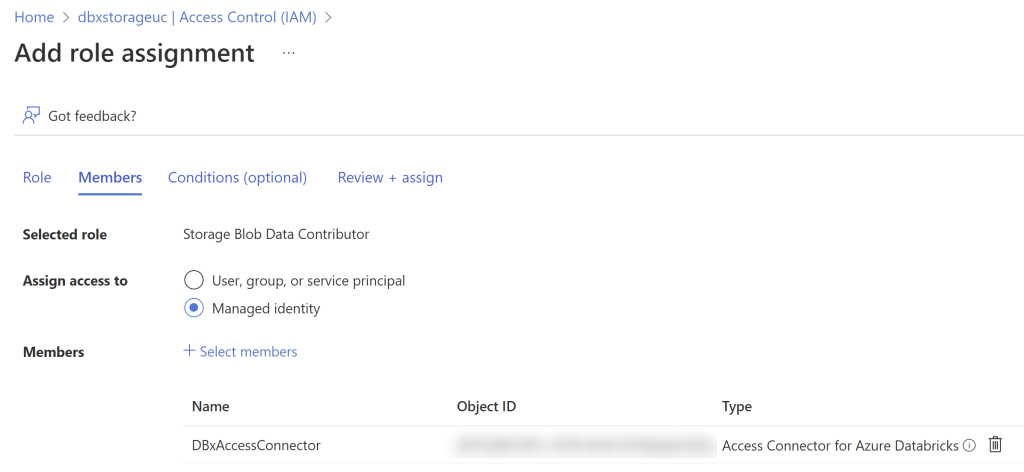

- Assign the Storage Blob Data Contributor role on the storage account to the managed identity for the access connector for Azure Databricks.

- Assign yourself account administrator in the Databricks account console.

- Create a Unity Catalog metastore.

- Assign a workspace to the Unity Catalog metastore.

The storage container holds the metadata and any managed data for your metastore. You’ll likely want to use this Unity Catalog metastore rather than the default Hive metastore that comes with your Databricks workspace.
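When you create the metastore, you point it at this container with an ADLS Gen2 path. Here is a minimal sketch of a helper that builds that path in the `abfss://` form. The function name and the example container and account names are hypothetical, and the container check is deliberately loose.

```python
import re


def metastore_storage_root(container: str, storage_account: str, path: str = "") -> str:
    """Build an abfss:// URL for a container in an ADLS Gen2 storage account."""
    # Azure storage account names are 3-24 lowercase letters and digits.
    if not re.fullmatch(r"[a-z0-9]{3,24}", storage_account):
        raise ValueError(f"invalid storage account name: {storage_account!r}")
    # Loose check only: lowercase letters, digits, and hyphens, 3-63 characters.
    # Azure applies further rules (for example, no consecutive hyphens).
    if not re.fullmatch(r"[a-z0-9][a-z0-9-]{2,62}", container):
        raise ValueError(f"invalid container name: {container!r}")
    # Normalise the optional sub-path so slashes are never doubled.
    return f"abfss://{container}@{storage_account}.dfs.core.windows.net/{path.strip('/')}"


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(metastore_storage_root("unity-catalog", "mydatalake"))
```

The `dfs.core.windows.net` endpoint is the one used by storage accounts with the hierarchical namespace enabled, which is why that requirement is on the list above.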

The access connector will show up as a separate resource in the Azure Portal.

You don’t grant storage account access to end users; you grant user access to data via Unity Catalog. The access connector allows the workspace to access the data in the storage account on behalf of Unity Catalog users. This is why you must assign the Storage Blob Data Contributor role to the access connector’s managed identity.
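If you would rather script the role assignment than click through the portal, the sketch below assembles an Azure CLI `az role assignment create` command for it. It only builds the command; it does not run it. The function names and all IDs are hypothetical placeholders, and you would need to look up the object ID of the access connector’s managed identity yourself.

```python
import shlex
from typing import List

ROLE = "Storage Blob Data Contributor"


def storage_account_scope(subscription_id: str, resource_group: str, storage_account: str) -> str:
    """Return the Azure resource ID of a storage account, used as the role scope."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{storage_account}"
    )


def role_assignment_command(principal_object_id: str, scope: str) -> List[str]:
    """Build (but do not execute) the Azure CLI call that grants the role."""
    return [
        "az", "role", "assignment", "create",
        "--assignee-object-id", principal_object_id,
        # A managed identity is a kind of service principal in Azure AD.
        "--assignee-principal-type", "ServicePrincipal",
        "--role", ROLE,
        "--scope", scope,
    ]


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    scope = storage_account_scope(
        "00000000-0000-0000-0000-000000000000", "rg-data", "mydatalake"
    )
    command = role_assignment_command("11111111-1111-1111-1111-111111111111", scope)
    print(shlex.join(command))
```

Scoping the assignment to the storage account matches the step described above. Nothing in this sketch grants anything to end users, which is the point: their access is managed in Unity Catalog.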

The confusing part of setup

If you are not a Databricks account administrator, you won’t see the option to create a metastore in the account console. If you aren’t an AAD Global Admin, you need an AAD Global Admin to log into the Databricks account console and assign your user to the account admin role. It’s quite possible that the AAD Global Admin(s) in your tenant don’t know and don’t care what Databricks or Unity Catalog is. And most data engineers are not Global Admins in their company’s tenant. If you think this requirement should be changed, feel free to vote for my idea here.

Once you have been assigned the account admin role in Databricks, you will see the button to create a metastore in the account console.
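The button is all you need, but the last two setup steps can also be scripted. The sketch below describes the two requests as I understand the Unity Catalog REST API: one to create the metastore and one to assign a workspace to it. Treat the paths and field names as assumptions to check against the current API reference. The helper names and example values are hypothetical, and nothing is sent over the network.

```python
import json
from typing import Any, Dict


def create_metastore_request(name: str, storage_root: str, region: str) -> Dict[str, Any]:
    """Describe the request that creates a Unity Catalog metastore."""
    return {
        "method": "POST",
        "path": "/api/2.1/unity-catalog/metastores",
        "json": {"name": name, "storage_root": storage_root, "region": region},
    }


def assign_workspace_request(
    workspace_id: int, metastore_id: str, default_catalog_name: str
) -> Dict[str, Any]:
    """Describe the request that assigns a workspace to an existing metastore."""
    return {
        "method": "PUT",
        "path": f"/api/2.1/unity-catalog/workspaces/{workspace_id}/metastore",
        "json": {
            "metastore_id": metastore_id,
            "default_catalog_name": default_catalog_name,
        },
    }


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    create = create_metastore_request(
        "primary-metastore",
        "abfss://unity-catalog@mydatalake.dfs.core.windows.net/",
        "eastus",
    )
    assign = assign_workspace_request(1234567890, "<metastore-id>", "hive_metastore")
    print(json.dumps([create, assign], indent=2))
```

The sketch stops at those two calls. It leaves out authentication and anything else the account console does for you, such as connecting the access connector to the metastore.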

One or multiple metastores?

The Azure documentation recommends creating only one metastore per region and assigning that metastore to multiple workspaces. The current recommended best practice is to have one metastore that spans environments, business units, and teams. Currently, there is no technical limitation keeping you from creating multiple metastores. The recommendation is pointing you toward a single, centralized place to manage data and permissions.

Within your metastore, you can organize your data into catalogs and schemas. So it could be feasible to use only one metastore if you have all Databricks resources in one region.

In my first metastore, I’m using catalogs to distinguish environments (dev/test/prod) and schemas to distinguish business units. In my scenario, each business unit owns its own data. Within those schemas are external tables and views. Because it is most likely that someone would need to see all data for a specific business unit, this makes it easy to grant user access at the schema level.
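To make that layout concrete, here is a minimal sketch that generates the SQL for it: one catalog per environment, one schema per business unit, and read access granted at the schema level. The function names, business units, and group names are hypothetical, and the script only produces statements; you would run them yourself in a Unity Catalog-enabled workspace.

```python
from typing import Dict, Iterable, List


def quote(identifier: str) -> str:
    """Backtick-quote an identifier, doubling any embedded backticks."""
    return "`" + identifier.replace("`", "``") + "`"


def layout_statements(
    environments: Iterable[str],
    business_units: Iterable[str],
    readers: Dict[str, str],
) -> List[str]:
    """Generate SQL for catalogs (environments) and schemas (business units).

    `readers` maps a business unit to the account-level group that should
    be able to read everything in that unit's schema.
    """
    units = list(business_units)
    statements: List[str] = []
    for env in environments:
        statements.append(f"CREATE CATALOG IF NOT EXISTS {quote(env)}")
        for unit in units:
            schema = f"{quote(env)}.{quote(unit)}"
            statements.append(f"CREATE SCHEMA IF NOT EXISTS {schema}")
            group = readers.get(unit)
            if group:
                # Readers need to use the parent catalog before they can reach the schema.
                statements.append(
                    f"GRANT USE CATALOG ON CATALOG {quote(env)} TO {quote(group)}"
                )
                # One schema-level grant covers every table and view in the schema.
                statements.append(
                    f"GRANT USE SCHEMA, SELECT ON SCHEMA {schema} TO {quote(group)}"
                )
    return statements


if __name__ == "__main__":
    for statement in layout_statements(
        ["dev", "test", "prod"],
        ["finance", "marketing"],
        {"finance": "finance-readers", "marketing": "marketing-readers"},
    ):
        print(statement + ";")
```

Because the grant is on the schema, tables and views added to a business unit later are covered without any new grants.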

I’ll save table creation and user access for another post. This post stops at getting you through all the setup steps to create your Unity Catalog metastore.

If you prefer learning from videos, I’ve found the Unity Catalog videos on the Advancing Spark YouTube channel to be very helpful.