As you might know, I have written a long time ago a book about Service Broker – a technology within SQL Server that almost nobody is aware of and interested in. But Service Broker provides you an elegant way to implement reliable, distributed, message-based applications directly within the database.

One of the hardest things to understand in Service Broker is its programming model, and how to create a distributed Service Broker application, where each Service Broker service is running on a different SQL Server instance. Over the last 10 – 15 years a lot of things have changed in our IT industry, especially with Container platforms like Docker, and orchestration tools like Kubernetes. Therefore, I want to show you in this blog posting how you can create a fully distributed Service Broker application with Docker, and how to deploy it with a single command line. Let’s get started.

The SQL Server base image

As you know, I’m a huge believer in Docker, and I really like to run my SQL Server instances natively with Docker on my Mac system – without the use of any virtual machines. One thing that always annoys me when I deploy a new Docker Container from the SQL Server base image that is provided by Microsoft is the lack of sample databases. I really like to teach query tuning concepts with the use of the AdventureWorks2014 database – I’m here somehow an “old-school” person  Therefore, the step that I always do on a newly created Docker container is the restore of my AdventureWorks2014 backup.

Therefore, the step that I always do on a newly created Docker container is the restore of my AdventureWorks2014 backup.

But one of the cool things of Docker is the possibility to create and publish a new Docker image into a Docker repository, which is based on another Docker image – like the one provided by Microsoft. The following listing shows the Docker file that I use to create my customized SQL Server 2019 image.

# The customized image is based on SQL Server 2019

FROM mcr.microsoft.com/mssql/server:2019-latest

# Switch to the root user

USER root

# Sets the pwd and chown to the service account of SQL Server

WORKDIR /tmp

RUN chown mssql /tmp

# Copy the scripts and the sample databases into the image

COPY sql /tmp/

COPY scripts /tmp/

# Mark the scripts as executable

RUN chmod +x /tmp/*.sh

# Convert CRLF to LF in case Windows or VS Code changed it

RUN find . -type f ( -name "*.sql" -o -name "*.env" -o -name "*.sh" ) -exec sed -i 's/r$//' {} ;

# Switch to user mssql or the container will fail

USER mssql

# Run the initial setup script

RUN /bin/bash /tmp/start-sqlserver.sh

As you can see, I’m copying some SQL and shell scripts into the Docker image that I need later for the necessary setup work. The whole setup is done through the script start-sqlserver.sh that is shown in the following listing.

# Export the necessary environment variables export $(xargs < /tmp/sapassword.env) export $(xargs < /tmp/sqlcmd.env) export PATH=$PATH:/opt/mssql-tools/bin # Set the SQL Server configuration cp /tmp/mssql.conf /var/opt/mssql/mssql.conf # Start up SQL Server, wait for it, and then restore the sample databases /opt/mssql/bin/sqlservr & sleep 20 & /tmp/configure.sh

In the first few lines I’m exporting some environment variables that are needed for sqlcmd and for the sa password of SQL Server. And in the last line SQL Server is started, and the real customization of the SQL Server image happens within the file configure.sh that is also shown in the following listing.

# Loop until SQL Server is up and running

for i in {1..50};

do

sqlcmd -S localhost -d master -Q "SELECT @@VERSION"

if [ $? -ne 0 ];then

sleep 2

fi

done

# Download the AdventureWork2014 backup file from GitHub

wget https://github.com/SQLpassion/Docker/raw/71ac56d9b5bbf517ca2deabd926853920db673d4/sqlserverbase/sample-databases/AdventureWorks2014.bak

# Restore the sample databases

sqlcmd -S localhost -d master -i /tmp/restore-databases.sqlFirst, the script waits in a loop until SQL Server is successfully started up. In the next step a backup of the AdventureWorks2014 database is downloaded from my GitHub repository, which is finally restored by the SQL script restore-database.sql:

USE master GO -- Restore the AdventureWorks2014 database RESTORE DATABASE AdventureWorks2014 FROM DISK = '/tmp/AdventureWorks2014.bak' WITH MOVE 'AdventureWorks2014_Data' TO '/var/opt/mssql/data/AdventureWorks2014.mdf', MOVE 'AdventureWorks2014_Log' TO '/var/opt/mssql/data/AdventureWorks2014.ldf' GO

After all these steps the customization of my Docker image is done, so let’s build it with the following command:

docker image build -t sqlpassion/sqlserver:2019-latest .

When the build is completed, you can show the built image with the following command:

docker image ls

And finally, you can also run a Docker container with this customized image:

docker run -p 1433:1433 --name sql2019 -d sqlpassion/sqlserver:2019-latest

In addition, I have also published this Docker image to my Docker repository, so you can directly pull it to your environment with the following command – instead of building it as a separate step:

docker pull sqlpassion/sqlserver:2019-latest

Now we will use this base image in the next step when we create the distributed Service Broker application.

Creating the Service Broker Service Containers

If you have already experience with Service Broker, then you know that each Service Broker application consists of multiple services, at least a so-called Initiator Service that starts a conversation by sending messages to a so-called Target Service. Therefore, we need for our Service Broker deployment 2 SQL Server instances where each instance hosts one of these 2 services. The following listing shows the Docker file that creates the one of these Docker images (they are identical for both images).

# The customized image is based on the SQL Server 2019 from SQLpassion

FROM sqlpassion/sqlserver:2019-latest

# Switch to the root user

USER root

# Sets the pwd and chown to the service account of SQL Server

WORKDIR /tmp

RUN chown mssql /tmp

# Copy the scripts and the sample databases into the image

COPY sql /tmp/

COPY scripts /tmp/

COPY certs /tmp/

# Mark the scripts as executable

RUN chmod +x /tmp/*.sh

# Convert CRLF to LF in case Windows or VS Code changed it

RUN find . -type f ( -name "*.sql" -o -name "*.env" -o -name "*.sh" ) -exec sed -i 's/r$//' {} ;

# Switch to user mssql or the container will fail

USER mssql

# Run the initial setup script

RUN /bin/bash /tmp/start-sqlserver.sh

It’s the same as the code that was used to describe the SQL Server base image. But there are a few minor differences that I want to point out. First, this image is based on the image that we have created previously:

FROM sqlpassion/sqlserver:2019-latest

Because we are dealing here with a distributed Service Broker application, we also must deal with security. Therefore, I’m also copying some certificates into the Docker image that are later used when transport security is set up for our Service Broker services.

COPY certs /tmp/

The folder certs contain the public and private keys for the certificate used by the Initiator Service and for the Target Service. The public and private key pairs were created and exported with the following T-SQL statements:

CREATE CERTIFICATE InitiatorServiceCertPrivate

WITH SUBJECT = 'For Service Broker authentication - InitiatorService',

START_DATE = '01/01/2022',

EXPIRY_DATE = '01/01/2099'

GO

CREATE CERTIFICATE TargetServiceCertPrivate

WITH SUBJECT = 'For Service Broker authentication - TargetService',

START_DATE = '01/01/2022',

EXPIRY_DATE = '01/01/2099'

GO

BACKUP CERTIFICATE InitiatorServiceCertPrivate

TO FILE = '/tmp/initiator-service-cert-public.cer'

WITH PRIVATE KEY

(

FILE ='/tmp/initiator-service-cert-private.key',

ENCRYPTION BY PASSWORD ='passw0rd1!'

)

GO

BACKUP CERTIFICATE TargetServiceCertPrivate

TO FILE = '/tmp/target-service-cert-public.cer'

WITH PRIVATE KEY

(

FILE ='/tmp/target-service-cert-private.key',

ENCRYPTION BY PASSWORD ='passw0rd1!'

)

GO

The real Service Broker setup and deployment happens finally in the file setup-ssb.sql (for both the Initiator Service and the Target Service), which gets executed by the script file configure.sh. I don’t want to go here into the details, because this is Service Broker stuff, which is outside of the scope of this blog posting.

Deployment with Docker Compose

By now we have 2 additional Docker images. One Docker image for the Initiator Service, and another Docker image for the Target Service. You could now start up 2 Docker containers manually by using the Docker run command, or you can use the power of Docker Compose. With Docker Compose you can describe in a YAML file a full Docker deployment, which gets automatically deployed and started when you run the docker-compose command. The following listing shows the YAML file in which I have described our distributed Service Broker application that consists of the Initiator Service and the Target Service.

version: '3.7'

services:

initiator-service:

build:

context: initiator-service/.

restart: on-failure

networks:

- localnet

container_name: initiator-service

hostname: initiator-service

image: sqlpassion/initiator-service:2019-latest

ports:

- "1433:1433"

env_file:

- initiator-service/sql/sapassword.env

target-service:

build:

context: target-service/.

restart: on-failure

networks:

- localnet

container_name: target-service

hostname: target-service

image: sqlpassion/target-service:2019-latest

ports:

- "1434:1433"

env_file:

- target-service/sql/sapassword.env

networks:

localnet: null

The cool thing about Docker Compose is that it also builds the necessary Docker images if they are not present. Let’s do this with the following command:

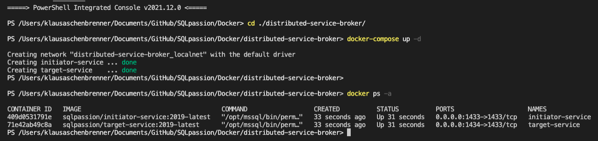

docker-compose up -d

This commannd builds and runs the Initiator Service and the Target Service, which are based on the sqlpassion/sqlserver:2019-latest Docker image. After the successful completion of the command, you can verify with the following command, if you have 2 Docker containers up and running:

docker ps -a

The Initiator Service is accessible through localhost,1433, and the Target Service is acessible through localhost,1434. When you have connected to both SQL Server instances (through SQL Server Management Studio or Azure Data Studio), you can send with the following T-SQL statement a Service Broker message from the Initiator Service to the Target Service:

USE InitiatorService GO EXEC SendMessageToTargetService '< HelloWorldRequest> Klaus Aschenbrenner </HelloWorldRequest>' GO

After the execution of the stored procedure, you should have a response message in the table ProcessedMessages on both SQL Server instances:

-- On the Initiator Service side (localhost,1433): SELECT * FROM InitiatorService.dbo.ProcessedMessages GO -- On the Target Service side (localhost,1434): SELECT * FROM TargetService.dbo.ProcessedMessages GO

The whole messages, which are exchanged between both Service Broker services, are processed by the following activated stored procedures:

- InitiatorService.dbo.ProcessResponseMessages

- TargetService.dbo.ProcessRequestMessages

Summary

In this blog posting you have seen how you can use the power of Docker and Docker Compose to automatically deploy a distributed Service Broker application. You can also find the whole source code needed for this example in my GitHub repository. A big thanks also goes to Chrissy LeMaire, who inspired and helped me a lot by setting up this example. So please make sure to also have a look into her GitHub repository, which contains lot of code about Docker and PowerShell in combination with SQL Server.

Thanks for your time,

-Klaus