Question: When an activity in a Data Factory pipeline fails, does the entire pipeline fail?

Answer: It depends

In Azure Data Factory, a pipeline is a logical grouping of activities that together perform a task. It is the unit of execution – you schedule and execute a pipeline. Activities in a pipeline define actions to perform on your data. Activities can be categorized as data movement, data transformation, or control activities.

In many instances, when an activity fails during a pipeline run, the pipeline run will report failure as well. But this is not always the case.

There are two main scenarios where an activity would report failure, but the pipeline would report success:

- The maximum number of retry attempts is greater than 0, and the initial activity execution fails but the second attempt succeeds

- The failed activity has a failure path or a completion path to a subsequent activity and no success path

Retry Attempts

In the General settings of any activity is a property called Retry. This is the number of times Data Factory can try to execute the activity again if the initial execution fails. The default number of retries is 0. If we execute a pipeline containing one activity with the default Retry setting, the failure of the activity would cause the pipeline to fail.

I often set retries to a non-zero number in copy activities, lookups, and data flows in case there are transient issues that would cause a failure that might not be present if we waited 30 seconds and tried the activity again.

Dependency with a Failure Condition

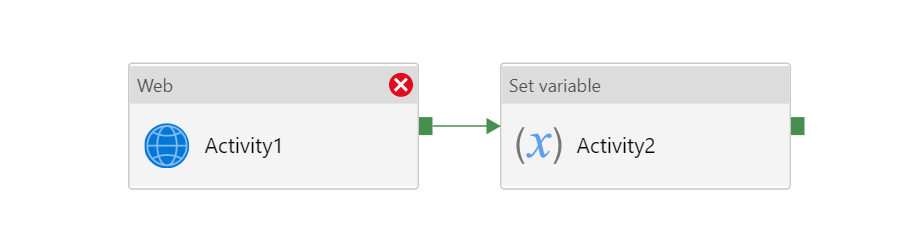

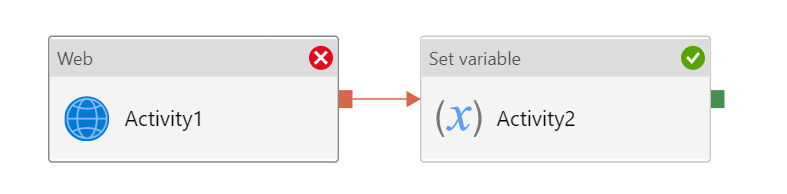

Activities are linked together via dependencies. A dependency has a condition of one of the following: Succeeded, Failed, Skipped, or Completed. If we have a pipeline containing Activity1 and Activity2, and Activity2 has a success dependency on Activity1, it will only execute if Activity1 is successful. In this scenario, if Activity1 fails, the pipeline will fail.

But if we have a pipeline with two activities where Activity2 has a failure dependency on Activity1, the pipeline will not fail just because Activity1 failed. If Activity1 fails and Activity2 succeeds, the pipeline will succeed. This scenario is treated as a try-catch block by Data Factory.

Now let’s say we have a pipeline with 3 activities, where Activity1 has a success path to Activity2 and a failure path to Activity3. If Activity1 fails and Activity3 succeeds, the pipeline will fail. The presence of the success path alongside the failure path changes the outcome reported by the pipeline, even though the activity executions from the pipeline are the same as the previous scenario.

What This Means for Monitoring

This difference between pipeline and activity status has a few implications of which we should be aware as we monitor our data factories.

If we are using Azure Monitor alerts, we need to understand that setting an alert for pipeline failures doesn’t catch all activity failures. If there is a retry of an activity and the second attempt is successful, there would be an activity failure but no pipeline failure.

Conversely, if we set an alert to notify us of activity failures, and we have a pipeline designed with the try-catch pattern, we might get an alert about an activity failure, but the pipeline would still show success. You would need to look at the status of the activities within the pipeline execution to see the failure of which you were alerted.

For many of my implementations, just setting an alert to notify me when any activity failure occurs is fine. For others, I really only care if the pipeline fails. Sometimes I need to set more specific alerts where I choose only certain activities to monitor for failure.

You could also use the Data Factory SDK to roll your own monitoring solution. If you write PowerShell, C#, or Python, you can retrieve the status of any pipeline or activity run and take subsequent actions based upon the results.

What This Means for Pipeline Design

You may need to add activities to your pipelines to support your monitoring scenarios if you need something more customized than what is offered from Azure Monitor and don’t want to use the SDK.

If you have notification needs that Azure Monitor can’t accommodate, you could add an activity in your pipelines to send an email based upon your desired activity outcomes. You can cause that activity to execute using an activity dependency alone, or by combining it with a variable and an If Condition activity.

There are times where we may need a pipeline to fail even though we are using the try-catch pattern that results in pipeline success. In that case, I add an additional web activity to the end of my pipeline failure path that hits an invalid url like http://throwanerror. The failure of this activity will cause the pipeline to fail. Keep monitoring and notifications in mind as you design your pipelines so you are alerted as appropriate.

Azure Data Factory Activity and Pipeline Outcomes

To help clarify these concepts I made the below guide to Data Factory activity and pipeline outcomes. Feel free to share it with others. You can download it directly from this link. A text version that should be friendlier for screen readers can be found on this page.