Changing Table Schema – what goes behind the scenes – Part I

Getting the database schema right is the most important part of any database design/development cycle. However change is law of nature. Things do get change over time as new requirements emerge and we always don't have luxury of redesigning the whole thing. So in my opinion knowing how to handle database schema changes is also very important.

For some lucky ones table schema change is just a simple matter of knowing correct ALTER TABLE syntax. After all its just about adding, dropping or changing columns. However if you are dealing with large tables or if storage space matters a lot to you then tighten your seat belts as we will be exploring what goes behind the scenes when we change table schema. Finally we will summarize some interesting aspects of changing table schema.

Ok we will dive deep but let's start from shallow end first. When you change a table schema one to all of the following things can happen.

1. Only meta data containing table information is changed e.g. the information you get back by querying catalog views like sys.columns.

2. SQL Server examines the existing data to ensure it is consistent with schema change you are making.

3. SQL server changes the physical data stored in every row.

DBCC PAGE to rescue

In order to see all this in action we will use undocumented DBCC PAGE command. The full usage of this command and how to interpret its output is a separate topic in itself but to start with just understand that it lets you examine the physical storage in a DATA PAGE containing rows. SQL Server stores data in 8KB pages and disk IO operations are performed at PAGE level i.e. page is the basic unit of data storage. Every page contains a 96 bytes header and the actual data rows. In addition there is a row offset array which starts from end of page and indicates where a row is in a page. Refer to this MSDN link for more details http://msdn.microsoft.com/en-us/library/ms190969(SQL.90).aspx.

Before we use DBCC PAGE you need to know what is physical layout of a row in data page. So it starts like this

![]()

A. Two status bits (1 byte each)

B. Length of fixed portion of rows (1 byte)

C. Fixed length data (n bytes)

D. Number of columns (2 bytes)

E. Null bitmap (multiple of 1 byte where each byte can store NULL-ability of up to 8 columns)

F. Number of variable length columns (2 bytes)

G. End position of variable length columns(2 bytes for every variable length column)

H. Variable length data

Lets now create a test table and see the things in action.

-- all illustrations done in temp database USE tempdb GO CREATE TABLE tbTestChange ( PK_id INT NOT NULLPRIMARY KEY, Col1 SMALLINT NOT NULL, Col2 VARCHAR(10)NOT NULL, COl3 CHAR(5)NOT NULL )

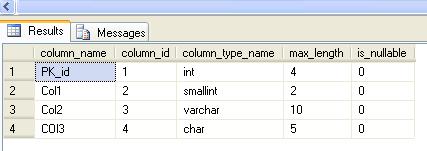

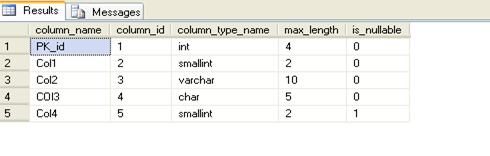

Run the following query. This will show the meta-data for above table. It uses sys.columns system catalog view (http://msdn.microsoft.com/en-us/library/ms176106(SQL.90).aspx )which returns a row for every column in a table or view. This contains information like column data type, maximum length, nullability etc. An inner join with sys.types is used to get actual type name rather than unfriendly type identifier (like 'int' instead of returning '56').

SELECT c.nameAS column_name,c.column_id,t.name AS column_type_name,c.max_length,c.is_nullable

FROM sys.columns c

INNER JOIN sys.types t

ON c.system_type_id = t.system_type_id

WHERE c.object_id= object_id('tbTestChange')

ORDER BY c.column_id

You should now see following obvious result

Now insert some data in to the table

INSERT INTO tbTestChange SELECT 1,100,'AAAAAA','aaaa' UNION SELECT 2,101,'BBBBBBB','bbbbb'

Now we would try to examine how the data is stored, using DBCC PAGE. This command takes following parameters

DBCC PAGE

(

['database name'|database id], -- can be the actual name or id of the database

file number, -- the file number where the page is found

page number, -- the page number within the file

print option = [0|1|2|3] -- display option providing differing levels of information

)

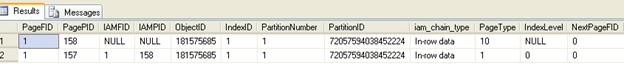

So to get the page and file number we can use another command DBCC IND.

DBCC IND('tempdb','tbTestChange',-1)

This will produce two rows. Select the PageFID and PagePID values for the row where IndexLevel is 0 (this means its leaf level). On my setup it was like this

Armed with this info we can now run DBCC PAGE command

DBCC TRACEON(3604) --this is needed to send DBCC PAGE results to client

DBCC PAGE('tempdb',1/* file num*/,157/*page num*/,1)Here last parameter '1' is used to print page header plus per-row hex dumps and a dump of the page slot array. Following is the results of above command on my setup

PAGE: (1:157) BUFFER: BUF @0x02BCAA9C bpage = 0x043EA000 bhash = 0x00000000 bpageno = (1:157) bdbid = 2 breferences = 0 bUse1 = 33489 bstat = 0xc0000b blog = 0x212121bb bnext = 0x00000000 PAGE HEADER: Page @0x043EA000 m_pageId = (1:157) m_headerVersion = 1 m_type = 1 m_typeFlagBits = 0x4 m_level = 0 m_flagBits = 0x8000 m_objId (AllocUnitId.idObj) = 69 m_indexId (AllocUnitId.idInd) = 256 Metadata: AllocUnitId = 72057594042449920 Metadata: PartitionId = 72057594038452224 Metadata: IndexId = 1 Metadata: ObjectId = 181575685 m_prevPage = (0:0) m_nextPage = (0:0) pminlen = 15 m_slotCnt = 2 m_freeCnt = 8035 m_freeData = 153 m_reservedCnt = 0 m_lsn = (34:44:259) m_xactReserved = 0 m_xdesId = (0:0) m_ghostRecCnt = 0 m_tornBits = 0 Allocation Status GAM (1:2) = ALLOCATED SGAM (1:3) = ALLOCATED PFS (1:1) = 0x60 MIXED_EXT ALLOCATED 0_PCT_FULL DIFF (1:6) = CHANGED ML (1:7) = NOT MIN_LOGGED DATA: Slot 0, Offset 0x60, Length 28, DumpStyle BYTE Record Type = PRIMARY_RECORD Record Attributes = NULL_BITMAP VARIABLE_COLUMNS Memory Dump @0x355DC060 00000000: 30000f00 01000000 64006161 61612004 †0.......d.aaaa . 00000010: 00f00100 1c004141 41414141 ††††††††††......AAAAAA Slot 1, Offset 0x7c, Length 29, DumpStyle BYTE Record Type = PRIMARY_RECORD Record Attributes = NULL_BITMAP VARIABLE_COLUMNS Memory Dump @0x355DC07C 00000000: 30000f00 02000000 65006262 62626204 †0.......e.bbbbb. 00000010: 00f00100 1d004242 42424242 42††††††††......BBBBBBB OFFSET TABLE: Row - Offset 1 (0x1) - 124 (0x7c) 0 (0x0) - 96 (0x60) DBCC execution completed. If DBCC printed error messages, contact your system administrator.

The whole output looks very complex but for purpose of this discussion we will concentrate on following information

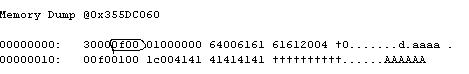

Memory Dump @0x355DC060 00000000: 30000f00 01000000 64006161 61612004 †0.......d.aaaa . 00000010: 00f00100 1c004141 41414141 ††††††††††......AAAAAA Slot 1, Offset 0x7c, Length 29, DumpStyle BYTE Record Type = PRIMARY_RECORD Record Attributes = NULL_BITMAP VARIABLE_COLUMNS Memory Dump @0x355DC07C 00000000: 30000f00 02000000 65006262 62626204 †0.......e.bbbbb. 00000010: 00f00100 1d004242 42424242 42††††††††......BBBBBBB OFFSET TABLE: Row - Offset 1 (0x1) - 124 (0x7c) 0 (0x0) - 96 (0x60)

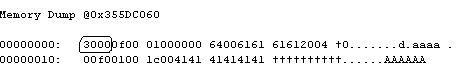

If you remember we inserted two rows. So does that helps now J. Yes, every memory hex dump is for each row and also the row-offset at the bottom contains two entries indicating two records. Let's try to analyze 1st row dump which is

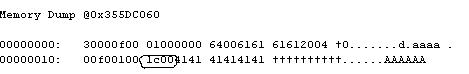

00000000: 30000f00 01000000 64006161 61612004 †0.......d.aaaa . 00000010: 00f00100 1c004141 41414141 ††††††††††......AAAAAA

You may need a calculator to convert from hex J

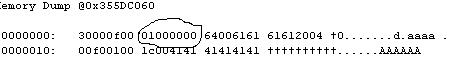

a. 1st two bytes store status bit and this is shown as '3000' in memory dump

b. Next two bytes store length of fixed portion of row. This is shown as '0f00' in memory dump. Hex of 0xF is 15. So 15 is obtained by adding status bits (2 bytes) + 2 bytes for storing this information i.e. storing 0f00 + int(4 bytes) for PK_id + smallint(2 bytes) for Col1 + char(5 bytes) for Col3. Note we have not included variable length column i.e. Col2 here.

c. So 1st fixed length column is PK_id (refer the output of query used above using sys.columns) of length 4 bytes. This is stored as 01000000.This is because we stored value 1 in this column.

d. Next fixed length column is col1 which is smallint i.e. 2 bytes. This in above memory dump is 6400 because we stored value 100 (0x64).

e. Similarly last fixed length is col3 (col2 is variable length column) and its of 5 bytes. We inserted value 'aaaa'. If you don't remember ASCII chart by heart then I will help you J. Yes 'aaaa' maps to 61616161. And off course hex '20' maps to 'space' character in ASCII( So you see you inserted 4 chars and used space for 5).

f. As mentioned above next two bytes store number of columns. So we have 4 columns in this table (and also in this row, why I am saying 'also in this row', you will come to know later). And hence

g. Next byte stores what is called NULL BITMAP. It indicates if a column is NULL or not. Since we have total 4 columns so 4 bits of a byte are used to represent NULL BITMAP in this case. So value 'f0' when read in binary (in reverse order) means none of the bits are set and hence all columns are not null. Note that if total columns were more than 8 then two bytes would have been used and so on (i.e. 1 to 8 columns need 1 bye, 9 to 16 need 2 bytes and so on).

h. Next two byte stores number of variable length columns. So we have 1 variable length column (and I would say again in this row). Hence value shown in memory dump is '0100'

i. Then we have two bytes for position where 1st variable length column ends. This is total offset so far plus size of 1st variable length column i.e. Col2. As we inserted 'AAAAAA' for this row so we have size of 6 bytes. So if we calculate offset using what we have seen so far (i.e. refer to all points above) total offset works out to be 2(in point a above) + 2(point b) + 4( point c) + 2(point d)+5(point e)+2(point f)+ 1(point g)+2(point h)+2(point i)+size ofcol2(i.e. 6)=28. This in hex is '1c' so we have memory dump showing '1c00'

j. Finally we have data for 1st variable length column. We inserted 'AAAAAA' so we get hex values of '41414141414'

So now we have looked in detail how data is physically stored. If you want you can go ahead and analyze memory dump for 2nd row.

Now we will return back to what we wanted to look in 1st place (that's trouble when diving deep as you find so many things apart from one you are looking for, that you start getting distracted J). Now we will alter the table in different ways and see what changes do we see.

Schema changes needing just meta-data change

Leave the results of DBCC PAGE command in one query window and go to other query window (still connected to tempdb) and run following command

ALTER TABLE tbTestChange

ADD Col4 smallint NULL

Now run the query we used earlier to see what meta data we have got for this changed table

SELECT c.nameAS column_name,c.column_id,t.name AS column_type_name,c.max_length,c.is_nullable

FROM sys.columns c

INNER JOIN sys.types t

ON c.system_type_id = t.system_type_id

WHERE c.object_id= object_id('tbTestChange')

ORDER BY c.column_id

The output result should look like

So you can see that a new column with column_id '5' has been added. It's a fixed length column and is null-able. So when you run DBCC PAGE command again what do you expect. Let's try that

DBCC TRACEON(3604) --this is needed to send DBCC PAGE results to client

DBCC PAGE('tempdb',1/* file num*/,157/*page num*/,1)

Output has not at all changed from we had earlier (compare with other query window still open with results). This is because when a NULL-able column is added SQL server just changes the meta-data and does not touches the physical pages. So this can be handy when trying to add a new column to a very large table. However IMO decision as to whether a column should be NULL or NOT NULL should not primarily depend of how much time it takes to upgrade the table but on whether the column is candidate for NULL or NOT NULL from your business logic point of view. But leaving that argument aside (it can raise many eyebrows) yes when adding a NULL-able column its only meta-data and not physical pages that change.

Now insert two more records to this table

INSERT INTO tbTestChange(PK_id,Col1,Col2,COl3,Col4) SELECT 3,102,'CCCCCC','cccc',51 UNION SELECT 4,103,'DDDDDD','ddddd',NULL

Run the DBCC PAGE command again.

DBCC PAGE('tempdb',1/* file num*/,157/*page num*/,1)The outut I had this time was like

Slot 0, Offset 0x60, Length 28, DumpStyle BYTE Record Type = PRIMARY_RECORD Record Attributes = NULL_BITMAP VARIABLE_COLUMNS Memory Dump @0x3518C060 00000000: 30000f00 01000000 64006161 61612004 †0.......d.aaaa . 00000010: 00f00100 1c004141 41414141 ††††††††††......AAAAAA Slot 1, Offset 0x7c, Length 29, DumpStyle BYTE Record Type = PRIMARY_RECORD Record Attributes = NULL_BITMAP VARIABLE_COLUMNS Memory Dump @0x3518C07C 00000000: 30000f00 02000000 65006262 62626204 †0.......e.bbbbb. 00000010: 00f00100 1d004242 42424242 42††††††††......BBBBBBB Slot 2, Offset 0x99, Length 30, DumpStyle BYTE Record Type = PRIMARY_RECORD Record Attributes = NULL_BITMAP VARIABLE_COLUMNS Memory Dump @0x3518C099 00000000: 30001100 03000000 66006363 63632033 †0.......f.cccc 3 00000010: 000500e0 01001e00 43434343 4343††††††........CCCCCC Slot 3, Offset 0xb7, Length 30, DumpStyle BYTE Record Type = PRIMARY_RECORD Record Attributes = NULL_BITMAP VARIABLE_COLUMNS Memory Dump @0x3518C0B7 00000000: 30001100 04000000 67006464 64646400 †0.......g.ddddd. 00000010: 010500f0 01001e00 44444444 4444††††††........DDDDDD OFFSET TABLE: Row - Offset 3 (0x3) - 183 (0xb7) 2 (0x2) - 153 (0x99) 1 (0x1) - 124 (0x7c) 0 (0x0) - 96 (0x60) DBCC execution completed. If DBCC printed error messages, contact your system administrator.

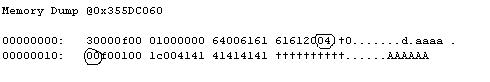

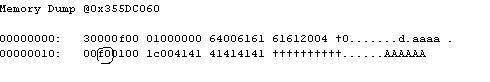

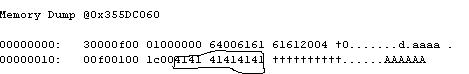

So you can see there are 4 rows in row offset and corresponding 4 memory dumps. If you examine slot 2 more carefully (see marked parts of hex dumps below) you will find that fixed column length now stores '1100' i.e. 17 (earlier it was 15). So now we have added 2 more bytes for newly added smallint column. Note that this value is same for slot 2 and slot 3 viz. for both new rows added above (even though one of the row has col4 as NULL). Also note that value of new col4 is indicated as '3300' in slot 2 (for 3rd row) and as '0001' in slot 3 (for 4th row). So when its fixed length column we use same storage space whether column is NULL or NOT NULL. Another important point worth noticing is NULL bitmap in both the slots. For slot 2 its 'e0' which when read in binary (in reverse order) indicates none of the 5 bits are set and hence no column is NULL. For slot 3 this value is 'f0' which when read in binary (in reverse order) means first four bits are not set while 5th is hence indicating that col4 (5th column in our table) is NULL.

ALTER TABLE tbTestChange

ALTER COLUMN Col2 VARCHAR(10)NULL

SELECT c.nameAS column_name,c.column_id,t.name AS column_type_name,c.max_length,c.is_nullable

FROM sys.columns c

INNER JOIN sys.types t

ON c.system_type_id = t.system_type_id

WHERE c.object_id= object_id('tbTestChange')

ORDER BY c.column_id

DBCC TRACEON(3604) --this is needed to send DBCC PAGE results to client

DBCC PAGE('tempdb',1/* file num*/,157/*page num*/,1)

You would find that DBCC PAGE output is same and the row offset has not changed at all.

Another case where just meta data is changed is when size of variable length column is increased. You can confirm this in same manner as shown above using DBCC PAGE.

Now take a deep breath and surface back to the top as we have seen a lot by diving deep. We explored how data is physically stored within data pages and what are different scenarios when changing table schema does not causes any change in physical data pages. In next section we will explore some other scenarios where ALTER TABLE will cause SQL server to just examine data pages and cases where physical layout of the stored data is changed.

NOTE: All the above tests were done on SQL Server 2005 Developer Edition (SP2) and SQL 2005 Express edition (SP2).

Bibliography / Sources Consulted:

- SQL Server Books Online 2005

- Inside Microsoft SQL Server 2005: The Storage Engine - by Kalen Delaney