EBCDIC file is getting loaded with all special characters in my table

-

October 15, 2018 at 3:16 pm

Hi,

I have an EBCDIC file and I am trying to load it using SSIS.

I created a flat file connection manager, changed the Code page to 1140 (IBM EBCDIC - U.S/Canada (37 + Euro))

When I load the data all my data is loading with special characters. Any help on this, please?

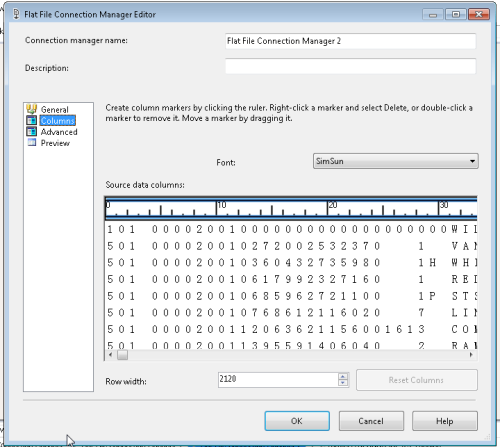

FYI... Pics loaded below for reference:

FYI... I can provide the file if needed since it is a public file and no private data is there in it.

FYI... below is a sample file and the Link for more details on the file.

Link for details of the file: http://www.rrc.state.tx.us/media/1273/ola013k.pdf

File Path: https://drive.google.com/open?id=17N5GaiDi2TzpbdTVa99qaiIKRicPjSAO

Can someone help me with this, please?

-

October 15, 2018 at 3:44 pm

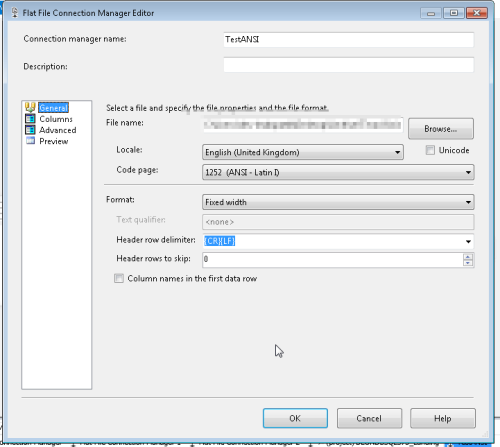

I cannot see the data in your file - but it doesn't look like EBCDIC encoded file, it looks like a standard ANSI file. What happens if you set the code page to a normal 1252 ANSI file?

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

October 15, 2018 at 3:54 pm

Hi Jeffrey,

I did not get what u meant by you cannot see the data in the file.

Do you mean you cannot download the file -> https://drive.google.com/open?id=17N5GaiDi2TzpbdTVa99qaiIKRicPjSAO

To answer your question on what is happening If I download it in ANSI 1252. Below are the pics.

-

October 15, 2018 at 4:29 pm

File is indeed ebcdic - but as it contains COBOL packed fields you will need to convert those independently within SSIS before loading to the database.

Have a look at https://www.mssqltips.com/sqlservertip/3459/importing-mainframe-data-including-packed-numbers-with-sql-server-integration-services/ for an example of how to do it.

Give it a try and if you can't make it work tell me and I may be able to do the c# bit for you with some adjustments as I have done this before.

-

October 15, 2018 at 4:35 pm

Hi Frederico,

I tried this method but looks like I am not doing it properly and I am getting blank data loaded.

Can you please help me on the c# ( I am not really good at it).

-

October 15, 2018 at 5:50 pm

Hi,

Had a look at the file and its going to be a bit more complex as it has multiple record types on it.

I may be able to get something done for tomorrow.

be prepared to pay something to a charity of your choosing.But if at all possible speak with the ones supplying you with the files to get a version of it where the comp-3 fields are expanded to be signed leading separate numbers instead of comp-3.

-

October 15, 2018 at 6:16 pm

Thanks for the reply, Frederico.

Sure I will donate to Saint Jude's children's cancer research institute.Sorry, I did not understand what u said about the comp-3 fields.

The Texas state department is providing this files to me. Not sure If they will change but will sure give a try. -

October 15, 2018 at 6:22 pm

tell them you need to load this to a SQL Server database - they should already have had that request many times, and its easy enough for them to do it if not done already

-

October 16, 2018 at 8:15 am

Spoke with them and was told that this is the only format they hold the data, Frederico.

-

October 16, 2018 at 8:47 am

kashyap4007 - Tuesday, October 16, 2018 8:15 AMSpoke with them and was told that this is the only format they hold the data, Frederico.Heh.... that's just their way of saying that they don't know how to generate a headerless/footerless fixed field report to send digitally. 😛

Please don't take the following as a 3rd party endorsement on my part. It's just an example of one of many vendors that claim to ease the pain quite a bit in this area.

http://aminosoftware.com/I can also imagine that someone makes a "provider" that you could add to SQL Server but haven't checked there.

If you prefer something home grown, it's going to take a bit of study on your part and they need to cough up the record layout. Please do a search for "import ebcdic to sql".

The last time I had to do such a conversion was way back in 1986 so I'm totally useless here except that I remember the problems with packed numeric and that articles, like the following, appear to be correct with the understanding that I've not actually tried them.

https://www.mssqltips.com/sqlservertip/3438/importing-mainframe-data-with-sql-server-integration-services/

https://stackoverflow.com/questions/4877379/reading-a-cobol-generated-file/4878481#4878481--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 16, 2018 at 8:59 am

Ah... another avenue is to google for "ebcdic to ascii converter". Some are converters you have to pay for but the following link provides some hope for a free method. Again, I've not tried it so this is not an endorsement of any kind. As with any 3rd party (especially open source), Caveat Emptor!

https://www.codeproject.com/Tips/673240/EBCDIC-to-ASCII-Converter--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 16, 2018 at 5:29 pm

Just a quick note to say this is not forgotten - just complex and with other things to do at the moment.

Part of the work done though.@jeff - yes some third party tools would do the conversion of this particular file - they do charge for it though for each time the conversion is done, and this isn't normally a once in a life time file. File layout is supplied and OP did post a link to it.

Most of the complexity arises from the fact that the comp-3 fields on the table need to be decoded while still on ebcdic mode, but remaining data is easier to convert on input stage.

Luckily the numeric signed fields are also all comp-3 so no need for further validation (although I may at a later stage implement it). -

October 16, 2018 at 5:52 pm

Thank you for your time Frederico.

-

October 16, 2018 at 6:59 pm

frederico_fonseca - Tuesday, October 16, 2018 5:29 PMJust a quick note to say this is not forgotten - just complex and with other things to do at the moment.

Part of the work done though.@jeff - yes some third party tools would do the conversion of this particular file - they do charge for it though for each time the conversion is done, and this isn't normally a once in a life time file. File layout is supplied and OP did post a link to it.

Most of the complexity arises from the fact that the comp-3 fields on the table need to be decoded while still on ebcdic mode, but remaining data is easier to convert on input stage.

Luckily the numeric signed fields are also all comp-3 so no need for further validation (although I may at a later stage implement it).As I previously stated, I've not researched any of the 3rd party tools that can do the conversion so I don't know the pricing structure. I agree that some will charge by the file but there must be some where it's a one time purchase of software or a yearly license. I am, however, with you. I'd rather learn how to do the conversions myself. Well... except when I have it as easy as I currently do. The closest I've needed to come to EBCDIC is what we're doing now and that's through a Linked Server using the IBM Provider (I forget the exact name it carries). Of course, the OP has no such capability.

I did miss the link for the "details of the file". It's good that they provided that information. The document is quite old and I hope they kept up with any field type, precision, and scale changes.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

October 21, 2018 at 1:48 pm

Sorry for the delay and for the long post below.

If any one has any comments/questions/suggestions all are welcome.

I've decided to have a go at processing this Mainframe file with multiple record formats using SSIS to load onto a SQL Server instance.

Processing these files may either be quite straightforward or it can pose a big challenge.

If files contain only "standard data" its just a question of converting from EBCDIC to ASCII and define the layout as any other ASCII file would be defined and processed.

If the files contain certain type of data, for example Packed or Binary data, this needs to be converted while still on EBCDIC mode otherwise the values are incorrectly converted.In most cases the datafiles on the mainframe are also generated using COBOL - this adds another small quirk related to definition of numerics. Quite easy to deal with as the quirk is just that one of the digits also contains the sign of that number.

On this particular case we are talking about a file supplied by RAILROAD COMMISSION OF TEXAS, more specifically their TEXAS CRUDE OIL INFORMATION SYSTEM master file.

This file contains 4 type of records as follows

RECORD ID TYPE RECORD

1 --------------> OIL FIELD

3 --------------> OIL LEASE

4 --------------> OIL MULTI-WELL

5 --------------> OIL WELLThe layout is quite simple on its construct, without no major redefines of the records.

Was it not for the fact that it contains Packed decimal data (Comp-3) fields loading this data onto SQL Server would be quite straightforward.

First problem is multiple record types per file.

As SSIS does not quite like multiple record types on the same input file, there are multiple methods to deal with the issue.

One of the common ones is to define the input file as a single field record, big enough to hold the biggest of the records on said file.

Processing of each record can then be done with, for example, the following methods

* derived columns

* T-SQL

* SSIS Transform ScriptSecond problem is related to the fact that file must be processed in EBCDIC mode until such time as the special numeric fields are processed.

Of 3 options mentioned above only one is capable of dealing with all requirements.

* Derived columns can't deal with converting the special numeric fields in a easy manner

* T-SQL can't easily convert from EBCDIC to ASCII (or other charset), neither can it easily convert certain types of numerics.

* SSIS Scrit - can easily do EBCDIC to ASCII conversion, and can, with specific functions, convert all numeric types onto standard SQL Numeric data types.So in order to do this task I decided to look into previous tools/processes that I have used before in order to build something that is easy to mantain and easy to understand, while not having too much work while doing it.

First and foremost a tool that can read nearly all type of files, regardless of the source, using whatever layout we supply that tool.

This tool is a freeware one, called RecordEditor (http://record-editor.sourceforge.net/).

It can not only be used to view such files, but also export onto a readable format, and also export the definition of the files on a more user friendly format that can be used by other tools.

E.g. we can supply it with a COBOL copybook, and it can output a XML file with definitions for each record type defined by the copybook.

As it also allows exporting the data onto standard CSV files, this can also be used to confirm that the work we do on SSIS is giving the correct results upon conversion.Second tool which is becoming a must have for any SSIS developer is BIML (Express on my case, for those very heavy on SSIS BIML Studio /MIST is a better option)

And lastly some code found over the net, or even my own code, to convert EBCDIC data onto their more user friendly C# equivalent.

This small project was then made of the following

Record Editor XML file output to get the file definitions on a usable format

BIML scripts to generate the SSIS package in a easy and repeatable way

C# code to perform the conversions

Excel - to process the XML definition and create blocks of BIML code and SQL Server table definitionNote that the Excel part could be done on a more mature way such as loading all definitions to a SQL Server metadata table, and then using custom functions build the required BIML constructs from the metadata.

As this would require a bit more work to be done I have decided not to do it at this stage.Steps taken

* Modify supplied copybook and change all FILLER definitions so that each filler becomes unique - add a sequence number to each one.

This is required to allow RecordEditor to supply us with a full definition of the record on the output xml file.

It has also the benefit that we will load the filler data - although in most cases it is of no interest, sometimes it does contain require data.

Can be discarded once on SQL Server, or not even loaded if we so choose.

* Load copybook onto RecordEditor and generate XML definition

* Load XML onto Excel, and build formulas to generate BIML constructs and SQL Table create statement

This requires

* conversion of BIML specific data types onto C#/SSIS/SQL

* determine when a definition requires special conversion

* generate BIML skeleton

* generate SSIS package using BIML ExpressIn order to test the conversion

* Export data from input file onto individual CSV files using RecordEditor

* Load onto SQL Server tables with the required layoutFollowing functions were implemented in C#

* ConvertEbcdicToUTF8str

* ConvertEbcdicPackedToLong

* ConvertEbcdicPackedToInt

* ConvertEbcdicPackedToDecimal

* ConvertEbcdicToDecimal

* ConvertEbcdicToInt

* ConvertEbcdicToLong

* Unpack (used by *Packed* functions above)All functions will return null if cast conversion fails.

UTF8str will remove trailling spacesOutstanding issues

* Does not report possible cast fails

* Does not process numeric values with embedded sign unless they are packed

At a later stage I may add this missing processingAttached ZIP file contains

* copybook.txt - cobol copybook extracted from supplied PDF

manipulated to suit what I think is best from a final SQL definition

can be changed at will within the normal COBOL rules

* file_format.xlsx

* TexasOil Directory - contains VS solution (2015)

* A few biml scripts - these are used to generate the main SSIS package

* TexasOilLoadCSV.dtsx - used to load RecordEditor .csv files for comparing

purposes

* TexasOilLoad.dtsx - Main package to process the mainframe fileAll of this was made in a way that makes it easy not only to regenerate the

main SSIS package should the input file change, but can also be used to

generate other packages for similar files.As is obvious the package will need to be changed to have the correct input

filename and folder, as well as the desired target SQL Server and table names.

These should also be changed on the BIML if there is a need to regenerate the

package.EDIT:

Forgot to add this.

One issue with BIML is that I was so far unable to make the file definition exactly as it needs to be.

Will be asking the maker for options, but in the meantime the following needs to be done1 - Once package is created go to the input file, and define the outputcolumnwidth with the same value as the input width

2 - go to the flat file source on the data flow , advanced editor, output columns, FullRecord Column and change usebinaryformat to true

Viewing 15 posts - 1 through 15 (of 16 total)

You must be logged in to reply to this topic. Login to reply