Cleaning Up the Cloud

-

October 3, 2025 at 12:00 am

Comments posted to this topic are about the item Cleaning Up the Cloud

-

October 3, 2025 at 3:19 pm

We have a team of two full time employees whose primary day to day responsibility is tracking and auditing our cloud spend. Each IT team that owns anything in the cloud gets a monthly meeting with one of them to go over the spend on what we are responsible for. Any changes are analyzed and must be justified. Initially those meetings were over an hour long as we tried to get a handle on exactly what was out there and get it all tagged to a team who owns it. These days they last around 15 minutes and are fairly painless.
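For anyone starting the same tagging exercise, here is a minimal sketch of the "tag it to a team who owns it" check. It assumes you have already exported an inventory as a list of dictionaries; the `team` tag key, the resource IDs and the inventory shape are all made up for illustration and are not tied to any particular cloud API.

```python
from collections import defaultdict

OWNER_TAG = "team"  # hypothetical tag key; use whatever your organisation agreed on


def group_by_owner(resources, owner_tag=OWNER_TAG):
    """Group resource IDs by owning team; unowned ones land under None."""
    owners = defaultdict(list)
    for res in resources:
        # Treat a missing tag dictionary, a missing key or a blank value as "no owner".
        team = (res.get("tags") or {}).get(owner_tag) or None
        owners[team].append(res["id"])
    return dict(owners)


# Made-up inventory, for illustration only.
inventory = [
    {"id": "vm-001", "tags": {"team": "data"}},
    {"id": "bucket-007", "tags": {}},
    {"id": "db-042", "tags": {"team": "web"}},
    {"id": "vm-099"},
]

by_owner = group_by_owner(inventory)
print("Unowned:", by_owner.get(None, []))
```

The list under `None` is what would need an owner assigned before a monthly review can be meaningful.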

The additional cost of spend governance was often overlooked in the rush to use the cloud for everything. It's not a trivial cost.

-

October 3, 2025 at 3:27 pm

Occasionally our GitHub workflow-based CI/CD pipeline would hit the default AWS resource quota for VPCs. The Terraform teardown process was being blocked because some components in a VPC have to be deactivated or disconnected before they can be destroyed.

Improvements to Terraform lessened it but it was still an occasional issue. Over time our CI/CD costs had crept up, not by a lot, but enough to be written off as company growth and the cost of doing business. Then one month, costs skyrocketed and I'm glad they did.

The cause of the spike was someone deploying something into the CI/CD account that they shouldn't have. That wasn't related to CI/CD, simply someone logging on to the wrong account. Easily corrected, permissions revoked.

I decided to see what else was in the CI/CD account. In theory it should be a few artefacts to support the CI/CD pipeline and nothing else. What I found was a shock.

I found that those occasional failures left other infrastructure behind. Not necessarily expensive things, but over time these had accumulated. Fortunately we have a naming convention for CI/CD generated artefacts, so identifying them was straightforward. In short, we cut the cost of our AWS CI/CD account by 90%.
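A rough sketch of that kind of sweep, assuming the resources have already been listed with a name and a creation time. The `cicd-` prefix, the one-day threshold and the sample names are hypothetical; substitute your own convention.

```python
from datetime import datetime, timedelta, timezone

CI_PREFIX = "cicd-"  # hypothetical prefix; substitute your own naming convention


def find_leftovers(resources, now, max_age=timedelta(days=1), prefix=CI_PREFIX):
    """Return names of CI/CD-named resources that have outlived max_age."""
    return [
        r["name"]
        for r in resources
        if r["name"].startswith(prefix) and now - r["created"] > max_age
    ]


# Made-up listing, for illustration only.
now = datetime(2025, 10, 3, 12, 0, tzinfo=timezone.utc)
resources = [
    {"name": "cicd-vpc-1234", "created": now - timedelta(days=30)},
    {"name": "cicd-vpc-5678", "created": now - timedelta(hours=1)},
    {"name": "shared-artifacts", "created": now - timedelta(days=400)},
]

leftovers = find_leftovers(resources, now)
print(leftovers)
```

The age threshold matters: a pipeline run that is still in progress will match the prefix too, so anything younger than a typical run should be left alone.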

It isn't always easy to find what is costing you money in AWS. You have to know how to interrogate the AWS Cost Explorer. There is a weasel entry called EC2-Other that covers a variety of items. We found that RDS snapshots had accumulated, individually not costing much, but cumulatively adding more straws to the donkey's back.
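One way to see what sits behind a catch-all line item is to group the cost data by usage type and total it up. The sketch below works on a dictionary shaped like the response from the Cost Explorer `get_cost_and_usage` call when grouped by a single dimension; the usage type names and the amounts in the sample are made up for illustration, not real billing data.

```python
from collections import defaultdict
from decimal import Decimal


def cost_by_group(response, metric="UnblendedCost"):
    """Total a grouped cost response per group key, most expensive first."""
    totals = defaultdict(Decimal)  # Decimal() starts at 0
    for period in response["ResultsByTime"]:
        for group in period["Groups"]:
            key = group["Keys"][0]  # assumes a single GroupBy dimension
            totals[key] += Decimal(group["Metrics"][metric]["Amount"])
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


# Made-up two-month sample, for illustration only.
sample = {
    "ResultsByTime": [
        {"Groups": [
            {"Keys": ["EBS:SnapshotUsage"],
             "Metrics": {"UnblendedCost": {"Amount": "41.50", "Unit": "USD"}}},
            {"Keys": ["NatGateway-Hours"],
             "Metrics": {"UnblendedCost": {"Amount": "32.40", "Unit": "USD"}}},
        ]},
        {"Groups": [
            {"Keys": ["EBS:SnapshotUsage"],
             "Metrics": {"UnblendedCost": {"Amount": "43.25", "Unit": "USD"}}},
        ]},
    ]
}

breakdown = cost_by_group(sample)
for usage_type, amount in breakdown:
    print(f"{usage_type:24} {amount:>10}")
```

Small items that never stand out in a single month become visible once they are summed over a longer period and sorted.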

Old AMIs (Amazon Machine Images) also cost money. It is quite laborious to find out what is in use and what is not. Just because no instances are currently running from those images does not mean that they aren't used by a periodic process.
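A minimal sketch of that cross-check, assuming you have already collected the image list and the sets of image IDs referenced from each place you know about. The image IDs, the reference sets and the idea of a separate "periodic jobs" set are hypothetical; the result is only a list of candidates to investigate, not a list that is safe to delete.

```python
def unused_image_candidates(images, referenced_ids):
    """Image IDs not referenced by any known consumer, oldest first."""
    candidates = [img for img in images if img["ImageId"] not in referenced_ids]
    # ISO 8601 timestamps in the same format sort correctly as plain strings.
    candidates.sort(key=lambda img: img["CreationDate"])
    return [img["ImageId"] for img in candidates]


# Made-up data, for illustration only.
images = [
    {"ImageId": "ami-aaa", "CreationDate": "2023-01-10T00:00:00.000Z"},
    {"ImageId": "ami-bbb", "CreationDate": "2025-06-01T00:00:00.000Z"},
    {"ImageId": "ami-ccc", "CreationDate": "2022-05-05T00:00:00.000Z"},
    {"ImageId": "ami-ddd", "CreationDate": "2021-11-20T00:00:00.000Z"},
]
used_by_running_instances = {"ami-bbb"}
used_by_periodic_jobs = {"ami-ccc"}  # e.g. a scheduled batch job that launches on demand

candidates = unused_image_candidates(
    images, used_by_running_instances | used_by_periodic_jobs
)
print(candidates)
```

Here `ami-ccc` has no running instance but is kept because a periodic job references it, which is exactly the case that a check against running instances alone would miss.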

I think AWS could be better at revealing these costs. So far, NOT doing so has benefitted them immensely, and you could argue that under the shared responsibility model it is not the responsibility of AWS to manage these. However, organisations are realising that the cloud is useful for some things but damned expensive for others. The pendulum is swinging the other way and gaining momentum.

There is always the spirit of a contract (which people respect) and the letter of a contract (which is supposed to ensure the contract is clear and applied in a fair and impartial way). The danger for big vendors of any type, cloud providers included, is that once customers feel that the spirit of an agreement is being broken, they will start shopping around.

-

October 3, 2025 at 7:25 pm

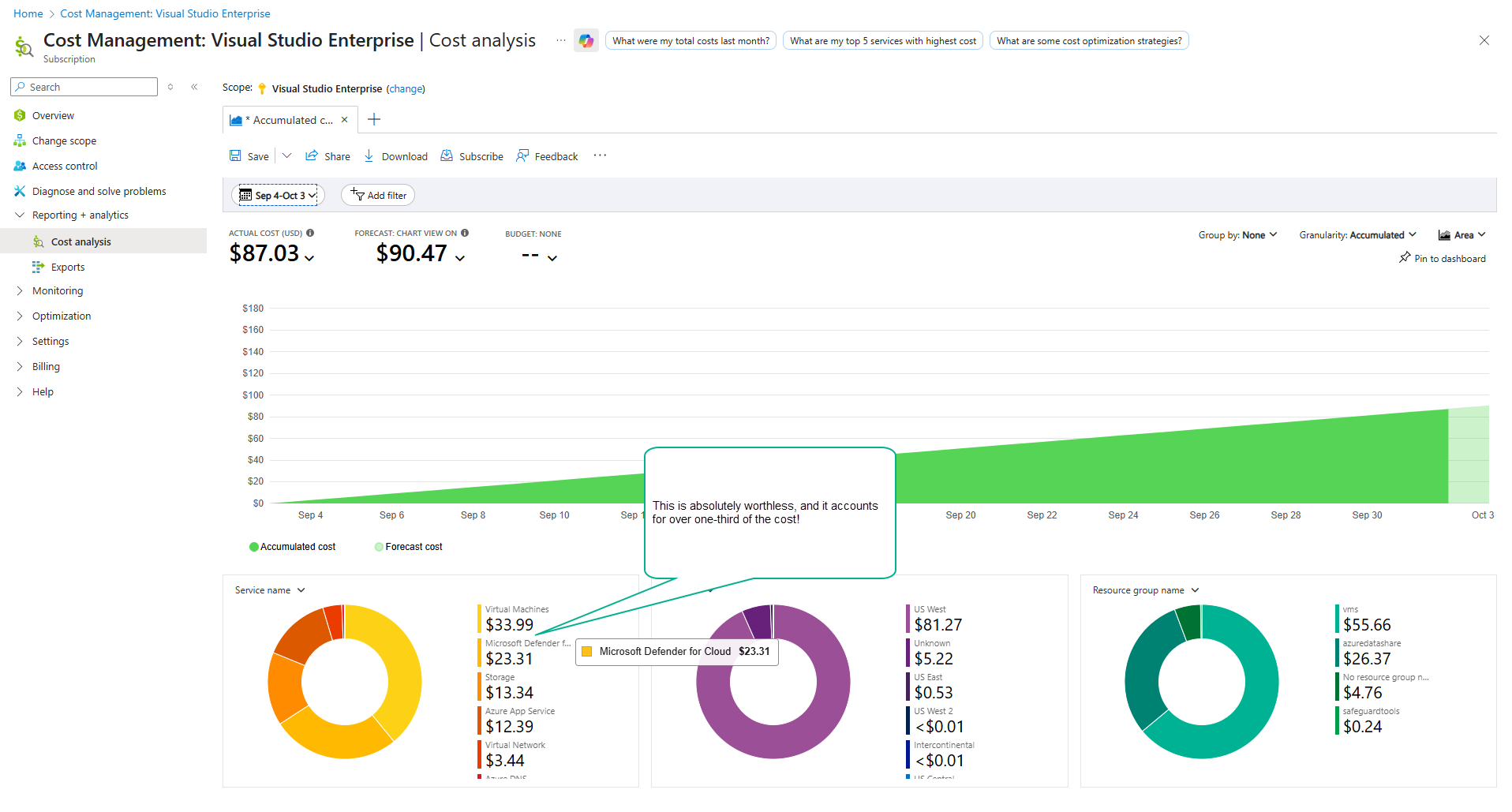

Yeah, a "resource", like a VM, is unfortunately made up of a dozen other little pieces, each with its own billing rate, so it is nearly impossible to know what something will cost. And then you have other worthless crap that Microsoft throws on by default, like this "Microsoft Defender for Cloud", that accounts for over a third of your cost! I've removed it before, and now it is back!

-

October 4, 2025 at 2:13 pm

Thank you, Steve, for this article. It brought to my attention that there can be hidden costs related to cloud usage. For example, I wasn't aware that logging or monitoring could be an additional cost. At work I've got an issue of not being able to read the data out of a database, and I'm not sure why. Working with Microsoft tech support we'll try to figure that out. But now I realize that once I identify what's going on, I'd better turn that off.

This also concerns me because I'm thinking about how to use Azure as an individual. I cannot afford a $150/month bill. I'm not sure at this point how to do that, but I've got to find a way. At work they are extremely scared of the bill escalating, and due to political issues, we just don't get much practice at using any cloud. In today's world, I think using the cloud is essential, but I don't see much chance of my getting much experience at that.

Rod

-

October 4, 2025 at 6:19 pm

One interesting option is the "Azure Spot VM" pricing model. You're basically using unallocated CPU resources from the global pool, but during periods of peak usage your VM can get evicted (shut down) with little notice. However, your VM image is still persisted in storage, so you can spin it back up again later.

I've used this several times in the past: spin up a really cheap VM (from what I recall it was indeed at a 90% discount), and use it for a few hours without interruption. But if I revisit a few days later, I'll need to spin it back up again - no big deal. Definitely not intended for a 24x7 production server, but you certainly can leverage it for production workloads.

"Do not seek to follow in the footsteps of the wise. Instead, seek what they sought." - Matsuo Basho

-

October 14, 2025 at 5:53 pm

Watching costs is a challenge, even for companies that have one or more people dedicated to doing this.

The spot VM is a good idea. It's also good to learn how to script this so you can quickly recreate something you need. I've learned to try to be really careful and separate new resources into new groups so I can whack the entire group at once.
