Standard Deviation (variance) using columns in same row.

-

August 6, 2018 at 3:15 pm

Jonathan AC Roberts - Monday, August 6, 2018 1:48 PMdrew.allen - Monday, August 6, 2018 1:37 PMJonathan AC Roberts - Monday, August 6, 2018 10:21 AMIt can be done without using a self join, using just windowed functions:;WITH CTE AS

(

SELECT t1.EmpName,

t1.Deduction,

t1.YearMonth,

LAG(t1.YearMonth) OVER (PARTITION BY t1.EmpName ORDER BY t1.YearMonth) AS PreviousYearMonth,

LAG(t1.Deduction) OVER (PARTITION BY t1.EmpName ORDER BY t1.YearMonth) AS PreviousDeduction,

VAR(t1.Deduction) OVER(PARTITION BY t1.EmpName ORDER BY t1.YearMonth ROWS BETWEEN 1 PRECEDING AND CURRENT ROW) AS Variance

FROM @test-2 t1

)

SELECT CTE.EmpName,

CTE.Deduction,

CTE.PreviousDeduction,

CTE.Variance

FROM CTE

WHERE CTE.YearMonth = @CurrentYearMonth

AND CTE.PreviousYearMonth = @PreviousYearMonth

You do realize that I offered a similar solution five days ago? It does require that he be on SQL 2012, which is not likely given that he posted in a SQL 2008 forum.

Drew

I did see that but it doesn't work as your WHERE clause restricts YearMonth to be @CurrentYearMonth. So it can't get the previous month.

Yeah, that's one of the problems with not having testable data.

Drew

J. Drew Allen

Business Intelligence Analyst

Philadelphia, PA -

August 7, 2018 at 6:45 am

Jonathan AC Roberts - Monday, August 6, 2018 10:05 AMsgmunson - Monday, August 6, 2018 9:43 AMOkay, I'm totally confused. Since when has the term "variance" come to mean something other than the difference for a set of exactly two values? If we were talking about a set of 20 or 50 or 100 values, I'd start thinking standard deviation, but for exactly two values, what would a mathematical "variance" actually mean? How could it be used, or for that matter, why? Any reason to NOT use just the mere difference?If you have n items (X_1, X_2,..,X_n) the Variance is: SUM((X_i - Mean)^2)/(N-1)

The variance is a measure of the spread from the mean. The Standard Deviation is the square root of the variance.

The "difference" gives a different answer to the "variance" and difference has no relation to the mean.I'm familiar with variance and standard deviation. However, what I'm struggling with here is the USE of such a calculation to look at just two values. There needs to be a particularly valuable reason to do such a thing, as measuring variance is not likely to be statistically significant over the course of a sequence of payroll cycles when all you are looking at is the amount of deductions for any given person having changed from one paycheck to the next. Even if kept over a number of years, the predictive value of variance between one value and it's calendar neighbor, given the odd and often capricious nature of human beings and payroll deductions, seems almost ludicrous to me. I'm looking for some kind of common sense reason to even use that calculation. Show me an economic value for making the effort.... Seems to me that measuring the entire set over a long period has a much higher likelihood of identifying seasonal patterns or trends, assuming that there's even a good reason, economically, to know that such trends exist.

Steve (aka sgmunson) 🙂 🙂 🙂

Rent Servers for Income (picks and shovels strategy) -

August 7, 2018 at 6:52 am

drew.allen - Monday, August 6, 2018 3:15 PMJonathan AC Roberts - Monday, August 6, 2018 1:48 PMdrew.allen - Monday, August 6, 2018 1:37 PMJonathan AC Roberts - Monday, August 6, 2018 10:21 AMIt can be done without using a self join, using just windowed functions:;WITH CTE AS

(

SELECT t1.EmpName,

t1.Deduction,

t1.YearMonth,

LAG(t1.YearMonth) OVER (PARTITION BY t1.EmpName ORDER BY t1.YearMonth) AS PreviousYearMonth,

LAG(t1.Deduction) OVER (PARTITION BY t1.EmpName ORDER BY t1.YearMonth) AS PreviousDeduction,

VAR(t1.Deduction) OVER(PARTITION BY t1.EmpName ORDER BY t1.YearMonth ROWS BETWEEN 1 PRECEDING AND CURRENT ROW) AS Variance

FROM @test-2 t1

)

SELECT CTE.EmpName,

CTE.Deduction,

CTE.PreviousDeduction,

CTE.Variance

FROM CTE

WHERE CTE.YearMonth = @CurrentYearMonth

AND CTE.PreviousYearMonth = @PreviousYearMonth

You do realize that I offered a similar solution five days ago? It does require that he be on SQL 2012, which is not likely given that he posted in a SQL 2008 forum.

Drew

I did see that but it doesn't work as your WHERE clause restricts YearMonth to be @CurrentYearMonth. So it can't get the previous month.

Yeah, that's one of the problems with not having testable data.

Drew

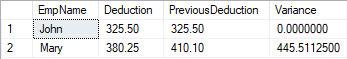

The original poster specifically stated that they wanted variance for the values coming from the same row. All one actually needs for that is the supplied values from the picture and a CROSS APPLY or two. Trying to address the problem by assuming that they :"should" be looking at values over a longer time period doesn't address the poster's problem. Of course, I find the poster's "problem" somewhat "problematic", as I can't see an economic value in holding on to that computation, even over a long period of time, as variance tends to mute trends and for this kind of data, where 0 is going to be a VERY frequent value, I'm at a loss to even come up with a scenario in which the "desired" computation has ANY economic value. Of course, that has never stopped a boss from demanding such calculations, even when there's no conceivable value...

Steve (aka sgmunson) 🙂 🙂 🙂

Rent Servers for Income (picks and shovels strategy) -

August 7, 2018 at 7:43 am

sgmunson - Tuesday, August 7, 2018 6:45 AMJonathan AC Roberts - Monday, August 6, 2018 10:05 AMsgmunson - Monday, August 6, 2018 9:43 AMOkay, I'm totally confused. Since when has the term "variance" come to mean something other than the difference for a set of exactly two values? If we were talking about a set of 20 or 50 or 100 values, I'd start thinking standard deviation, but for exactly two values, what would a mathematical "variance" actually mean? How could it be used, or for that matter, why? Any reason to NOT use just the mere difference?If you have n items (X_1, X_2,..,X_n) the Variance is: SUM((X_i - Mean)^2)/(N-1)

The variance is a measure of the spread from the mean. The Standard Deviation is the square root of the variance.

The "difference" gives a different answer to the "variance" and difference has no relation to the mean.I'm familiar with variance and standard deviation. However, what I'm struggling with here is the USE of such a calculation to look at just two values. There needs to be a particularly valuable reason to do such a thing, as measuring variance is not likely to be statistically significant over the course of a sequence of payroll cycles when all you are looking at is the amount of deductions for any given person having changed from one paycheck to the next. Even if kept over a number of years, the predictive value of variance between one value and it's calendar neighbor, given the odd and often capricious nature of human beings and payroll deductions, seems almost ludicrous to me. I'm looking for some kind of common sense reason to even use that calculation. Show me an economic value for making the effort.... Seems to me that measuring the entire set over a long period has a much higher likelihood of identifying seasonal patterns or trends, assuming that there's even a good reason, economically, to know that such trends exist.

That's a very good point. I worked in an HR office for my last job, and we did payroll twice monthly. Some of the deductions were taken out every payroll, but others were only deducted once monthly, so doing a comparison between the two was not really a valid comparison.

Drew

J. Drew Allen

Business Intelligence Analyst

Philadelphia, PA -

August 7, 2018 at 10:12 am

drew.allen - Tuesday, August 7, 2018 7:43 AMsgmunson - Tuesday, August 7, 2018 6:45 AMJonathan AC Roberts - Monday, August 6, 2018 10:05 AMsgmunson - Monday, August 6, 2018 9:43 AMOkay, I'm totally confused. Since when has the term "variance" come to mean something other than the difference for a set of exactly two values? If we were talking about a set of 20 or 50 or 100 values, I'd start thinking standard deviation, but for exactly two values, what would a mathematical "variance" actually mean? How could it be used, or for that matter, why? Any reason to NOT use just the mere difference?If you have n items (X_1, X_2,..,X_n) the Variance is: SUM((X_i - Mean)^2)/(N-1)

The variance is a measure of the spread from the mean. The Standard Deviation is the square root of the variance.

The "difference" gives a different answer to the "variance" and difference has no relation to the mean.I'm familiar with variance and standard deviation. However, what I'm struggling with here is the USE of such a calculation to look at just two values. There needs to be a particularly valuable reason to do such a thing, as measuring variance is not likely to be statistically significant over the course of a sequence of payroll cycles when all you are looking at is the amount of deductions for any given person having changed from one paycheck to the next. Even if kept over a number of years, the predictive value of variance between one value and it's calendar neighbor, given the odd and often capricious nature of human beings and payroll deductions, seems almost ludicrous to me. I'm looking for some kind of common sense reason to even use that calculation. Show me an economic value for making the effort.... Seems to me that measuring the entire set over a long period has a much higher likelihood of identifying seasonal patterns or trends, assuming that there's even a good reason, economically, to know that such trends exist.

That's a very good point. I worked in an HR office for my last job, and we did payroll twice monthly. Some of the deductions were taken out every payroll, but others were only deducted once monthly, so doing a comparison between the two was not really a valid comparison.

Drew

Yep, ... that, and the nature of human behavior tends to be somewhat random where things like payroll deductions are concerned. That said, i am actually open to hearing what someone might be able to tell me is the economic value of that information. Warning: said person would be best served by being fluent in statistics and the tendency of human beings to use them to support ideas not actually supported by their data.

Steve (aka sgmunson) 🙂 🙂 🙂

Rent Servers for Income (picks and shovels strategy)

Viewing 5 posts - 16 through 19 (of 19 total)

You must be logged in to reply to this topic. Login to reply