Self join / row_number() partition number generation based multiple status

-

May 11, 2020 at 3:20 am

Thanks for what? You haven't asked a question.

If you haven't even tried to resolve your issue, please don't expect the hard-working volunteers here to waste their time providing links to answers which you could easily have found yourself.

-

May 11, 2020 at 12:04 pm

hi

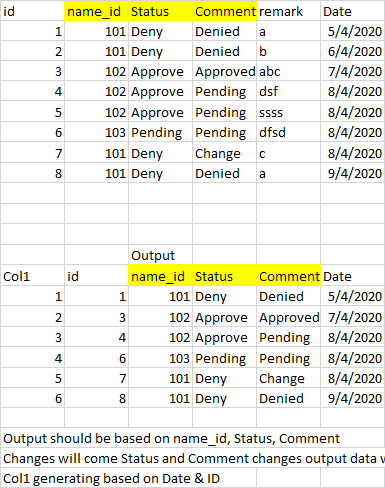

I have mentioned in excel sheet and both input and output required

-

May 11, 2020 at 12:42 pm

Please post your sample data in a consumable format, along with DDL. See here if you are unsure what is required. Many people here will not open Excel attachments, for security reasons. Simple text files are best.

Also, provide some details around what you have tried so far. Rather than expecting someone else to do all of your work, which starts to sound like you are being plain lazy, focus on specific problems you are encountering (duplicate rows, join issues, syntax errors etc etc).

If you haven't even tried to resolve your issue, please don't expect the hard-working volunteers here to waste their time providing links to answers which you could easily have found yourself.

-

May 11, 2020 at 12:52 pm

ravisankar wrote:Whenever there is a change in Status or Comment for name_id then we need to consider that record

The table could be altered so it becomes a "System-Versioned" temporal table. Then it would capture all changes committed to each row. If it's too much data (it would generate a history record if the 'remark' column were updated) you could create a trigger to maintain whatever is appropriate.

Aus dem Paradies, das Cantor uns geschaffen, soll uns niemand vertreiben können

-

May 11, 2020 at 1:18 pm

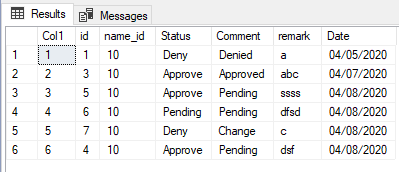

;WITH myTable AS

(

SELECT *

FROM (VALUES (1,10,'Deny','Denied','a','04/05/2020'),

(2,10,'Deny','Denied','b','04/06/2020'),

(3,10,'Approve','Approved','abc','04/07/2020'),

(4,10,'Approve','Pending','dsf','04/08/2020'),

(5,10,'Approve','Pending','ssss','04/08/2020'),

(6,10,'Pending','Pending','dfsd','04/08/2020'),

(7,10,'Deny','Change','c','04/08/2020')) AS T(id,name_id,Status,Comment,remark,Date)

),

CTE AS

(

SELECT *,

LAG(Comment) OVER (PARTITION BY name_id ORDER BY Date) PrevComment,

LAG(Status) OVER (PARTITION BY name_id ORDER BY Date) PrevStatus

FROM myTable

)

SELECT ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) Col1,

id,

name_id,

Status,

Comment,

remark,

Date

FROM CTE

WHERE PrevComment <> Comment

OR PrevStatus <> Status

OR PrevStatus IS NULL

-

May 11, 2020 at 2:56 pm

drop table if exists #data;

create table #data(

id int primary key not null,

name_id int,

[Status] varchar(12),

Comment varchar(64),

remark varchar(64),

day_dt date);

insert #data(id,name_id,[Status],Comment,remark,day_dt) VALUES

(1,101,'Deny','Denied','a','04/05/2020'),

(2,101,'Deny','Denied','b','04/06/2020'),

(3,102,'Approve','Approved','abc','04/07/2020'),

(4,102,'Approve','Pending','dsf','04/08/2020'),

(5,102,'Approve','Pending','ssss','04/08/2020'),

(6,103,'Pending','Pending','dfsd','04/08/2020'),

(7,101,'Deny','Change','c','04/08/2020'),

(8,101,'Deny','Denied','a','04/09/2020');

;with data_cte(id, name_id, [Status], Comment, remark, day_dt, PrevComment, PrevStatus) as (

select

*,

lag(Comment) over (partition by name_id order by day_dt),

lag([Status]) over (partition by name_id order by day_dt)

from

#data)

select

row_number() over (order by day_dt) col1,

id, name_id, [Status], Comment, remark, day_dt

from

data_cte

where

(PrevComment <> Comment or PrevComment is null)

or (PrevStatus <> [Status] or PrevStatus is null)

order by

day_dt;Aus dem Paradies, das Cantor uns geschaffen, soll uns niemand vertreiben können

Viewing 7 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply