How to achieve parallelism in BULK INSERT with OLE DB source and target objects

-

December 3, 2019 at 9:03 pm

Hi all!

I need help to get the performance up on my ETL. My data flow task is very simple in nature:

- OLE DB source (MS SQL Server table) points to

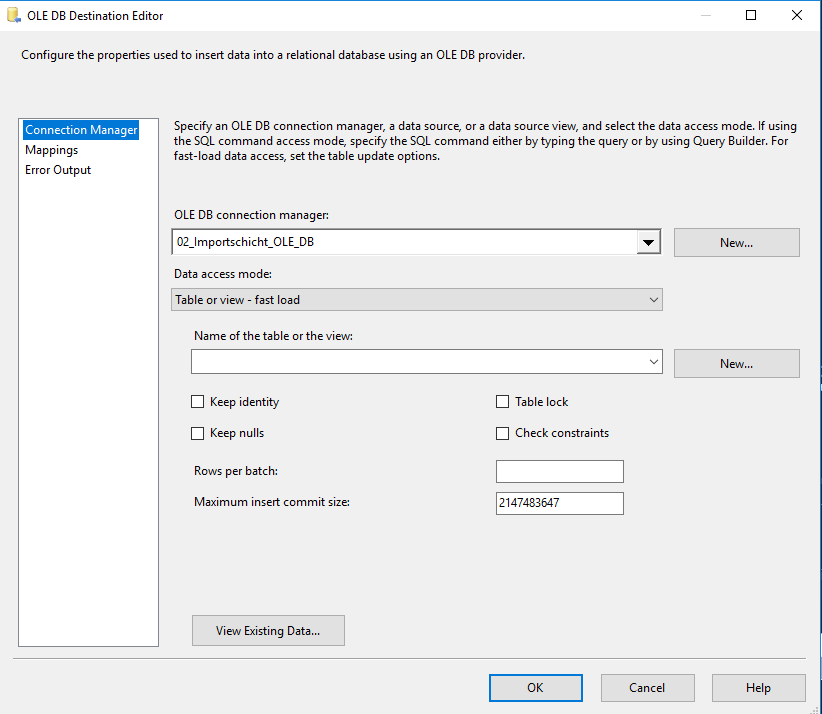

- OLE DB target (MS SQL Server table) with TABLOCK hint

The target table is uncompressed, has no indices or other constraints (PKs, Uniques) -> Heap. The database is set to SIMPLE RECOVERY.

According to the Microsoft docs (e.g. https://docs.microsoft.com/en-us/previous-versions/sql/sql-server-2008/dd425070(v=sql.100)?redirectedfrom=MSDN) I have met all requirements for minimal logging (I have checked to DB log that this is true).

After starting the data flow task, I realised that only one CPU core is utilised, the other 7 cores are not used. Above document actually gives the answer, I guess:

Parallelism and Partitioning Input

To optimize throughput during bulk operations, parallelism is essential. This is best achieved by running several bulk load command at the same time. Because the each bulk operation is single threaded, multiple copies must be run to utilize all CPU cores

I found other posts from different forums which addresses the problem, too:

- https://social.msdn.microsoft.com/Forums/sqlserver/en-US/474ba856-5ba0-43e1-a138-a5a2adc3aec7/bulk-insert-on-multiple-processors?forum=sqldatabaseengine

- https://www.anexinet.com/blog/how-to-optimize-bulk-insert-operations-in-ssis/

Following the discussion I need to have a lot of parallel BULK INSERTs in parallel.

But, HOW do I achieve this automatically? Both links suggest to use a split operator in SSIS to split the stream into several ones and have concurrent loads.

I have about 20 tables with 400 million rows each per month. It doesn't scale to make this manually in every data flow task. Further, my development machine has 8 cores, my production machine has 48 cores. It is a bad design to have different ETLs for DEV and PROD.

Is there a nice efficient way to make use of all cores? I mean, why should I use SSIS when a SELECT INTO or a INSERT SELECT can do the job 23x faster (tested it).

How did you solve such problems?

Thanks a lot 🙂

-

December 3, 2019 at 11:21 pm

If the tables are on the same SQL Server instance, you're probably better off creating SPs to do the inserts and using SSAS ExecuteSQlL tasks to call them. If you do have to move the data through SSIS to other instances, i suggest looking into using the Balanced Data Distributor in the data flow task.

-

December 4, 2019 at 11:29 am

I have tried the Balanced Data Distributer and used one output for one CPU core (i.e. 8 outputs in total). Now, when looking at the SQL monitoring I see one or two BULK INSERTS at the same time and not 8.

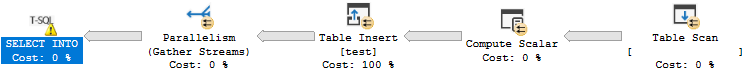

This might be that only one thread is calling the SELECT. But why? I am not using any UDFs, IDENTITY or are serial forcing operators/functions. Indee, when just executing the query with SELECT INTO the execution plan shows that it can read in parallel from the source (see attached picture).

How do I make parallel read possible in SSIS with OLE DB source?

Thanks!

Attachments:

You must be logged in to view attached files. -

December 4, 2019 at 11:48 am

svenflock wrote:Hi all!

I need help to get the performance up on my ETL. My data flow task is very simple in nature:

- <li style="list-style-type: none;">

- OLE DB source (MS SQL Server table) points to

- <li style="list-style-type: none;">

- OLE DB target (MS SQL Server table) with TABLOCK hint

The target table is uncompressed, has no indices or other constraints (PKs, Uniques) -> Heap. The database is set to SIMPLE RECOVERY.

According to the Microsoft docs (e.g. https://docs.microsoft.com/en-us/previous-versions/sql/sql-server-2008/dd425070(v=sql.100)?redirectedfrom=MSDN) I have met all requirements for minimal logging (I have checked to DB log that this is true).

After starting the data flow task, I realised that only one CPU core is utilised, the other 7 cores are not used. Above document actually gives the answer, I guess:

Parallelism and Partitioning Input

To optimize throughput during bulk operations, parallelism is essential. This is best achieved by running several bulk load command at the same time. Because the each bulk operation is single threaded, multiple copies must be run to utilize all CPU cores

I found other posts from different forums which addresses the problem, too:

- <li style="list-style-type: none;">

- <li style="list-style-type: none;">

Following the discussion I need to have a lot of parallel BULK INSERTs in parallel.

But, HOW do I achieve this automatically? Both links suggest to use a split operator in SSIS to split the stream into several ones and have concurrent loads.

I have about 20 tables with 400 million rows each per month. It doesn't scale to make this manually in every data flow task. Further, my development machine has 8 cores, my production machine has 48 cores. It is a bad design to have different ETLs for DEV and PROD.

Is there a nice efficient way to make use of all cores? I mean, why should I use SSIS when a SELECT INTO or a INSERT SELECT can do the job 23x faster (tested it).

How did you solve such problems?

Thanks a lot 🙂

I'm not sure how would you achieve parallelism by applying TABLOCK on the Destination table?

-

December 4, 2019 at 12:03 pm

TABLOCK is independent for parallel inserts. It is just relevant for minimal transaction logging. Is that what you mean?

-

December 4, 2019 at 12:18 pm

To be absolutely sure I just defined a OLE DB source mit a row count after that. The data is still read only by one thread. How is this possible?

-

December 4, 2019 at 1:29 pm

You can achieve faster INSERT with TABLOCK since it would avoid the blockage due to Read and Shared Locks but you cannot achieve the concurrency in insert into destination table.

If your intention is to achieve parallelism and for which you are trying to make the read from source parallel. But since you won't be able to achieve concurrency in destination insert then I don't find any relevance in focussing only on source unless you also think about the target.

-

December 4, 2019 at 1:47 pm

I had table lock disabled in order to allow concurrent inserts. According to documentation minimal logging works for HEAP tables in SIMPLE RECOVERY and concurrent inserts. But that is just a logging thing.

But to exclude the target, if I just have the source and a row count, I have no bottleneck on the target side. In this example the data is still read single-threaded.

So, in this use case: how to I achieve parallel read from the source?

-

December 4, 2019 at 2:09 pm

You can't control SQL Query Processing unless you use Query Hints, Table Hints or specifically ask SQL Server Engine to do something. Query Optimizes choose best plan by its own unless you instruct specifically.

Why don't you try Batching using While Loop or For Loop container. For e.g. if you have 100 rows you can have 5 different loops in SSIS Control Flow each running parallely and in batches of 5 let's say. So at a time 20 records shall be processed. You can decide the Batch Size. You can write Stored Procedure and call it in SSIS using Execute SQL Task or you can use Loop Container whatever you are comfortable with.

With this you will have control over the parallelism.

Most importantly, always keep the available number of cores when intending to make something parallel. For e.g. If you have 2 cores and you are having 4 loops then it won't make sense as you can achieve parallelism only upto 2 threads and rest would be queued.

-

December 4, 2019 at 2:24 pm

I get your point to have full power over parallelism if I implement it manually.

But I don't get the part with the query processor. Why does the query optimizer allow parallel reads WHEN using INSERT SELECTs and SELECT INTOs but not in the SSIS context when reading the stream. I have even specified the query hint

OPTION(USE HINT('ENABLE_PARALLEL_PLAN_PREFERENCE'))with no effect.

Is there any documentation which states that OLE DB sources can handle only one thread like OLE DB destinations? I tried ADO.NET. Same here, only one thread.

I do really like to go back to T-SQL but I have this issue described here:

https://sqlperformance.com/2019/05/sql-performance/minimal-logging-insert-select-heap

As I am not in in control of the batch size, all 400mn records are logged. This is done single threaded, too and of course, the storage gets drown as well. It happens on all SQL Server 2016 machines here.

-

December 4, 2019 at 3:09 pm

if you're trying to get that parallelism from the source you'll need 8 copies of the oledb src using modulo that are something like-

OLEDB Src 1: SELECT * FROM table WHERE Id % 8 = 0

OLEDB Src 2: SELECT * FROM table WHERE Id % 8 = 1

… all the way "= 7"

(Hopefully you have an Int Id) then use the SSIS Union All to bring them back together or have 8 copies of the oledb destination without Tablock.

-

December 4, 2019 at 4:07 pm

There must be something in your query which is causing the plan to run sequential even if you have forced parallelism.

There are few features which can be run only sequential. Few days ago I saw the list of such features. Somehow I don't have the URL but you can definitely search it on your own.

Additionally, there are few other things such as MAXDOP settings, number of data files mapped to tempdb, number of NUMA nodes etc. which plays vital role in Parallelism.

-

December 4, 2019 at 7:34 pm

It is just a simple SELECT of a subset of columns. No transformation at all. I am loading my data from source system DBs to my staging area. All business and tech transformation take place a different succeeding layer. So, apparently, SSIS OLEDB/ADO.NET sources do not support multi-threaded query execution. I do not have a surrogate identity like key in the sources, i.e. I cannot use modulo tricks to get the data separated.

As suggested by you, I will just go to SQL task executions for those trivial SELECTs as the MINIMAL LOGGING issue only occurs with bad statistics in combination with window functions, joins and aggregation.

I still cannot believe that basic operators like DB sources are not queried in parallel if the query supports it.

Does anybody know if the new OLE DB driver released with SQL Server 2019 brings any improvements in that domain?

https://www.microsoft.com/en-us/download/details.aspx?id=56730

-

December 4, 2019 at 7:49 pm

I'm not sure if this will help you. I've seen many properties in Connection, Control Flow, Data Flows and Tasks which you can also explore. There must be some property in order to enable the parallelism. SSIS is one of the advanced In-Memory ETL tool and there must be something.

Have you tried this?

https://www.dynamics101.com/ssis-parallel-processing/

https://blogs.msdn.microsoft.com/sqlperf/2007/05/11/implement-parallel-execution-in-ssis/

May be this will be helpful

https://www.mssqltips.com/sqlservertip/6078/parallel-execution-of-tasks-in-ssis/

-

December 4, 2019 at 8:37 pm

Hi,

thanks for links, the third one was new to me :-). It is indeed the nearest match to my use case. It still has a 1:1 relation between table and source, i.e. data is not split and merged after transfer. I will still use only three threads if 3 tables remain from my 1.300 tables from the use case (the largest ones).

I have played with a lot of parameters (batch sizes, caching, engine threads) which did not affect the overall behaviour that it kept single threaded.

I will have to make more detailed experiments on that.

Viewing 15 posts - 1 through 15 (of 20 total)

You must be logged in to reply to this topic. Login to reply