Binary(Document) Extraction using SSIS - derived column

-

Hello all - I'm reaching out for some pointers regarding a task I have pending:

I have a specific task whereby I have to extract some files out of a database(Varbinary) column. I've done this on a couple of occasions using an SSIS package - albeit in a pretty simplistic manner. For all intents, it was a 'dump' of all documents associated with all users into a single folder - using a derived column. Pretty simple..no frills, just get the docs out - that was my remit.

The current conundrum is that there are multiple documents stored in a table for any particular user, and I've been instructed that there should be a root folder - with a sub - folder for each userID - containing all of that users extracted documents. Each document must have the userID appended to the filename also.

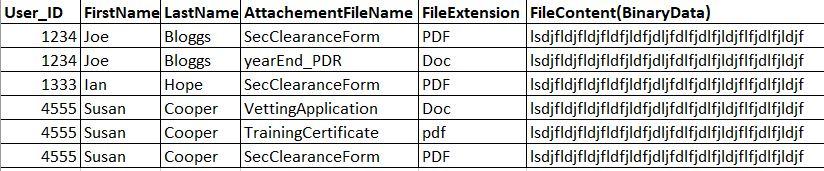

My table (Derived using some joins) looks like this: I have about 18000 documents to extract.

Any pointers on how I can achieve my goals using SSIS? I have a package already that extracts everything from a prepared table and dumps into a single folder but I need something more sophisticated for this task. I'd normally just fudge through until I stumble upon or work out a solution but I'm time critical with this - hence asking for some advice.

I suppose I could do this in a two step approach, whereby I extract the documents into a single folder, appending the userID to the filename during extraction. I could then write some Powershell to create a folder based on the userID\ move the documents to that folder. It would be nice to do it in a single pass with one tool if possible (and a useful learning exercise)

Thanks in advance for any tips.

J

-

July 10, 2020 at 12:10 pm

Thanks for posting your issue and hopefully someone will answer soon.

This is an automated bump to increase visibility of your question.

-

July 10, 2020 at 5:06 pm

Not real sure I follow - but it shouldn't be too much of an issue to add a script task with the UserID (defined as a variable and populated in the for-each loop). Using either C# of VB.Net you can then easily check for the sub-folder and if it doesn't exist then create it.

In C# you can do this:

string userDirectory = Dts.Variables["User::UserDirectory"].Value.ToString();

Directory.CreateDirectory(userDirectory);Create a variable in SSIS named UserDirectory - and in that variable you build out the full path to the directory location using a project parameter for the root directory and the UserID variable for the sub-folder.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

July 14, 2020 at 8:13 am

Thank you for the reply, Jeffrey.

Before reading your reply - I had done the following:

1 -Execute SQL task that gets all of the userID's that have attachments present in other tables (result set is a variable)

2 - For Each Loop with an ADO Record set enumerator- populated by the above variable

3 - Inside the FE Loop I have a File System Task that creates each users directory in a designated root folder (parameterised)

4 - Outside of the for each loop I have a Data Flow Task with OLEDB Source (Running pretty much the same query as in the Execute SQL Task in step 1 - with the inclusion of the Binary column to be exported)I have parameterised the query so the file path is built when the query is executed and it is stored as a column in the OLEDB result set.

5 - And export column task takes the binary data from the 'Content' column and exports it to the filepath .

It works, but is very crude. It just creates a load of folders, then spits out the documents to those folders. To be a bit more elegant, I'd like to do the document extraction in the FEL container using the ADO enumerator (Create Folder>Create Document>Loop)

Your suggestion of the script task to populate a variable with the export filepath may be a way forward (using a derived column and an export column task)

I'm not very good at all with C# scripts or VB - I'll have a play and see what I come up with - suggestions, pointers all welcome 🙂

Thank you again.

-

So - in your for-each loop all you are doing is creating the directory if it doesn't exist? If so - then all you really need to do is move the data flow inside the for-each loop.

Use the same process - but the data flow now uses the user id to get just that users data and outputs that users data to the specified folder.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

July 22, 2020 at 1:25 pm

Just a follow up on this, Jeffrey.

I have managed to get a working solution with your help.

I had been trying to read in the 'collection' variable from the For Each Loop Container (@UserID) and re-use it in the data flow (Which I moved into the loop at your suggestion)

When passing the collection variable to the query in the OLEDB connection (that enumerates the records with BLOB storage) it wasn't returning anything (as if the WHERE clause contained no result) I think this may have been something to do with the scope of the variable being 'package'?

Solution was to create an Expression task in the FEL container which populated a new Variable (using the FEL 'collection variable' @user-id as a base) I used this new variable as a parameter in the where clause of the OLEDB query and this worked a treat.

The package now gets a list of users with documents attached, loops through the list :- For each user - Create Folder>Dump Documents for that user in the folder - rinse repeat.

Thank you again for taking the time to reply 🙂

Viewing 6 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply