Having

applied dozens and dozens of Service Packs to various SQL Server clusters, I

still never cease to learn that not everything always goes as planned. J

So, a funny thing happened on the way to apply my service pack to a SQL

Server 2008 R2 cluster. Well, it really wasn’t all that funny. In fact, I was for the moment, somewhat sad

at event viewer log pronouncing the untimely demise of my SQL Server:

“[sqsrvres]

CheckServiceAlive: Service is dead”

Famous last

words, “He’s dead Jim.”

(youtube clip)

Can’t help to

think the author/developer of that error msg, was a Trekkie (noun - a fan of the US science fiction

television program Star Trek.)

So here’s a

harrowing tale with a happy ending.

Follow me on a rollercoaster ride of twists and turns on my journey through

the event logs, and it’s very informative error messages. It turned out ok, but it temporarily gave me

a “cluster headache” The solution was fairly simple, but not

obvious.

I know I

executed this service pack upgrade flawlessly! For a quick review here, I

performed the following steps:

Identified

the passive node

Log

onto any of the SQL nodes -> Open command prompt -> Type ‘Cluster Group”

and Enter.

If the cluster groups are hosted between the two

nodes, you need to run the command “cluster group “<Group Name>” /move” where <Group

Name> is the name of each cluster group e.g “cluster

group “Available Storage” /move“ to move the group

named Available Storage to the second node.

Type the command “cluster resource”

and ensure all resources are online and owned by only one of the nodes.

2. Install

the Service Pack on the Passive Node

Identify

the correct service pack/build for your SQL Server version http://sqlserverbuilds.blogspot.com/

Download

or copy the service pack locally (on each node)

Right-click

à ‘Run

as Administrator

Follow

the prompts, select the instance/features, accept the license agreement, check

for warnings, click <Update> (off you go!)

Several

minutes later, Node 1 is successfully updated!

Voila:

Great! Now

all we need to do is apply the SQL service pack on the second node which is

currently holding all the cluster resources. The next action is to failover all

the cluster groups to the passive node which we just updated.

You can use

the command line options as follows:

- cluster group “Cluster Group” /move

- cluster group “Available Storage” /move

- cluster group “SQL Server (Instance)” /move

- cluster group “MSDTC” /move

When

all groups have been moved over to the other node, verify all the resources

using the command ‘cluster res’ Follow

the above steps to install the service pack on the second node (which is now

the passive node) so both servers are running the same service pack. When the

second node had been updated, test the failover a few times to ensure that your

failover clustering is working as expected.

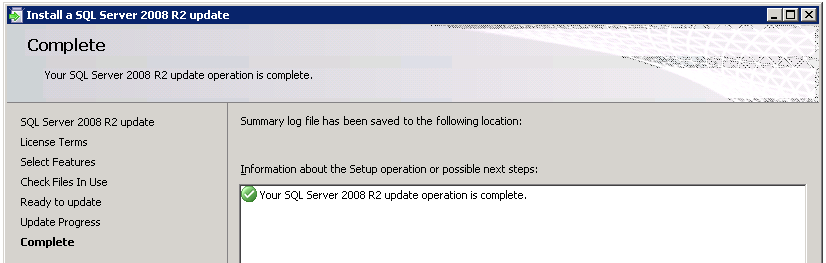

So,

I get the above success and completion screen on Node2, and all looks

good. All I needed to do, was validate

the services were online, and databases accessible. Launch SSMS, and try to connect. No go.

“17187

SQL Server is not ready to accept new client connections. Wait a few minutes

before trying again....”

Ok,

I can deal with that. I’m as patient as

the next guy. I see another helpful

error msg, allegedly referring to the state of my SQL Service:

“19019

[sqsrvres] OnlineThread: Could not connect to server but server is considered

up.”

Yeah,

well, try telling that to the users! “Uh,

you may not be able to connect to the server, but the server is considered up” :-O

Checking

the Failover Cluster Manager, I see the SQL Services, still offline. I attempt to bring it online, and monitoring

the event viewer I get another more serious game changing error:

“error

912 Script level upgrade for database 'master' failed because upgrade step

'sqlagent100_msdb_upgrade.sql' encountered error 33009, state 2, severity 16.

This is a serious error condition which might interfere with regular operation and

the database will be taken offline. If the error happened during upgrade of

the 'master' database, it will prevent the entire SQL Server instance from

starting. Examine the previous errorlog entries for errors, take the

appropriate corrective actions and re-start the database so that the script upgrade

steps run to completion”

So, this one

is a “serious error”. I guess the other

ones should NOT be taken too seriously, and just a light-hearted “YOU’RE

SCREWED” but don’t worry error! Then

again, it must be a serious error, there’s 82 words in this one! Of course, it’s a good thing the error

message lets me know that, the fact that the ‘master’ database failed, “might

interfere with regular operation and the database will be taken offline.” Do you think!??

Anyways, this

cannot be going in a positive direction.

At this time, I was looking for that motrin, because my cluster headache

was underway.

Not having a

good feeling about this, I sat breathlessly while I scrolled through the event

logs, uncovering more and more red-alert level messages. The simultaneous IMs, email, and ringing

phone to let me know the server is down, was not as helpful. And yes, there was an approved and scheduled change

control ticket, as well as an fyi email, before I began.

Along the

way, I see that, as expected, the SQLAgent could also not start, but was sure

glad I caught that extremely informative error message:

“SQLServerAgent

could not be started (reason: Unable to connect to server '(local)';

SQLServerAgent cannot start).”

So, let me

try to understand this. The reason why

SQLServerAgent could not be started is because….(long pause for dramatic

effect) …SQLServerAgent cannot start.

OK, that makes perfect sense!

Moving on.

In my

continued travel to unravel (ha a rhyme) this mysterious cluster conundrum, I

attempted to failover to the other node.

Hey, maybe it will come online there.

Was worth a shot.

No dice! I peered into the event viewer there, to see

what, if any, new error message would clue me in:

And then, the

dreaded of all messages,

“error 3417 Cannot recover the master database. SQL Server

is unable to run. Restore master from a full backup, repair it, or rebuild it.

For more information about how to rebuild the master database, see SQL Server

Books Online.”

Noooooooooooooooooooo! Ok, chill out, collect yourself Pearl, and

well, find the installation media, and verify the most recent backup of the

master database is at hand. This was

going to be a long day. And, I didn’t

even have lunch yet!

Just before,

I was to embark on the point of no return, I wanted to see if there was

anything, something, I could possibly do to avoid having to rebuild &

restore the master.

I hopped on

twitter, and used the most helpful hash tags of all social media: #SQLHelp

I also used my bingoogle-fu, (a

term I think was coined by one of the SQL Server MVPs). Oh, and I used my deductive powers of

reasoning by looking over the error messages again.

Let me pause,

and say thank you to Argenis Fernandez (@DBArgenis) and Joe Fleming (@Muad_DBA)

for their quick replies. They mentioned

to check the registry, compare keys on both nodes, as well as provided some

links to look at.

Having

possibly seen an error 435 along the way, Joe suggested this link: SQLAgent

435. I reference it here, but don’t

think this was the issue. However, it reminded

me of the upgrade error mentioned earlier:

“error 912

Script level upgrade for database 'master' failed because upgrade step

'sqlagent100_msdb_upgrade.sql'”

Hmm,

SQLAgent, msdb, failed to upgrade. It

does seem to involve something failing in the msdb_upgrade.sql script. I came upon this

link, which was a triple-play, in the sense that it contained the three

error messages I saw, 912, 3417, and 33009, respectively. Keep in mind, the referenced link discusses a

different scenario where an upgrade from SQL 2000 to SQL 2008 failed. But it was useful in giving me clues towards

the final resolution.

I did find

one more msdn blog, which was a direct hit on the error message, “Script

level upgrade….failed” Script! That’s it, my upgrade script failed! Ok, I thought I knew that. So, if the script failed, let’s try to

disable script execution. How do we do

that? The article tells us to start the server

by using trace flag –T902, which basically its purpose is to bypass upgrade

scripts.

In the

meanwhile, the twitter conversation was continuing, and @Muad_DBA asked me

about the tempdb. What is the size and

configuration of the tempdb? Yikes, it

was only the default 8,192 or 8MB, one file.

He suggested that the upgrade was running out of temp space to do the

upgrade, and the tempdb growth is impacting the upgrade process. I increased the size of the tempdb, just to

be sure, but didn’t see anything indicating it ran out of space or couldn’t

auto-grow.

I also tried one other article I found, thatreflected the current state of affairs, ie, SQL

Server 2008 Service fails to start after Service Pack Installation. It also mentions the Script level upgrade 'sqlagent100_msdb_upgrade.sql'

failed error. It has you check and

create some registry entries (specifically to add the DefaultData &

DefaultLog paths). This didn’t seem to

make a difference.

Back to –T902. By using –T902 as startup parameter, I was

able to get the SQL Service running, via the services.msc, and log in via sqlcmd,

as well as launch a SSMS instance.

Yet, another

similar hit, but not exactly my build, I stumbled closer to the issue, and here

was the closest yet. SQL

Server 2008 R2 Unable to Start After Applying CU1. Bringing you

attention to Step 3, listed here:

“ALTER AUTHORIZATION ON DATABASE::MSDB TO SA”

When

I looked at the owner of database ‘msdb’, I did in fact see that it was an

owner, other than ‘sa’. It was never

restored, so not sure how it changed, but apparently this WAS the key.

I

ran the above statement, and also, sp_changedbowner ‘sa’ (which I believe is

essentially the same thing, other that Alter

Authorization, (see the highlighted hyperlink) applies to greater securables

in SQL Server, and provides more granularity.

Once

I changed the ownership of msdb back to ‘sa’, I restarted the services via the

Failover Cluster Manager, and….several hours later, it’s online, up and

running. Failed over back and forth a

few times, to make sure.

So,

in a nutshell, remember to check the ownership of the system databases before

any upgrade, and ensure that it is set to ‘sa’, because you too will end up

with a cluster headache.

Quite

anti-climatic for sure, but don’t avoid updating your SQL Servers. There is no patch for stupidity. I hope the above information is helpful.

May

your SQL Servers Live

Long, and Prosper !

=============================================================

You could and should follow me on Twitter! @Pearlknows

Please inquire about our comprehensive SQL Server Health Check assement. We will provide you with a plan to optimize your slow performance! pearlknows@yahoo.com