In part 1 of the In-memory OLTP articles we gave you an introduction into In-memory OLTP, showing what the requirements are, the limitations and a quick look at the Advisors.

In this article we are going to dive a little deeper into a large portion of In-memory OLTP: Memory-Optimized (or In-memory) tables! A large part of the performance increase in In-memory OLTP comes from these Memory-Optimized tables where your data is stored in your system’s RAM instead of a storage subsystem.

Memory-Optimized table theory

Before we can dive into the fun practical part I want to give you some information on how Memory-Optimized tables work.

Memory-Optimized tables reside in the memory of the server that is running SQL Server. Changes to table data are directly written to the table into the memory and reads are read directly from memory. Even though it seems no disk actions are being performed, Memory-Optimized tables are fully durable, every transaction will be logged in the transaction log after it has been committed (there is an option to use non-durable Memory-Optimized tables which are not logged, data is lost when a restart of the SQL Server service occurs) and is saved into a Data and Delta files (or Checkpoint File Pairs, CFP).

The Data files contains rows from one or more Memory-Optimized tables that were updated or inserted by transactions. The rows are inserted into the Data file sequentially, in the order that the transactions were processed. Since rows are added sequentially it is completely possible that a row from table C is saved between two rows of table A. Data files can have a maximum size of 128MB when you have 16GB or more memory, and 16MB when you have less then 16GB memory. When a Data file reaches fullness a new Data and Delta file is created automatically. Along with the rows a transaction commit timestamp is saved so rows can be matched to transactions.

When we delete or update (which is actually a delete + insert in a CFP) a row that is saved inside the Data file it is not changed or removed from the Data file. This is where the Delta files come in, they track what rows were deleted or changed inside the Data file (A Delta file is always paired with a Data file as a CFP and cover the same transaction timestamps).

Eventually rows in the Data file that are marked deleted in the Delta file and are no longer referenced by any transactions, will be cleaned up by a CFP Merge operation to free up space in the CFP’s.

The rows in Memory-Optimized tables are versioned. Each row inside the Memory-Optimized table can have multiple versions. A big change compared to Snapshot Isolation (which also allows multiple versions of a row to exist) is that the versions are stored and maintained in-line, along with the table data, in memory (and in the CFP) instead of TempDB.

Concurrency is handled through an optimistic lock and latch free mechanism, where readers do not block writers and writers do not block readers, by using snapshots of the rows using row versioning. Because the concurrency control is optimistic, it assumes there are no conflicts in the transaction isolation. If any conflict does occur, one of the transaction will be terminated and an error will be generated. The client will need to retry the transaction.

Row versioning

The data structure for Memory-Optimized tables is also different compared to disk-based tables. Where disk-based tables are organized into pages and extents, row versions are located by using memory pointers instead of page numbers.

Creating Memory-Optimized tables

So let’s move on with the fun stuff, creating Memory-Optimized tables!

For this test I created a new test database without a Memory-Optimized filegroup, we will add this filegroup in the next step.

-- Create OLTP Test database

CREATE DATABASE [OLTP_Test] CONTAINMENT = NONE

ON PRIMARY

(

NAME = N'OLTP_Test', FILENAME = N'E:\Data\OLTP_Test_Data.mdf' , SIZE = 51200KB , FILEGROWTH = 10%

)

LOG ON

(

NAME = N'OLTP_Test_log', FILENAME = N'E:\Log\OLTP_Test_Log.ldf' , SIZE = 10240KB , FILEGROWTH = 10%

)

GO

The next thing we need to do before we can create the actual Memory-Optimized tables is add a Memory-Optimized filegroup and add a data file.

-- Add filegroup for memory optimized data

ALTER DATABASE OLTP_Test ADD FILEGROUP OLTP_MO CONTAINS MEMORY_OPTIMIZED_DATA

-- Add file

ALTER DATABASE OLTP_Test ADD FILE (name='OLTP_mo_01', filename='e:\data\OLTP_Test_mo_01.ndf') TO FILEGROUP OLTP_MO

The magic parameter in the ADD FILEGROUP command is the CONTAINS MEMORY_OPTIMIZED_DATA. This indicates that this filegroup will hold Memory-Optimized tables.

Now that we have created our filegroup and added a file we need to switch the isolation level for Memory-Optimized tables. We can do this with the SQL command below:

-- Alter the isolation level

ALTER DATABASE OLTP_Test SET MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT=ON

This means that transaction that are being run against Memory-Optimized tables will automatically run with Snapshot isolation.

Let’s create a Memory-Optimized table:

-- Create our test table

USE OLTP_Test

GO

CREATE TABLE dbo.OLTP

(

[AddressID] [int] IDENTITY(1,1) NOT NULL PRIMARY KEY NONCLUSTERED,

[AddressLine1] [nvarchar](60) NOT NULL,

[AddressLine2] [nvarchar](60) NULL,

[City] [nvarchar](30) NOT NULL,

[StateProvinceID] [int] NOT NULL,

[PostalCode] [nvarchar](15) NOT NULL,

[rowguid] [uniqueidentifier] ,

[ModifiedDate] [datetime]

)

WITH (MEMORY_OPTIMIZED=ON)

GO

The MEMORY_OPTIMIZED=ON hint indicates this will be a Memory-Optimized table.

One thing to note is that I indicate that the index created by the Primary Key is a non-clustered index. This is because clustered indexes (and a lot of other things) are not supported for Memory-Optimized tables.

We can actually check if a table is Memory-Optimized by viewing the properties of a table:

Memory-Optimized table properties

If the Memory optimized option is set to True this means the table is a Memory-Optimized table!

Another interesting option is Durability. In this case it is set to SchemaAndData this means that this Memory-Optimized table is “durable”, no data will be lost when your server crashes!

Just to add some data to the table you can use the query below:

-- Insert some data into our table

INSERT INTO dbo.OLTP

(

AddressLine1, AddressLine2, City, StateProvinceID, PostalCode, rowguid

)

VALUES

('1970 Napa Ct.',NULL,'Bothell','79','98011','9AADCB0D-36CF-483F-84D8-585C2D4EC6E9'), ('8192 Seagull Court', NULL, 'Kenmore','79', '98028', '3DE1BDE3-FF37-4992-B50E-E12D5E247132')

GO

As some of you might have recognized, the table structure and data of my test table closely resembles the AdventureWorks database. I actually used the Person.Address table to create the OLTP table in our test database but I removed the data types that are not supported for Memory-Optimized table use.

Why do I insert those two rows manually and not through a SELECT from the Person.Address table in the AdventureWorks database? This is why:

Msg 41317, Level 16, State 5, Line 1

A user transaction that accesses memory optimized tables or natively compiled procedures cannot access more than one user database or databases model and msdb, and it cannot write to master.

Let’s take a look at the data we just inserted:

-- Select data from Memory-Optimized table

SELECT * FROM OLTP

Awesome, it’s all there!

Creating a non-durable Memory-Optimized table

Now that we created a durable Memoru-Optimzed table, let’s check out non-durable Memory-Optimized tables.

The syntax to creating a non-durable Memory-Optimized table is for biggest part the same as creating a durable table:

-- Create a non-durable Memory-Optimized table

USE OLTP_Test

GO

CREATE TABLE dbo.OLTP_nd

(

[AddressID] [int] IDENTITY(1,1) NOT NULL PRIMARY KEY NONCLUSTERED,

[AddressLine1] [nvarchar](60) NOT NULL,

[AddressLine2] [nvarchar](60) NULL,

[City] [nvarchar](30) NOT NULL,

[StateProvinceID] [int] NOT NULL,

[PostalCode] [nvarchar](15) NOT NULL,

[rowguid] [uniqueidentifier] ,

[ModifiedDate] [datetime]

)

WITH (MEMORY_OPTIMIZED=ON, DURABILITY=SCHEMA_ONLY)

GO

The big change is the addition of the DURABILITY=SCHEMA_ONLY option to indicate this table is non-durable.

Let’s insert the same data as we did for the durable Memory-Optimized table:

-- Insert some data into our table

INSERT INTO dbo.OLT_nd

(

AddressLine1, AddressLine2, City, StateProvinceID, PostalCode, rowguid

)

VALUES

('1970 Napa Ct.',NULL,'Bothell','79','98011','9AADCB0D-36CF-483F-84D8-585C2D4EC6E9'), ('8192 Seagull Court', NULL, 'Kenmore','79', '98028', '3DE1BDE3-FF37-4992-B50E-E12D5E247132')

GO

Let’s check if everything is inside the table:

-- Check table contents

SELECT * FROM OLTP_nd

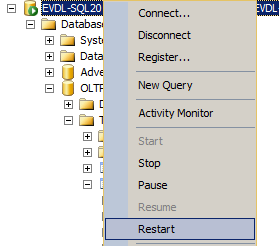

Again, the rows we inserted are there, the interesting part comes when we restart our SQL Server service!

Restart incoming!

If we run the same select query against our non-durable table now:

Nothing to see here…

The table is empty, exactly as you would suspect by creating a non-durable table. The table structure is still in place though, so a restart of the SQL Server service will only remove the rows in your non-durable table but not the table itself!

Wrap up

In this article we took a closer look into Memory-Optimized tables. We discussed some internals on how Memory-Optimized tables work and created a durable and non-durable Memory-Optimized table.

In the next article of the In-Memory OLTP series we will take a look at Hash Indexes, the new index type used for Memory-Optimized tables!