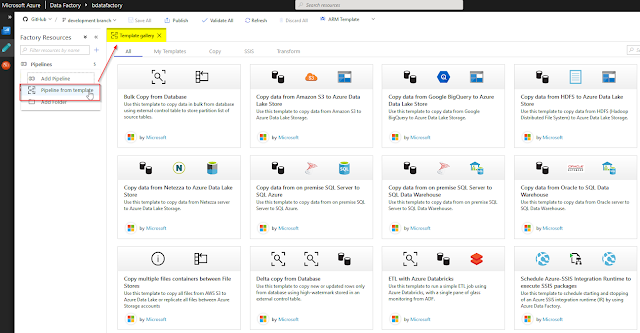

A good friend of mine, Simon Nuss, reminded me this morning that Azure Data Factory (ADF) has been enriched with a feature that helps you create pipelines from existing templates in the Template gallery. All you need to do is click the ellipsis beside Pipelines and select this option:

Currently, there are 13 templates that you can start using right away:

- Bulk Copy from Database

- Copy data from Amazon S3 to Azure Data Lake Store

- Copy data from Google BigQuery to Azure Data Lake Store

- Copy data from HDFS to Azure Data Lake Store

- Copy data from Netezza to Azure Data Lake Store

- Copy data from on premise SQL Server to SQL Azure

- Copy data from on premise SQL Server to SQL Data Warehouse

- Copy data from Oracle to SQL Data Warehouse

- Copy multiple files containers between File Stores

- Delta copy from Database

- ETL with Azure Databricks

- Schedule Azure-SSIS Integration Runtime to execute SSIS package

- Transform data using on-demand HDInsight

I expect that Microsoft will keep adding new templates to this list.

Test case:

To test this out, I decided to use the "Copy multiple files containers between File Stores" template, with a use case of copying source data files from one blob container to another, staging blob container.

I already have a blob container, storesales, with several CSV files, and I want to automate copying them to a new container, storesales-staging, that I have just created in my existing blob storage account:

Step 1: Selecting an ADF pipeline template from the gallery

After choosing the "Copy multiple files containers between File Stores" template, a window pops up where I can set linked services for both source and sink file stores.

Once this is done, a new pipeline with two activities is created in my data factory: "Get Metadata" and a "For Each" container. There is also a link to the official Microsoft documentation for Azure Data Factory, but it is only available while you first work with the new pipeline; the next time you open the pipeline, the link is no longer there.
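To make the two-activity structure more concrete, here is a minimal Python sketch that builds a pipeline definition of this shape as JSON. It is an illustration under my own assumptions, not the exact definition the template generates: the activity names, the dataset names (`SourceFileStore`, `DestinationFileStore`) and the parameter names (`SourceFilePath`, `DestinationFilePath`) are hypothetical placeholders.

```python
import json


def build_copy_pipeline(name: str) -> dict:
    """Build an ADF-style pipeline definition: Get Metadata feeding a ForEach."""
    get_metadata = {
        "name": "EnumerateContainers",  # hypothetical activity name
        "type": "GetMetadata",
        "typeProperties": {
            # hypothetical dataset pointing at the source file store
            "dataset": {"referenceName": "SourceFileStore", "type": "DatasetReference"},
            # childItems requests the files and subfolders under the source path
            "fieldList": ["childItems"],
        },
    }
    copy_item = {
        "name": "CopyOneContainer",  # hypothetical activity name
        "type": "Copy",
        "inputs": [{"referenceName": "SourceFileStore", "type": "DatasetReference"}],
        "outputs": [{"referenceName": "DestinationFileStore", "type": "DatasetReference"}],
    }
    for_each = {
        "name": "ForEachContainer",  # hypothetical activity name
        "type": "ForEach",
        # run only after the metadata lookup has succeeded
        "dependsOn": [
            {"activity": get_metadata["name"], "dependencyConditions": ["Succeeded"]}
        ],
        "typeProperties": {
            # iterate over the items returned by the Get Metadata activity
            "items": {
                "value": f"@activity('{get_metadata['name']}').output.childItems",
                "type": "Expression",
            },
            "activities": [copy_item],
        },
    }
    return {
        "name": name,
        "properties": {
            "activities": [get_metadata, for_each],
            # hypothetical parameter names for the source and destination paths
            "parameters": {
                "SourceFilePath": {"type": "String"},
                "DestinationFilePath": {"type": "String"},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_copy_pipeline("CopyStoreSales"), indent=2))
```

The point of the sketch is the dependency: the "For Each" container only runs after "Get Metadata" succeeds, and it loops over whatever child items that activity returned.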

Step 2: Parameters setting

This particular pipeline template already has two parameters, one for the source file path and one for the destination file path in my blob storage. I set them to "/storesales" and "/storesales-staging" respectively:

Step 3: Testing my new ADF pipeline

Next, I test my new pipeline in Debug mode. It executes successfully:

I can also see that all my CSV files were copied into my destination blob storage container. One test case using ADF pipeline templates is successfully finished!
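If you would rather not eyeball the two containers, the check can be scripted. Below is a small sketch, assuming you already have the blob names of both containers as plain lists (for example, collected with the `list_blobs()` method of a `ContainerClient` from the `azure-storage-blob` package). The file names are made up for illustration; they are not the actual files from my storesales container.

```python
def missing_in_destination(source_names, destination_names):
    """Return the source file names that are absent from the destination, sorted."""
    return sorted(set(source_names) - set(destination_names))


# Hypothetical listings of the source and staging containers
source = ["stores.csv", "sales_2017.csv", "sales_2018.csv"]
staging = ["sales_2017.csv", "stores.csv", "sales_2018.csv"]

missing = missing_in_destination(source, staging)
if missing:
    print("Not copied yet:", missing)
else:
    print("All source files are present in the staging container")
```

An empty result means every source file has a counterpart in the staging container. This compares names only, so it would not catch a file that was copied with different content.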

Summary:

1) Gallery templates in Azure Data Factory are a great way to start building your data transfer/transformation workflows.

2) More new templates will become available, I hope.

3) And you can further adjust pipelines based on those templates with your custom logic.

Let's explore and use ADF pipelines from its Template gallery more!

And let's wait for the general availability of Mapping Data Flows; it will become even more interesting!