In this blog post, we’re going to work on deploying a SQL Server Availability Group in a Kubernetes Cluster in on-premises virtual machines. I’m going to walk you through the process as it’s documented by Microsoft at this link here. This document is very good but only shows you how to do it in Azure, we’re going to do it in VMs. I’m going to follow Microsoft’s documentation as much as possible, deviating only when necessary for on-premises deployments. I’m also going to explain the key Kubernetes concepts that you need to know to understand how all these pieces together. This is a long one, buckle up.

Creating Your Cluster

In my last blog post, I showed you how to create a three-node Kubernetes on premise, virtual machine based cluster. I’m going to use the same setup for this blog post. Check out how to create this cluster at this link here. There’s a reason I wrote that post first 🙂

Process Overview

Here’s the big picture of all the steps we will perform in this demonstration

- Create a Namespace

- Create Secrets

- Create a Storage Class and mark it default

- Create Persistent Volumes

- Create a ServiceAccount, ClusterRole, ClusterRoleBinding and a Deployment

- Deploy the SQL Server Pods

- Expose the Availability Group Services as a Kubernetes Service

- Connect to our Availability Group from outside our cluster

- Create a database

Create a Namespace

First up, we’ll create a Namespace. In Kubernetes you can use namespaces to put boundaries around resources for organizational or security reasons.

demo@k8s-master1:~/ag$ kubectl create namespace ag1

namespace/ag1 created

demo@k8s-master1:~/ag$ kubectl get namespaces

NAME STATUS AGE

ag1 Active 11m

default Active 28h

kube-public Active 28h

kube-system Active 28h

Create Secrets

In Kubernetes, the cluster store can hold Secrets…in other words sensitive data like passwords. This is valuable because we don’t want to store this information in our containers and we certainly don’t want to have our passwords as clear text in our deployment manifests. So in those manifests, we can reference these values and then upon deployment the Pods will retrieve the secrets when they’re started and pass the secret into the container for the application to use. In this case, we’re creating two secrets to be used by our Pods. The first is the SA password we’ll use for our SQL Server Instance, the second is the password for our Service Master Key which is behind the certificates that are used to authenticate the Availability Group (*cough*) Database Mirroring endpoints.

Let’s create the secrets with kubectl.

demo@k8s-master1:~/ag$ kubectl create secret generic sql-secrets –from-literal=sapassword=”1-S0methingS@Str0ng” –from-literal=masterkeypassword=”2-S0methingS@Str0ng” –namespace ag1

secret/sql-secrets created

demo@k8s-master1:~/ag$ kubectl get secret sql-secrets -o yaml –namespace ag1 | grep masterkeypassword | awk ‘{ print $2 }’ | base64 –decode

2-S0methingS@Str0ng

demo@k8s-master1:~/ag$

Create a Storage Class and Mark it Default

OK, now it’s time to work on our storage configuration. This is where we’re going to deviate most from the Microsoft documentation. We’re going to start off by creating a StorageClass. A StorageClass is a way to group storage together based on the storage’s attributes. In this case, we’re going to use a StorageClass because later on in the YAML files provided by Microsoft they are going to make storage requests of the cluster referencing the default storage class in the namespace. So that’s what this code does, creates the local-storage StorageClass and marks it as default.

Here is the YAML definition of our StorageClass, save this into a file called StorageClass.yaml.

kind: StorageClass

apiVersion: storage.k8s.io/v1

metadata:

name: local-storage

annotations: {

"storageclass.kubernetes.io/is-default-class" : "true"

}

provisioner: kubernetes.io/no-provisioner

volumeBindingMode: WaitForFirstConsumerFile 1 – StorageClass.yaml

Once you have that code saved into StorageClass.yaml, go ahead and run the following command to pass the StorageClass manifest to the API server for it to create the resource for you.

demo@k8s-master1:~/ag$ kubectl apply -f StorageClass.yaml –namespace ag1

storageclass.storage.k8s.io/local-storage created

demo@k8s-master1:~/ag$ kubectl get storageclass –namespace ag1

NAME PROVISIONER AGE

local-storage (default) kubernetes.io/no-provisioner 3h20m

Create Persistent Volumes

Next up is creating our actual storage. In Kubernetes, the cluster provides storage to Pods and the Pods request the storage. Cluster storage can be many different types. You can have NFS, virtual disks from your cloud provider, local storage and many more. Local storage is the storage that’s attached to the Kubernetes Node itself. In this demonstration, we’re going to use local storage. Since we’re deploying Availability Groups that will put a Pod on each Node in our cluster, we’re going to need to define three PersistentVolumes, one on each Node.. Looking at the code in File 2 – PV.yaml (below) you will see three PersistentVolumes each with a different name, all pointing to /var/opt/mssql. This will be local on each Node in our cluster. So we will need to make a /var/opt/mssql directory on each node in our cluster. So go ahead and do that now. Storage in HA systems requires understanding of what data is living where in your system and layering the appropriate data protections to meet your recovery objectives. This configuration places the storage on each Node in your Kubernetes Cluster. For more information on the local storage type in Kubernetes check out this post here. In that post they specifically call out that this Persistent Volume type is appropriate for “Distributed storage systems that shard or replicate data across multiple nodes” and Availability Groups fall into that category.

In an effort to loosely couple Pods and their storage, the cluster administrator defines Persistent Volumes, that what you’ll do when you run the code in File 2 – PV.yaml below. Then the pods will use PersistentVolumeClaim to attach the Pods to the PersistentVolumes.

kind: PersistentVolume

apiVersion: v1

metadata:

name: ag1-pv-volume-node1

labels:

type: local

spec:

storageClassName: local-storage

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

local:

path: "/var/opt/mssql"

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k8s-node1

---

kind: PersistentVolume

apiVersion: v1

metadata:

name: ag1-pv-volume-node2

labels:

type: local

spec:

storageClassName: local-storage

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

local:

path: "/var/opt/mssql"

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k8s-node2

---

kind: PersistentVolume

apiVersion: v1

metadata:

name: ag1-pv-volume-node3

labels:

type: local

spec:

storageClassName: local-storage

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

local:

path: "/var/opt/mssql"

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/hostname

operator: In

values:

- k8s-node3File 2 – PV.yaml

Once you have that code saved into PV.yaml, go ahead and run the following command to pass the PersistentVolume manifest to the API server for it to create the resource for you.

demo@k8s-master1:~/ag$ kubectl apply -f pv.yaml –namespace ag1

persistentvolume/ag1-pv-volume-node1 created

persistentvolume/ag1-pv-volume-node2 created

persistentvolume/ag1-pv-volume-node3 created

demo@k8s-master1:~/ag$ kubectl get PersistentVolume –namespace ag1

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

ag1-pv-volume-node1 10Gi RWO Retain Available local-storage 29s

ag1-pv-volume-node2 10Gi RWO Retain Available local-storage 29s

ag1-pv-volume-node3 10Gi RWO Retain Available local-storage 29s

Create a ServiceAccount, ClusterRole, ClusterRoleBinding and a Deployment

Now that we have our storage created it’s time to get started creating our resources for our cluster. In the Samples provided by Microsoft, they provide operator.yaml. For our deployment, we’ll use that file just as is from Microsoft. I have the contents below for you. The core details of the operator.yaml manifest file are that it creates a ServiceAccount, a ClusterRole, a ClusterRoleBinding and also defines the Deployment for the Kubernetes Operator Pod for our Availability Group. Basically, this sets up the security contexts in the cluster for our Pods and also kicks off the Deployment of the Availability Group Kubernetes Operator Pod. A Deployment is a Kubernetes object that will ensure that the defined Pod is up and running at all times. Looking towards the bottom of the file you’ll see replicas: 1 this defines that there must be exactly one Operator pod using the 2019-CTP2.1-ubuntu container at all times.

apiVersion: v1

kind: Namespace

metadata: {name: ag1}

---

apiVersion: v1

kind: ServiceAccount

metadata: {name: mssql-operator, namespace: ag1}

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata: {name: mssql-operator-ag1}

rules:

- apiGroups: ['']

resources: [serviceaccounts, services]

verbs: [create, get, update, delete]

- apiGroups: [batch]

resources: [jobs]

verbs: [create, get, update, delete]

- apiGroups: [rbac.authorization.k8s.io]

resources: [roles, rolebindings]

verbs: [create, get, update, delete]

- apiGroups: [apps]

resources: [statefulsets]

verbs: [create, delete, get, update]

- apiGroups: ['']

resources: [configmaps, endpoints, secrets]

verbs: [create, get, update, watch, delete]

- apiGroups: ['']

resources: [pods]

verbs: [get, list, update]

- apiGroups: [apiextensions.k8s.io]

resources: [customresourcedefinitions]

verbs: [create]

- apiGroups: [apiextensions.k8s.io]

resourceNames: [sqlservers.mssql.microsoft.com]

resources: [customresourcedefinitions]

verbs: [delete, get, update]

- apiGroups: [mssql.microsoft.com]

resources: [sqlservers]

verbs: [get, list, watch]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata: {name: mssql-operator-ag1}

roleRef: {apiGroup: rbac.authorization.k8s.io, kind: ClusterRole, name: mssql-operator-ag1}

subjects:

- {kind: ServiceAccount, name: mssql-operator, namespace: ag1}

---

apiVersion: apps/v1beta2

kind: Deployment

metadata: {name: mssql-operator, namespace: ag1}

spec:

replicas: 1

selector:

matchLabels: {app: mssql-operator}

template:

metadata:

labels: {app: mssql-operator}

spec:

containers:

- command: [/mssql-server-k8s-operator]

env:

- name: MSSQL_K8S_NAMESPACE

valueFrom:

fieldRef: {fieldPath: metadata.namespace}

image: mcr.microsoft.com/mssql/ha:2019-CTP2.1-ubuntu

name: mssql-operator<br /> serviceAccount: mssql-operator File 3 – operator.yaml

Now let’s go ahead and deploy operator.yaml and create the resources.

demo@k8s-master1:~/ag$ kubectl apply -f operator.yaml –namespace ag1

Warning: kubectl apply should be used on resource created by either kubectl create –save-config or kubectl apply

namespace/ag1 configured

serviceaccount/mssql-operator created

clusterrole.rbac.authorization.k8s.io/mssql-operator-ag1 created

clusterrolebinding.rbac.authorization.k8s.io/mssql-operator-ag1 created

deployment.apps/mssql-operator created

kubectl get ServiceAccount –namespace ag1

kubectl describe ClusterRole mssql-operator-ag1 –namespace ag1

kubectl describe ClusterRoleBinding mssql-operator-ag1 –namespace ag1

kubectl get deployment –namespace ag1

kubectl describe deployment –namespace ag1

Before moving on, we’ll want to make sure our Deployment is finished and Ready. We can use kubectl get deployment for that, the key here is the DESIRED and CURRENT are both 1. This means our Operator is online and ready.

demo@k8s-master1:~/ag$ kubectl get deployment –namespace ag1

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

mssql-operator 1 1 1 1 4m38s

demo@k8s-master1:~/ag$ kubectl get pods –namespace ag1

NAME READY STATUS RESTARTS AGE

mssql-operator-6d88564d97-hqz8r 1/1 Running 0 7m8s

Deploy the SQL Server Pods

Ok, now that the Storage, Security and the Operator are deployed. Now it’s time to deploy the Pods for our SQL Server Availability Group Replicas. In File 3 – sqlserver.yaml (below) we define the resources needed to get our Pods up and running. But, this file is a little bit different…it’s using Custom Resources. Your first hint to that is look at the apiVersion, it references mssql.microsoft.com/v1 and the kind: SqlServer. Microsoft has chosen to implement a custom object likely so that it can abstract away some of the complexity of creating this configuration.

In this file, you are going to see a lot of things come together from the other configurations we’ve made so far in this post. You’ll find that the ag1 namespace is referenced, you’ll see the storageClass is default, and the saPassword being read from the Cluster’s secrets.

In addition to the custom SqlServer resource, in this file, you will also find the Service object defined for each Pod replica. The Service provides persistent access to the Pod’s application. In this file, we create three services all on 1433. This port is the port listening on the cluster network.

I did make one change to this file from the original Microsoft file. I changed the Service Type from LoadBalancer to NodePort. Doing this exposes the Service which is running on 1433 inside the Pod and also on the Pod network to the Node’s real IP address and bind the Pods internal 1433 port to an available ephemeral port on the Node. This means I can connect to each of the SQL Instances outside of the Kubernetes cluster using the IP address of the virtual machine (Node) and the NodePort ephemeral port. The type LoadBalancer is used in cloud scenarios where the cloud provider stands up and load balancer resource for you. We’re doing this On-Prem, so this had to change.

apiVersion: mssql.microsoft.com/v1

kind: SqlServer

metadata:

labels: {name: mssql1, type: sqlservr}

name: mssql1

namespace: ag1

spec:

acceptEula: true

agentsContainerImage: mcr.microsoft.com/mssql/ha:2019-CTP2.1-ubuntu

availabilityGroups: [ag1]

instanceRootVolumeClaimTemplate:

accessModes: [ReadWriteOnce]

resources:

requests: {storage: 5Gi}

storageClass: default

saPassword:

secretKeyRef: {key: sapassword, name: sql-secrets}

sqlServerContainer: {image: 'mcr.microsoft.com/mssql/server:2019-CTP2.1-ubuntu'}

---

apiVersion: v1

kind: Service

metadata: {name: mssql1, namespace: ag1}

spec:

ports:

- {name: tds, port: 1433}

selector: {name: mssql1, type: sqlservr}

type: NodePort

---

apiVersion: mssql.microsoft.com/v1

kind: SqlServer

metadata:

labels: {name: mssql2, type: sqlservr}

name: mssql2

namespace: ag1

spec:

acceptEula: true

agentsContainerImage: mcr.microsoft.com/mssql/ha:2019-CTP2.1-ubuntu

availabilityGroups: [ag1]

instanceRootVolumeClaimTemplate:

accessModes: [ReadWriteOnce]

resources:

requests: {storage: 5Gi}

storageClass: default

saPassword:

secretKeyRef: {key: sapassword, name: sql-secrets}

sqlServerContainer: {image: 'mcr.microsoft.com/mssql/server:2019-CTP2.1-ubuntu'}

---

apiVersion: v1

kind: Service

metadata: {name: mssql2, namespace: ag1}

spec:

ports:

- {name: tds, port: 1433}

selector: {name: mssql2, type: sqlservr}

type: NodePort

---

apiVersion: mssql.microsoft.com/v1

kind: SqlServer

metadata:

labels: {name: mssql3, type: sqlservr}

name: mssql3

namespace: ag1

spec:

acceptEula: true

agentsContainerImage: mcr.microsoft.com/mssql/ha:2019-CTP2.1-ubuntu

availabilityGroups: [ag1]

instanceRootVolumeClaimTemplate:

accessModes: [ReadWriteOnce]

resources:

requests: {storage: 5Gi}

storageClass: default

saPassword:

secretKeyRef: {key: sapassword, name: sql-secrets}

sqlServerContainer: {image: 'mcr.microsoft.com/mssql/server:2019-CTP2.1-ubuntu'}

---

apiVersion: v1

kind: Service

metadata: {name: mssql3, namespace: ag1}

spec:

ports:

- {name: tds, port: 1433}

selector: {name: mssql3, type: sqlservr}

type: NodePortFile 3 – sqlserver.yaml

Let’s now deploy this Pods and Services by passing sqlserver.yaml into our API Server. This might take a minute or two as the containers are downloaded from the Internet and started. In the output below you can see the three Pods are Created and the three services are created. I do want to call out when you create the Pods, you will see 6 Pods when you use the kubectl get pods command. The “initialize” Pods are managed by the Deployment as Kubernetes Jobs that are used to configure the Availability Group Replicas, these run only once and complete. When running the deployment, a successful deployment will have three mssqlN-0 Pods up with a status of Running and the initialize pods are Completed.

demo@k8s-master1:~/ag$ kubectl apply -f sqlserver.yaml –namespace ag1

sqlserver.mssql.microsoft.com/mssql1 created

service/mssql1 created

sqlserver.mssql.microsoft.com/mssql2 created

service/mssql2 created

sqlserver.mssql.microsoft.com/mssql3 created

service/mssql3 created

demo@k8s-master1:~/ag$ kubectl get pods –namespace ag1

NAME READY STATUS RESTARTS AGE

mssql-initialize-mssql1-klhzh 0/1 Completed 0 7m

mssql-initialize-mssql2-8vd6r 0/1 Completed 0 6m57s

mssql-initialize-mssql3-4tjvd 0/1 Completed 0 6m54s

mssql-operator-6d88564d97-hqz8r 1/1 Running 0 30m

mssql1-0 2/2 Running 0 7m

mssql2-0 2/2 Running 0 6m58s

mssql3-0 2/2 Running 0 6m55s

If you want to get a peek at what’s been written to standard out in a Pod you can use kubectl logs. Let’s do that for one of the initialize Pods above to see what they did for us. In the output below you can see it’s the job of the initialize container to configure the Availability Group on the Pod that’s running our AG Replica on that same Node. There’s an initialize Pod for each replica on each Node.

demo@k8s-master1:~/ag$ kubectl logs mssql-initialize-mssql1-klhzh –namespace ag1

2018/11/13 02:26:14 Using randomly generated master key password

2018/11/13 02:26:14 Statefulset has 1 replicas but could only find 0

Found pod [mssql1-0] with owner [7d5ef98d-e6eb-11e8-816e-000c296ac327]2018/11/13 02:26:15 [192.168.3.5] Setting sa pasword…

ERROR: 2018/11/13 02:26:15 [192.168.3.5] Could not connect: Unresponsive or down Unable to open tcp connection with host ‘192.168.3.5:1433’: dial tcp 192.168.3.5:1433:

…output omitted…

ERROR: 2018/11/13 02:26:28 [192.168.3.5] Could not connect: Unresponsive or down Unable to open tcp connection with host ‘192.168.3.5:1433’: dial tcp 192.168.3.5:1433: getsockopt: connection refused

ERROR: 2018/11/13 02:26:28 [192.168.3.5] Both old and new sa password failed

ERROR: 2018/11/13 02:26:29 [192.168.3.5] Could not connect: Unresponsive or down Login error: mssql: Login failed for user ‘sa’.

ERROR: 2018/11/13 02:26:29 [192.168.3.5] Could not connect: Unresponsive or down Login error: mssql: Login failed for user ‘sa’.

ERROR: 2018/11/13 02:26:29 [192.168.3.5] Both old and new sa password failed

ERROR: 2018/11/13 02:26:30 [192.168.3.5] Could not connect: Unresponsive or down Login error: mssql: Login failed for user ‘sa’.

2018/11/13 02:26:31 [192.168.3.5] sa password updated

2018/11/13 02:26:31 [192.168.3.5] Creating master key

2018/11/13 02:26:31 [192.168.3.5] Creating sql login dbm-mssql1 if it does not already exist

2018/11/13 02:26:31 [192.168.3.5] Creating sql user dbm-mssql1 if it does not already exist

2018/11/13 02:26:31 [192.168.3.5] Granting dbm-mssql1 availability group permissions

2018/11/13 02:26:31 [192.168.3.5] Granting dbm-mssql1 control to the database mirroring endpoint, dbm, to if it already exists

2018/11/13 02:26:31 [192.168.3.5] Granting dbm-mssql1 control to the certificates used on the database mirroring endpoint, dbm

2018/11/13 02:26:31 [192.168.3.5] Granting dbm-mssql1 control to availibility group ag1

2018/11/13 02:26:31 Updating secret to note that the initialization is complete

2018/11/13 02:26:31 Uploading cert secret for local instance…

2018/11/13 02:26:31 Initialization complete

demo@k8s-master1:~/ag$ kubectl describe pods -n ag1

demo@k8s-master1:~/ag$ kubectl describe statefulset –namespace ag1

Expose the Availability Group Services as a Service

apiVersion: v1

kind: Service

metadata: {annotations: null, name: ag1-primary, namespace: ag1}

spec:

ports:

- {name: tds, port: 1433, targetPort: 1433}

selector: {role.ag.mssql.microsoft.com/ag1: primary, type: sqlservr}

type: NodePort

---

apiVersion: v1

kind: Service

metadata: {annotations: null, name: ag1-secondary, namespace: ag1}

spec:

ports:

- {name: tds, port: 1433}

selector: {role.ag.mssql.microsoft.com/ag1: secondary, type: sqlservr}

type: NodePort

---demo@k8s-master1:~/ag$ kubectl apply -f ag-services.yaml –namespace ag1

service/ag1-primary created

service/ag1-secondary created

demo@k8s-master1:~/ag$ kubectl get service –namespace ag1

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ag1 ClusterIP None <none> 1433/TCP,5022/TCP 9h

ag1-primary NodePort 10.110.116.77 <none> 1433:30625/TCP 2m6s

ag1-secondary NodePort 10.100.132.228 <none> 1433:30380/TCP 2m6s

mssql1 NodePort 10.103.126.32 <none> 1433:32572/TCP 9h

mssql2 NodePort 10.102.175.227 <none> 1433:30001/TCP 9h

mssql3 NodePort 10.107.215.217 <none> 1433:31539/TCP 9h

demo@k8s-master1:~/ag$ kubectl describe service ag1-primary -n ag1

Name: ag1-primary

Namespace: ag1

Labels: <none>

Annotations: kubectl.kubernetes.io/last-applied-configuration:

{“apiVersion”:”v1″,”kind”:”Service”,”metadata”:{“annotations”:{},”name”:”ag1-primary”,”namespace”:”ag1″},”spec”:{“ports”:[{“name”:”tds”,”p…

Selector: role.ag.mssql.microsoft.com/ag1=primary,type=sqlservr

Type: NodePort

IP: 10.110.116.77

Port: tds 1433/TCP

TargetPort: 1433/TCP

NodePort: tds 30625/TCP

Endpoints: 192.168.3.5:1433

Session Affinity: None

External Traffic Policy: Cluster

Events: <none>

Anthonys-MacBook-Pro:~ aen$ sqlcmd -S k8s-master1,30625 -Q “SELECT @@SERVERNAME” -U sa -p

Password:

——————————————————————————————————————————–

mssql1-0

(1 rows affected)

Network packet size (bytes): 4096

1 xact:

Clock Time (ms.): total 2 avg 2.0 (500.0 xacts per sec.)

Anthonys-MacBook-Pro:~ aen$ sqlcmd -S 172.16.94.136,30625 -Q “SELECT @@SERVERNAME” -U sa -p

Password:

——————————————————————————————————————————–

mssql1-0

(1 rows affected)

Network packet size (bytes): 4096

1 xact:

Clock Time (ms.): total 1 avg 1.0 (1000.0 xacts per sec.)

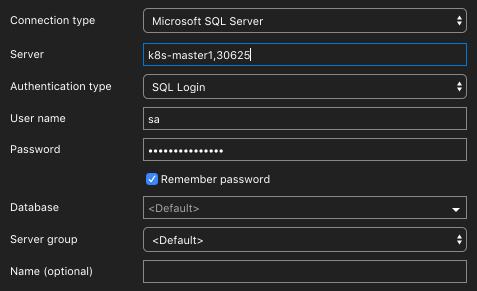

In figure 1, you can find the connection information to connect to our AG primary with Azure Data Studio. Remember, I have that host name in a host file. So create that entry if you need one.

Create an Availability Group Database

CREATE DATABASE [TestAG1] GO BACKUP DATABASE [TestAG1] TO DISK = 'nul' BACKUP LOG [TestAG1] TO DISK = 'nul' GO ALTER AVAILABILITY GROUP [ag1] ADD DATABASE [TestAG1] GO

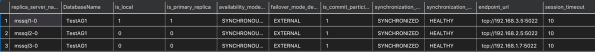

SELECT r.replica_server_name

, DB_NAME(rs.database_id) AS [DatabaseName]

, rs.is_local

, rs.is_primary_replica

, r.availability_mode_desc

, r.failover_mode_desc

, rs.is_commit_participant

, rs.synchronization_state_desc

, rs.synchronization_health_desc

, r.endpoint_url

, r.session_timeout

FROM sys.dm_hadr_database_replica_states rs

JOIN sys.availability_replicas r ON r.group_id = rs.group_id

AND r.replica_id = rs.replica_id

ORDER BY r.replica_server_name;

GO

Wrap up

demo@k8s-master1:~/ag$ kubectl get all –namespace ag1

NAME READY STATUS RESTARTS AGE

pod/mssql-initialize-mssql1-klhzh 0/1 Completed 0 12h

pod/mssql-initialize-mssql2-8vd6r 0/1 Completed 0 12h

pod/mssql-initialize-mssql3-4tjvd 0/1 Completed 0 12h

pod/mssql-operator-6d88564d97-hqz8r 1/1 Running 0 12h

pod/mssql1-0 2/2 Running 0 12h

pod/mssql2-0 2/2 Running 0 12h

pod/mssql3-0 2/2 Running 0 12h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ag1 ClusterIP None <none> 1433/TCP,5022/TCP 12h

service/ag1-primary NodePort 10.110.116.77 <none> 1433:30625/TCP 176m

service/ag1-secondary NodePort 10.100.132.228 <none> 1433:30380/TCP 176m

service/mssql1 NodePort 10.103.126.32 <none> 1433:32572/TCP 12h

service/mssql2 NodePort 10.102.175.227 <none> 1433:30001/TCP 12h

service/mssql3 NodePort 10.107.215.217 <none> 1433:31539/TCP 12h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/mssql-operator 1 1 1 1 12h

NAME DESIRED CURRENT READY AGE

replicaset.apps/mssql-operator-6d88564d97 1 1 1 12h

NAME DESIRED CURRENT AGE

statefulset.apps/mssql1 1 1 12h

statefulset.apps/mssql2 1 1 12h

statefulset.apps/mssql3 1 1 12h

NAME COMPLETIONS DURATION AGE

job.batch/mssql-initialize-mssql1 1/1 19s 12h

job.batch/mssql-initialize-mssql2 1/1 18s 12h

job.batch/mssql-initialize-mssql3 1/1 17s 12h

kubectl get all –namespace ag1 -o yaml

Please feel free to contact me with any questions regarding Linux, Kubernetes or other SQL Server related issues at : aen@centinosystems.com

The post Deploying SQL Server Availability Groups in Kubernetes appeared first on Centino Systems Blog.