Before we get into the implementation of tables, there are several concepts you need to understand so that you can appreciate the ramifications of your table design choices. These concepts include:

- Distributions

- Nodes

- Service Objective

Distributions

SQL Data Warehouse is a Massively Parallel Processing (MPP) system. Data is spread across multiple locations in the MPP system, referred to as distributions. Distributions are fundamental to running queries in parallel and achieving high query performance.

When a query runs in parallel, each of the 60 distributions runs a SQL query on its portion of the data. SQL Data Warehouse uses SQL Database to run the query. This shared-nothing architecture is fundamental to achieving scale-out parallel computing. Every SQL Data Warehouse has a fixed number of 60 distributions.

Nodes

There are two types of nodes in Azure SQL Data Warehouse: the Control node and Compute nodes.

The Control node manages and optimizes queries. It is the front end that interacts with all applications and connections. In SQL Data Warehouse, the Control node is powered by SQL Database, and connecting to it looks and feels the same. Under the surface, the Control node coordinates all the data movement and computation required to run parallel queries on your distributed data. When you submit a T-SQL query to SQL Data Warehouse, the Control node transforms it into separate queries that run on each Compute node in parallel.

The Compute nodes serve as the power behind SQL Data Warehouse. They are SQL Databases that store your data and process your query. When you add data, SQL Data Warehouse distributes the rows to your Compute nodes. The Compute nodes are the workers that run the parallel queries on your data. After processing, they pass the results back to the Control node. To finish the query, the Control node aggregates the results and returns the final result.
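The division of labour described above can be sketched as a toy scatter-gather model in Python. This is only an illustration and not how the service is implemented: the function names, the thread pool and the made-up sales figures are all assumptions for the sake of the example. The only detail taken from the text is the fixed count of 60 distributions.

```python
from concurrent.futures import ThreadPoolExecutor

DISTRIBUTION_COUNT = 60  # fixed number of distributions, per the text


def split_into_distributions(rows, count=DISTRIBUTION_COUNT):
    """Spread rows across `count` buckets (round-robin, for simplicity)."""
    buckets = [[] for _ in range(count)]
    for i, row in enumerate(rows):
        buckets[i % count].append(row)
    return buckets


def compute_partial_sum(distribution_rows):
    """Stand-in for the per-distribution query: a local SUM over its own rows."""
    return sum(distribution_rows)


def control_node_sum(rows):
    """Fan the query out to every distribution, then combine the partial results."""
    distributions = split_into_distributions(rows)
    # The thread pool is a hypothetical stand-in for the Compute nodes.
    with ThreadPoolExecutor(max_workers=8) as pool:
        partials = list(pool.map(compute_partial_sum, distributions))
    # Final aggregation step, which the text attributes to the Control node.
    return sum(partials)


sales_amounts = list(range(1, 1001))  # made-up data: 1..1000
print(control_node_sum(sales_amounts))  # 500500
```

Each bucket only ever sees its own rows, which is the shared-nothing idea: the partial results are combined in a single final step.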

The number of Compute nodes can change according to the backend service workload and the computing capacity (DWUs) you specify for the data warehouse. When the number of Compute nodes changes, the number of distributions per Compute node also changes.

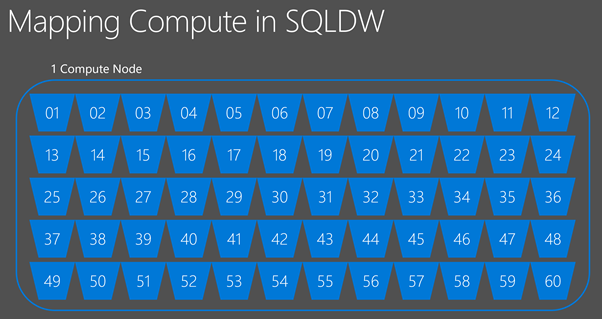

For example, if you select DWU100, then the 60 distributions are allocated to a single Compute node working with a Control node.

Should you increase the DWU to 200, then the 60 distributions are divided between two Compute nodes working with a Control node.
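The arithmetic behind these two cases is simple enough to sketch in a few lines of Python. The helper below is hypothetical and takes the number of Compute nodes as its input, since the text only states the node count for DWU100 and DWU200. It also assumes the node count divides 60 evenly, which holds for both cases above.

```python
DISTRIBUTION_COUNT = 60  # fixed number of distributions, per the text


def distributions_per_node(compute_node_count, total=DISTRIBUTION_COUNT):
    """Return how many distributions each Compute node hosts."""
    if compute_node_count < 1:
        raise ValueError("at least one Compute node is required")
    # Assumption: the distributions are shared out evenly across the nodes.
    if total % compute_node_count != 0:
        raise ValueError("node count must divide the distribution count evenly")
    return total // compute_node_count


for nodes in (1, 2):
    print(f"{nodes} Compute node(s): {distributions_per_node(nodes)} distributions each")
# 1 Compute node(s): 60 distributions each
# 2 Compute node(s): 30 distributions each
```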

Service Objective

Service objective is the generic name for “Data Warehouse Units (DWU)” in Azure SQL Data Warehouse and “Database Transaction Units (DTU)” in Azure SQL Database. In Azure SQL Data Warehouse, the service objective defines the scale of the data warehouse, from a minimum of DWU100 to a maximum of DWU6000 at the time of writing. The higher the DWU, the more Compute nodes are created to share the 60 distributions, which increases the performance capability of the Azure SQL Data Warehouse.

Table and Table Geometries

To create a table within Azure SQL Data Warehouse (ASDW), we use code very similar to the CREATE TABLE statement for an on-premises SQL Server. The difference is that we can (or sometimes need to) include a WITH clause that specifies ASDW-specific options. The following example creates a date dimension table:

CREATE TABLE [dbo].[Dates]

(

[Date] [datetime2](3) ,

[DateKey] [nchar](8),

…

…

…

[WeekDay] [nvarchar](100) ,

[Day Of Month] [decimal](38, 0)

)

WITH

(

DISTRIBUTION = ROUND_ROBIN

);

You can see from the code that you create a table by defining each column name and its data type. You can also specify the nullability of a column. What is different is the DISTRIBUTION option inside the WITH clause. It defines how the data will be stored across the distributions in the Azure SQL Data Warehouse, and there are two options:

DISTRIBUTION = ROUND_ROBIN | HASH(column_name)

Round_robin distribution

A round_robin distribution spreads the data evenly across all 60 distributions. The benefit of this approach is that the data is evenly spread, but you have no control over where each row is stored. Round_robin distribution works very well when initially loading data into a staging table. By default, tables are round_robin distributed, which makes it easy to get started creating tables without having to decide how they should be distributed. Round_robin tables may perform well enough for some workloads, but in most cases selecting a distribution column will perform much better.
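To see why round_robin gives an even spread without any say over placement, here is a minimal Python sketch. It assumes a strictly cyclic assignment, which is a simplification for illustration rather than a description of what the service does internally. The row names are made up.

```python
from collections import Counter
from itertools import cycle

DISTRIBUTION_COUNT = 60  # fixed number of distributions, per the text


def round_robin_assign(rows, count=DISTRIBUTION_COUNT):
    """Pair each row with the next distribution id in a repeating 0..count-1 cycle."""
    return list(zip(cycle(range(count)), rows))


rows = [f"staging-row-{i}" for i in range(6000)]  # made-up staging data
placement = round_robin_assign(rows)

rows_per_distribution = Counter(dist_id for dist_id, _ in placement)
print(min(rows_per_distribution.values()), max(rows_per_distribution.values()))  # 100 100
```

The placement depends only on arrival order, never on the row's contents, so two rows with the same key can end up in different distributions. That is why joins and aggregations on round_robin tables tend to need data movement at query time.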

Hash distribution

You select a distribution column when you use hash distribution, and the column must be named in the HASH clause. Hash distributed tables are divided between the distributions using a hashing algorithm on a single column that you select. Using a hash algorithm to distribute your table data can improve performance in many scenarios by reducing data movement at query time.

The distribution column determines how the data is divided across your distributed databases. The hash function uses the distribution column to assign rows to distributions. The hashing algorithm and the resulting distribution are deterministic: the same value with the same data type will always hash to the same distribution.
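The deterministic property can be illustrated with a small Python sketch. The service's actual hash function is internal, so SHA-256 is used here purely as a stand-in, and `hash_distribution` is a hypothetical helper. Only the modulo-60 bucketing idea and the "same value, same data type" rule come from the text.

```python
import hashlib

DISTRIBUTION_COUNT = 60  # fixed number of distributions, per the text


def hash_distribution(value, count=DISTRIBUTION_COUNT):
    """Map a distribution-column value to a distribution id in [0, count)."""
    # Including the type name mirrors the text: same value AND same data type.
    key = f"{type(value).__name__}:{value!r}".encode("utf-8")
    # SHA-256 is an assumption, standing in for the undisclosed internal hash.
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % count


first = hash_distribution("Manchester")
second = hash_distribution("Manchester")
print(first == second)  # True: the same value always lands in the same distribution
```

Because placement depends only on the column value, rows that share a key are stored together, which is what lets joins and aggregations on that key avoid data movement.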

Therefore, the column selected for the hash distribution should be chosen carefully. Suppose you choose the City column of a 10 million row table as the distribution column, and 98% of the records have a City value of Manchester. Because every Manchester row hashes to the same distribution, the data would be spread unevenly across the distributions. This data skew towards Manchester can adversely affect the performance of the Azure SQL Data Warehouse. So you want to select a column that has a relatively even distribution of values and that won’t be updated. In fact, our friends in the Azure CAT team have written a great article on loading patterns in Azure SQL Data Warehouse, and I highly recommend that you read it.

So the choice of distribution method, and of the column used for a hash distribution, is vitally important to ensuring a performant data warehouse. Choose your columns carefully.

See how you can create a table in the following video.
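The Manchester example can be reproduced with a toy Python model, scaled down from 10 million rows to 10,000. As before, SHA-256 is only a stand-in for the service's internal hash, and the helper names, the invented city names and the customer id column are assumptions for illustration.

```python
import hashlib
from collections import Counter

DISTRIBUTION_COUNT = 60  # fixed number of distributions, per the text


def hash_distribution(value, count=DISTRIBUTION_COUNT):
    """Map a value to a distribution id (SHA-256 is an assumed stand-in hash)."""
    digest = hashlib.sha256(repr(value).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % count


def largest_share(values):
    """Fraction of all rows held by the single fullest distribution."""
    counts = Counter(hash_distribution(v) for v in values)
    return max(counts.values()) / len(values)


# 98% of rows share one City value, as in the example in the text.
city_column = ["Manchester"] * 9800 + [f"City{i}" for i in range(200)]
# A hypothetical high-cardinality alternative: one distinct id per row.
customer_id_column = list(range(10000))

print(f"City: {largest_share(city_column):.1%} of rows in one distribution")
print(f"CustomerId: {largest_share(customer_id_column):.1%} of rows in one distribution")
```

With the City column, at least 98% of the rows sit in a single distribution, so one distribution does nearly all the work while the other 59 are mostly idle. The high-cardinality column ends up with only a small fraction of the rows in its fullest distribution.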

Other Table Options

The logical architecture for the creation of tables largely remains the same. You can still create star schemas or snowflake schemas with fact and dimension tables.

The CREATE TABLE statement in Azure SQL Data Warehouse also supports indexing and partitioning. There are subtle variations, with partitioning in particular, but I will save these for another blog. Partitioning can be important for the manageability of data, because merging and switching partitions aids the fast loading of data.

Statistics also play an important role in aiding query execution plans. However, it should be noted that, at the time of writing, statistics are not automatically updated on the Control node. So it is best practice to create statistics on the specific columns that aid querying, so that the information is up to date for the query optimization process.

Previous Articles

In this series, we have also covered the following topics: