Introduction

I've been searching for a more or less comprehensible and fast way to import and export binary files using the SQL Server 2005. I've figured out my own way, which may be not the best or fastest. There may be a bunch of ways to improve the process, but who's perfect after all, it works well for me, and suits my purposes.

Preparations

We begin with the file which contains the absolute path for the binary files. Assume the extension of the binaries is .dat and let the play begin. I use the GNU find utility to get the names of the files.

find d:\malware -name *.dat -type f > out.txt

This will create the out.txt file with names in it. Next I create a table:

CREATE TABLE files (filepath varchar(512), --file_id INT IDENTITY(1,1) )

You can take any size of VARCHAR you like as it depends on the depth of your directory structure and a file name length. The entire path and name of the file must be stored in this table. We load the filepaths using the following SQL:

BULK INSERT files FROM 'd:\out.txt' WITH ( --fieldterminator='', rowterminator='\n', codepage=1251);

Trick

I'm using here the CODEPAGE because I have some files which are named using the Russian CP1251.

If needed, later you can add constraints,indexes or an IDENTITY column to further speed up the SELECT procedures or for any other purpose you like, e.g.

BEWARE: Be careful with truncation error this error might occur because of the find's output file format. You will probably have to convert the out.txt file to CR-LF windows format later.

The files table:

Loading the files.

IMHO before any bulk loading operation you should alter the database's recovery model to SIMPLE or BULK_LOGGED if you have the luxury to do so and disable the INDEX (file_id_idx) in the bindata table on the file_id column if you have created one - see below:

Creating the table with binary data.

CREATE TABLE bindata (data varbinary(max), --file_id INT IDENTITY(1,1) ) /* CREATE NONCLUSTERED INDEX file_id_idx ON bindata (file_id) */

Loading procedure:

DECLARE

@filename VARCHAR(512),

@sql VARCHAR(512);

DECLARE a_cur CURSOR STATIC FOR

SELECT filepath FROM files;

/*WHERE file_id >(SELECT MAX(file_id) FROM bindata); This part is needed when you load the data NOT for the first time from the [files] table. */OPEN a_cur

FETCH NEXT FROM a_cur INTO @filename;

WHILE (@@fetch_status=0)

BEGIN

--using dynamic sql in order to use the @filename variable

SET @sql ='

INSERT INTO bindata(data)

SELECT * FROM

OPENROWSET(BULK '''+ @filename+''', SINGLE_BLOB) as f;'

EXEC (@sql);

FETCH NEXT FROM a_cur INTO @filename;

END;

CLOSE a_cur;

DEALLOCATE a_cur;

The process of loading the data depends, of course, on the number and size of the files you want to load.

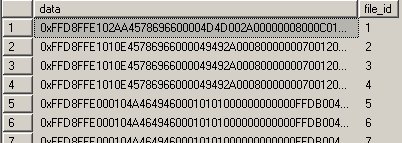

Once this is completed, you will see this in the Bindata table:

Exporting the files from the database

To dump the files from the database I use the script represented below. In order to execute the script, first you have to enable the xp_cmdshell extended stored procedure as shown below.

EXEC sp_configure 'xp_cmdshell', 1 GO RECONFIGURE GO

To read more on xp_cmdshell please refer to http://technet.microsoft.com/en-us/library/ms190693.aspx

Exporting procedure:

DECLARE

@a INT

DECLARE @i INT,

@filename VARCHAR(300),

@cmd VARCHAR(500)

SET @i = 0;

SELECT @a=max(file_id) from bindata; -- for speeding up this query

abit you may want to create and INDEX on file_id

WHILE @i != @a

BEGIN

SET @filename = 'd:\tmp\'+CAST (@i AS VARCHAR)+'.dat';

SELECT @filename;

SET @cmd =

'bcp "SELECT data FROM db1.dbo.bindata WHERE file_id='+

CAST(@i+1 AS VARCHAR)+'" queryout '+@filename+' -T -N';

EXEC master..xp_cmdshell @cmd;

SET @i=@i+1;

END;

Problems with File Deltas

At the end I want to admit that there's such a problem like uploading the delta between the previous load into a DB and current files which appeared in the same dirrectories after the previous load. For I have the task to keep the binaries on the file system as well as in the database. I have two ways of dealing with this.

Method 1

There're multiple ways to do it actually but mine way was to dump the existing filepaths from DB using the BCP utility:

bcp "select filepath from db.dbo.files" queryout out.txt -T -c -C 1251

Again I'm using here the -C 1251 because my files have CP1251 characters in their names. I want to admit that my DB has the collation_name of 'SQL_Latin1_General_CP1251_CI_AS'.

We create a new list using the find utility again:

find d:\malware\ -type f -name *.dat

To get the delta we're using the diff utility:

diff -u out.txt new.txt

would look something like this:

--- out.txt Wed Jun 09 21:42:34 2008 +++ new.txt Wed Jun 09 21:44:05 2008 @@ -36,3 +36,9 @@ d:\malware\02\010.dat d:\malware\02\011.dat d:\malware\02\014.dat +d:\malware\02\016.dat +d:\malware\02\017.dat +d:\malware\02\018.dat +d:\malware\02\019.dat +d:\malware\02\020.dat +d:\malware\02\021.dat

I use the GNU grep and sed utilities to cleanse the delta data.

diff -u out.txt new.txt|grep ^+d |sed -e s/+// >delta.txt

The delta.txt will be

d:\malware\02\016.dat d:\malware\02\017.dat d:\malware\02\018.dat d:\malware\02\019.dat d:\malware\02\020.dat d:\malware\02\021.dat

Then we load the delta into the files table and loading the files using the procedure described above.

Method 2

We upload the whole newly created text file with paths once again without any constraint checking (if you have created, for example, a PRIMARY KEY on the filepath field in the files table) in the BULK INSERT operation. Before the BULK INSERT operation, we memorize or write down the current max(file_id) from the files table.

Finding the duplicates:

SELECT * FROM files WHERE filepath IN ( SELECT filepath FROM files GROUP BY filepath HAVING COUNT(*)>1) AND file_id > YOUR_MEMORIZED_MAX_FILE_ID

or

SELECT f1.filepath as dups,f2.file_id FROM files f1 INNER JOIN files f2 on f1.filepath=f2.filepath WHERE f2.file_id > YOUR_MEMORIZED_MAX_FILE_ID GROUP BY f1.filepath,f2.file_id

This will give you the rows with duplicates and you will be able easily eliminate them later.

Conclusion

So this was basicly my way of using MSSQL 2005 as the DB storage for binaries. MSSQL2005 did its job perfectly well. The techniques used here were flawless in accomplishing this particular task. But I'm pretty sure that anyone can have their own ways to perform this task and which have also an absolute right to exist.