This level will examine the most common problems and forms of mismanagement that lead to excessive growth of the transaction log, including:

- operating a database in

FULLrecovery model, without taking log backups - performing index maintenance

- long-running or uncommitted transactions that prevent space in the transaction log from being reused.

Of course, if growth is left unchecked, the log file may expand until it devours all of the available disk space or the maximum size specified for the log file, at which point you'll receive the infamous 9002 (transaction log full) error, and the database will become read-only. This level will cover the correct ways to respond to runaway log growth and the 9002 error, and also explain why commonly given advice to truncate the log and shrink it is often dangerous.

Finally, we'll cover strategies for ensuring smooth and predictable growth of your log file, while minimizing problems associated with log fragmentation. In a busy database, a large transaction log may be a simple fact of life and, managed properly, this is not necessarily a bad thing, even if the log file space is unused a majority of the time.

Sizing and Growing the Log

Whenever a log file needs to grow, and additional space is allocated, this space is divided evenly into VLFs, based on the amount of space that is being allocated.

For example, the log file may, by default, have an initial size of 2 MB and a ten percent auto-growth increment (settings inherited from the model database). This means that, initially at least, the log file will grow in very small increments and so have a large number of small VLFs.

When we allocate additional space in very large chunks, for example when initially sizing the log to 16 GB in a single operation, the resulting transaction log has a small number of larger VLFs.

A very high number of small VLFs, a condition referred to as log file fragmentation, can have a considerable impact on performance, especially for crash recovery, restores, and backups, particularly log backups. In other words, it can affect the performance of operations that read the log file. We will examine this problem in more detail in Level 8.

Transaction Log VLFs – too many or too few?

SQL Server MVP Kimberly Tripp discusses the impact of VLF sizes and provides guidance for how to properly manage VLF size in her blog post, Transaction Log VLFs – too many or too few? (http://www.sqlskills.com/BLOGS/KIMBERLY/post/Transaction-Log-VLFs-too-many-or-too-few.aspx).

Conversely, if the log file has only a few VLFs that are very large, we risk tying up large portions of the log for long periods. Each VLF will hold a very large number of log records, and SQL Server cannot truncate a VLF until it contains no part of the active log. In cases where truncation is delayed for some reason (see the Lack of log space reuse section), this can lead to rapid log growth. For example, let's assume that each VLF is 1 GB in size and that the log is full. You perform a log backup, but all VLFs contain some part of the active log and so SQL Server cannot truncate the log. It has no option but to add more VLFs and, if the growth increment for the log is set to a similarly large size then the log might grow rapidly, until some existing VLFs become eligible for truncation.

As such, it's important that we size the log appropriately initially, and then grow it in appropriately sized steps, to minimize log fragmentation but also to avoid rapid growth.

There is also a second reason why it is very important to size the log appropriately and grow it in a very controlled fashion: for log files, each growth event is a relatively expensive operation. It is natural that both data and log files will grow in size over time. SQL Server can optimize the process of adding new data files and expanding existing data files, via instant file initialization (introduced in SQL Server 2005, this allows the data files to allocate space on disk without having to fill the space with zeros). Unfortunately, the same is not true for log files, which still require initialization and "zeroing out" whenever space is allocated for log file creation or growth.

Why can't the transaction log use instant initialization?

For further information about transaction log zeroing, see Paul Randal's blog post, Search Engine Q&A #24: Why can't the transaction log use instant initialization? (http://sqlskills.com/BLOGS/PAUL/post/Search-Engine-QA-24-Why-cant-the-transaction-log-use-instant-initialization.aspx).

Diagnosing a Runaway Transaction Log

If you are experiencing uncontrolled growth of the transaction log, it is due, either to a very high rate of log activity, or to factors that are preventing space in the log file from being reused, or both.

If the growth is due primarily to excessive log activity, you need to investigate whether there might be log activity that could be avoided, for example by adjusting how you carry out bulk data and index maintenance operations, so that these operations are not fully logged (i.e. the BULK_LOGGED recovery model is used for these operations). However, any bulk-logged operation will immediately prevent point-in-time recovery to any point within a log backup that contains records relating to the minimally logged operations (refer to Level 6 for full details). If this is not acceptable, you must simply accept a large log as a fact, and plan its growth and management (such as frequency of log backups) accordingly, as described in the Proper Log Management section later in this level.

If the growth is due to a lack of log space reuse, you need to find out what is preventing this reuse and take steps to correct the issue.

Excessive logging: index maintenance operations

Index maintenance operations are a very common cause of excessive transaction log usage and growth, especially in databases using the FULL recovery model. The amount of log space required to perform index maintenance depends on the following factors:

- Rebuild or reorganize – Index rebuilds generally use a lot more space in the log.

- Recovery model – If the risks to point-in-time recovery are understood and acceptable, then index rebuilds can be minimally logged by temporarily switching the database to run in

BULK LOGGEDrecovery model. Index reorganization, however, is always fully logged.

Index rebuilds

When rebuilding an index, either offline or online, using ALTER INDEX REBUILD, or the deprecated DBCC DBREINDEX in SQL Server 2000, SQL Server creates a new copy of the index and then, once the rebuild is complete, drops the old copy (this is why you need at least as much free space as the size of the index in the data file).

Logging and online index rebuilds

Online index rebuild is a fully logged operation on SQL Server 2008 and later, whereas it is minimally logged in SQL Server 2005. Therefore, performing such operations in later SQL Server versions will require substantially more transaction log space. See: http://support.microsoft.com/kb/2407439, as well as Kalen Delaney's blog, investigating logging during online and offline index rebuilds, for both FULL and BULK_LOGGED recovery model databases: http://sqlblog.com/blogs/kalen_delaney/archive/2011/03/08/what-gets-logged-for-index-rebuilds.aspx.

In the FULL recovery model, index rebuilds can be a very resource-intensive operation, requiring a lot of space in the transaction log. In the SIMPLE or BULK_LOGGED recovery model, rebuilding an index is a minimally logged operation, meaning that only the allocations are logged, and the actual pages are not changed, therefore reducing the amount of log space required by the operation.

If you switch to the SIMPLE model to perform an index rebuild, the LSN chain will be immediately broken. You'll only be able to recover your database to a point in time contained in the previous transaction log backup. To restart the chain, you'll need to switch back to the FULL model and immediately take a full or differential database backup.

If you switch to the BULK_LOGGED model (see Level 6), the LSN chain is always maintained but there are still implications for your ability to perform point-in-time restores, since a log backup that contains a minimally logged operation can't be used to recover to a point in time. If the ability to perform a point-in-time recovery is paramount for a database, then don't use the BULK_LOGGED recovery model for index rebuilds or any other minimally logged operation, unless you can do it at a time when there is no concurrent user activity in the database. Alternatively, consider performing index reorganizations, in FULL recovery model, where possible.

If the BULK_LOGGED model is used, take steps to minimize the time period where point-in-time restore is unavailable, and so minimize exposure to data loss. To do this, take a log backup in FULL model, switch to BULK_LOGGED, perform the index rebuild, then switch back to FULL and take another log backup.

A final important point to note is that an ALTER INDEX REBUILD operation occurs in a single transaction. If the index is large, this could represent a long-running transaction that will prevent space reuse in the log for its duration. This means that, even if you rebuild an index in SIMPLE model, where you might think that the log should remain small since it is auto-truncated after a CHECKPOINT operation, and the rebuild is minimally logged, the log file can still expand quite rapidly during an extensive rebuild operation.

Index reorganization

In contrast to rebuilding an index, reorganizing (defragmenting) an index, using ALTER INDEX REORGANIZE or, in SQL Server 2000, DBCC INDEXDEFRAG (since deprecated) is always a fully logged operation, regardless of the recovery model, and so the actual page changes are always logged. However, index reorganizations generally require less log space than an index rebuild, although this is a function of the degree of fragmentation in the index; a heavily fragmented index will require more log space to reorganize than a minimally fragmented one.

Furthermore, the ALTER INDEX REORGANIZE operation is accomplished using multiple shorter transactions. Therefore, when performed in conjunction with frequent log backups (or when working in SIMPLE model), log space can be made available for reuse during the operation, so minimizing the size requirements for the transaction log during the operation.

For example, rebuilding a 20 GB index can require more than 20 GB of space for the rebuild operation because it occurs in a single transaction. However, reorganizing a 20 GB index may require much less log space because each page allocation change in the reorganization is a separate transaction, and so the log records can be truncated with frequent log backups, allowing the log space to be reused.

Strategies for controlling excessive logging

If your organization has zero tolerance to any potential data loss, then you'll have no choice but to run all database maintenance operations in the FULL recovery model, and plan your log size and growth appropriately. Since index rebuilds occur as a single transaction, the log will be at least as large as the largest index that you are rebuilding. As discussed above, index reorganizations require less log space, and allow for log truncation via log backups, during the operation. As such, they may offer a viable alternative to rebuilds, while avoiding explosive log growth.

If your SLAs and Operational Level Agreements (OLAs) allow some potential for data loss, then switching to BULK_LOGGED recovery at the start of an index rebuild can minimize the amount of space required to rebuild the index. However, do so in a way that minimizes exposure to data loss, as discussed earlier.

Regardless of the recovery model in use, you can minimize the impact of index maintenance operations on the transaction log by reorganizing rather than rebuilding, if possible. Microsoft has provided guidelines appropriate for most, but not all, environments for determining when to rebuild an index versus when to reorganize it to minimize the impact of index maintenance operations (see Reorganize and Rebuild Indexes, http://technet.microsoft.com/en-us/library/ms189858.aspx). They state that for fragmentation levels greater than 5 percent but less than or equal to 30 percent, you should reorganize the index, and for fragmentation levels greater than 30 percent, you should rebuild it.

However, the most effective weapon in guarding against excessive log growth during index maintenance is to maintain only those indexes that really need it. With the SSMS Maintenance Plans Wizard, index maintenance is an all-or-nothing process: you either rebuild (or reorganize) all indexes in your database (and all databases in the maintenance plan) or you maintain none of them. A better approach is to use the sys.dm_db_index_physical_stats DMV to investigate fragmentation and so determine a rebuild/reorganize strategy based on need.

Ola Hallengren's free maintenance scripts

Ola Hallengren offers a comprehensive set of free maintenance scripts which demonstrate how to use sys.dm_db_index_physical_stats to perform index analysis for intelligent maintenance, and which can be used as a replacement for Database Maintenance Plans created by the wizards in SSMS (http://ola.hallengren.com).

The best approach, however, is to schedule regular maintenance on only those indexes where you can prove a positive, sustained impact on query performance. Logical fragmentation (index pages in the wrong order) thwarts SQL Server's read-ahead mechanism (http://msdn.microsoft.com/en-us/library/ms191475(v=sql.105).aspx) and makes it less I/O-efficient at reading contiguous pages on disk. However, this only really affects large range scans from disk. Even for very fragmented indexes, if you are not scanning the table, rebuilding or reorganizing indexes might not help performance. Reduced page density (many gaps causes by page splits and deletes) will cause pages to take up more space on disk, and in memory, and require the I/O bandwidth to transfer the data. Again, though, this form of fragmentation won't really affect infrequently modified indexes and so rebuilding them won't help.

Before scheduling index maintenance, ask yourself what performance metrics benefited from the maintenance? Did it reduce I/O significantly? How much did it improve the performance of your most expensive queries? Was the positive impact a sustained one? If the answers to these are "no" or "don't know," then it's probable that regular index maintenance is not the right long-term answer. Finally, it's also worth noting that maintaining small indexes is generally not worthwhile. The commonly cited threshold is around 1,000 pages. Paul Randal suggested these values as guidelines when he was managing the storage engine development team at Microsoft, and they are documented in Books Online. Note, though, that this is guideline advice only and may not be appropriate for all environments, as discussed by Paul in his blog post, Where do the Books Online index fragmentation thresholds come from? (http://www.sqlskills.com/BLOGS/PAUL/post/Where-do-the-Books-Online-index-fragmentation-thresholds-come-from.aspx).

Investigating heavy log-writing transactions

The sys.dm_tran_database_transactions DMV provides useful insight into effects of transaction activity on the transaction log. In their book, Performance Tuning with SQL Server Dynamic Management Views (http://www.simple-talk.com/books/sql-books/performance-tuning-with-sql-server-dynamic-management-views/), reproduced here with their kind permission, the authors, Louis Davidson and Tim Ford, demonstrate how to use this DMV, and a few others, to investigate transactions that may be causing explosive transaction log growth.

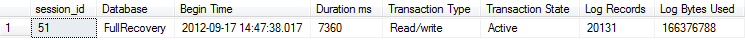

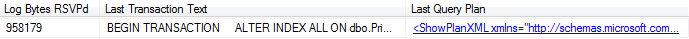

The example in Listing 7.1 reuses the FullRecovery database and PrimaryTable_Large table, from Level 6. While not repeated here, we provide the code to recreate this database and table in the code download file. Within an explicit transaction, it rebuilds the clustered index and then investigates log growth.

USE FullRecovery

GO

BEGIN TRANSACTION

ALTER INDEX ALL ON dbo.PrimaryTable_Large REBUILD

SELECT DTST.[ session_id ],

DES.[login_name] AS [Login Name],

DB_NAME (DTDT.database_id) AS [Database],

DTDT.[database_transaction_begin_time] AS [Begin Time],

DATEDIFF(ms, DTDT.[database_transaction_begin_time], GETDATE())

AS [Duration ms] ,

CASE DTAT.transaction_type

WHEN 1 THEN 'Read/write'

WHEN 2 THEN 'Read-only'

WHEN 3 THEN 'System'

WHEN 4 THEN 'Distributed'

END AS [Transaction Type],

CASE DTAT.transaction_state

WHEN 0 THEN 'Not fully initialized'

WHEN 1 THEN 'Initialized, not started'

WHEN 2 THEN 'Active'

WHEN 3 THEN 'Ended'

WHEN 4 THEN 'Commit initiated'

WHEN 5 THEN 'Prepared, awaiting resolution'

WHEN 6 THEN 'Committed'

WHEN 7 THEN 'Rolling back'

WHEN 8 THEN 'Rolled back'

END AS [Transaction State],

DTDT.[database_transaction_log_record_count] AS [Log Records],

DTDT.[database_transaction_log_bytes_used] AS [Log Bytes Used],

DTDT.[database_transaction_log_bytes_reserved] AS [Log Bytes RSVPd],

DEST.[text] AS [Last Transaction Text],

DEQP.[query_plan] AS [Last Query Plan]

FROM sys.dm_tran_database_transactions DTDT

INNER JOIN sys.dm_tran_session_transactions DTST

ON DTST.[transaction_id] = DTDT.[transaction_id]

INNER JOIN sys.[dm_tran_active_transactions] DTAT

ON DTST.[transaction_id] = DTAT.[transaction_id]

INNER JOIN sys.[dm_exec_sessions] DES

ON DES.[ session_id ] = DTST.[ session_id ]

INNER JOIN sys.dm_exec_connections DEC

ON DEC.[ session_id ] = DTST.[ session_id ]

LEFT JOIN sys.dm_exec_requests DER

ON DER.[ session_id ] = DTST.[ session_id ]

CROSS APPLY sys.dm_exec_sql_text (DEC.[most_recent_sql_handle]) AS DEST

OUTER APPLY sys.dm_exec_query_plan (DER.[plan_handle]) AS DEQP

WHERE DB_NAME(DTDT.database_id) = 'FullRecovery'

ORDER BY DTDT.[database_transaction_log_bytes_used] DESC;

-- ORDER BY [Duration ms] DESC;

COMMIT TRANSACTIONListing 7.1: Investigating heavy log-writing transactions.

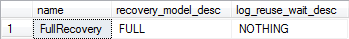

Figure 7.1 shows some sample output (we split the result set in two, for readability).

Figure 7.1: Log activities resulting from an index rebuild.

Incidentally, if we rerun this example but with ALTER INDEX…REORGANIZE, then the value in the Log Bytes Used column reduces from about 159 MB to around 0.5 MB.

Lack of log space reuse

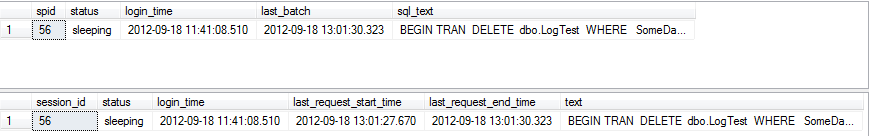

If you suspect that lack of log space reuse is the cause of log growth, your first job is to find out what's preventing reuse. Start by querying sys.databaseslog_reuse_wait_desc

SELECT name ,

recovery_model_desc ,

log_reuse_wait_desc

FROM sys.databases

WHERE name = 'FullRecovery'Listing 7.2: Examining the value of the log_reuse_wait_desc column.

The value of the log_reuse_wait_desc column will show the current reason why log space cannot be reused. If you've run the previous example (Listing 7.1), then it's likely that the FullRecovery database will display the value LOG_BACKUP

It may be that more than one thing is preventing log reuse. The sys.databasessys.databaseslog_reuse_wait reason.

The possible values for log_reuse_wait_desc

FULL recovery model without log backups

If the value returned for log_reuse_wait_desc, from the previous sys.databases query, is LOG_BACKUP, then you are suffering from probably the most common cause of a full or large transaction log, namely operating a database in the FULL recovery model (or less common, the BULK_LOGGED recovery model), without taking transaction log backups.

In many editions of SQL Server, the model database is in FULL recovery model by default. Since the model database is a "template" for creating all new SQL Server user databases, the new database inherits this configuration from model.

Using the FULL recovery model is a recommended practice for most production databases, since it allows for point-in-time recovery of the database, minimizing data loss in the event of a disaster. However, a common mistake is then to adopt a backup strategy consisting entirely of full (and possibly differential) database backups without taking frequent transaction log backups. There are two big problems with this strategy:

- Taking full database backups only protects the contents of the data file, not the log file.The only way to fully protect the data that has changed since the last full or differential backup, which will be required for point-in-time restores, is to perform a log backup.

- Full database backups do not truncate the transaction log.Only a log backup will cause the log file to be truncated. Without the latter, space in the log file is never marked for reuse, and the log file will constantly grow in size.

In order to perform a point-in-time recovery and control the size of the log, we must take transaction log backups in conjunction with full database backups or full and differential database backups. For our FullRecovery database, we can take a log backup, as shown in Listing 7.3, and then re-query sys.databases.

USE master

GO

BACKUP LOG FullRecovery

TO DISK = 'D:\SQLBackups\FullRecovery_log.trn'

WITH INIT

GO

SELECT name ,

recovery_model_desc ,

log_reuse_wait_desc

FROM sys.databases

WHERE name = 'FullRecovery'

Listing 7.3: Solving the log backup issue.

If a lack of log backups is the cause of log growth problems, the first thing to do is to verify that the database in question really does need to be operating in FULL recovery. This will be true if it must be possible to restore the database to an arbitrary point in time, or to point of failure in the case of a disaster, or if full recovery model is required for another reason (such as database mirroring). If the Recovery Point Objective (RPO) in the SLA stipulates a maximum of 15 minutes potential data loss, then it's highly unlikely you can fulfill this with only full and differential database backups and, again, log backups will be necessary.

However, if it turns out there are no log backups simply because they are not required, then the database should not be operating in FULL recovery; we can switch to using the SIMPLE recovery model, where the inactive portion of the transaction log is automatically marked as reusable, at checkpoint.

If the database does need to operate in the FULL recovery model, then start taking log backups, or investigate the need to take more frequent log backups. The frequency of the transaction log backups depends on a number of factors such as the frequency of data changes, and on SLAs for acceptable data loss in the event of a crash. In addition, you should take steps to ensure that the log growth is controlled and predictable in future, as described in the Proper Log Management section, later in this level.

Active transactions

If the value returned for log_reuse_wait_desc is ACTIVE_TRANSACTION, then you are suffering from the second most common cause of a full or large transaction log in SQL Server: long-running or uncommitted transactions. Rerun the transaction from Listing 7.1, but without committing it, and then rerun Listing 7.2 and you should see this value listed (don't forget to go back and commit the transaction).

As discussed in the Log Truncation and Space Reuse section of Level 2, a VLF inside the transaction log can only be truncated when it contains no part of the active log. If the database is using the FULL or BULK_LOGGED recovery models, only a log backup operation can perform this truncation. Long-running transactions in a database delay truncation of the VLFs that contain the log records generated after the start of the transaction, including the log records generated by changes to data in the database by other concurrent sessions, even when those changes have been committed. Additionally, the amount of space required by a long-running transaction will be increased by space reservations for "compensation log records," which are the log records that would be generated if the transaction were rolled back in the system. This reservation is required to ensure that the transaction can be reverted successfully without running out of log space during the rollback.

Another common cause of the Active Transaction value for log_reuse_wait_desc is the presence of "orphaned" explicit transactions that somehow never got committed. Applications that allow for user input inside a transaction are especially prone to this kind of problem.

Long-running transactions

One of the most common operations that results in a long-running transaction, which also generates large numbers of log records in a database, is archiving or purging of data from a database. Data retention tends to be an afterthought in database design, usually considered after the database has been active for a period and is approaching the capacity limits of the available storage on a server.

Usually, when the need to archive data arises, the first reaction is to remove the unneeded data from the database using a single DELETE statement, as shown in Listing 7.4. To produce some simple test data, this script uses a simplified version of Jeff Moden's random data generator (see Level 1, Listing 1.3), modified slightly to produce dates into 2012.

USE FullRecovery ;

GO

IF OBJECT_ID('dbo.LogTest', 'U') IS NOT NULL

DROP TABLE dbo.LogTest ;

SELECT TOP 500000

SomeDate = CAST(RAND(CHECKSUM(NEWID())) * 3653.0 + 37534.0 AS DATETIME)

INTO dbo.LogTest

FROM sys.all_columns ac1

CROSS JOIN sys.all_columns ac2 ;

-- delete all but the last 60 days of data

DELETE dbo.LogTest

WHERE SomeDate < GETDATE() - 60Listing 7.4: Bulk data deletion.

Depending on the number of rows that exist in the date range to be deleted, this can become a long-running transaction that will cause transaction log growth issues, even when the database is using the SIMPLE recovery model. The presence of cascading FOREIGN KEY constraints or auditing triggers exacerbates the problem. If other tables reference the target table, via FOREIGN KEY constraints designed to CASCADE ON DELETE , then SQL Server will also log details of the rows deleted through the cascading constraint. If the table has a DELETE trigger on it, for auditing data changes, SQL Server will also log the operations performed during the trigger's execution.

To minimize the impact on the transaction log, the data purge operation should be broken down into a number of shorter, individual transactions. There are a number of ways to break a long-running transaction down into smaller batches. If cascading constraints or a DELETE trigger exist for a table, we can perform the DELETE operation inside of a loop, to delete one day of data at a time, as shown in Listing 7.5. Note that, in this simple example, there are insufficient rows in our table to justify use of this technique over a simple DELETE; it is better suited to data purges of millions of rows. Note also that speed is not necessarily the primary concern with batch deletes (Listing 7.5 will run much slower than Listing 7.4). The bigger concerns are avoiding explosive log growth and lock escalation.

DECLARE @StopDate DATETIME ,

@PurgeDate DATETIME

SELECT @PurgeDate = DATEADD(DAY, DATEDIFF(DAY, 0, MIN(SomeDate)), 0) ,

@StopDate = DATEADD(DAY, DATEDIFF(DAY, 0, GETDATE()) - 60, 0)

FROM dbo.LogTest

WHILE @PurgeDate < @StopDate

BEGIN

DELETE dbo.LogTest

WHERE SomeDate < @PurgeDate

SELECT @PurgeDate = DATEADD(DAY, 1, @PurgeDate)

ENDListing 7.5: Breaking down data purges into smaller transactions.

Using this model for purging data, the duration of each DELETE transaction is only the time required to delete a single day's data from the table, plus the time required for any triggers or cascading constraints to perform their operations. If the database uses the SIMPLE recovery model, the next checkpoint will truncate the log records generated by each daily purge. If the database uses the FULL or BULK_LOGGED recovery model, the next log backup will truncate the log records generated by each daily purge, as long as no part of the active log exists inside the VLFs containing log records relating to the data purge.

When cascading constraints or auditing triggers are not a factor in the process, we can use a different method to purge the data from the table while minimizing the transaction duration. Instead of performing a single-day DELETE operation, which can affect more or less data, depending on the number of rows that exist for a specific date, use of the TOP operator inside the DELETE statement will limit the number of rows affected by each loop of the operation. By capturing into a variable the number of rows affected by the DELETE operation, using @@ROWCOUNT , the operation can continue to purge data from the table in small batches, until the value of @@ROWCOUNT is less than the number of rows specified in the TOP clause of the DELETE statement, as shown in Listing 7.6.

This method only works when triggers and cascading constraints aren't being used because, when they are, the result of @@ROWCOUNT will not be the actual rows deleted from the base table, but instead the number of rows that are affected by the trigger execution or through enforcing the cascading constraint.

DECLARE @Criteria DATETIME ,

@RowCount INT

SELECT @Criteria = GETDATE() - 60 ,

@RowCount = 10000

WHILE @RowCount = 10000

BEGIN

DELETE TOP ( 10000 )

FROM dbo.LogTest

WHERE SomeDate < @Criteria

SELECT @RowCount = @@ROWCOUNT

ENDListing 7.6: Using the TOP operator inside the DELETE statement for data purges.

These methods work in any edition of SQL Server 2000, 2005, and 2008 to minimize transaction duration during data purge operations.

However, if the database is SQL Server 2005 or 2008 Enterprise Edition, and the data purging process runs regularly, then an even better way to purge the data is to partition the table using a sliding window partition on the column used to delete the data. This will have even less impact on the transaction log, since the partition containing the data can be switched out of the table and truncated, which is an operation for which SQL Server logs only the extent de-allocations.

Managing archiving

It is well outside the scope of this Stairway to delve into full, automated archiving schemes. However, a possible archiving process could involve partitioning, and duplicate schemas between tables, allowing a partition to be switched out of one table and into another one, minimizing the active portion of data in the main OLTP table, but reducing the archiving process to being metadata changes only. Kimberley Tripp has produced a detailed white paper called Partitioned Tables and Indexes in SQL Server 2005, which also covers the sliding window technique, (see http://msdn.microsoft.com/en-us/library/ms345146(v=sql.90).aspx).

Uncommitted transactions

By default, SQL Server wraps any data modification statement in an implicit transaction to ensure that, in the event of a failure, SQL Server can roll back the changes already made at the point of failure, returning the data to a consistent state. If the changes succeed, the implicit transaction is committed to the database. In contrast to implicit transactions, which occur automatically, we create explicit transactions in code to wrap multiple changes into a single transaction, ensuring that all the changes can be undone by issuing a ROLLBACK command, or persisted by issuing a COMMIT for the transaction.

When used properly, explicit transactions can ensure that data modifications that span multiple tables complete successfully as a unit, or not at all. When used incorrectly, however, orphaned transactions remain active in the database, preventing truncation of the transaction log, and so resulting in the transaction log growing or filling up. There are a number of causes of orphaned transactions in SQL Server, and it's beyond the scope of this level to investigate them in full detail. However, some of the most common causes are:

- application timeouts caused by a long-running transaction

- incorrect error handling in T-SQL or application code

- failure during trigger execution

- linked server failures resulting in orphaned distributed transactions

- no corresponding

COMMIT/ROLLBACKstatement to aBEGIN TRANSACTIONcommand.

Once a transaction starts, it will remain active until the connection that created the transaction issues a COMMIT or ROLLBACK statement, or the connection disconnects from the SQL Server (the exception is when using bound connections, which allow sessions to share locks).

Modern applications generally utilize connection pooling, keeping connections to the SQL Server in a pool for reuse by the application, even when the application code calls the Close() method on the connection. It is critical that you understand this last point when troubleshooting orphaned transactions, since even though the connection is reset before being added or returned to the application's connection pool, open transactions continue to exist in the database if they have not been properly terminated.

Identifying the active transaction

The transaction-related Dynamic Management Views (http://msdn.microsoft.com/en-us/library/ms178621.aspx) provide a wealth of extra information regarding about the state of current transactions and the work they perform (see Listing 7.1). However, some DBAs still regard DBCC OPENTRAN as the fastest way to identify whether an orphaned (or just long-running) transaction is the root cause of transaction log growth.

This command can accept the database name as an input parameter in the format DBCC OPENTRAN DatabaseName) where DatabaseName is the name of the database to check for open transactions. If an active transaction exists in the database, this command will output information similar to that shown in Listing 7.7.

DBCC OPENTRAN (FullRecovery) Transaction information for database 'FullRecovery'. Oldest active transaction: SPID (server process ID): 56 UID (user ID) : -1 Name : user_transaction LSN : (897:15322:1) Start time : Sep 18 2012 1:01:29:390PM SID : 0x010500000000000515000000fd43461e19525f12828ba628ee0a0000 DBCC execution completed. If DBCC printed error messages, contact your system administrator.

Listing 7.7: Sample output from DBCC OPENTRAN .

DBCC OPENTRAN reports only the oldest active transaction, and the primary indicator of whether or not the active transaction is problematic is the Start Time. Generally, uncommitted transactions that become problematic with regard to transaction log growth have been open for a long period of time.

The other important piece of information is the SPID (server process ID; in the DMVs this is replaced by session_id ), which identifies the session that created the open transaction. We can use the SPID to determine whether the transaction is actually an orphaned transaction or just a long-running one, by querying the sysprocesses view (in SQL Server 2000) or the sys.dm_exec_sessions and sys.dm_exec_connections DMVs in SQL Server 2005 and later, as shown in Listing 7.8. Note that the sysprocesses view is still available in SQL Server 2005 and later for backwards compatibility. In each query, simply replace the session_id value with the one you saw when running Listing 7.7 (we have commented out certain columns, simply for readability of the output).

USE master

GO

SELECT spid ,

status ,

-- hostname ,

-- program_name ,

-- loginame ,

login_time ,

last_batch ,

( SELECT text

FROM ::

fn_get_sql(sql_handle)

) AS [sql_text]

FROM sysprocesses

WHERE spid = 56

USE FullRecovery

GO

SELECT s.session_id ,

s.status ,

-- s.host_name ,

-- s.program_name ,

-- s.login_name ,

s.login_time ,

s.last_request_start_time ,

s.last_request_end_time ,

t.text

FROM sys.dm_exec_sessions s

JOIN sys.dm_exec_connections c ON s.session_id = c.session_id

CROSS APPLY sys.dm_exec_sql_text(c.most_recent_sql_handle) t

WHERE s.session_id = 56

Listing 7.8: Identifying orphaned or long-running transactions using the DMVs.

If the session is in a runnable, running, or suspended status, then it is likely that the source of the problem is a long-running, rather than orphaned, transaction. However, only further investigation will confirm. It is possible that an earlier transaction failed and the connection was reset, for use under connection pooling, and that the currently executing statement is not associated with the open transaction.

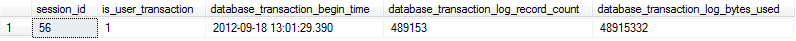

In SQL Server 2005 and later, we can use the sys.dm_tran_session_transactions and sys.dm_tran_database_transactions DMVs to gather information specific to the open transaction, including the transaction start time, number of log records used by the open transaction, as well as the bytes of log space used, as we saw previously in Listing 7.1. Listing 7.9 shows a simplified version, with sample output.

SELECT st.session_id ,

st.is_user_transaction ,

dt.database_transaction_begin_time ,

dt.database_transaction_log_record_count ,

dt.database_transaction_log_bytes_used

FROM sys.dm_tran_session_transactions st

JOIN sys.dm_tran_database_transactions dt

ON st.transaction_id = dt.transaction_id

AND dt.database_id = DB_ID('FullRecovery')

WHERE st.session_id = 56

Listing 7.9: Gathering information about the open transaction.

Unless the application was specifically designed to check for, and handle, orphaned transactions, the only way to clear the transaction is to KILL the session, which will cause the transaction to roll back as the connection terminates, allowing the space in the log be made available for reuse during the next log backup. However, the ramifications of performing the rollback must be understood.

Other possible causes of log growth

In addition to those previously identified, there are a few other problems that may prevent reuse of space in the log, and so lead to excessive log growth. I'll cover a few of them here, but for further discussion on these issues, please see Gail Shaw's article, Why is my transaction log full? at http://www.sqlservercentral.com/articles/Transaction+Log/72488/.

Replication

During transactional replication, it is the job of the log reader agent to read the transaction log, looking for log records that are associated with changes that need to be replicated to subscribers (i.e. are "pending replication"). Once the changes are replicated, it marks the log entry as "replicated." Slow or delayed log reader activity can lead to records being left as "pending replication" for long periods, during which time they will remain part of the active log, and so the parent VLF cannot be truncated. A similar problem exists for log records required by the Change Data Capture (CDC) feature.

In either case, the log_reuse_wait_desc column of sys.databases will show REPLICATION as the root cause of the problem. The problem will also reveal itself in the form of bottlenecks in the throughput performance of the transaction log disk array, specifically, delayed read operations under concurrent write loads. Writes to the log file occur sequentially, but read operations associated with the log reader agent and log backups read the file sequentially as well. Having sequential reads and writes occurring at the same time can, depending on the level of activity in the system and the size of the active portion of the log, result in random I/O activity as the disk heads have to change position to read from the beginning of the active log and then write to the end of the active log. We can use the disk latency PerfMon counters, Physical Disk\Disk Reads/sec and Physical Disk\Disk Writes/sec counters to troubleshoot this type of problem. See Level 2 of the free eBook, Troubleshooting SQL Server (http://www.simple-talk.com/books/sql-books/troubleshooting-sql-server-a-guide-for-the-accidental-dba/) for further details on this topic.

The first step in troubleshooting these REPLICATION wait issues is to verify that the log reader SQL Agent jobs are actually running. If they are not, attempt to start them. If this fails, you'll need to find out why.

If the jobs are running but the REPLICATION waits persist, and the transaction log is growing rapidly, you need to find some way to get the relevant log entries marked as "replicated" so that space in their parent VLFs can be reused. Unfortunately, there is no perfect solution that will avoid side effects to replication or CDC in the environment, but you could try one of the solutions below.

- In the case of transactional replication, use the

sp_repldonecommand to mark all of the log records currently waiting on the log reader to mark them as replicated, but this will require re-initialization of the subscribers. With CDC, this command will not resolve the problem with transaction log growth. - Disabling CDC or replication and performing a manual resynchronization of the data. Having disabled CDC or replication, the pending replication log records in the transaction log will no longer be pending and the next log backup, in

FULLorBULK_LOGGEDrecovery, orCHECKPOINToperation inSIMPLErecovery, will clear them out. However, the trade-off is that the environment will require manual synchronization of the data for CDC, or it will require re-initialization of the subscribers for replication, if these features are added back to the database.

Remember that simply switching to the SIMPLE recovery model, in the hope of truncating the log, will not work since replication and CDC are both supported using SIMPLE recovery, and the log records will continue to be required until the log reader SQL Agent process processes them.

Snapshot Replication schema change issue

There is a known issue with Snapshot Replication in SQL Server 2005 that causes log entries that are marked for replication of schema changes not to be unmarked when the changes are replicated. This problem is explained in the following blog post that also explains how to work around the issue by using sp_repldone: Size of the transaction log increasing and cannot be truncated or shrunk due to Snapshot Replication (http://blogs.msdn.com/b/sqlserverfaq/archive/2009/06/01/size-of-the-transaction-log-increasing-and-cannot-be-truncated-or-shrinked-due-to-snapshot-replication.aspx).

ACTIVE_BACKUP_OR_RESTORE

When the log_reuse_wait_desc column shows ACTIVE_BACKUP_OR_RESTORE as the current wait description, a long-running full or differential backup of the database is the most likely cause of the log reuse problems. During a full or differential backup of the database, the backup process delays log truncation so that the active portion of the transaction log can be included as a part of the full backup. This allows changes made to database pages during the backup operation to be undone when the backup is restored WITH RECOVERY, to bring the database to a consistent state. If such waits are causing persistent problems, you'll need to investigate ways to optimize the backup process, such as by improving the performance of the backups (via backup compression) or improving the performance of the underlying disk I/O system.

DATABASE_MIRRORING

When the log_reuse_wait_desc column shows DATABASE_MIRRORING, as the current wait description, asynchronous database mirroring operations may be the cause of the log reuse issues.

In synchronous mirroring, transactions on the principal are only committed once their related log records have been transferred to the mirror database. For asynchronous database mirroring, the log records are transferred later and the principal's log can't be truncated until they are. When mirroring problems arise, a large number of log records on the principal can remain part of the active log, preventing log space reuse, until copied over to the mirror.

For synchronous database mirroring, we may see a value of DATABASE_MIRRORING if the mirror is not contactable, due to a broken or very slow connection, or suspension of the mirroring session. For asynchronous database mirroring, we may well see this value during normal operation, as well as during connection problems.

In such cases, I would first check the status of the mirroring session for the affected database(s). If they are not synchronizing correctly, then you will need to troubleshoot the cause of the failed connection between the principal and the mirror. One of the most common problems with database mirroring, when certificates are used to secure the endpoints, is the expiration of the certificates, requiring that they be recreated. A full discussion of troubleshooting mirroring connectivity problems is outside of the scope of this Stairway but, unless the databases are properly synchronizing so that the log records are being sent to the mirror, the active portion of the transaction log on the principal will continue to grow and not be able to be truncated without breaking the mirroring setup.

If the transaction rate on the principal greatly exceeds the rate at which log records can be transferred to the mirror, then the log on the principal can grow rapidly. If the mirror server is being used for reporting, by creating snapshots, verify that the disk I/O configuration for the mirror is not saturated, by using the disk latency PerfMon counters mentioned earlier. If this is where the problem is, eliminating use of the mirror server for reporting may provide temporary relief of the problem. If the problem is strictly the sheer volume of transactions and the database is not running on SQL Server 2008 or higher, then upgrading may be able to resolve the problem due to the use of log stream compression in SQL Server 2008 and beyond.

The best approach is to determine the cause of the mirroring issue and resolve it. For example, tuning operations that produce a significant number of log records, such as bulk loading data, or reorganizing indexes, may reduce the impact to the system overall during the operation.

Handling a Transaction Log Full Error

In the worst case, transaction log mismanagement or sudden, rapid, log growth can cause a transaction log to grow and eventually devour all available space on its drive. At this point it can grow no more, you'll encounter Error 9002, the transaction log full error, and the database will become read-only.

Despite the urgency of this problem, it's important to react calmly, and avoid the sort of "spontaneous" solutions that are covered in the following section, Mismanagement or What Not To Do. Obviously the pressing concern is to allow SQL Server to continue to write to the log, by making more space available. The first port of call is to establish if the cause is a lack of log backups. Run the query in Listing 7.1 and if the value for the log_reuse_wait_desc column is Log Backup then this is the likely cause of the issue. A query to the backupset table (http://msdn.microsoft.com/en-us/library/ms186299.aspx) in the MSDB database, as shown in Listing 7.10, will confirm whether or not log backups are being taken on the database, and when the last one was taken.

USE msdb ;

SELECT backup_set_id ,

backup_start_date ,

backup_finish_date ,

backup_size ,

recovery_model ,

[type]

FROM dbo.backupset

WHERE database_name = 'DatabaseName'Listing 7.10: Which backups were taken, and when.

In the type column, a D represents a database backup, L a log backup and I a differential backup. If there are no log backups, or they are very infrequent, then your best course of action is to take a log backup (assuming the database is operating in FULL or BULK_LOGGED recovery model). Hopefully, this will free up substantial space within the log and you can then implement an appropriate log backup scheme, and log file growth management strategy.

If, for some reason, it is not possible to perform a log backup due to a lack of disk space, or the time it would take to perform a log backup exceeds the acceptable time to resolve the problem, then, depending on the disaster recovery policy for the database in question, it might be acceptable to force a truncation of the log by temporarily switching the database to the SIMPLE recovery model in order that inactive VLFs in the log can be truncated on CHECKPOINT. You can then switch the recovery model back to FULL and perform a new full database backup (or a differential backup, assuming a full backup was taken at some previous time) to restart the log chain for point-in-time recovery. Of course, you'll still need to investigate the problem fully, in order to make sure that the space isn't simply devoured again. Bear in mind also that, as discussed previously, if the problem preventing space reuse is anything other than Log Backup, then this technique won't work, since those records will simply remain part of the active log, preventing truncation.

If a lack of log backups isn't the problem, or taking a log backup doesn't solve the problem, then investigating the cause will require a little more time. If it is quick and easy to make extra space on the log drive then do so. This might mean shifting off other files, or adding capacity to the current log drive, or adding an extra log file on a different disk array, but it will buy you the bit of breathing space you need to get the database out of read-only mode, and perform a log backup.

If a log backup fails to free up space, you need to find out what is preventing space reuse in the log. Interrogate sys.databases (Listing 7.1) to find out if anything is preventing reuse of space in the log, and take appropriate action, as described in the earlier Lack of log space reuse section.

If this reveals nothing, you'll need to investigate further and find out which operations are causing the excessive logging that led to the log growth, as described in the Diagnosing a Runaway Transaction Log section.

Ultimately, having resolved any space reuse issue, we may still have a log file that is consuming the vast majority of the space on the drive. As a one-off measure, i.e. assuming we will take steps to ensure proper management of log growth in the future (see the Proper Log Management section, following shortly), it is acceptable to use DBCC SHRINKFILE (see http://msdn.microsoft.com/en-us/library/ms189493.aspx) to reclaim the space used by a bloated transaction log file. We'll provide an example of how to do this in Level 8.

We can either specify a target_size to which to shrink the log file, or we can specify 0 (zero) as the target size and shrink the log to its smallest possible size, and then immediately resize it to a sensible size using ALTER DATABASE . The latter is the recommended way, as it minimizes fragmentation of the log file. This fragmentation issue is the main reason why you should never schedule regular DBCC SHRINKFILE tasks as a means of controlling the size of the log; we discuss this in more detail in the next section.

Mismanagement or What Not To Do

Unfortunately, a quick search of the Internet for "Transaction Log Full" will return a number of forum threads, blog posts, and even articles published on seemingly reputable SQL Server sites, which recommend remedial action that is, frankly, dangerous. We'll cover a few of the more popular suggestions here.

Detach database, delete log file

The idea here is that you clear all users off the database, detach the database (or shut it down), delete the log file (or rename it) and then re-attach the database, causing a new log file to be created at whatever size is dictated by the model database. This is arguably the most appalling of all the terrible ways to handle a full transaction log. It can result in the database failing to start, leaving it in the RECOVERY_PENDING state.

Depending on whether or not the database had been cleanly shut down at the time of the log deletion, the database may not be able to perform the UNDO and REDO operations that are a normal part of the database recovery process, because the transaction log is missing, and so can't return the database to a consistent state. When the log file is missing, and the database requires the transaction log to perform crash recovery, the database will fail to start up properly and the only recourse will be to restore the database from the most recent backup available, which will most likely result in data loss.

Creating, detaching, re-attaching, and fixing a suspect database

Under specific circumstances, it may be possible to hack the existing database into a configuration that allows the transaction log to be rebuilt, although it may compromise the integrity of the data contained in the database. This type of operation is, at best, a last-ditch effort that may be used when there is absolutely no other way of recovering the database data, and it is not a recommended practice of the authors, technical editors, or anyone else involved in the authoring of this Stairway series. For an explanation of how to attempt hacking a database back into SQL Server where the transaction log file has been deleted, see Paul Randal's blog post, Creating, detaching, re-attaching, and fixing a suspect database (http://www.sqlskills.com/blogs/paul/post/TechEd-Demo-Creating-detaching-re-attaching-and-fixing-a-suspect-database.aspx).

Forcing log file truncation

In SQL Server 2000 and 2005, BACKUP LOG WITH TRUNCATE_ONLY was a supported (though deprecated in SQL 2005) way of forcing SQL Server to truncate the transaction log, while the database was operating in the FULL or BULK_LOGGED model. Using this command does not actually make a backup copy of the contents of the log; the records in the truncated VLFs are discarded. So, unlike with a normal log backup, you're destroying your LSN chain and you will only be able to restore to a point in time in any previous log backup files. Also, even though the database is set to FULL (or BULK_LOGGED) recovery, it will actually, from that point on, operate in an auto-truncate mode, continuing to truncate inactive VLFs on checkpoint. In order to get the database operating in FULL recovery again, and restart the LSN chain, you'd need to perform a full (or differential) backup.

This command was often used without people realizing the implications it had for disaster recovery, and it was deprecated in SQL Server 2005 and removed from SQL Server 2008. Unfortunately, an even more insidious variation of this technique, which continues to be supported, has crept up to take its place, and that is BACKUP LOG TO DISK='NUL', where NUL is a "virtual file" that discards any data that is written to it. The really nasty twist to this technique is that, unlike with BACKUP LOG WITH TRUNCATE_ONLY , SQL Server is unaware that the log records have simply been discarded. As far as SQL Server is concerned, a log backup has been performed, the log records are safely stored in a backup file so the LSN chain is intact, and any inactive VLFs in the live log can safely be truncated. Any subsequent, conventional log backups will succeed but will be entirely useless from the point of view of disaster recovery since a log backup file is "missing" and so the database can only be restored to some point in time covered by the last standard log backup that was taken before BACKUP LOG TO DISK='NUL' was issued.

Do not use either of these techniques. The right way to "force" log truncation is to temporarily switch the database into the SIMPLE recovery model, as discussed earlier.

Scheduled shrinking of the transaction log

As discussed in the Handling a Transaction Log Full Error section, in rare circumstances where transaction log growth has occurred due to a lack of management, and where the log growth is currently being actively managed, using DBCC SHRINKFILE to reclaim the space used by the transaction log file is an acceptable operation.

However, we should never shrink the transaction log as part of normal, scheduled maintenance operations. The reason for this is that every time we shrink the log, it will need to grow again immediately to store log records for subsequent transactions. As discussed previously in the Sizing and Growing the Log section, the transaction log cannot take advantage of instant file initialization, so all log growths incur the cost to zero-byte the storage space that SQL Serve needs to allocate. In addition, if we rely on auto-growth for transaction log growth (see the next section for a fuller discussion), excessive VLFs can accumulate in the log file and this log fragmentation will impact the performance of any process that needs to read the log file and, if fragmentation gets really bad, possibly even the performance of data modifications.

The best practice for the transaction log file continues to be to size it appropriately up front, so it does not have to grow under normal operations. Then, monitor its usage periodically to determine if the need to grow it manually occurs, allowing you to determine the appropriate growth size and determine the number and size of VLFs that will be added to the log file. We'll discuss this in more detail in Level 8.

Proper Log Management

In the absence of any unexpected operations or problems that have resulted in unusual log growth (replication problems, uncommitted transactions, and so on, as discussed earlier), if the transaction log associated with a FULL recovery model database fills up, and is forced to grow, there are really only two causes:

- the size of the log file was too small to support the volume of data changes that were occurring in the database

- the frequency of log backups was insufficient to allow rapid reuse of space within the log file.

The best thing to do, if you can't increase the frequency of the log backups by decreasing the amount of time between them, is to manually grow the log file to a size that prevents it from having to grow using auto-growth when under load, and then leave the log file that size. Having a large transaction log file that we've grown manually to minimize the number of VLFs is not a bad thing, even if the log file has free space a majority of the time. We'll discuss this in full detail in Level 8.

Summary

The transaction log is critical to the operation of a SQL Server database, and the ability to minimize data loss in the event of a disaster. In a situation where the log is growing explosively, or is even full, the DBA needs to act very quickly to diagnose and fix the problem, but it's also important to act calmly, and avoid unthinking reactions such as forcing log truncation, then scheduling regular log shrinks, which will cause more harm than good.

Acknowledgements

Many thanks to Jonathan Kehayias, lead author of Troubleshooting SQL Server (http://www.simple-talk.com/books/sql-books/troubleshooting-sql-server-a-guide-for-the-accidental-dba/), available as a free eBook, for contributing additional material to this level.