Introduction

This paper will demonstrate a process for loading a comma-delimited data file into a staging table for further processing then validating the data and moving specific data into host tables. There are several excellent papers and tutorials individually describing simple data load processes, error-handling, logging, etc. using SSIS but I haven’t found a source demonstrating a process start-to-finish which motivated me to write this paper. The intended audience includes beginning SSIS developers, DBAs, and anyone with a desire to experience the magic of data management with this remarkable tool.

A significant challenge in producing this paper was the identification of a data set that was non-trivial, not proprietary, yet allowed the demonstration of important techniques available in SSIS. I settled on using a data set I downloaded from a U.S. government WWW site containing data produced using taxpayer dollars and thus, publicly available. The data set is the Worldwide M1+ Earthquakes, Past 7 Days csv file available at http://explore.data.gov/catalog/raw/.

I’ve included ample screen shots where appropriate (please note that the following screen shots are of my local system’s directory structure, etc.) as well as attempted to describe the process as clearly and concisely as possible without insulting anyone’s intelligence; however, be forewarned that finding the balance between too much or not enough detail is a challenge unto itself.

In developing this paper it quickly become apparent that a complete treatment of the sample ETL project would be too involved for a single paper; therefore, I divided the paper into two parts where I believe the division makes sense. Taken together the two parts of the paper provide, in my opinion (biased as it is), a thorough example of a non-trivial ETL project using “real” data. For the record, I use the terms Task and Transformation synonymously SSIS purest would say that this is incorrect I accept their judgment (although, I would argue that the definition of a “task” may generically include a transformation. For the novice, a Task acts upon the Control Flow while a Transformation acts upon the Data Flow).

Validation and Transformation

The goal of this example is to demonstrate the use of the SSIS components to import, validate, transform, and load data into a database. When I was searching for a data set for this example I wanted a data set that was non-trivial, somewhat interesting, and that would require certain data validation and transformations. The validations and transformations are not particularly stupendous, they are not meant to be; instead they are meant to demonstrate the technique which can be exploited in more complex scenarios.

Transformation

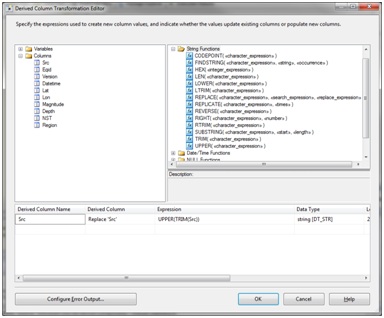

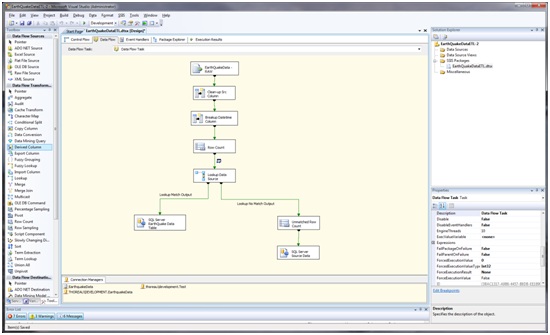

First let’s add a Row Count Task and an integer variable to store the count of rows imported. Now, add a Derived Column Task so that we can make in-stream changes to the Src column. The Derived Column Task should be configured as shown below:

I leave the transformation of the Datetime input field into its component DayOfWeek and UTCDateTime parts as an exercise for the reader.

Validation

Validating the source of the data requires dragging a Lookup Task onto the design surface. The EarthQuake data sources are:

AK,Alaska Seismic Network

BK,UC Berkeley

CI,Caltech/USGS Regional Seismic Network

GO,USGS Golden Network

HV,Hawaii Volcano Observatory Network

LL,Lawrence Livermore Lab NTS network

MB,Montana Bureau of Mines and Geology

NC,NCSN

NE,Nevada Seismic Network

PG,Pacific Gas & Electric Diablo Canyon network

SN,Southern Great Basin network - UNR

TS,Terrascope Network

UG,Unocal Geothermal network

US,US NSN

UU,University of Utah Regional Network

UW,University of Washington Seismic Network

WR,California Department of Water Resources

YL,Yellowstone Seismic Network

Create and populate a table to hold these values:

CREATE TABLE [dbo].[SourceID]

(

[ID] [char](2) NULL,

[Name] [varchar](50) NULL

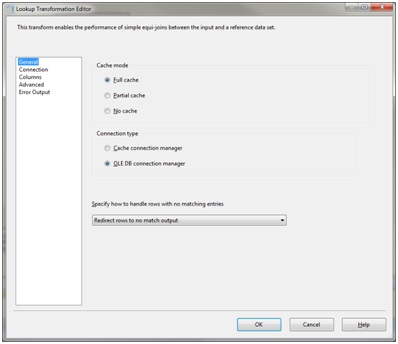

)The Lookup Task outputs are distinguished by whether they match the validation criteria or not. Here’s what the configuration of the task should look like:

Select Redirect rows to no match output. Create a table with the same definition as the destination table naming it EarthQuakeDataLookupErrors (Note the added ID identity column).

CREATE TABLE [EarthQuakeDataLookupErrors]

(

[ID] int IDENTITY(1,1),

[Src] varchar(2),

[Eqid] varchar(8),

[Version] varchar(1),

[Datetime] varchar(42),

[Lat] real,

[Lon] real,

[Magnitude] real,

[Depth] real,

[NST] smallint,

[Region] varchar(52)

)

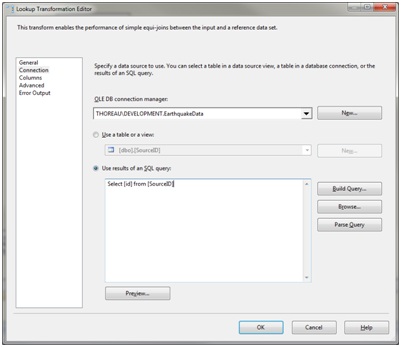

Maximize performance by using a SQL query to limit the comparison data to the specific column values of interest; in this case ID.

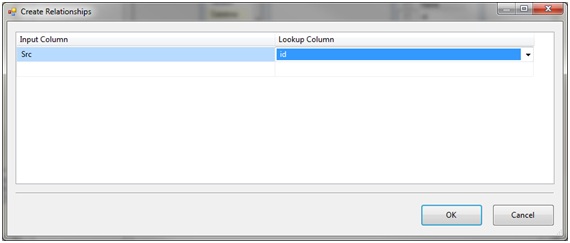

Relate the source column to be validated to the validation column by connecting the columns with a line or, as shown above, right click on the Src column and select Edit Mappings.

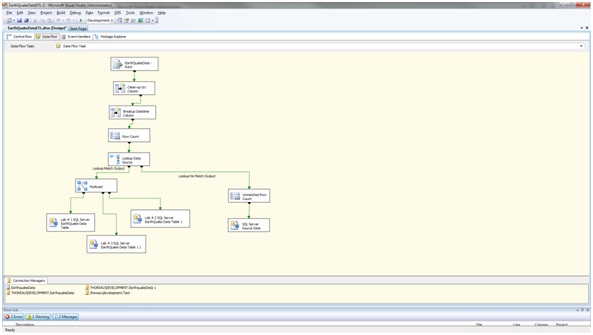

The design surface should now look like this:

Add a Row Count Task and a variable named UnmatchedRowCount and another SQL Server Destination connected to the EarthQuakeDataLookupErrors table.

Remove one of the records from the SourceID lookup table as a test. Run the package. Green? Check your tables; did the data get loaded as expected? Did the unmatched data get loaded into the EarthQuakeDataLookupErrors table?

Logging

During the package development process we can use breakpoints and watches to discern the details of the execution of the package. However, we use logging when the package is moved to the testing and / or production environments so we can know how the package is behaving during execution. As with other features in SSIS we have choices for the implementation of logging; I choose to log to a SQL Server table because I run dozens of packages and have scripts monitoring the logging table to alert me to any issues. Again, it’s my choice it doesn’t have to be yours.

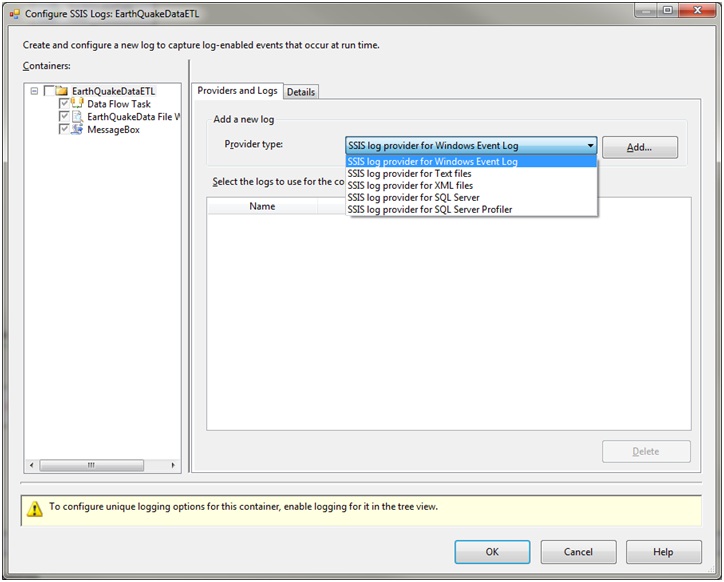

To enable logging select Logging… under the SSIS menu item in BIDS, the following dialog appears:

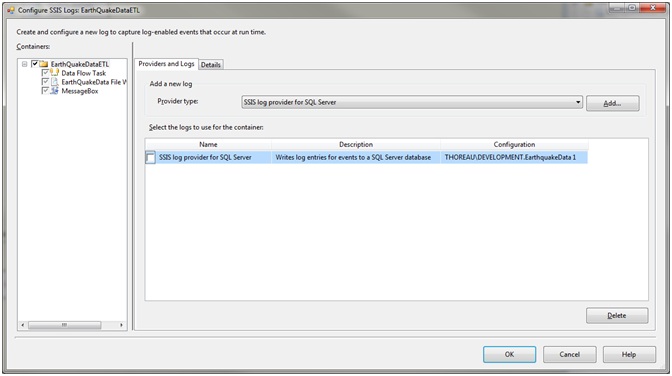

Notice the built-in logging choices! Select SSIS log provider for SQL Server then click the Add… button. Typically, I use a distinct database for logging to simplify my monitoring tasks; however, for this example let’s but the logging in the EarthquakeData database by selecting Configuration, then either creating or selecting the appropriate Connection Manager as shown below:

The log configuration dialog should resemble the following:

Examine the Details tab that lists the available events we can now log. Click the OK button.

Create the following tables in the EarthquakeData database:

Create table PackageLog ( [ExecutionID] varchar(50), [PackageName] varchar(50), [PackageID] varchar(50), [UserName] varchar(50), [MachineName] varchar(50), [StartDateTime] DateTime, [EndDateTime] DateTime ) Create Table ErrorLog ( [ExecutionID] varchar(50), [SourceName] varchar(250), [Message] varchar(250), [MessageCode] varchar(25), [LogDateTime] DateTime )

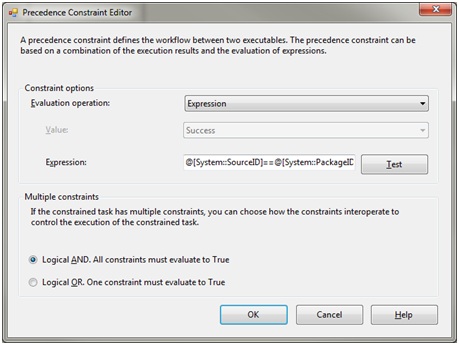

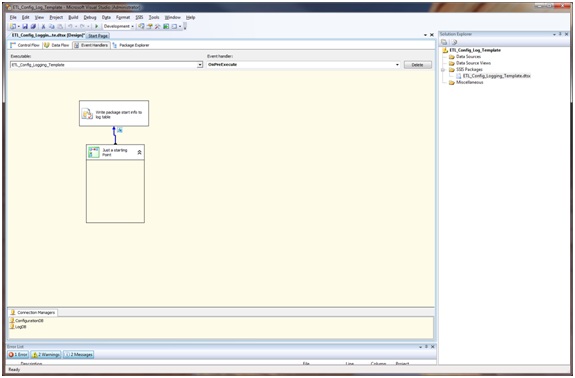

Now, let’s build an Event Handler. Select the Event Handlers tab then select the OnPreExecute event from the Event handler drop-down. To define the actions to be taken for the OnPreExecute event drag a Sequence Container and an Execute SQL Task onto the design surface; connect the Sequence Container to the Execute SQL task. In this case the Sequence Container is simply a place holder allowing the definition of an expression; right click on the task connector and select Edit… to display the following dialog:

From the Evaluation operation: drop down select Expression, then in the Expression: edit box enter the following and press the Test button:

@[System::SourceID]==@[System::PackageID]

Click the OK button.

The Execute SQL Task contains the following statement to insert values into the PackageLog table we created previously:

INSERT INTO [PackageLog]

([ExecutionID],

[PackageName],

[PackageID],

[UserName],

[MachineName],

[StartDateTime],

[EndDateTime])

VALUES (?,?,?,?,

Getdate(),

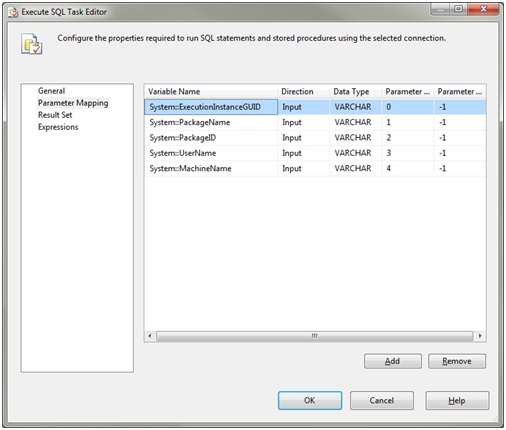

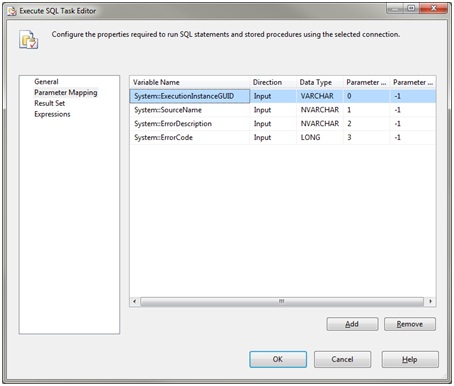

NULL) Create the following Parameter Mapping:

Use an Execute SQL Task defined with the following T-SQL command in the OnError event handler as follows:

INSERT INTO [ErrorLog]

([ExecutionID],

[SourceName],

[Message],

[MessageCode],

[LogDateTime])

VALUES (?,?,?,?,

Getdate())Create the following Parameter Mapping:

In general, I do not log to the destination database as I’ve shown in this example instead I have an ETL database where I store configuration, logging, and error tables used by all of my SSIS packages. The centralized repository simplifies the use of a “template” project that contains the basic logging demonstrated above as well as the package database configuration demonstrated previously.

Propagation

This example requires propagation of the data to multiple environments immediately following the processing of the source file.

Drag a Multicast task onto the design surface and connect the Lookup Match Output as its input. Then create three SQL Server Destinations using Connections Managers of your choosing, your Data Flow design surface might look like this:

The Multicast task can direct identical output to multiple destinations. In this example use of the Multicast task is trivial; the power to create copies of the data stream allows the data to be processed in multiple ways within the same package.

Summary

SSIS provides a rich set of components to perform the most common ETL tasks with “drag-and-drop” ease. Packages can be constructed with rigorous error and exception handling as well as verbose logging allowing deployment of robust ETL processes. This paper introduced basic SSIS concepts for ETL development intended to prime the reader’s conceptual framework for future exploration; as an example, the reader may choose to experiment with the Fuzzy Logic task which is very handy when you need to clean-up data, or the reader may choose to explore the extensibility of SSIS using the Script task.

My best recommendation for becoming adept in the use of SSIS is to experiment with the examples provided in this paper and the many other papers on the subject, try the different components, build a dataflow, and begin the journey to becoming an SSIS magician.