Article Overview

Recently I had a requirement to extract a few million records of consisting of different transaction types. These were to be stored on a data warehouse server due the operational system purging this data after a period of time.

Previously, the archived transaction records table was some 39 million rows,and storing all that data in one table could cause the table to become a bit of an unwieldy beast, especially on a server that was not configured cope with such table sizes. I decided that the best way forward was to split the data based on the transaction type into separate tables. This would keep the table sizes smaller, and subsequently more manageable, especially when querying specific transaction types.

My initial thought was that I need to use a conditional split in a Integration Services package, to split the data and populate predefined tables using OLE DB connections. There are a number of drawbacks to this solution. The conditional split must be maintained and it does not allow for new transaction types, without maintenance. Secondly, each destination table for a transaction type must have its own destination connector.

The Solution

Using a process that Tim Mitchell devised in Creating Dynamic Outputs in SSIS, as a starting point, the process I describe in this article imports data into a SQL database and splits the data into separate tables and based on the data transaction type. The process will also create a new destination table, for a new transaction transaction types, without the need to modifying the package.

The process has 4 key tasks.

- Get a list of Distinct Transaction Types.

- Using a For Each Loop to iterate through the transactions types.

- Create the Destination Tables

- Populate the relevant transaction type table

Demo Data

To implement this demo, use the following SQL statements to create the tables and data used.

CREATE TABLE [dbo].[transactions_src]( [No] [numeric](10, 0) NOT NULL, [Type] [varchar](3) NOT NULL, [TxDate] [datetime] NOT NULL, [PackNo] [varchar](30) NULL ) ON [PRIMARY] go -- CREATE SAMPLE DATA Insert into dbo.transactions_src VALUES(43165068,'024','Apr 26 2010 3:48PM','10018462670205') Insert into dbo.transactions_src VALUES(43303078,'024','May 1 2010 7:46AM','81638794101878') Insert into dbo.transactions_src VALUES(43303080,'024','May 1 2010 7:46AM','21301754010217') Insert into dbo.transactions_src VALUES(43373284,'024','May 5 2010 3:23PM','31409594050217') Insert into dbo.transactions_src VALUES(43428086,'024','May 7 2010 12:35AM','21116154100208') Insert into dbo.transactions_src VALUES(43428089,'024','May 7 2010 12:35AM','21116154200208') Insert into dbo.transactions_src VALUES(43428092,'024','May 7 2010 12:35AM','21116154700208') Insert into dbo.transactions_src VALUES(43755186,'024','May 20 2010 3:52AM','23117047300208') Insert into dbo.transactions_src VALUES(43821593,'006','May 22 2010 7:58AM','23519055700208') Insert into dbo.transactions_src VALUES(43821630,'006','May 22 2010 7:58AM','23519060000208') Insert into dbo.transactions_src VALUES(43821631,'006','May 22 2010 7:58AM','23519060100208') Insert into dbo.transactions_src VALUES(43821632,'006','May 22 2010 7:58AM','23519060300208') Insert into dbo.transactions_src VALUES(43821633,'006','May 22 2010 7:58AM','23519060400208') Insert into dbo.transactions_src VALUES(43821634,'006','May 22 2010 7:58AM','23519060500208') Insert into dbo.transactions_src VALUES(43886329,'008','May 25 2010 9:38AM','61382122071815') Insert into dbo.transactions_src VALUES(43886330,'008','May 25 2010 9:38AM','42014799101874') Insert into dbo.transactions_src VALUES(43886333,'008','May 25 2010 9:38AM','42014770101874') Insert into dbo.transactions_src VALUES(43886335,'008','May 25 2010 9:39AM','42014793101874') Insert into dbo.transactions_src VALUES(43891812,'008','May 25 2010 1:34PM','24518043800208') Insert into dbo.transactions_src VALUES(43891818,'008','May 25 2010 1:34PM','24518044400208') Insert into dbo.transactions_src VALUES(43908261,'001','May 26 2010 6:41AM','00000000135334') Insert into dbo.transactions_src VALUES(43915085,'024','May 26 2010 11:43AM','31912600050217') Insert into dbo.transactions_src VALUES(43915088,'024','May 26 2010 11:43AM','31912745050217') Insert into dbo.transactions_src VALUES(43915091,'008','May 26 2010 11:43AM','21115194400208') Insert into dbo.transactions_src VALUES(43915092,'008','May 26 2010 11:43AM','21115195300208') Insert into dbo.transactions_src VALUES(43915093,'008','May 26 2010 11:43AM','21116003100208') Insert into dbo.transactions_src VALUES(43915094,'008','May 26 2010 11:43AM','21116003300208') Insert into dbo.transactions_src VALUES(43926976,'024','May 26 2010 7:08PM','38957090200210') Insert into dbo.transactions_src VALUES(43926977,'024','May 26 2010 7:08PM','37957090100210') Insert into dbo.transactions_src VALUES(43926978,'024','May 26 2010 7:08PM','33957040200210') Insert into dbo.transactions_src VALUES(43926982,'008','May 26 2010 7:09PM','SEPL1007139') Insert into dbo.transactions_src VALUES(43967758,'027','May 28 2010 7:51AM','31905477030217') Insert into dbo.transactions_src VALUES(43967760,'027','May 28 2010 7:51AM','31905473030217') Insert into dbo.transactions_src VALUES(43967762,'024','May 28 2010 7:52AM','41924328101874') Insert into dbo.transactions_src VALUES(43967764,'027','May 28 2010 7:52AM','31905123050217') Insert into dbo.transactions_src VALUES(43967765,'024','May 28 2010 7:52AM','41924327101874') Insert into dbo.transactions_src VALUES(43967766,'042','May 28 2010 7:53AM','ET057075') Insert into dbo.transactions_src VALUES(43967768,'027','May 28 2010 7:53AM','31905598030217') Insert into dbo.transactions_src VALUES(43967771,'027','May 28 2010 7:53AM','31905214030217') Insert into dbo.transactions_src VALUES(43967773,'027','May 28 2010 7:53AM','31905256030217') Insert into dbo.transactions_src VALUES(43985538,'008','May 28 2010 8:56PM','31908857101874') Insert into dbo.transactions_src VALUES(43985543,'008','May 28 2010 8:56PM','22319033900208') Insert into dbo.transactions_src VALUES(43985544,'008','May 28 2010 8:56PM','21320029000208') Insert into dbo.transactions_src VALUES(43985549,'008','May 28 2010 8:56PM','21120070300208') Insert into dbo.transactions_src VALUES(43995612,'027','May 29 2010 8:53AM','21319119700208')

Get a list of transaction types

A key objective of this process is to deal with potential new transaction types. To do this the first task must be to get a list of the transaction types that need to be handled and can be iterated through later in the process.

First add a data flow task to the Control Flow area and give it a suitable name. e.g. "DFT - Get Tx Types".

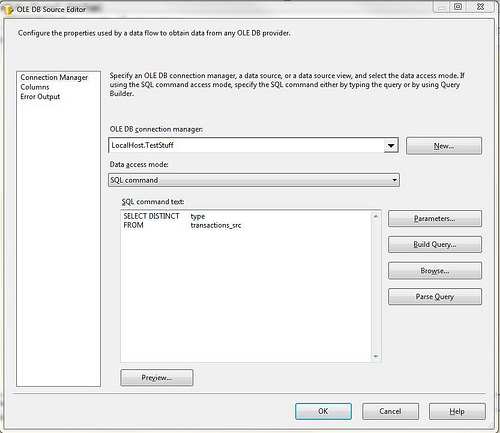

Next create an OLE DB connection to the data source table. (the source table in this example is: transaction_src). Specify the data access mode as SQL command, this example use a T-SQL Select Distinct statement to get a distinct list of transaction types (field name: type).

SELECT DISTINCT type FROM transactions_src

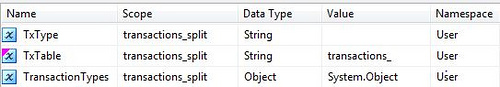

Return to the control flow area, right click and select Variables.

Now create a new variable called TransactionTypes, with a data type of object. Ensure the scope is at package level (this allows the variable to be accessed from any task in the package). The variable will store the list of transaction types, to feed a for each loop container.

Return to the data flow task DFT - Get Tx Types. Add a Record Set Destination. name it: RS_DST - TransactionTypes

Connect the OLE_SRC - transaction_src component using the on success output.

Open the RS_DST - TransactionTypes component. Set the variableName value to the variable set up in step 5. User::TransactionTypes.

Under Input columns, select the type column and then click ok to complete defining the Record Set.

Comment: At this point it is probably useful to add a data viewer to the data flow and execute the package so you can see what transaction types will come through. The example record set should produce the following record set:

type

001

006

008

024

027

042

Create a For Each Loop to iterate through the transactions types

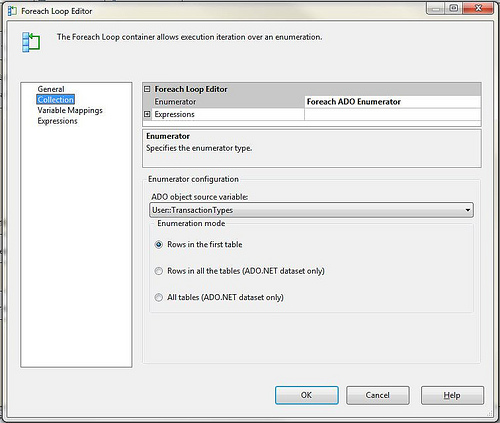

The second task of the process, is to iterate through each identified value in the record set. This is done using the For Each Loop Container, that will carry out defined tasks for each value in the record set.

1. Add a For Each Loop Container to the control flow and connect the data flow task DFT - Get Tx Types to the For Each Loop container.

2. Open the For Each Loop container

3. Rename the For Each Loop container to FEL - TransactionTypes

4. Under collection, set the Enumerator to Foreach ADO Enumerator

5. Then from ADO component Source variable dropdown list, select the ADO record set variable we defined earlier: User::Transaction

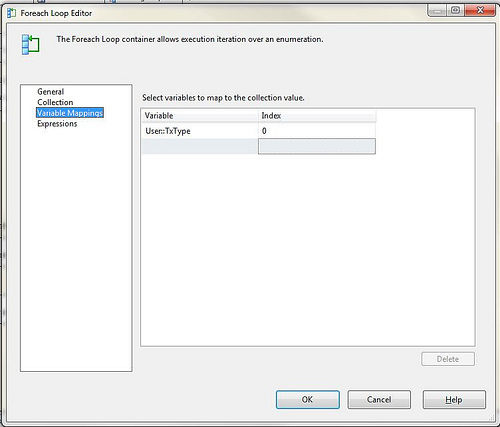

6. Under Variable Mappings, Create a new variable called: TxType and give it an index of 0

7. Click ok to complete the defining of FEL - TransactionTypes

Create the Destination Tables

At this stage of the process, you define the task that will create the destination tables for the data that will be split. A fundamental part of this it to make the process dynamic enough that no human intervention is required. This can be achieved by using a SQL Task in the control flow, that utilizes the transaction types returned to a data set earlier in the process, as a parameter, to create the required destination tables and relevant table keys and indexes.

At this point you need to think about how you will be using the data in the destination table, once it has been extracted.

Think about what you will do with the data once it is extracted. Is there is any commonality in what data fields you will query over all or the majority of data types to be split into separate tables? Defining an index on the destination tables for the data that is going to be queried on will be beneficial, especially if you have millions of rows.

If each data type has completely differing querying criteria then talking the approach of always adding a index on a data field, is unlikely to be beneficial. For example, the scenario that lead me to the solution I describe here was the need query millions of rows in one table. While splitting that data by transaction type into separate tables will make it more manageable, I will in 95% of cases, still need to query that information based on either transaction No, transaction date or pack no, no matter what the data type is. So, in my design I take the step of defining the relevant primary keys and indexes on those fields.

Creating the index as part of the create table statement for the destination table, removes the need to retrospectively add the index once the table has been created and populated with data, which would have an increased overhead on the system during the create.

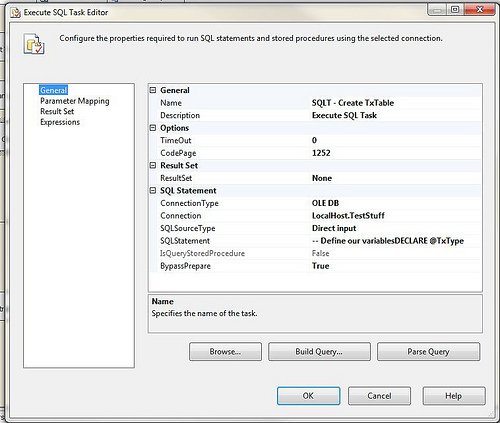

Having defined the For each loop container and decided on your destination tables layout and indexes, the next task is to add a task to the control flow in the For Each Loop container to create the destination tables if the destination table does not already exist.

In order to successfully configure a destination adapter later in the process, you will need to create a 'default' table, with the same table layout as the destination tables. (It is not necessary to create the indexes on the default table, as it will not be populated with data).

For this example, destination tables will be named 'transactions_{TxTpe}' e.g. transactions_000. The table will have a primary key on the [No] column. The 'default table will be named 'transactions_'with no primary keys or indexes.

Use the following T-SQL to create the default table (transactions_') in the database for the example:

CREATE TABLE [dbo].[transactions_]( [No] [numeric](10, 0) NOT NULL, [Type] [varchar](3) NOT NULL, [TxDate] [datetime] NOT NULL, [PackNo] [varchar](30) NULL ) ON [PRIMARY]

In the SSIS package, add an Execute SQL Task to the FEL - TransactionTypes and name it SQLT - Create TxTable. Edit the SQL Task setting the connection string the to test database configured earlier. Set the SQL SourceType property to Direct Input and enter the following T-SQL statement (explanations as to purpose are noted in the code comments):

-- Define our variables

DECLARE @TxType char(3)

DECLARE @TableName varchar(30)

DECLARE @TableCreateCmd varchar(max) -- Set @TxType: Maps a parameter to the SQL Task parameter list

set @TxType = ? -- Set @TableName: Defines what the destination table name, e.g. dbo.transactions_000

set @TableName = 'dbo.transactions_' + @TxType -- Set @TableCreateCmd: Assigns the create table statement,for the destination table to the variable

-- This uses the @table name variable & @TxType variable

set @TableCreateCmd = 'CREATE TABLE ' + @TableName + '(

[No] [numeric](10, 0) NOT NULL,

[Type] [varchar](3) NOT NULL,

[TxDate] [datetime] NOT NULL,

[PackNo] [varchar](30) NULL

CONSTRAINT [PK_extract_saves_trans_' + @TxType + '] PRIMARY KEY CLUSTERED

( [NO] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]' -- Check if destination table already exists, If it does not, execute the create table statement

IF NOT EXISTS (SELECT * FROM sys.components WHERE component_id = COMPONENT_ID(@TableName) AND type in (N'U'))

begin

EXEC (@TableCreateCmd)

end

Click ok to save the T-SQL statement.

To complete the definition, under parameter mappings add the User::TxType variable. (this will be passed to the T-SQL script that generates the create table statement). The parameter mapping for the script must be defined as follows:

- Variable Name: User::TxType

- Direction: Input

- Data Type: Varchar

- Parameter Name: 0

- Parameter Size: -1

Click Ok to complete defining the create table SQL task.

Populate the relevant transaction type table

The final stage of the process it to build the data flow task that will populate the destination tables dynamically, based on the transaction type in the current iteration of the For Each Loop.

The steps listed below create a data flow task in the for each loop container. Add a variable to the package, that will use a dynamic SQL statement for source table select and lastly a dynamic dynamic OLE DB destination adapter that will populate the relevant destination tables based on transaction type. I.e. type 000 transactions will populate transactions_000, type 006 will populate transactions_006 etc etc.

1. Add a Data Flow Task to the FEL - TransactionTypes

2. Rename it DFT - Extract Transactions

3. Connect the SQLT - Create TxTable component to DFT - Extract Transactions

4. Right Click the control flow area and select Variables

5. Create a new variable called TxTable. with a package level scope, a String data type and a value of transactions_

6. When complete - under the properties pane for the variable TxTable, set the property EvaluateAsExpression to True.

7. Then set the Expression property value to: " transactions_" + @[User::TxType]

This will setup a variable table name that will be set to the transaction type of the current iteration of the For Each Loop.. E.g. transactions_000

8. Open the DFT - Extract Transactions component

9. Add an OLE Source Component to the data flow.

10. Rename it OLE_SRC - transactions_src

11. Open the OLE_SRC - transactions_src component to configure it

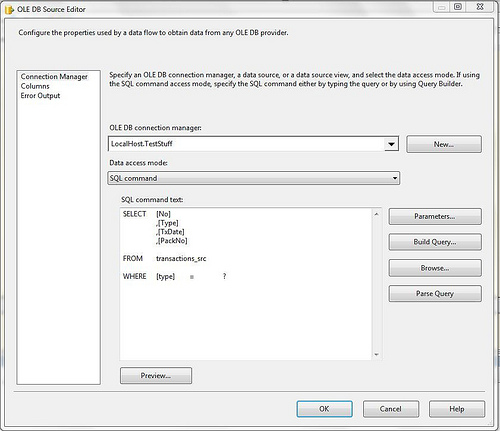

12. Connect the connection Manager to our localhost connection defined earlier in the first section.

13. From the Data Access Mode dropdown, select SQL Command and enter the following SQL command:

SELECT [No], [Type], [TxDate], [PackNo] FROM transactions_src WHERE [type] = ?

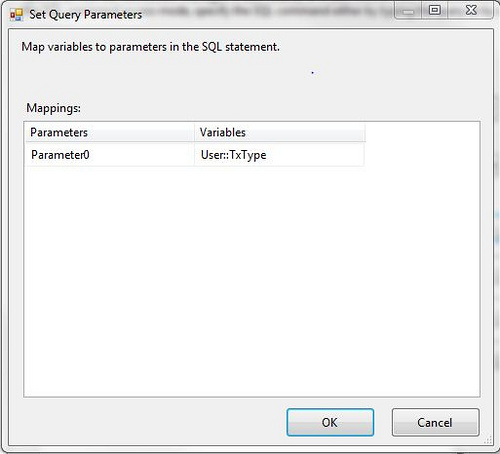

14. Then click Parameters

15. Map the variable User::TxType to the parameter0, click ok

16. Select the columns to validate the column mappings

17. Click Ok to exit

18. Add to the DFT - Extract Transactions data flow an OLE DB Destination

19. Rename the OLE Destination as OLE_DST - transactions_

20. Connect OLE_SRC - transactions_src to OLE_DST - transactions_

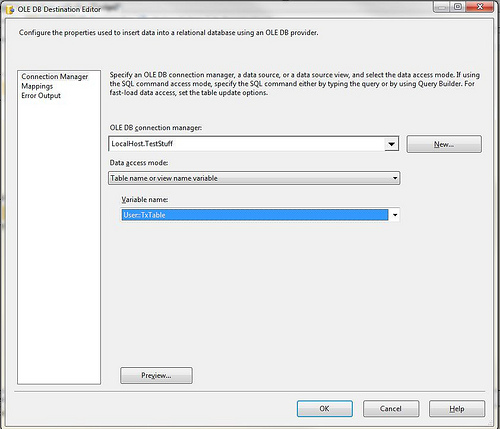

21. Open the OLE_DST - transactions_

22. Connect the connection Manager to our localhost connection defined earlier in the first section.

23. From the Data Access Mode dropdown, select Table name or view name variable and then select the variable User::TxTable

The default the table name was set earlier to be transactions_. When package is run, it will then append transaction type of the current iteration of the for each loop, thereby populating the correct transaction destination table, e.g transaction_000.

24. Click Mappings to map the columns correctly.

25. Click ok to complete.

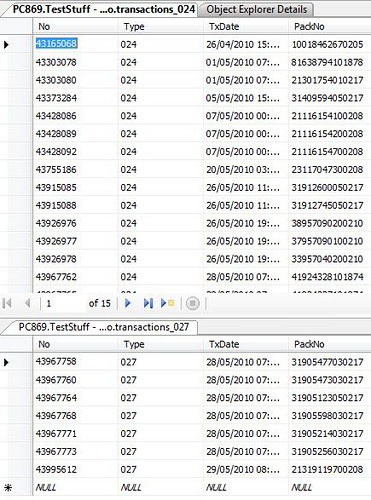

And that is it! Execute the package. The result will be the creation and population of transaction tables for each transaction type

Conclusion

This article demonstrates how integration services can be used to split transactions types (including the unexpected transactions types), into their own database table with suitable predefined keys and indexes.

It removes the need to know in advance what transaction types the process will receive. It also removes the need for maintenance of a conditional split component in an integration service package and the creation of destination tables for new transaction types in the database.

Thanks to Tim Mitchell for inspiring me to come up with the solution.