You are likely reading this series to learn how to be a more productive SQL Server Integration Services developer. I applaud your desire and decision, and I sincerely hope the information contained herein has provided ideas and information to help you be more productive. I am always on the lookout for ways to become a better data integration developer. Specifically, I seek out ways to improve code quality and reduce the amount of time required to build solutions. Those goals motivated me to begin practicing patterns-based development in the first place, which eventually led to the idea for the SQL Server 2012 Integration Services Design Patterns book.

This article is adapted from a chapter in the SQL Server 2012 Integration Services Design Patterns book – the chapter entitled Business Intelligence Markup Language. If you are interested in learning patterns of data integration using SSIS, I encourage you to obtain a copy of the book. Although the book focuses on SSIS 2012, most of the material applies to earlier versions of SSIS. In this article we will build a basic Incremental Load SSIS 2012 package.

The SSIS sample in this article is based on an article at SQL Server Central – Anatomy of an Incremental Load and a blog post at SqlBlog.com: SSIS Design Patterns – Incremental Load. In those writings, Andy demonstrated how to load data incrementally first using Transact-SQL, followed by examples of how to perform the same loads using SSIS.

Template-izing a Biml File

In this article we will inject BimlScript into the code in the IncrementalLoad.biml file you created in Building an Incremental Load Package – Level 3. We will use IncrementalLoad.biml as a template to create a Biml file that will construct one SSIS package – using the same pattern (the Incremental Load pattern) – for each table in a database.

The Incremental Load pattern can be used to accomplish the capital “E” in ETL (Extract, Transform, and Load) as it is an Extraction SSIS Design Pattern.

Note: This pattern requires that the Source and Stage tables must exist prior to expanding the Biml file to create the SSIS Packages. Even with this caveat – which we will overcome in a future article in the Stairway to Biml series – we believe this example demonstrates the power and game-changing attributes of Biml.

Prerequisites

Let’s begin by adding new tables to the SSISIncrementalLoad_Source database and creating – and populating – a new database named SSISIncrementalLoad_Stage. First, add new tables to SSISIncrementalLoad_Source by executing the T-SQL script shown in Listing 1:

USE SSISIncrementalLoad_Source

GO

-- Create Source1

If Not Exists(Select name

From sys.tables

Where name = 'Source1')

CREATE TABLE dbo.Source1

(ColID int NOT NULL

,ColA varchar(10) NULL

,ColB datetime NULL

,ColC int NULL

,constraint PK_Source1 primary key clustered (ColID))

Go

-- Load Source1

INSERT INTO dbo.Source1

(ColID,ColA,ColB,ColC)

VALUES

(0, 'A', '1/1/2007 12:01 AM', -1),

(1, 'B', '1/1/2007 12:02 AM', -2),

(2, 'C', '1/1/2007 12:03 AM', -3),

(3, 'D', '1/1/2007 12:04 AM', -4),

(4, 'E', '1/1/2007 12:05 AM', -5),

(5, 'F', '1/1/2007 12:06 AM', -6)

-- Create Source1

If Not Exists(Select name

From sys.tables

Where name = 'Source2')

CREATE TABLE dbo.Source2

(ColID int NOT NULL

,Name varchar(25) NULL

,Value int NULL

,constraint PK_Source2 primary key clustered (ColID))

Go

-- Load Source2

INSERT INTO dbo.Source2

(ColID,Name,Value)

VALUES

(0, 'Willie', 11),

(1, 'Waylon', 22),

(2, 'Stevie Ray', 33),

(3, 'Johnny', 44),

(4, 'Kris', 55)

-- Create Source3

If Not Exists(Select name

From sys.tables

Where name = 'Source3')

CREATE TABLE dbo.Source3

(ColID int NOT NULL

,Value int NULL

,Name varchar(100) NULL

,constraint PK_Source3 primary key clustered (ColID))

Go

-- Load Source3

INSERT INTO dbo.Source3

(ColID,Value,Name)

VALUES

(0, 101, 'Good-Hearted Woman'),

(1, 202, 'Lonesome, Onry, and Mean'),

(2, 303, 'The Sky Is Crying'),

(3, 404, 'Ghost Riders in the Sky'),

(4, 505, 'Sunday Morning, Coming Down')Listing 1 – Adding and Populating New SSISIncrementalLoad_Source Tables

The T-SQL in Listing 1 creates and populates three new tables.

- dbo.Source1

- dbo.Source2

- dbo.Source3

Execute the T-SQL shown in Listing 2 to build and populate the SSISIncrementalLoad_Stage database:

Use master

Go

If Not Exists(Select name

From sys.databases

Where name = 'SSISIncrementalLoad_Stage')

Create Database SSISIncrementalLoad_Stage

Go

Use SSISIncrementalLoad_Stage

Go

CREATE TABLE dbo.tblSource(

ColID int NOT NULL,

ColA varchar(10) NULL,

ColB datetime NULL,

ColC int NULL

)

CREATE TABLE dbo.stgUpdates_tblSource(

ColID int NOT NULL,

ColA varchar(10) NULL,

ColB datetime NULL,

ColC int NULL

)

Go

INSERT INTO dbo.tblSource

(ColID,ColA,ColB,ColC)

VALUES

(0, 'A', '1/1/2007 12:01 AM', -1),

(1, 'B', '1/1/2007 12:02 AM', -2),

(2, 'N', '1/1/2007 12:03 AM', -3)

Go

CREATE TABLE dbo.Source1(

ColID int NOT NULL,

ColA varchar(10) NULL,

ColB datetime NULL,

ColC int NULL

)

CREATE TABLE dbo.stgUpdates_Source1(

ColID int NOT NULL,

ColA varchar(10) NULL,

ColB datetime NULL,

ColC int NULL

)

Go

INSERT INTO dbo.Source1

(ColID,ColA,ColB,ColC)

VALUES

(0, 'A', '1/1/2007 12:01 AM', -1),

(1, 'Z', '1/1/2007 12:02 AM', -2)

Go

CREATE TABLE dbo.Source2(

ColID int NOT NULL,

Name varchar(25) NULL,

Value int NULL

)

CREATE TABLE dbo.stgUpdates_Source2(

ColID int NOT NULL,

Name varchar(25) NULL,

Value int NULL

)

Go

INSERT INTO dbo.Source2

(ColID,Name,Value)

VALUES

(0, 'Willie', 11),

(1, 'Waylon', 22),

(2, 'Stevie', 33)

Go

CREATE TABLE dbo.Source3(

ColID int NOT NULL,

Value int NULL,

Name varchar(100) NULL

)

CREATE TABLE dbo.stgUpdates_Source3(

ColID int NOT NULL,

Value int NULL,

Name varchar(100) NULL

)

Go

INSERT INTO dbo.Source3

(ColID,Value,Name)

VALUES

(0, 101, 'Good-Hearted Woman'),

(1, 202, 'Are You Sure Hank Done It This Way?')

GoListing 2 – Building and Populating the SSISIncrementalLoad_Stage Database

Add a New Biml File

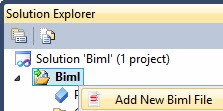

Let’s continue by adding a new Biml file to the Biml project by right-clicking the “Biml” project in Solution Explorer and then click “Add New Biml File” as shown in Figure 1:

Figure 1 – Adding a New Biml File

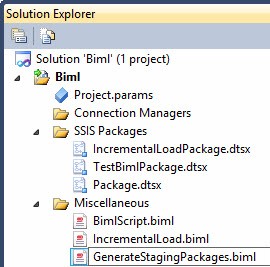

Rename this file GenerateStagingPackages.biml as shown in Figure 2:

Figure 2 – Renaming the New Biml File

Business Intelligence Markup Language (Biml) supports .Net code inline. It is quite easy to underestimate the power of this functionality, known as BimlScript. We will use C# to create table objects based on the tables in a source database. We begin with importing assemblies for use in the remainder of the Biml file.

Before the <Biml> tag, add the code snippet highlighted and shown in Listing 3:

<#@ import namespace="System.Data" #>

<#@ import namespace="Varigence.Hadron.CoreLowerer.SchemaManagement" #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

</Biml>

<# var connection = SchemaManager.CreateConnectionNode("SchemaProvider", "Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;"); #>

<# var tables = connection.GenerateTableNodes(); #>Listing 3 – Adding .Net Namespaces to Biml

Adding BimlScript

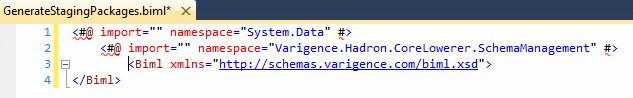

This is our first encounter with BimlScript. So far, we have been working with Biml proper, which is XML. Visual Studio understands XML so it works well with Biml, but BimlScript is not XML. Visual Studio, installed out of the box, does not understand and, if you paste BimlScript into your new Biml file, the Visual Studio environment will appear similar to that shown in Figure 3:

Figure 3 – Visual Studio is not Liking BimlScript!

Let’s fix this issue before continuing. How? By following the excellent advice of Paul Waters in his article entitled Overcoming BimlScript Copy and Paste Issues in Visual Studio.

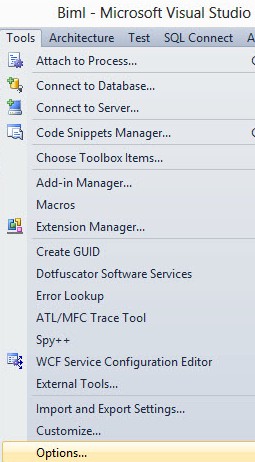

To stop Visual Studio from altering your BimlScript when you paste it into the editor, do the following:

1. Click Tools -> Options... as shown in Figure 4:

Figure 4 – Opening Visual Studio ToolsàOptions

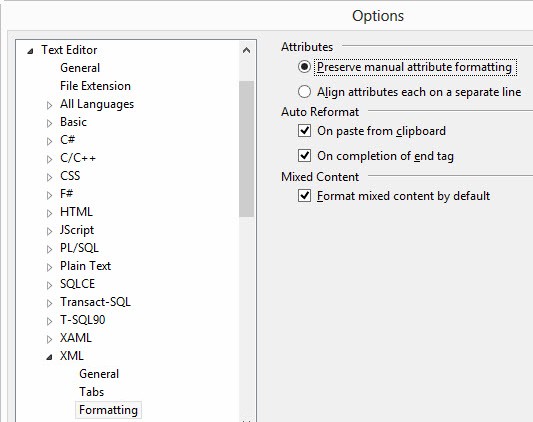

1. In the tree in the dialog that comes up, go to Text Editor -> XML -> Formatting as shown in Figure 5:

Figure 5 – The Text Editor / XML / Formatting Page in Visual Studio Options

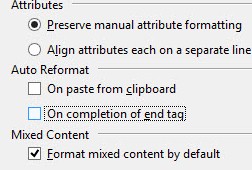

3. Uncheck "On Paste from clipboard"

4. Consider also unchecking "On completion of end tag"

Figure 6 – Unchecking the Recommended Options

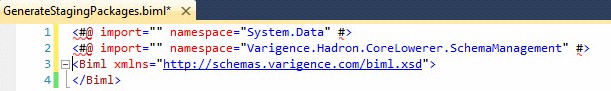

Now, cut the two lines of code from the GenerateStagingPackages.biml file and re-paste them. Your file should appear similar to that shown in Figure 7:

Figure 7 – Visual Studio Behaving When We Paste BimlScript

Defining Connections

The code in Listing 3 imports the System.Data and Varigence.Hadron.CoreLowerer.SchemaManagement namespaces into the Biml file. We will make use of the SchemaManagement next, shown in Listing 4:

<#@ import namespace="System.Data" #>

<#@ import namespace="Varigence.Hadron.CoreLowerer.SchemaManagement" #>

<# var connection = SchemaManager.CreateConnectionNode("SchemaProvider", "Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;"); #>

<# var tables = connection.GenerateTableNodes(); #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

</Biml>Listing 4 – Initial Method Calls to Namespaces in BimlScript

In Listing 4, we create a variable named “connection” and assign it the value of a SchemaManager ConnectionNode object which is aimed at the SSISIncrementalLoad_Source database. The “Connection” variable supports another variable named “tables”. The “tables” variable is populated from a call the “connection” variable’s “GenerateTableNodes()” method which populates the “tables” variable with a list of the tables found in the SSISIncrementalLoad_Source database.

After the <Biml> tag, add a “Connections” XML Node that contains two “Connection” child nodes. The Connections node and the Connections nodes are identical to those we built in Building an Incremental Load Package – Level 3, so I am not going to explain in detail. Your Biml file now appears similar to that shown in Listing 5:

<#@ import namespace="System.Data" #>

<#@ import namespace="Varigence.Hadron.CoreLowerer.SchemaManagement" #>

<# var connection = SchemaManager.CreateConnectionNode("SchemaProvider", "Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;"); #>

<# var tables = connection.GenerateTableNodes(); #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<Connections>

<Connection Name="SSISIncrementalLoad_Source" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;" />

<Connection Name="SSISIncrementalLoad_Stage" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Stage;Provider=SQLNCLI11.1;OLE DB Services=1;Integrated Security=SSPI;" />

</Connections>

</Biml>Listing 5 – Adding Connections to the GenerateStagingPackages.biml File

As with the IncrementalLoad.biml file we designed in Building an Incremental Load Package – Level 3, the Connection nodes are the templates for SSIS Connection Managers in the SSIS Package.

Defining Packages

Next, add a “Package” node immediately after the </Connections> tag. Here we will make a crucial modification to this Biml file and its capability. We begin a C# loop here that spans all but the last two lines of this Biml file. Inside this loop is found the Biml code, augmented with BimlScript, that will generate a package using the Incremental Load pattern for each table found in the SSISIncrementalLoad_Source database. How cool is that?

Your Biml file should now include the code from Listing 6, immediately after the </Connections> tag:

<#@ import namespace="System.Data" #>

<#@ import namespace="Varigence.Hadron.CoreLowerer.SchemaManagement" #>

<# var connection = SchemaManager.CreateConnectionNode("SchemaProvider", "Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;"); #>

<# var tables = connection.GenerateTableNodes(); #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<Connections>

<Connection Name="SSISIncrementalLoad_Source" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;" />

<Connection Name="SSISIncrementalLoad_Stage" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Stage;Provider=SQLNCLI11.1;OLE DB Services=1;Integrated Security=SSPI;" />

</Connections>

<Packages>

<# foreach (var table in tables) { #>

</Packages>

</Biml>Listing 6 – Adding the Packages Node and Starting a Loop

The loop defined in Listing 6 will drive the BimlScript engine as it creates an SSIS Package for each table found in the SSISIncrementalLoad_Source database. Because we are using the SSIS Incremental Load Design Pattern as the template for this package, this Biml file will construct an Incremental Load SSIS Package for each of these tables.

The “table” variable defined in the loop’s signature is used throughout the remainder of the GenerateStagingPackages.biml file.

Immediately after the beginning of the foreach loop BimlScript, add a “Package” node. Add a “Name” attribute to the Package node and set its value to “IncrementalLoad_<#=table.Name#>”. Next add a “ConstraintMode” attribute and set its value to “Linear”. Finally, add a “ProtectionLevel” attribute and set its value to “EncryptSensitiveWithUserKey” as shown in Listing 7:

<#@ import namespace="System.Data" #>

<#@ import namespace="Varigence.Hadron.CoreLowerer.SchemaManagement" #>

<# var connection = SchemaManager.CreateConnectionNode("SchemaProvider", "Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;"); #>

<# var tables = connection.GenerateTableNodes(); #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<Connections>

<Connection Name="SSISIncrementalLoad_Source" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;" />

<Connection Name="SSISIncrementalLoad_Stage" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Stage;Provider=SQLNCLI11.1;OLE DB Services=1;Integrated Security=SSPI;" />

</Connections>

<Packages>

<# foreach (var table in tables) { #>

<Package Name="IncrementalLoad_<#=table.Name#>" ConstraintMode="Linear" ProtectionLevel="EncryptSensitiveWithUserKey">

</Package>

</Packages>

</Biml>Listing 7 – The Package Node with BimlScript Replacements

This Biml code, like much in this Biml file, is copied from the IncrementalLoad.biml file and modified to accept BimlScript overrides from the foreach loop. Each SSIS Package generated when this Biml is executed will be named consistently: “IncrementalLoad_<Source Table Name>”.

Also note the “ConstraintMode” attribute of the ‘Package” node is set to “Linear.” In the IncrementalLoad.biml file, this was set to “Parallel.” The differences are subtle but powerful. First, the Biml compiler will automatically create precedence constraints for you. Specifically, it will create an OnSuccess precedence constraint in the Control Flow from one task to the next, based on the order the executables appear in the Biml file. This functionality makes scripting and simple file authoring extremely quick. Second, you can eliminate default InputPath nodes in the Data Flow Task because the default InputPath will connect to the default output path of the transformation that appears directly before it. You will only need to add InputPath and OutputPath nodes when connecting non-default inputs and outputs.

Defining the Truncate Execute SQL Task

Immediately following the <Package> tag, add a “Tasks” node, followed by an “ExecuteSQL” node configured as shown in Listing 8. Keep in mind you can copy similar Biml from the IncrementalLoad.biml file you built as part of Building an Incremental Load Package – Level 3. That’s one reason I am not going into a lot of detail here about the purpose and function of the Biml in this file; the purpose and function are described in great detail in Building an Incremental Load Package – Level 3:

<#@ import namespace="System.Data" #>

<#@ import namespace="Varigence.Hadron.CoreLowerer.SchemaManagement" #>

<# var connection = SchemaManager.CreateConnectionNode("SchemaProvider", "Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;"); #>

<# var tables = connection.GenerateTableNodes(); #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<Connections>

<Connection Name="SSISIncrementalLoad_Source" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;" />

<Connection Name="SSISIncrementalLoad_Stage" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Stage;Provider=SQLNCLI11.1;OLE DB Services=1;Integrated Security=SSPI;" />

</Connections>

<Packages>

<# foreach (var table in tables) { #>

<Package Name="IncrementalLoad_<#=table.Name#>" ConstraintMode="Linear" ProtectionLevel="EncryptSensitiveWithUserKey">

<Tasks>

<ExecuteSQL Name="Truncate stgUpdates_<#=table.Name#>" ConnectionName="SSISIncrementalLoad_Stage">

<DirectInput>Truncate Table stgUpdates_<#=table.Name#></DirectInput>

</ExecuteSQL>

</Tasks>

</Package>

</Packages>

</Biml>Listing 8 – Adding Tasks and the “Truncate Staging Table” Execute SQL Task

Again, note the generic naming of the Execute SQL Task that performs the truncate operation on the staging table. The name of the Source table will replace the <#=table.Name#> placeholder when the GenerateStagingPackages.biml file is executed. It will be named differently for each table in the Source database, but it will also be descriptive and accurate.

Defining the Incremental Load Data Flow Task

In the next listing (Listing 9), I am simply going to show you the Biml for the incrementally loading Data Flow Task, modified directly from the IncrementalLoad.biml file created in Building an Incremental Load Package – Level 3. Each component includes BimlScript where necessary to make the Biml generic enough to respond to different Source table schemas:

<#@ import namespace="System.Data" #>

<#@ import namespace="Varigence.Hadron.CoreLowerer.SchemaManagement" #>

<# var connection = SchemaManager.CreateConnectionNode("SchemaProvider", "Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;"); #>

<# var tables = connection.GenerateTableNodes(); #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<Connections>

<Connection Name="SSISIncrementalLoad_Source" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;" />

<Connection Name="SSISIncrementalLoad_Stage" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Stage;Provider=SQLNCLI11.1;OLE DB Services=1;Integrated Security=SSPI;" />

</Connections>

<Packages>

<# foreach (var table in tables) { #>

<Package Name="IncrementalLoad_<#=table.Name#>" ConstraintMode="Linear" ProtectionLevel="EncryptSensitiveWithUserKey">

<Tasks>

<ExecuteSQL Name="Truncate stgUpdates_<#=table.Name#>" ConnectionName="SSISIncrementalLoad_Stage">

<DirectInput>Truncate Table stgUpdates_<#=table.Name#></DirectInput>

</ExecuteSQL>

<Dataflow Name="Load <#=table.Name#>">

<Transformations>

<OleDbSource Name="<#=table.Name#> Source" ConnectionName="SSISIncrementalLoad_Source">

<DirectInput>SELECT <#=table.GetColumnList()#> FROM <#=table.SchemaQualifiedName#></DirectInput>

</OleDbSource>

<Lookup Name="Correlate" OleDbConnectionName="SSISIncrementalLoad_Stage" NoMatchBehavior="RedirectRowsToNoMatchOutput">

<DirectInput>SELECT <#=table.GetColumnList()#> FROM dbo.<#=table.Name#></DirectInput>

<Inputs>

<# foreach (var keyColumn in table.Keys[0].Columns) { #>

<Column SourceColumn="<#=keyColumn.Column#>" TargetColumn="<#=keyColumn.Column#>" />

<# } #>

</Inputs>

<Outputs>

<# foreach (var col in table.Columns) { #>

<Column SourceColumn="<#=col#>" TargetColumn="Dest_<#=col#>" />

<# } #>

</Outputs>

</Lookup>

<ConditionalSplit Name="Filter">

<OutputPaths>

<OutputPath Name="Changed Rows">

<# string exp ="";

foreach (var colex in table.Columns) { exp += "(" + colex + " != Dest_" + colex + ") || "; } #>

<Expression><#=exp.Substring(0, exp.Length - 4)#></Expression>

</OutputPath>

</OutputPaths>

</ConditionalSplit>

<OleDbDestination Name="stgUpdates_<#=table.Name#>" ConnectionName="SSISIncrementalLoad_Stage">

<InputPath OutputPathName="Filter.Changed Rows" />

<ExternalTableOutput Table="dbo.stgUpdates_<#=table.Name#>" />

</OleDbDestination>

<OleDbDestination Name="<#=table.Name#> Destination" ConnectionName="SSISIncrementalLoad_Stage">

<InputPath OutputPathName="Correlate.NoMatch" />

<ExternalTableOutput Table="dbo.<#=table.Name#>" />

</OleDbDestination>

</Transformations>

</Dataflow>

</Tasks>

</Package>

</Packages>

</Biml>Listing 9 –The Generic Data Flow Task

The BimlScript shown in Listing 9 dynamically generates an incrementally loading Data Flow Task, given the caveats listed near the beginning of this section. I’m not going to go through each injection of BimlScript but let’s take a look at some of the cooler automation, shall we?

First, take a look at the “DirectInput” tag of the “Correlate” Lookup Transformation:

<DirectInput>SELECT <#=table.GetColumnList()#> FROM dbo.<#=table.Name#></DirectInput>

This tag holds the SQL Statement that will be used by the lookup to bring back rows from the Destination table, shown in Figure 8:

Figure 8 – The SQL Command Text in the OLE DB Source Adapter

The GetColumnList method of the table variable returns a comma-delimited list of columns in the table. It is important to point out that this works because the source and destination tables are identical in design. This returns a list of columns from the source table and applies to a SELECT statement from the destination table. This is one of the caveats I mentioned earlier. Are there ways around this? Yes. But I am intentionally keeping this demonstration simple while pointing out the caveats of doing so.

There is more interesting BimlScript automation a few lines below:

<# foreach (var keyColumn in table.Keys[0].Columns) { #>

<Column SourceColumn="<#=keyColumn.Column#>" TargetColumn="<#=keyColumn.Column#>" />

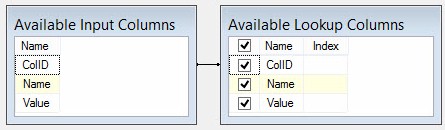

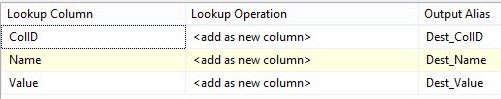

<# } #>This foreach loop iterates over the columns contained in the first keys collection of the table, table.Keys[0].Columns. This snippet of BimlScript is nested inside the Lookup Transformation’s <Input> node. The <Input> node defines the relationship – the “joining” – between the rows flowing into the lookup and the rows being returned by the lookup’s query against the destination table and is shown in Figure 9:

Figure 9 – “Joining” the Available Input Columns to the Available Lookup Columns

Note the “join” is assigned to columns in the first key, which is the primary key. Primary keys must contain at least one column but can contain many columns (this is called a compound or composite key, depending on the uniqueness of the values in the individual columns). The loop works for cases of one and for cases of many fields in the primary key, and the code inside the loop creates a mapping between each primary key field in the lookup transformation input (from the source table) and its corresponding field in the destination table.

The next bit of BimlScript is found in the Lookup Transformation’s <Output> node:

<# foreach (var col in table.Columns) { #>

<Column SourceColumn="<#=col#>" TargetColumn="Dest_<#=col#>" />

<# } #>The <Output> node configures the Lookup Transformation’s rows returned on the Lookup Transformation’s Columns page. The columns returned are added to the Data Flow Path in the output of the Lookup Transformation. This BimlScript code selects each column in the table.Columns collection, builds a <Column> node, applies the “Dest_” prefix, and maps it into the output of the lookup, as shown in Figure 10:

Figure 10 – Columns Returned From the Lookup Operation

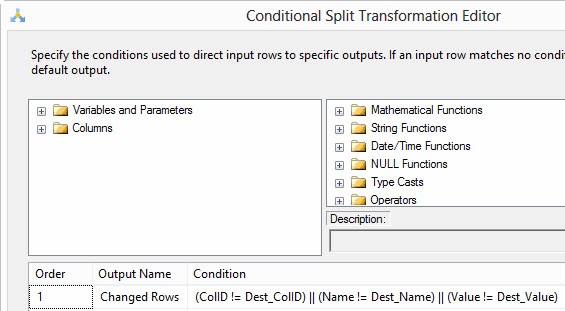

Let’s next look at the automation supplied to define the Conditional Split Transformation’s output path named “Changed Rows”:

<# string exp ="";

foreach (var colex in table.Columns) {

exp += "(" + colex + " != Dest_" + colex + ") || ";

} #>

<Expression><#=exp.Substring(0, exp.Length - 4)#></Expression>The BimlScript starts with the declaration of a variable named exp. A loop rolls through each column in table.Columns and is used to build a “brute-force” change-detection expression in the Conditional Split. The brute-force method works because the “Correlate” lookup transformation merges input and lookup columns to make one long row when it finds a match between values in the “joined” fields. This long row is sent to the Match output of the lookup transformation. The purpose of the brute-force expression is to compare the values in each field, searching for changes – inequality – in values, as shown in Figure 11:

Figure 11 – The “Brute-Force” Expression in the Changed Rows Condition

Each input field (from the source) is checked for inequality with its destination counterpart (example: Name != Dest_Name). These checks are strung together with OR operators (||), so the functional result of the expression is to detect any difference between the source and destination values. If a difference is detected, the row is sent to the Changed Rows output of the Conditional Split Transformation named “Filter”.

The remainder of the data flow task logic is described well in Building an Incremental Load Package – Level 3.

Defining the Set-Based Update Execute SQL Task

Let’s complete the Biml file by creating a generic template for the final Execute SQL Task that performs the set-based update for Changed Rows between the staging table and destination, shown highlighted in Listing 10:

<#@ import namespace="System.Data" #>

<#@ import namespace="Varigence.Hadron.CoreLowerer.SchemaManagement" #>

<# var connection = SchemaManager.CreateConnectionNode("SchemaProvider", "Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;"); #>

<# var tables = connection.GenerateTableNodes(); #>

<Biml xmlns="http://schemas.varigence.com/biml.xsd">

<Connections>

<Connection Name="SSISIncrementalLoad_Source" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Source;Provider=SQLNCLI11.1;Integrated Security=SSPI;" />

<Connection Name="SSISIncrementalLoad_Stage" ConnectionString="Data Source=(local);Initial Catalog=SSISIncrementalLoad_Stage;Provider=SQLNCLI11.1;OLE DB Services=1;Integrated Security=SSPI;" />

</Connections>

<Packages>

<# foreach (var table in tables) { #>

<Package Name="IncrementalLoad_<#=table.Name#>" ConstraintMode="Linear" ProtectionLevel="EncryptSensitiveWithUserKey">

<Tasks>

<ExecuteSQL Name="Truncate stgUpdates_<#=table.Name#>" ConnectionName="SSISIncrementalLoad_Stage">

<DirectInput>Truncate Table stgUpdates_<#=table.Name#></DirectInput>

</ExecuteSQL>

<Dataflow Name="Load <#=table.Name#>">

<Transformations>

<OleDbSource Name="<#=table.Name#> Source" ConnectionName="SSISIncrementalLoad_Source">

<DirectInput>SELECT <#=table.GetColumnList()#> FROM <#=table.SchemaQualifiedName#></DirectInput>

</OleDbSource>

<Lookup Name="Correlate" OleDbConnectionName="SSISIncrementalLoad_Stage" NoMatchBehavior="RedirectRowsToNoMatchOutput">

<DirectInput>SELECT <#=table.GetColumnList()#> FROM dbo.<#=table.Name#></DirectInput>

<Inputs>

<# foreach (var keyColumn in table.Keys[0].Columns) { #>

<Column SourceColumn="<#=keyColumn.Column#>" TargetColumn="<#=keyColumn.Column#>" />

<# } #>

</Inputs>

<Outputs>

<# foreach (var col in table.Columns) { #>

<Column SourceColumn="<#=col#>" TargetColumn="Dest_<#=col#>" />

<# } #>

</Outputs>

</Lookup>

<ConditionalSplit Name="Filter">

<OutputPaths>

<OutputPath Name="Changed Rows">

<# string exp ="";

foreach (var colex in table.Columns) { exp += "(" + colex + " != Dest_" + colex + ") || "; } #>

<Expression><#=exp.Substring(0, exp.Length - 4)#></Expression>

</OutputPath>

</OutputPaths>

</ConditionalSplit>

<OleDbDestination Name="stgUpdates_<#=table.Name#>" ConnectionName="SSISIncrementalLoad_Stage">

<InputPath OutputPathName="Filter.Changed Rows" />

<ExternalTableOutput Table="dbo.stgUpdates_<#=table.Name#>" />

</OleDbDestination>

<OleDbDestination Name="<#=table.Name#> Destination" ConnectionName="SSISIncrementalLoad_Stage">

<InputPath OutputPathName="Correlate.NoMatch" />

<ExternalTableOutput Table="dbo.<#=table.Name#>" />

</OleDbDestination>

</Transformations>

</Dataflow>

<ExecuteSQL Name="Apply stgUpdates_<#=table.Name#>" ConnectionName="SSISIncrementalLoad_Stage">

<# string upd ="Update Dest Set ";

foreach (var colex in table.Columns.Where(column => !table.Keys[0].Columns.Select(keyColumn => keyColumn.Column).Contains(column))) {

upd = upd + "Dest." + colex + " = Upd." + colex + ",";

}

var updc = upd.Substring(0,upd.Length-1) + " From " + table.SchemaQualifiedName + " Dest Join [" + table.Schema.Name + "].[stgUpdates_" + table.Name + "] Upd On Upd." + table.Keys[0].Columns[0].Column + " = Dest." + table.Keys[0].Columns[0].Column;#>

<DirectInput><#=updc#></DirectInput>

</ExecuteSQL>

</Tasks>

</Package>

</Packages>

</Biml>Listing 10 – The Generic “Apply Staged Updates” Execute SQL Task

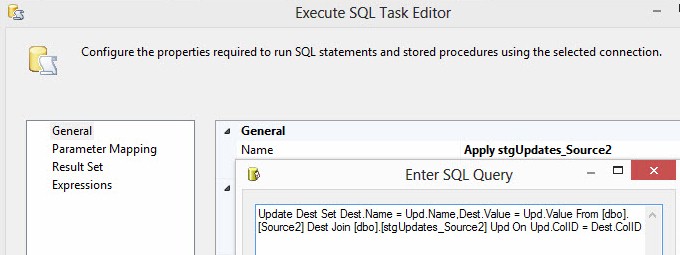

This is perhaps the most complex BimlScript in the GenerateStagingPackages.biml file:

<# string upd ="Update Dest Set ";

foreach (var colex in table.Columns.Where(column => !table.Keys[0].Columns.Select(keyColumn => keyColumn.Column).Contains(column))) {

upd = upd + "Dest." + colex + " = Upd." + colex + ",";

}

var updc = upd.Substring(0,upd.Length-1) + " From " + table.SchemaQualifiedName + " Dest Join [" + table.Schema.Name + "].[stgUpdates_" + table.Name + "] Upd On Upd." + table.Keys[0].Columns[0].Column + " = Dest." + table.Keys[0].Columns[0].Column;#>

<DirectInput><#=updc#></DirectInput>This chunk of code will build the SQL Statement of the Execute SQL Task shown in Figure 12:

Figure 12 – The SQL Statement Generated by the BimlScript for the Update Statement

Let’s break this down some. First, the upd string variable is declared and initialized with the value “Update Dest Set “. The upd variable will be used to dynamically construct the SQL Statement executed by the Execute SQL Task (shown in Figure 12). A foreach loop is started for each column in the table.Columns collection, modified with a Where method. Further, the Where method is modified by a fairly complex lambda expression: column => !table.Keys[0].Columns.Select(keyColumn => keyColumn.Column).Contains(column)). This lambda expression starts by isolating column ‘s where the column is not (!) in the collection of columns returned by the next part of the lambda expression. The next part of the lambda expression returns the list of columns we do not want; which is the key columns. We want the non-key columns so we can include them in the Set clause. The lambda expression to return the key columns selects the keyColumn from the keyColumn.Column collection contained in table.Keys[0]. The list of key columns is returned by table.Keys[0].Columns.Select(keyColumn => keyColumn.Column). The exclamation point (!) before this list tells the lambda expression we wish to filter these key columns from the list. Finally, the Contains(column) method is added to return the remaining columns, after the key-column-lambda filter is applied.

The BimlScript logic in this package is complex, but it’s the kind of complexity I find tolerable. It is complexity with a purpose, and the purpose is simplifying the life (and work) of the data integration developer.

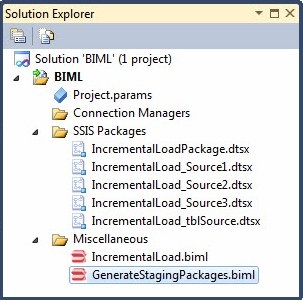

Time for a Test

In Solution Explorer, right-click the GenerateStagingPackages.biml file and click “Generate SSIS Packages”. If all goes as planned, your Solution Explorer window should appear similar to that shown in Figure 13:

Figure 13 – Four SSIS packages from one Biml file!

Conduct further testing by executing (and re-executing) each of the four SSIS Packages created by the Biml expansion. When I execute the SSIS package named “IncrementalLoad_tblSource.dtsx” I see results (shown in Figure 14) remarkably similar to those observed earlier (in Building an Incremental Load Package – Level 3, Figure 23).

Figure 14 –Dynamically-Built Incremental Load SSIS package

Testing will reveal the other SSIS packages perform similarly.

Conclusion

In this Biml Basics mini-series, we have taken a brief tour of some of the functionality of Business Intelligence Markup Language. We have demonstrated its usefulness as a domain-specific language for generating SSIS Design Patterns, focusing on the Incremental Load pattern. In the final example (this article), we demonstrated how Biml files can leverage BimlScript and use integrated .Net functionality to create a patterns-based approach to building four SSIS packages. Further, the packages are constructed using a tried and true data integration pattern (Incremental Load). The four packages were generated in a matter of seconds. I assure you, based on real-world experience, that Biml can produce hundreds of Incremental Load SSIS packages in a matter of minutes. This is game-changing technology. Generating hundreds of SSIS packages – even using templates and patterns – can easily consume data integration developer-months.