Browse Tab Cube, Query Out Large Blocks of Historical Data an Option to Restore History to a Table?

-

February 9, 2018 at 3:02 pm

Hello, with my question, I'm looking for a way to extract a block of historical data from a cube to a table, and this is an idea i want to know is feasible.

I have two very large cubes, one hold subs data back 13 months, it loads daily, and is a 2014 formatted Tabular cube, and all of it's data is stored in one month data partitions. Same story for a Revenue tab cube formatted in 2014 living in a 2016 formatted instance with 38 months, each month in a data partition.

Can I navigae to the cube in the SSAS instance, right click it, go to Browse, and write or design a query that can extract out certain columns across a year or multiple years of data and pipe that out to a table in a database?

The issue, for either of the cubes, I take in one or two months at a time to a trunc and load table, where it then gets processed into the appropriate data partition of the cube. So I do not have a table of historical data, the cube hold the historical data in an aggregated format. I need that historical data in table format (or flat file maybe) so a sister team can make use of it to stop back hauling the same several years of data every other day and hammering the system so.

I'm open to any and all ideas to efficiently accomplish this task.

Thank you,

JQ -

February 14, 2018 at 5:42 am

You should be able to extract the cube data to Excel, and use the Excel file as source for your table. An SSIS package can be developed to import the data.

That being said, you're just putting a band-aid on the real problem of not storing your historical data in the first place.

-

February 14, 2018 at 9:54 am

Martin Schoombee - Wednesday, February 14, 2018 5:42 AMYou should be able to extract the cube data to Excel, and use the Excel file as source for your table. An SSIS package can be developed to import the data.That being said, you're just putting a band-aid on the real problem of not storing your historical data in the first place.

Hi, Excel goes to 1 million records, I'm around 1.5+B rows. Thanks

-

February 14, 2018 at 10:27 am

quinn.jay - Wednesday, February 14, 2018 9:54 AMMartin Schoombee - Wednesday, February 14, 2018 5:42 AMYou should be able to extract the cube data to Excel, and use the Excel file as source for your table. An SSIS package can be developed to import the data.That being said, you're just putting a band-aid on the real problem of not storing your historical data in the first place.

Hi, Excel goes to 1 million records, I'm around 1.5+B rows. Thanks

Well now that would've been great information to have at the start, but in any event you can surely limit the data to fit into an Excel workbook...or us multiple workbooks etc.

-

February 14, 2018 at 11:23 am

Martin Schoombee - Wednesday, February 14, 2018 10:27 AMquinn.jay - Wednesday, February 14, 2018 9:54 AMMartin Schoombee - Wednesday, February 14, 2018 5:42 AMYou should be able to extract the cube data to Excel, and use the Excel file as source for your table. An SSIS package can be developed to import the data.That being said, you're just putting a band-aid on the real problem of not storing your historical data in the first place.

Hi, Excel goes to 1 million records, I'm around 1.5+B rows. Thanks

Well now that would've been great information to have at the start, but in any event you can surely limit the data to fit into an Excel workbook...or us multiple workbooks etc.

I think an approach I've not tried before, would be OPENQUERY linked to the SSAS Tab instance, and try to extract that large volume that way, then find a way to build deltas say monthly from that processes. I'll have to ping back in to mention if this was a feasible solution.

-

March 23, 2018 at 11:39 am

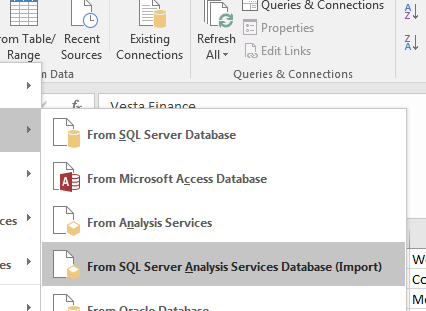

Power Query and any connector using M has the ability to connect to and import data from an SSAS db. SSIS 2017 should have the ability to add a connector that takes advantage of this, so your data source could be SSAS db directly.

Viewing 6 posts - 1 through 5 (of 5 total)

You must be logged in to reply to this topic. Login to reply