SSIS package assistance, importing files from folder

-

October 25, 2018 at 11:40 am

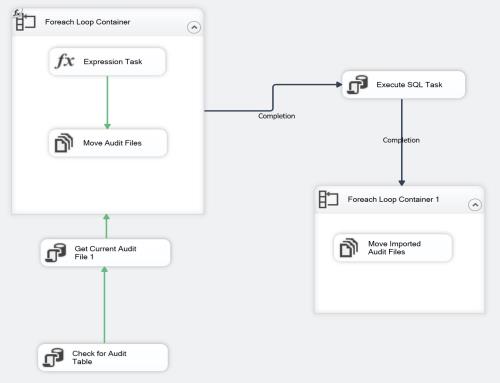

I'm trying to create a package to import SQL Audit files to a table on my server, to make searching them easier when I'm trying to track down something. I've got a package that works, mostly. I've attached an image (I know, not 100% helpful, I can also upload the project) to show the current steps.

Right now, it verifies the table exists that the records will be put in, creating it if needed.

Gets the current active audit file:select top 1 audit_file_path as curAuditFile from sys.dm_server_audit_status

Which is put into a variable (User::curAuditFile1). This is currently safe to use on my servers, as there's only one defined SQL Audit, down the road I'll probably add something so that if additional audits get added, it'll still find the correct one.

The Foreach loop reads in all the files in the audit location and puts that into a variable (User::varFilesToMove).

I think my problem is in the expression task, which is supposed to compare the current audit file to the audit file to be imported:@[User::curAuditFile1] != @[User::varFilesToMove]Other than throwing an error (when I add some breakpoints and test it on my lab, it fails on the move audit files / foreach loop, which matches what's reported) everything does what I want it to do. I'm sure there's some other things I can do to tighten up and improve possible errors elsewhere, but right now I just want to resolve the problem of trying to process the active file (which can't be moved to the folder that the Execute SQL Task hits to read in the audit files.)

(Some other notes: This is using the Project Deployment Model to SSISDB, not the Package Deployment model.)

-

October 25, 2018 at 11:56 am

I'm sure that there are good reasons for you choosing to do this the way you have, but I do have a few questions / comments.

- DDL operations (CREATE TABLE in this case) are best done in a software release and dictated by what is in your VCS.

- Why aren't you importing the data from the flat files using data flow tasks?

- Why not set up your process such that it works as follows:

- Loop round files in folder

- Jump over any locked files (if you are happy to include a script task in your code to determine whether or not a file is locked, I will post some code which you can use as a starting point)

- After successfully importing a file, archive it.

If you haven't even tried to resolve your issue, please don't expect the hard-working volunteers here to waste their time providing links to answers which you could easily have found yourself.

-

October 25, 2018 at 12:12 pm

Phil,

DDL: I plan to create the table in question before hand, but included the "check for existence and create" as a "oops" protection. The audits are getting imported into a "DBA Tools" database which is only for DBA use, so it won't impact other application databases.

Importing in a data flow: Um, because I was following what seemed to me the easy / right direction? Honestly, this is the first really involved SSIS package I've every whipped up, I didn't really think about doing it in a data flow task.As for what you suggested for the process, that's largely what I'm aiming for. The for each loop moves the inactive files to an import folder, so I can import the audit files with "insert into... select... from sys.fn_get_audit_file('s:\SQLAuditImport\*', NULL, NULL)" and not have to try to pass each individual filename to the fn_get_audit_file.

I have nothing against giving a script task a try, so if you don't mind shooting me the starting point, I'll see what I can do from there.

Of course, the slightly annoying part is, the reason I started working on this was to satisfy a requirement for audit files to be "centrally managed," which the folks in charge of such requirements when asked told me that "as long as the audit files get moved off the server, you're good," so our file-system backups satisfy the requirement. So this has somewhat devolved into a "can I do this" exercise with a dash of "it'll make searching the audits easier" for spice.

-

October 25, 2018 at 12:26 pm

You can check this tutorial i made on how you can import several text files to a table using SSIS

SSIS Basics: Bulk-Import various text files into a table

http://www.sqlservercentral.com/articles/Integration+Services+(SSIS)/177918/

for your particular case, you can think, or need to replace the bulk insert task with a dataflow task with the required conversions.

MCSE, MCSA SQL Server Database Developer/Administrator

-

October 25, 2018 at 12:51 pm

epivaral - Thursday, October 25, 2018 12:26 PMYou can check this tutorial i made on how you can import several text files to a table using SSISSSIS Basics: Bulk-Import various text files into a table

http://www.sqlservercentral.com/articles/Integration+Services+(SSIS)/177918/

for your particular case, you can think, or need to replace the bulk insert task with a dataflow task with the required conversions.

One thing to keep in mind, the audit files aren't text files, they're binary files written out by the SQL Audit, which is why I have to use the fn_get_audit_file to import them.

-

October 25, 2018 at 1:15 pm

jasona.work - Thursday, October 25, 2018 12:51 PMOne thing to keep in mind, the audit files aren't text files, they're binary files written out by the SQL Audit, which is why I have to use the fn_get_audit_file to import them.That makes all the difference – so stick with what you are doing.

If you haven't even tried to resolve your issue, please don't expect the hard-working volunteers here to waste their time providing links to answers which you could easily have found yourself.

-

October 25, 2018 at 1:19 pm

Phil Parkin - Thursday, October 25, 2018 1:15 PMjasona.work - Thursday, October 25, 2018 12:51 PMOne thing to keep in mind, the audit files aren't text files, they're binary files written out by the SQL Audit, which is why I have to use the fn_get_audit_file to import them.That makes all the difference – so stick with what you are doing.

Yeah, I took a look to see if I could do this in a data flow task, and it doesn't have a way to pull in the information.

-

October 25, 2018 at 1:22 pm

Here is a simple C# function which returns true if a file is locked, false otherwise.

private bool FileIsLocked(string filename, FileAccess file_access)

{

// Try to open the file with the indicated access.

try

{

FileStream fs =

new FileStream(filename, FileMode.Open, file_access);

fs.Close();

return false;

}

catch (IOException)

{

return true;

}

catch (Exception)

{

throw;

}

}So now that you know how to perform the test, you need to decide what to do if a failure is returned. For example:

- Move to next file?

- Wait a while and try again?

- Abort processing?

- Something else?

If you haven't even tried to resolve your issue, please don't expect the hard-working volunteers here to waste their time providing links to answers which you could easily have found yourself.

-

October 25, 2018 at 1:44 pm

Phil Parkin - Thursday, October 25, 2018 1:22 PMHere is a simple C# function which returns true if a file is locked, false otherwise.

private bool FileIsLocked(string filename, FileAccess file_access)

{

// Try to open the file with the indicated access.

try

{

FileStream fs =

new FileStream(filename, FileMode.Open, file_access);

fs.Close();

return false;

}

catch (IOException)

{

return true;

}

catch (Exception)

{

throw;

}

}- Move to next file?

- Wait a while and try again?

- Abort processing?

- Something else?

The goal is to work through all the audit files, except the currently active (and thus, locked) file.

So I just need to sort out how to get the package to keep working through files until there's none left except the active file.Thank you Phil, I'll putter with this and see what I can do.

-

October 25, 2018 at 1:57 pm

jasona.work - Thursday, October 25, 2018 1:44 PMPhil Parkin - Thursday, October 25, 2018 1:22 PMHere is a simple C# function which returns true if a file is locked, false otherwise.

private bool FileIsLocked(string filename, FileAccess file_access)

{

// Try to open the file with the indicated access.

try

{

FileStream fs =

new FileStream(filename, FileMode.Open, file_access);

fs.Close();

return false;

}

catch (IOException)

{

return true;

}

catch (Exception)

{

throw;

}

}- Move to next file?

- Wait a while and try again?

- Abort processing?

- Something else?

The goal is to work through all the audit files, except the currently active (and thus, locked) file.

So I just need to sort out how to get the package to keep working through files until there's none left except the active file.Thank you Phil, I'll putter with this and see what I can do.

Before you waste too much time, let me suggest how I would do it.

1) Create a package-scoped Boolean variable called IsLocked (or whatever)

2) Add the script task as your first step in the foreach loop. Set IsLocked to true or false, for the file currently being processed.

3) Connect the script task to your next task and then edit the properties of the precedence constraint. Change it to 'Expression and Constraint', value false, Expression: @[User::IsLocked]That should do it. If the file is not locked, processing continues as usual. If the file is locked, processing should move to the next file.

If you haven't even tried to resolve your issue, please don't expect the hard-working volunteers here to waste their time providing links to answers which you could easily have found yourself.

-

October 25, 2018 at 2:02 pm

Phil Parkin - Thursday, October 25, 2018 1:57 PMBefore you waste too much time, let me suggest how I would do it.

1) Create a package-scoped Boolean variable called IsLocked (or whatever)

2) Add the script task as your first step in the foreach loop. Set IsLocked to true or false, for the file currently being processed.

3) Connect the script task to your next task and then edit the properties of the precedence constraint. Change it to 'Expression and Constraint', value false, Expression: @[User::IsLocked]That should do it. If the file is not locked, processing continues as usual. If the file is locked, processing should move to the next file.

I suspect that will work much better (and easier) than my thought of having the package continue on if the script task returned false...

Once again Phil, thank you!

Viewing 11 posts - 1 through 10 (of 10 total)

You must be logged in to reply to this topic. Login to reply