SQL Server stored procedures fast in SQL but slow when called by ASP.NET

-

September 23, 2018 at 4:37 am

Query inside the stored procedure giving result fast (twenty seven thousand records in 2 seconds) but when i called the procedure from asp.net(web application),it takes one minute to give the result.So what will be the reason for this?.How to fix it?.

-

September 23, 2018 at 9:48 am

please add "Set arbithabort on" before execute Stored PRocedure and test it.

-

September 23, 2018 at 10:30 am

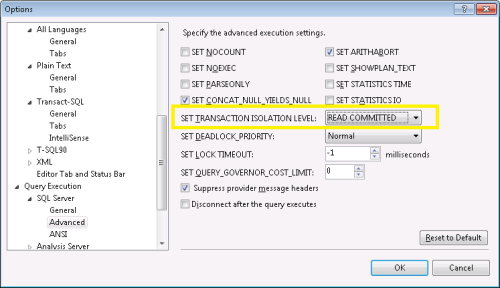

Improperly parameterized queries that cause recompiles, network latency, the fact that most connections made from the front end use different settings than what SSMS uses, overly busy or poorly configured web server(s), having M.A.R.S. enable in the connection strings, etc, etc... there are a whole lot of reasons.

The other thing that I'd like to know is who is going to use 27 Thousand rows and for what?

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 23, 2018 at 11:21 pm

Jeff Moden - Sunday, September 23, 2018 10:30 AMImproperly parameterized queries that cause recompiles, network latency, the fact that most connections made from the front end use different settings than what SSMS uses, overly busy or poorly configured web server(s), having M.A.R.S. enable in the connection strings, etc, etc... there are a whole lot of reasons.The other thing that I'd like to know is who is going to use 27 Thousand rows and for what?

we tested without filter condition so it picks 27 thousand records from db.But in front end it displays 25 to 30 records only by using some filter condition in the query.Whether i have to set

"Multiple Active Result Sets = true" in my connection string? -

September 24, 2018 at 12:16 am

jkramprakash - Sunday, September 23, 2018 11:21 PMJeff Moden - Sunday, September 23, 2018 10:30 AMImproperly parameterized queries that cause recompiles, network latency, the fact that most connections made from the front end use different settings than what SSMS uses, overly busy or poorly configured web server(s), having M.A.R.S. enable in the connection strings, etc, etc... there are a whole lot of reasons.The other thing that I'd like to know is who is going to use 27 Thousand rows and for what?

we tested without filter condition so it picks 27 thousand records from db.But in front end it displays 25 to 30 records only by using some filter condition in the query.Whether i have to set

"Multiple Active Result Sets = true" in my connection string?Please add this Set arbithabort on before execute your sp.

Because in ssms this option by default is on and in applications and connections is off. when you execute your sp in ssms , the new plan was generate for your sp with new values but from applications use cached plans. if with this option, your problem solved. you must to investigate for parameter sniffing . -

September 24, 2018 at 4:16 am

Hamid-Sadeghian - Monday, September 24, 2018 12:16 AMjkramprakash - Sunday, September 23, 2018 11:21 PMJeff Moden - Sunday, September 23, 2018 10:30 AMImproperly parameterized queries that cause recompiles, network latency, the fact that most connections made from the front end use different settings than what SSMS uses, overly busy or poorly configured web server(s), having M.A.R.S. enable in the connection strings, etc, etc... there are a whole lot of reasons.The other thing that I'd like to know is who is going to use 27 Thousand rows and for what?

we tested without filter condition so it picks 27 thousand records from db.But in front end it displays 25 to 30 records only by using some filter condition in the query.Whether i have to set

"Multiple Active Result Sets = true" in my connection string?Please add this Set arbithabort on before execute your sp.

Because in ssms this option by default is on and in applications and connections is off. when you execute your sp in ssms , the new plan was generate for your sp with new values but from applications use cached plans. if with this option, your problem solved. you must to investigate for parameter sniffing .ok.i will add and test it.

-

September 24, 2018 at 6:31 am

jkramprakash - Sunday, September 23, 2018 11:21 PMJeff Moden - Sunday, September 23, 2018 10:30 AMImproperly parameterized queries that cause recompiles, network latency, the fact that most connections made from the front end use different settings than what SSMS uses, overly busy or poorly configured web server(s), having M.A.R.S. enable in the connection strings, etc, etc... there are a whole lot of reasons.The other thing that I'd like to know is who is going to use 27 Thousand rows and for what?

we tested without filter condition so it picks 27 thousand records from db.But in front end it displays 25 to 30 records only by using some filter condition in the query.Whether i have to set

"Multiple Active Result Sets = true" in my connection string?That's the setting but it should generally be set to "false". There are only very rare conditions where it will advantageous to turn it on and none of us are ever likely to run into those conditions. Do not set it to true and, if you can, make sure that all of your connection strings have it set to OFF.

I also agree with jkramprakash ... Set arbithabort should be turned on. You CAN actually do that at the server level but you need to test to ensure that it doesn't break some other application.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 24, 2018 at 6:44 am

jkramprakash - Sunday, September 23, 2018 4:37 AMQuery inside the stored procedure giving result fast (twenty seven thousand records in 2 seconds) but when i called the procedure from asp.net(web application),it takes one minute to give the result.So what will be the reason for this?.How to fix it?.I had a similar problem with a query that was reading uncommitted rows in SSMS but not when called from an application. So just check that you are reading committed rows in SSMS.

-

September 24, 2018 at 7:53 am

don't forget the basics: make sure you've added SET NOCOUNT ON at the top of your procedure, as well as commenting out any PRINT statements.

those add some added overhead as they send messages back to the application, which are most likely ignored anyway.from there i would start checking to make sure that all queries are SARG-able(meaning the SearchArgument can use an index...

no LIKE statements,

no functions on column names,

no OR statements,

no implicit conversions on data types.Lowell

--help us help you! If you post a question, make sure you include a CREATE TABLE... statement and INSERT INTO... statement into that table to give the volunteers here representative data. with your description of the problem, we can provide a tested, verifiable solution to your question! asking the question the right way gets you a tested answer the fastest way possible! -

September 26, 2018 at 4:50 am

i made some changes in the query.actually i created clustered index for three columns for example

(column1,column2,column3) but i not used column1 in my where condition i used column2 and column3 only.

Now i used column1 also in my where condition(example-> where column1 is not null).Now it improves

performance slightly and i am checking from .net side also.Actually if we skip the clustered index first column

in the where condition will it impact performance? -

September 26, 2018 at 5:20 am

jkramprakash - Wednesday, September 26, 2018 4:50 AMi made some changes in the query.actually i created clustered index for three columns for example

(column1,column2,column3) but i not used column1 in my where condition i used column2 and column3 only.

Now i used column1 also in my where condition(example-> where column1 is not null).Now it improves

performance slightly and i am checking from .net side also.Actually if we skip the clustered index first column

in the where condition will it impact performance?orders of index key is important .

can you test with " set arbithabort on" . I want to know that what is the result.

-

September 26, 2018 at 5:51 am

No.I am not tested I am checking in db side now and i will test and tell u.

-

September 26, 2018 at 5:56 am

jkramprakash - Wednesday, September 26, 2018 4:50 AMi made some changes in the query.actually i created clustered index for three columns for example

(column1,column2,column3) but i not used column1 in my where condition i used column2 and column3 only.

Now i used column1 also in my where condition(example-> where column1 is not null).Now it improves

performance slightly and i am checking from .net side also.Actually if we skip the clustered index first column

in the where condition will it impact performance?Then the clustered index isn't correct for this query... period.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

September 26, 2018 at 6:12 am

if i use the same order of clustered index,i think it will improve.if i skip the first column,it decrease the performance.i have to use the existing index only,I cannot create new

index.so shall i include first column? -

October 4, 2018 at 6:25 am

Hamid-Sadeghian - Wednesday, September 26, 2018 5:20 AMjkramprakash - Wednesday, September 26, 2018 4:50 AMi made some changes in the query.actually i created clustered index for three columns for example

(column1,column2,column3) but i not used column1 in my where condition i used column2 and column3 only.

Now i used column1 also in my where condition(example-> where column1 is not null).Now it improves

performance slightly and i am checking from .net side also.Actually if we skip the clustered index first column

in the where condition will it impact performance?orders of index key is important .

can you test with " set arbithabort on" . I want to know that what is the result.

Arranged the columns in where conditions as per column order which is used in indexes and also used "set arbithabort on"

.net,now it takes 8 sec to give results.Thank You.

Viewing 15 posts - 1 through 15 (of 17 total)

You must be logged in to reply to this topic. Login to reply