Rebuilding Indexes

-

August 21, 2018 at 11:38 am

How do you determine how often you need to rebuild your indexes?

-

August 22, 2018 at 5:31 am

And therein lies a philosophical debate.

After reading a lot of what Jeff Moden has to say on fragmentation, some testing, some research, I lean heavily towards setting an appropriate fill factor (50-60%) and then letting my indexes fragment naturally. Done.

However, you may also want to examine your queries. If they are predominantly smalls scans using an index (AKA a seek), fragmentation is not hurting your queries. If, on the other hand, they are large scans (AKA scans), you may be seeing some pain from the fact that you are accessing more pages that is strictly necessary if you were to defragment the indexes.

Once you've determined that you have pain, the way to set up a defragmentation automation is to observe the fragmentation. How quickly does it go above your pain threshold? Does it take a day or a week? There's your answer. If it takes a day, you need to defragment daily. If it takes a week, you need to defragment weekly. You may find that different indexes need a different schedule. Or, you may just run a daily schedule, but just look for a level of fragmentation. That's a very common approach. Pick a schedule and just check all the indexes for fragmentation and fix the ones beyond a certain percentage.

Three additional comments. First, don't bother with reorg. It generally does very little for fragmentation, but costs about the same as rebuild. Second, be aware that a rebuild of an index updates the statistics using a full scan, so you shouldn't then run a sampled statistics update after rebuilding the index. Third, different rules entirely for columnstore indexes since they use the defrag process to clean up the delta store and properly compress the data, I'd regularly defragment columnstore indexes.

"The credit belongs to the man who is actually in the arena, whose face is marred by dust and sweat and blood"

- Theodore RooseveltAuthor of:

SQL Server Execution Plans

SQL Server Query Performance Tuning -

August 22, 2018 at 8:27 am

yoyodynedata - Tuesday, August 21, 2018 11:38 AMHow do you determine how often you need to rebuild your indexes?You'll end up getting a lot of advice from people to Reorg at 10% fragmentation and Rebuild at 30% fragmentation. I don't have the time to write a proper full reply for this post but if you follow those recommendations without understanding how the index is getting fragmented and assigning the correct Fill Factor, it's actually better to not defrag the index at all. The only time logical fragmentation causes a problem is when you read data from disk into memory. Once the data is in memory, logical fragmentation does NOT cause any performance issues.

Physical Fragmentation (Page Densisty or "avg percent of page fullness") matters only because pages appear in memory the same as they are on disk. If your page density is only 50% full, you're wasting half your memory.

For "append only" indexes, set the Fill Factor to 100% and REBUILD if the fragmentation exceeds 1.0% (not a mistake... one percent, not 10 percent). The trouble with such indexes is "expAnsive" updates where the initial values in VARCHAR/NVARCHAR columns get larger. Those cause massive logical and physical fragmentation. There's no sense in defragging those unless you fix the "expAnsive" update problem.

Evenly distributed inserts (like those based on GUID keys) are actually a marvel of efficiency (contrary to general consensus). I've recently done a couple of hundred hours of testing and if you assign an 80% Fill Factor to such indexes, you can go for weeks or even months (depending on the insert rate and width of the rows) with absolutely ZERO page splits (even the good ones cost but won't happen here) which also means almost ZERO fragmentation for the same amount of time. Rebuild those when they hit 1% fragmentation.

Any index that get's below 70% page density deserves a good hard look no matter what your settings are. And remember that when the page density falls below the Fill Factor, it's because of bad page splits and needs a second look for "expAnsive" updates, Fill Factor, etc.

A lot of this will appear to be chicken-feed to a lot of people because of the small size of non-clustered indexes. That's also the definition of "Death by a Thousand Cuts".

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

August 26, 2018 at 2:18 pm

Jeff Moden - Wednesday, August 22, 2018 8:27 AMYou'll end up getting a lot of advice from people to Reorg at 10% fragmentation and Rebuild at 30% fragmentation. I don't have the time to write a proper full reply for this post but if you follow those recommendations without understanding how the index is getting fragmented and assigning the correct Fill Factor, it's actually better to not defrag the index at all. The only time logical fragmentation causes a problem is when you read data from disk into memory. Once the data is in memory, logical fragmentation does NOT cause any performance issues.

Physical Fragmentation (Page Densisty or "avg percent of page fullness") matters only because pages appear in memory the same as they are on disk. If your page density is only 50% full, you're wasting half your memory.

For "append only" indexes, set the Fill Factor to 100% and REBUILD if the fragmentation exceeds 1.0% (not a mistake... one percent, not 10 percent). The trouble with such indexes is "expAnsive" updates where the initial values in VARCHAR/NVARCHAR columns get larger. Those cause massive logical and physical fragmentation. There's no sense in defragging those unless you fix the "expAnsive" update problem.

Evenly distributed inserts (like those based on GUID keys) are actually a marvel of efficiency (contrary to general consensus). I've recently done a couple of hundred hours of testing and if you assign an 80% Fill Factor to such indexes, you can go for weeks or even months (depending on the insert rate and width of the rows) with absolutely ZERO page splits (even the good ones cost but won't happen here) which also means almost ZERO fragmentation for the same amount of time. Rebuild those when they hit 1% fragmentation.

Any index that get's below 70% page density deserves a good hard look no matter what your settings are. And remember that when the page density falls below the Fill Factor, it's because of bad page splits and needs a second look for "expAnsive" updates, Fill Factor, etc.

A lot of this will appear to be chicken-feed to a lot of people because of the small size of non-clustered indexes. That's also the definition of "Death by a Thousand Cuts".

There's a nasty tendency for developers who write in brain-dead languages like C++ and Java to build code that ensures a serious exPansive problem: they want to treat a what ought to be a concatenation of rows resulting from a manyway join as a single column.

Anyway, the conclusion I draw from this is that developers and software designers and software architects who don't undertand databases should be forbidden from writing any database queries ever. unless they are willing and able to learn. And Database Administrators who don't understand it should be educated (if they are able to learn) or fired (if they think they already know it all).

I've known as many of the latter (DBA) incompetents as of the former (develpers and SAs) - which I find quite frightening, as wrong answers can be created just as can performace problems, and wrong answers can have unpleasant consequences. when the powers that be believe them (which happens far too often).

But I guess I knew that anyway, because of the other problems such ignoramuses cause.

Tom

-

August 26, 2018 at 3:22 pm

TomThomson - Sunday, August 26, 2018 2:18 PMThere's a nasty tendency for developers who write in brain-dead languages like C++ and Java to build code that ensures a serious exPansive problem: they want to treat a what ought to be a concatenation of rows resulting from a manyway join as a single column.Anyway, the conclusion I draw from this is that developers and software designers and software architects who don't undertand databases should be forbidden from writing any database queries ever. unless they are willing and able to learn. And Database Administrators who don't understand it should be educated (if they are able to learn) or fired (if they think they already know it all).

I've known as many of the latter (DBA) incompetents as of the former (develpers and SAs) - which I find quite frightening, as wrong answers can be created just as can performace problems, and wrong answers can have unpleasant consequences. when the powers that be believe them (which happens far too often).

But I guess I knew that anyway, because of the other problems such ignoramuses cause.

Heh... Oh, yeah... the now infamous "GETDATE()" question is proof positive of all that.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

November 28, 2018 at 2:39 am

You should rebuild indexes often enough so that production is not detrimentally affected by index degradation. You can You should reorganize your indices as soon as index fragmentation reaches more than 5 or sometimes 10% and you should rebuild them completely when it goes beyond 30%. Use dbcc showcontig([Table]) to check the fragmentation level of indexes.

-

November 28, 2018 at 9:16 am

Johnson Welch - Wednesday, November 28, 2018 2:39 AMYou should rebuild indexes often enough so that production is not detrimentally affected by index degradation. You can You should reorganize your indices as soon as index fragmentation reaches more than 5 or sometimes 10% and you should rebuild them completely when it goes beyond 30%. Use dbcc showcontig([Table]) to check the fragmentation level of indexes.DBCC ShowContig is quite old and hasn't been the recommended method for checking index fragmentation since 2005 came out. Instead, you should use sys.dm_db_index_physical_stats(). Here's the link to the MS-Doc article on that function along with a whole lot of other information.

https://docs.microsoft.com/en-us/sql/relational-databases/system-dynamic-management-views/sys-dm-db-index-physical-stats-transact-sql?view=sql-server-2017I've also been doing a deep study with a whole lot of tests on index fragmentation and what to do about it. It all started in January of 2016 when I found that the standard recommendations for defragging indexes were the actual source of some serious blocking issues the day after such index maintenance. If you have a look at the article I linked to, it says right in the article...

Fragmentation alone is not a sufficient reason to reorganize or rebuild an index. The main effect of fragmentation is that it slows down page read-ahead throughput during index scans.

I've been doing some intense testing for the last year (and now have a 2 hour presentation on the subject) and I have to tell you that if you don't actually know what the index is doing and you defrag it (especially using REORGANIZE) you can cause both massive blocking, decrease performance because of the massive increase in bad page splits (which also beats the hell out of your transaction log no matter which Recovery Model you're using), and actually perpetuate the need for index maintenance.And if you think that REORGANIZE is "less resource intensive" in all cases, you've got another thing coming. I cloned one of my databases on another system and then did a REORGANIZE on a 146GB Clustered Index that had "only" 12% Logical Fragmentation and a REBUILD of the same index in the Bulk_Logged mode on the cloned database. Here are the results.

REORGANIZE:

Duration: 1 Hour and 21 Minutes.

Log File: Grew from ~21GB to 227GB with the expected effects of such a large amount of log file activity on not only disk space but on backups and size on the eventual tapes they made it to.

Fragmentation % after. 0.3%REBUILD (Bulk_Logged Recovery Model)

Duration: 12 MINUTES and 44 Seconds

Log File: Grew from ~21GB to 37GB (that's a hell of a lot less than the 1/4TB it grew to with REORGANIZE)

Fragmentation % after. 0.001%Remember that the % of logical fragmentation is a percent of 146GB worth of pages. The REORGANIZE left a lot of partially full "straggler" pages with anywhere from just 10% page fullness up to what the Fill Factor was. Consider that "just" 0.3 % of a 146GB index is more than 5 million pages that still "aren't quite right" and on an ever-increasing index suffering only recent "ExpAnsive Updates" at the end of the index, that means that most of the out of place pages are going to be in the area of the index most frequently read.

And, if you haven't actually taken the time to apply the correct Fill Factor (which may be all for naught in the face of "ExpAnsive Updates"), then you could be wasting a shedload of not only disk space, but memory, as well. For example, that 146GB Ci I've been talking about? Yeah.... even though it should be an "append only" (has an ever increasing key), "ExpAnsive Updates" kill that notion and it fragments like the tip of an exploded tip broom straw and only the "tip" of the index is affected because, once a row is inserted and then updated, it is never changed again. A lower Fill Factor of even just 90% would simply waste 16GB and a Fill Factor of 80% would waste 36GB.

And that brings us back to the quote I cited that states that Logical Fragmentation should NOT be the only reason to drag an index. It even says that the only thing that "Logical Fragmentation" really only affects performance of Read Aheads. To continue the rest of the quote from that same article/paragraph...

The main effect of fragmentation is that it slows down page read-ahead throughput during index scans. This causes slower response times. If the query workload on a fragmented table or index does not involve scans, because the workload is primarily singleton lookups, removing fragmentation may have no effect.

What that also means is that once you have data in memory, the Logical Fragmentation actually doesn't matter. I've also tested it for scans once in memory. It doesn't matter there either. It only matters if you have to read more than 1 page from disk to memory. What DOES really matter once in memory is "Page Density" (also known as percent of page fullness). Oddly enough, fixing "Page Density" also fixes Logical Fragmentation, as well.Also consider what I call an "Ever Increasing Siloed" index. This is one of those puppies that continues to have nearly 100% page density (which is good) even with zero index maintenance but has 99% Logical Fragmentation. It's a strange bird but what would you do? Decrease the Fill Factor? Again, that would only waste disk space and memory and REORGANIZEing the index on a regular basis would only have the same effect it did on my 146GB example.

The bottom line is that, unless you have a deep and actual understanding of the very "DNA" of a large index AND the Insert/Update patterns that affect the index, it's sometimes better to leave it alone rather than make a mess of it with unqualified index maintenance that may actually be the cause of more performance issues than it fixes. And, no... that's not my final recommendation. My final recommendation is the same as what should be done with poorly performing code. Find out the reasons why and fix it!

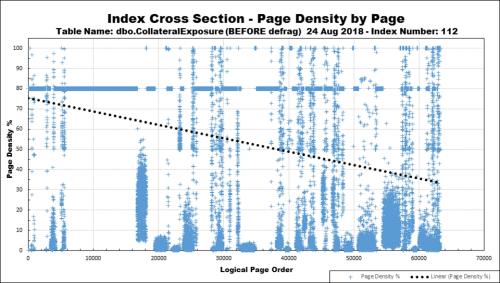

As an example, here's a 30GB non-clustered index just a week after a REBUILD using an 80% Fill Factor. The Logical Order of pages is from left to right and the page density is from bottom (0%) to top (100% full). I have to tell you that REORGANIZING it at the supposed "best practice" levels don't even come close to doing anything great for this index (and neither does REBUILD, in this case). The underlying table needs to be fixed and so does some of the code doing inserts/updates.

Here's one of my favorites. It's an index that has only been REORGANIZED for a year because of the way the fragmentation occurs never sending it to the REBUILD over 30% mode. The 80% Fill Factor setting isn't doing squat for it.

If you don't actually know what the index is doing nor the effects of the code that's doing the inserts/updates, you could be causing more harm than not with your index maintenance. Heh... which pill will you choose? The Red one or the Blue one? 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 7 posts - 1 through 6 (of 6 total)

You must be logged in to reply to this topic. Login to reply