How to import json from http request into SQL table by using SSIS 2008

-

October 29, 2014 at 10:24 am

Hello,

I know parsing json data has been discussed lots but what I want is probably a little simpler or different:

I have a URL where I can open and get the Json data.

I need to parse and load the Json data into a SQL table, and I want to use it in SSIS, not using C# or VB.NET coding.

I am on Visual Studio.NET 2008 and SQL 2008R2

Any help is appreciated in advance.

-

October 29, 2014 at 11:38 am

The easiest way is to paste the URL into json-csv.com. This will generate a CSV spreadsheet. Then you can either copy the CSV text from a spreadsheet and paste into the table or use the import wizard.

-

November 19, 2014 at 12:23 pm

did you find a solution?

-

November 19, 2014 at 1:37 pm

ricva (11/19/2014)

did you find a solution?didn't look into it as I've been drag away by other project, if you have example or any finding, please post it here, thanks

-

November 19, 2014 at 5:00 pm

I believe I can.

Do you have an example of the json data and the table you want to put it into?

Would you call a stored proc from SSIS to insert?

How many rows would be inserted at one time?

Thanks

Ric

-

November 19, 2014 at 6:46 pm

ricva (11/19/2014)

I believe I can.Do you have an example of the json data and the table you want to put it into?

Would you call a stored proc from SSIS to insert?

How many rows would be inserted at one time?

Thanks

Ric

Thank you Ric for your kind help.

The json data was from an internal website that contains confidential data and I am not allowed to show it here, but I can adapt your sample to use internally, there are 87 fields in one record and over 10k records.

Can you please just use some sample json data for the education purpose?

Thanks

-

November 19, 2014 at 6:48 pm

Joe Bloggs-301800 (10/29/2014)

The easiest way is to paste the URL into json-csv.com. This will generate a CSV spreadsheet. Then you can either copy the CSV text from a spreadsheet and paste into the table or use the import wizard.Thanks, but sorry this easy solution doesn't work here because the data can not be used outside of the DMZ

-

November 19, 2014 at 7:01 pm

Does this help?

-

November 19, 2014 at 8:04 pm

Thanks Luis,

The article might be helpful to someone, but the author is really making things way too complex, it won't pass the code review in my work place.

-

November 25, 2014 at 9:36 am

Is this a onetime load? I did a test with 10k rows and 90+ columns and was successful loading sample data. However, because it has to process row by row the load is very slow.

The method would use SSIS to load the data into a staging table, and then call a stored procedure to load into your final table.

How familiar are you with writing stored procedures?

Ric

-

December 18, 2015 at 4:43 am

Hello..

I am sharing a link where you will get exact answer to load Json files to SQL server[/url].Hope it will be helpful for you. To see Detail follow this link : http://binaryworld.net/blogs/consuming-json-data-in-sql-server-and-ssis-convert-json-toxml/

-

December 19, 2015 at 4:59 am

Hello...

You want to load Json files to SQL server[/url], Right ??

So visit on : http://binaryworld.net/blogs/consuming-json-data-in-sql-server-and-ssis-convert-json-toxml/

Hope it will be helpful for You.:-)

-

January 11, 2017 at 5:14 pm

There's a bug importing csv files, and it's not flexible.

I can convert xls to json with nodejs and 2 lines of code. Now if i could import json to sql, that would be ideal.

I guess I can make a driver, but I am sure it's been done.

-

January 12, 2017 at 4:10 pm

Since you are pulling from a URL, have you tried studying the Web Services task in SSIS 2008? I have not touched it in a while so don't want to provide information where my memory is sketchy but advise you read up on it if you haven't. It may just be what you need.

----------------------------------------------------

-

January 13, 2017 at 9:11 pm

I import JSON via HTTPS requests in 2008 R2 using Python.

Here is a really rough example of like 9 lines of code to show how easy it is to iterate over JSON to create a CSV export for SQL Server to ingest.

Couple of things here if you're interested in this option:

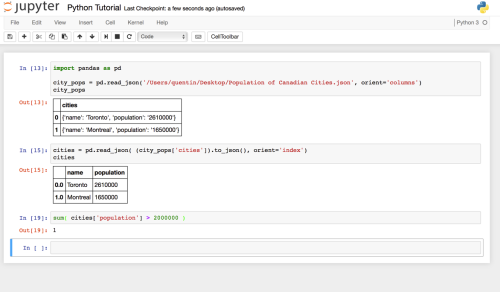

Python is installable on Windows. I use 2.7 on most Window servers. Pandas is a module with Python. It allows you to create data frames and data series in Python. For a SQL guy, it's like creating SQL tables you can query (reference: Pandas comparison to SQL). Requests is another module with Python that allows you to formulate those HTTPS requests like you're doing in .NET.

Make a request, jam it into a Pandas data frame, and then do your normal ETL process like you would in SSIS. You can easily interpret JSON in Python and export the final results from Pandas to CSV like in the example. It's insanely easy and no reason for you do to more than you need with .NET.

import pandas as pd

import requests# Your get request of the https link

someRequest = requests.get('https://somelink/')# Your blank data frame, which allows you to create a object like a SQL table

# You can clean, query and do whatever your like to data frames just like SQL tables

# This is where you data will live and it easily exports to CSV, Excel etc

df = pd.DataFrame(index=None, columns=['Date', 'ID', 'Revenue'])# List variable to store the records you will jam into your data frame

record = []# Iterate over the JSON output

for items in someRequest.json().iteritems():

# Add each field of data to a list

record = [items['date'], items['ID'], items['Revenue']]

# Add the record to the end of the data frame

df.loc[len(df)] = record# Export the data frame with each record to CSV.

df.to_csv('./files/mydata.csv', sep=',')Pandas also supports loading JSON straight into a data frame in some cases. Other cases you may have to conform the output depending on the JSON output. This is why I typically just iterate over JSON output using a forloop. I like going record-by-record so it allows me to perform ETL per record as it's going into a list (array).

import Pandas as pddf = pd.read_json('https://api.github.com/repos/pydata/pandas/issues?per_page=5')

Another example:

P.S

The above script obviously iterates over JSON and outputs to CSV. Nothing is stopping you from using SQL Alchemy in Python where you connect directly to your SQL Server and writing the Pandas data frame right to a table. I just choose the CSV option because it's better for ETL to do everything on disk rather than loading everything on top of SQL Server's shoulders. Good ETL is augmented across multiple machines and spindles regardless of the chosen languages.

Viewing 15 posts - 1 through 15 (of 16 total)

You must be logged in to reply to this topic. Login to reply